z-Series Modernization and Migration

Under the best of circumstances, mainframe systems are complex, expensive, and difficult to scale. In today’s world, applications written for mainframe legacy systems also present significant operational challenges to customers, compounded by the dwindling pool of engineers who specialize in these outdated technologies. Many organizations want to migrate their legacy applications to the cloud, but to do so they need to go through a lengthy migration process that is made more challenging by the complexity of mainframe applications.

Our Advice

Critical Insight

The most common tactic is for the organization to better realize its z/Series options and adopt a strategy built on complexity and workload understanding. To make the evident, obvious: the options here for the non-commodity are not as broad as with commodity server platforms, and the mainframe is arguably the most widely used and complex non-commodity platform on the market.

Impact and Result

This research will help you:

- Evaluate the future viability of this platform.

- Assess the fit and purpose, and determine TCO.

- Develop strategies for overcoming potential challenges.

- Determine the future of this platform for your organization.

z/Series Modernization and Migration Research & Tools

Besides the small introduction, subscribers and consulting clients within this management domain have access to:

1. z/Series Modernization and Migration Guide – A brief deck that outlines key migration options and considerations for the z/Series platform.

This blueprint will help you assess the fit, purpose, and price; develop strategies for overcoming potential challenges; and determine the future of z/Series for your organization.

- z/Series Modernization and Migration Storyboard

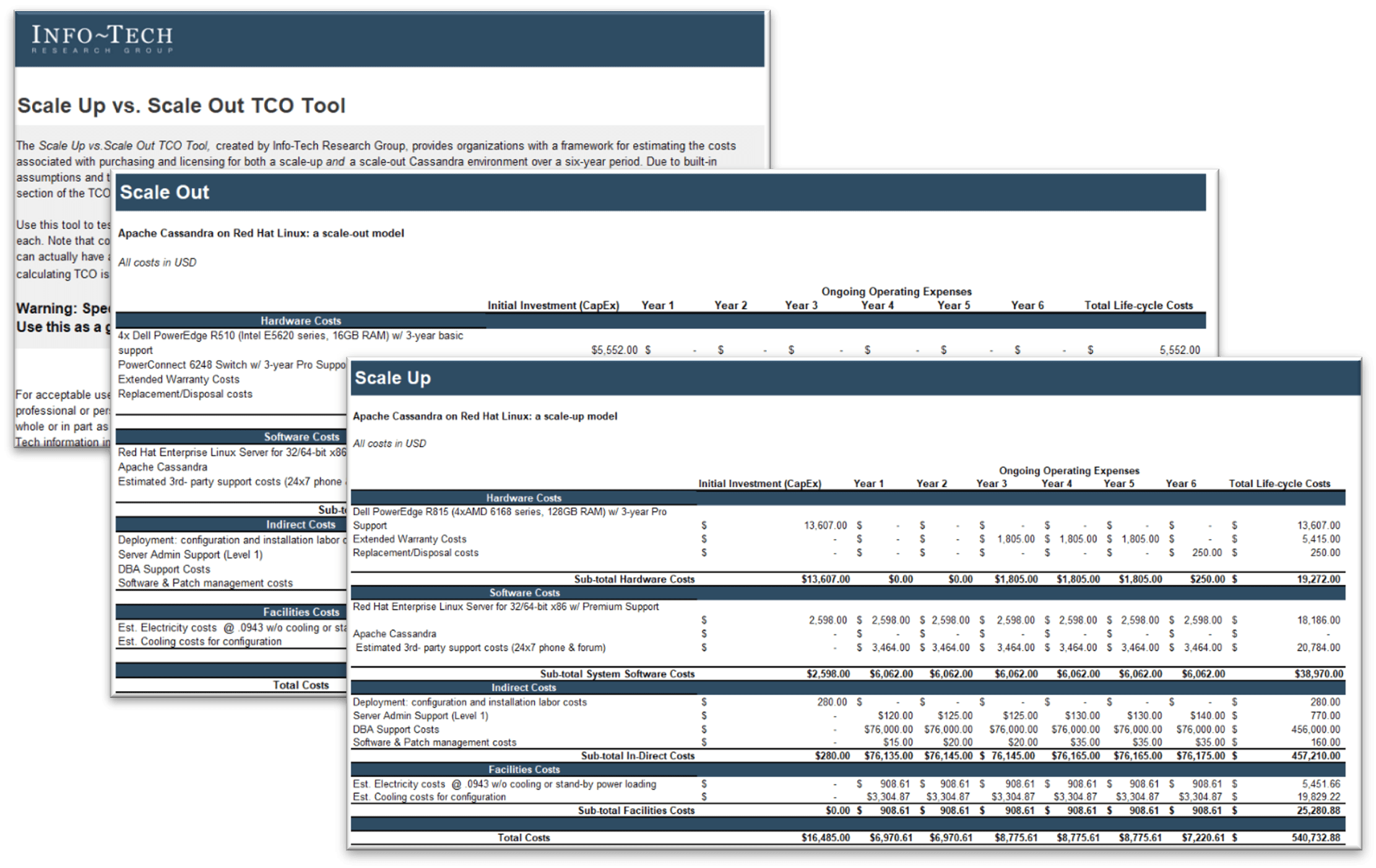

2. Scale Up vs. Scale Out TCO Tool – A tool that provides organizations with a framework for TCO.

Use this tool to play with the pre-populated values or insert your own amounts to compare possible database decisions, and determine the TCO of each. Note that common assumptions can often be false; for example, open-source Cassandra running on many inexpensive commodity servers can actually have a higher TCO over six years than a Cassandra environment running on a larger single expensive piece of hardware. Therefore, calculating TCO is an essential part of the database decision process.

- Scale Up vs. Scale Out TCO Tool
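As a rough illustration of the kind of comparison the tool supports, the sketch below totals one-time and recurring costs over a fixed horizon for a scale-up and a scale-out option. The cost categories, class and function names, and every dollar figure are hypothetical placeholders, not values taken from the tool. Substitute your own contract and maintenance terms.

```python
from dataclasses import dataclass


@dataclass
class PlatformCosts:
    """Hypothetical cost inputs for one deployment option (illustrative only)."""
    name: str
    units: int                        # number of servers or frames purchased
    hardware_per_unit: float          # one-time purchase cost per unit
    licensing_per_unit_year: float
    maintenance_per_unit_year: float
    staff_per_year: float             # operations staffing for the whole option
    facilities_per_unit_year: float   # power, cooling, floor space


def total_cost_of_ownership(p: PlatformCosts, years: int) -> float:
    """Sum one-time and recurring costs over the planning horizon."""
    one_time = p.units * p.hardware_per_unit
    recurring_per_year = (
        p.units * (p.licensing_per_unit_year
                   + p.maintenance_per_unit_year
                   + p.facilities_per_unit_year)
        + p.staff_per_year
    )
    return one_time + years * recurring_per_year


# Placeholder figures, chosen only to show that many cheap nodes can cost more.
scale_up = PlatformCosts("scale up", 1, 900_000, 60_000, 40_000, 150_000, 20_000)
scale_out = PlatformCosts("scale out", 40, 8_000, 4_000, 1_500, 300_000, 2_500)

for option in (scale_up, scale_out):
    print(f"{option.name}: {total_cost_of_ownership(option, years=6):,.0f}")
```

With these made-up inputs the scale-out option comes out more expensive over six years, mainly because per-node licensing and staffing are multiplied across 40 units. Different inputs can reverse the result, which is why the calculation is worth doing.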

Further reading

z/Series Modernization and Migration

The biggest migration is yet to come.

Executive Summary

Info-Tech Insight

“A number of market conditions have coalesced in a way that is increasingly driving existing mainframe customers to consider running their application workloads on alternative platforms. In 2020, the World Economic Forum noted that 42% of core skills required to perform existing jobs are expected to change by 2022, and that more than 1 billion workers need to be reskilled by 2030.” – Dale Vecchio

Your Challenge

It seems like anytime there’s a new CIO who is not from the mainframe world there is immediate pressure to get off this platform. However, just as there is a high financial commitment required to stay on System Z, moving off is risky and potentially more costly. You need to truly understand the scale and complexity ahead of the organization.

Common Obstacles

Under the best of circumstances, mainframe systems are complex, expensive, and difficult to scale. In today’s world, applications written for mainframe legacy systems also present significant operational challenges to customers, compounded by the dwindling pool of engineers who specialize in these outdated technologies. Many organizations want to migrate their legacy applications to the cloud, but to do so they need to go through a lengthy migration process that is made more challenging by the complexity of mainframe applications.

Info-Tech Approach

The most common tactic is for the organization to better realize its z/Series options and adopt a strategy built on complexity and workload understanding. To make the evident, obvious: the options here for the non-commodity are not as broad as with commodity server platforms, and the mainframe is arguably the most widely used and complex non-commodity platform on the market.

Review

We help IT leaders make the most of their z/Series environment

Problem statement: The z/Series remains a vital platform for many businesses and continues to deliver exceptional reliability and performance and play a key role in the enterprise. With the limited and aging resources at hand, CIOs and the like must continually review and understand their migration path with the same regard as any other distributed system roadmap.

This research is designed for:

- IT strategic direction decision makers

- IT managers responsible for an existing z/Series platform

- Organizations evaluating platforms for mission critical applications

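This research will help you:

- Evaluate the future viability of this platform.

- Assess the fit and purpose, and determine TCO.

- Develop strategies for overcoming potential challenges.

- Determine the future of this platform for your organization.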

Analyst Perspective

Good Luck.

Modernize the mainframe … here we go again. Prior to 2020, most organizations were muddling around in “year eleven of the four-year plan” to exit the mainframe platform where a medium-term commitment to the platform existed. Since 2020, it appears the appetite for the mainframe platform changed. Again. Discussions mostly seem to be about what the options are beyond hardware outsourcing or re-platforming to “cloud” migration of workloads – mostly planning and strategy topics. A word of caution: it would appear unwise to stand in front of the exit door for fear of being trampled. Hardware expirations between now and 2025 are motivating hosting deployments. Others are in migration activities, and some have already decommissioned and migrated but are now trying to rehab an operations team that lacks direction and/or structure.

Darin Stahl

The mainframe “fidget spinner”

Thinking of modernizing your mainframe can cause you angst so grab a fidget spinner and relax because we have you covered!

External Business Pressures:

- Digital transformation

- Modernization programs

- Compliance and regulations

- TCO

Internal Considerations:

- Reinvest

- Migrate to a new platform

- Evaluate public and vendor cloud alternatives

- Hosting versus infrastructure outsourcing

Info-Tech Insight

With multiple control points to be addressed, care must be taken to simplify your options while addressing all concerns to ease operational load.

The analyst call review

“Who has Darin talked with?” – Troy Cheeseman

Dating back to 2011, Darin Stahl has been the primary z/Series subject matter expert within the Infrastructure & Operations Research team. The list below represents the percentage of calls, per industry, where z/Series advisory has been provided by Darin*:

- 37% – State Government

- 19% – Insurance

- 11% – Municipality

- 8% – Federal Government

- 8% – Financial Services

- 5% – Higher Education

- 3% – Retail

- 3% – Hospitality/Resort

- 3% – Logistics and Transportation

- 3% – Utility

Based on the Info-Tech call history, there is a consistent cross section of industry members who not only rely upon the mainframe but are also considering migration options.

Note: Of course, this only represents industries who are Info-Tech members and who called for advisory services about the mainframe. There may well be more Info-Tech members with mainframes who have no topic to discuss with us about the mainframe specifically. Why do we mention this? We caution against suggesting things like “somewhat less than 50% of mainframes live in state data centers” or any other extrapolated inference from this data. Our viewpoint and discussion are based on the cases and the calls that we have taken over the years.

*37+ enterprise calls were reviewed and sampled.

Scale out versus scale up

For most workloads, “scale out” (e.g. virtualized cloud or IaaS) is going to provide obvious and quantifiable benefits. However, with some workloads (extremely large analytics or batch processing) a “scale up” approach is more optimal. But scale up is really limited to very specific workloads.

Despite some assumptions, the gains made when moving from scale up to scale out are not linear. When you scale out, you experience a drop in what a single unit of compute can do from a performance perspective. Additionally, latency will be introduced in the form of network overhead, transactions, and replication into operations that were previously done just by passing object references within a single frame. Some applications or use cases will have to be architected or written differently (thinking about the high-demand analytic workloads at large scale).

Remember the “grid computing” craze that hit us during the early part of this century? It was advantageous for many to distribute work across a grid of computing devices, but the advantage gained was contingent on the workload being able to be parceled out as work units and then pulled back together through the application.

There can be some interesting and negative consequences for analytics or batch operations at large scale, as mentioned above. Bottom line: as experienced previously with Micro Focus mainframe ports to x86, the batch operations simply take much longer to complete.
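One way to picture why scale-out gains are not linear is Amdahl's law with an added per-node coordination cost. The sketch below is a simplified, textbook-style model and not a measurement. The function name, the serial fraction, and the linear overhead term are all assumptions chosen for illustration.

```python
def scale_out_speedup(nodes: int, serial_fraction: float,
                      overhead_per_node: float) -> float:
    """Estimated speedup over a single node.

    serial_fraction: share of the work that cannot be parallelized
        (the Amdahl's law term).
    overhead_per_node: extra normalized time added per additional node for
        network hops and replication (an assumed, linear penalty).
    """
    if nodes < 1:
        raise ValueError("nodes must be >= 1")
    parallel_fraction = 1.0 - serial_fraction
    normalized_time = (
        serial_fraction
        + parallel_fraction / nodes
        + overhead_per_node * (nodes - 1)
    )
    return 1.0 / normalized_time


for n in (1, 2, 4, 8, 16, 32, 64):
    speedup = scale_out_speedup(n, serial_fraction=0.05, overhead_per_node=0.002)
    print(f"{n:>3} nodes -> {speedup:.2f}x")
```

With these assumed inputs the estimated speedup stays well below the node count and eventually falls as coordination overhead dominates. That matches the behavior described above for workloads that cannot be cleanly split into independent work units.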

Big Data Considerations*:


Consider your resourcing

Below is a summary of concerns regarding core mainframe skills:

The Challenge: An aging workforce, specialized skills, and high salary expectations

The In-House Solution: Build your mentorship program to create a viable succession plan

Understand your options

| Option | Description |
|---|---|
| Migrate to another platform | There are several challenges to overcome in a migration project, from finding an appropriate alternative platform to rewriting legacy code. Many organizations have incurred huge costs in the attempt, only to be unsuccessful in the end, so make this decision carefully. |
| Use a hosting provider | Organizations often have highly sensitive data on their mainframes (e.g. financial data), so many of these organizations are reluctant to have this data live outside of their four walls. However, the convenience of using a hosting provider makes this an attractive option to consider. |
| Outsource | The most common tactic is for the organization to adopt some level of outsourcing for the non-commodity platform, retaining the application support/development in-house. |
| Re-platform (cloud/vendors) | A customer can “re-platform” the non-commodity workload into public cloud offerings or in a few offerings |
| Reinvest | If you’re staying with the mainframe and keeping it in-house, it’s important to continue to invest in this platform, keep it current, and look for opportunities to optimize its value. |

Migrate

Having perpetual plans to migrate handcuffs your ability to invest in your mainframe, extend its value, and improve cost effectiveness.

If this sounds like your organization, it’s time to do the analysis so you can decide and get clarity on the future of the mainframe in your organization.

|

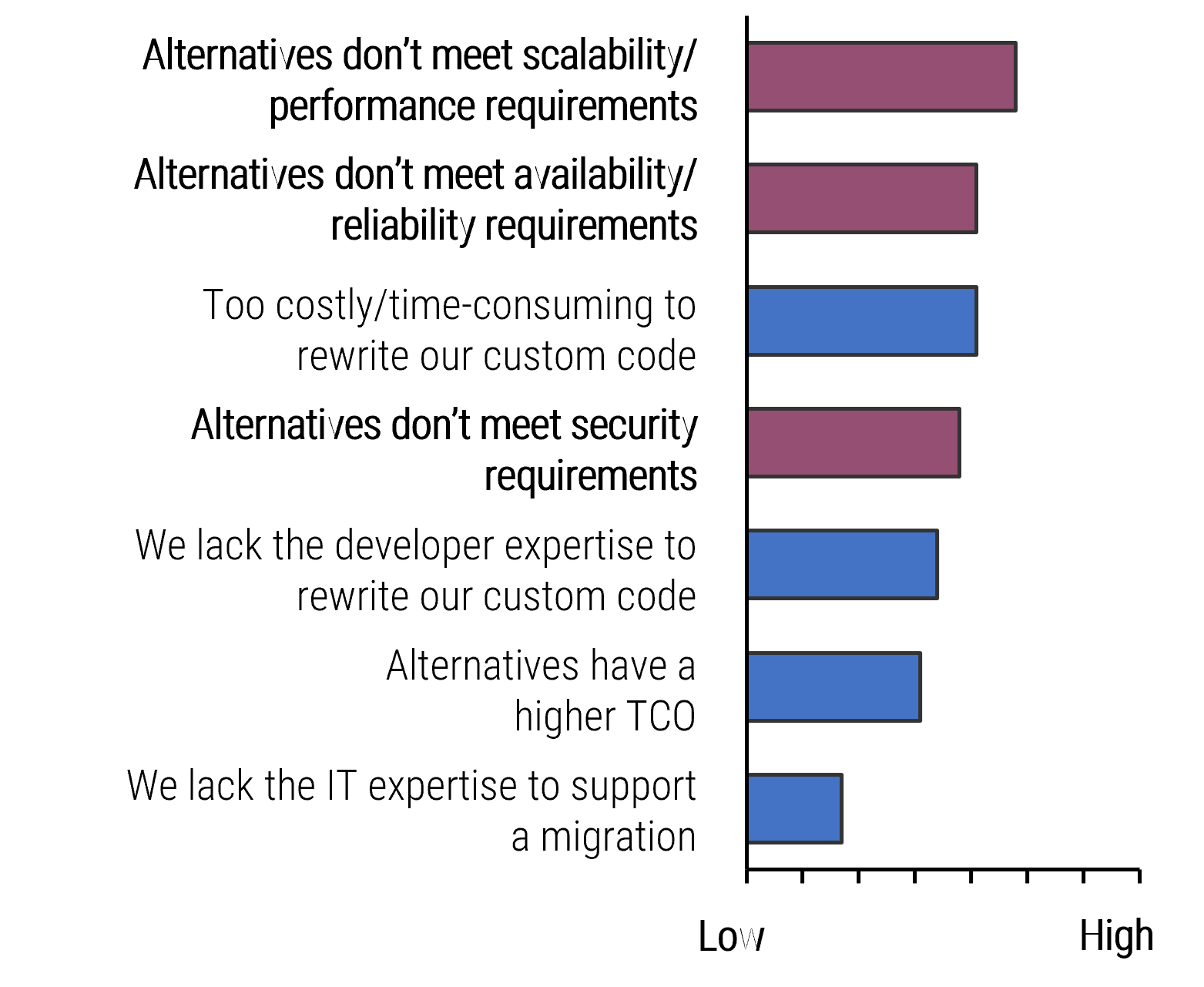

*3 of the top 4 challenges related to shortfalls of alternative platforms

|

*Source: Maximize the Value of IBM Mainframes in My Business |

Hosting

Using a hosting provider is typically more cost-effective than running your mainframe in-house.

Potential for reduced costs

- Hosting enables you to reduce or eliminate your mainframe staff.

- Economies of scale enable hosting providers to reduce software licensing costs. They also have more buying power to negotiate better terms.

- Power and cooling costs are also transferred to the hosting provider.

Reliable infrastructure and experienced staff

- A quality hosting provider will have 24/7 monitoring, full redundancy, and proven disaster recovery capabilities.

- The hosting provider will also have a larger mainframe staff, so they don’t have the same risk of suddenly being without those advanced critical skills.

So, what are the risks?

- A transition to a hosting provider usually means eliminating or significantly reducing your in-house mainframe staff. With that loss of in-house expertise, it will be next to impossible to bring the mainframe back in-house, and you become highly dependent on your hosting provider.

Outsourcing

The most common tactic is for the organization to adopt some level of outsourcing for the non-commodity platform, retaining the application support/development in-house. The options here for the non-commodity (z/Series, IBM Power platforms, for example) are not as broad as with commodity server platforms. More confusingly, the term “outsourcing” for these can include:

- Traditional/Colocation – A customer transitions their hardware environment to a provider’s data center. The provider can then manage the hardware and “system.”

- Onsite Outsourcing – Here a provider will support the hardware/system environment at the client’s site. The provider may acquire the customer’s hardware and provide software licenses. This could also include hiring or “rebadging” staff supporting the platform. This type of arrangement is typically part of a larger services or application transformation. While low risk, it is not as cost-effective as other deployment models.

- Managed Hosting – A customer transitions their legacy application environment to an off-prem hosted multi-tenanted environment. It will provide the most cost savings following the transition, stabilization, and disposal of the existing environment. Some providers will provide software licensing, and some will also support “Bring Your Own,” as permitted by IBM terms, for example.

Info-Tech Insight

Technical debt for non-commodity platforms isn’t only hardware based. Moving an application written for the mainframe onto a “cheaper” hardware platform (or outsourced deployment) leaves the more critical problems and frequently introduces a raft of new ones.

Re-platform – z/Series COBOL Cloud

Re-platforming is not trivial.

While the majority of the coded functionality (JCLs, programs, etc.) migrates easily, there will be a need to re-code or re-write objects, especially if any object, code, or location references are not exactly the same in the new environment. Micro Focus has solid experience in this, but consider it within the context of an 80/20 rule (the actual metrics might be much better than that): some level of rework will have to be accomplished as overhead to the exercise. Build that thought into your thinking and business case.

AWS Cloud

Azure Cloud

Micro Focus COBOL (Visual COBOL)


Re-platform – z/Series (Non-COBOL)

But what if it's not COBOL?

Yeah, a complication for this situation is the legacy code. While re-platforming/re-hosting non-COBOL code is not new, we have not had many member observations compared to the re-platforming/re-hosting of COBOL functionality initiatives. That being said, there are a couple of interesting opportunities to explore.

NTT Data Services (GLOBAL)

ModernSystems (or ModSys) has relevant experience.

ATOS, as a hosting vendor mostly referenced by customers with global locations in a short-term transition posture, could be an option. Lastly, the other Managed Services vendors with NATURAL and Adabas capabilities:

Reinvest

By contrast, reducing the use of your mainframe makes it less cost-effective and more challenging to retain in-house expertise.

- For organizations that have migrated applications off the mainframe (at least partly to reduce dependency on the platform), inevitably there remains a core set of mission critical applications that cannot be moved off for reasons described on the “Migrate” slide. This is when the mainframe becomes a costly burden:

- TCO is relatively high due to low utilization.

- In-house expertise declines as workload declines and current staffing allocations become harder to justify.

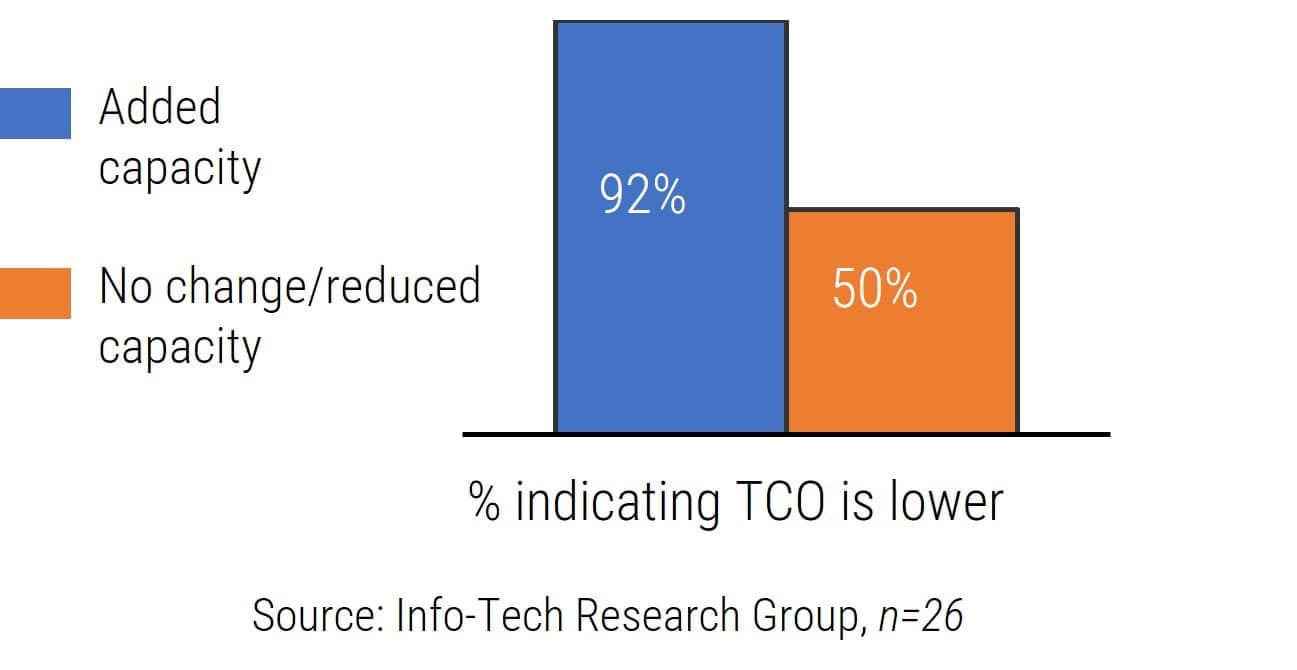

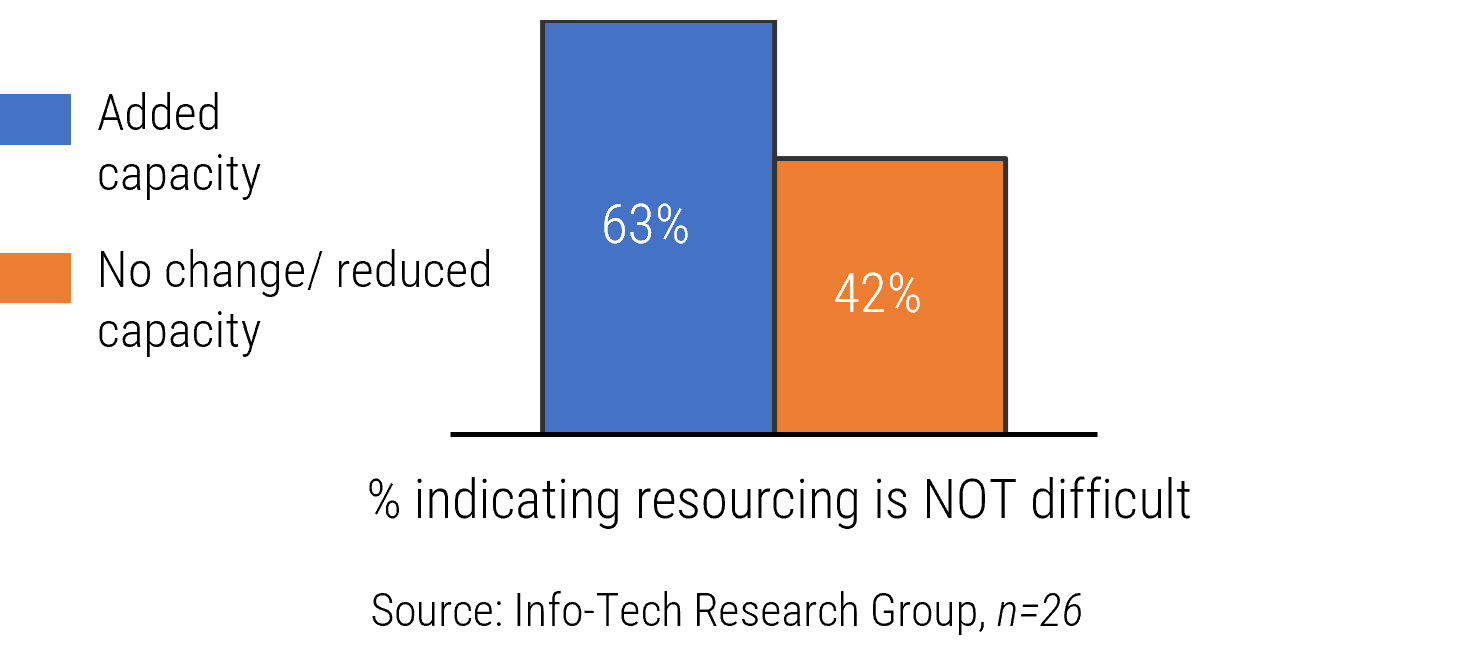

- Organizations that are instead adding capacity and finding new ways to use this platform have lower cost concerns and resourcing challenges. The charts below illustrate this correlation. While some capacity growth is due to normal business growth, some is also due to new workloads, and it reflects an ongoing commitment to the platform.

*92% of organizations that added capacity said TCO is lower than for commodity servers (compared to 50% of those who did not add capacity)

*63% of organizations that added capacity said finding resources is not very difficult (compared to 42% of those who did not add capacity)

An important thought about data migration

Mainframe data migrations – “VSAM, IMS, etc.”

1. Temporary workaround. This would align with a technical solution allowing the VSAM files to be accessed using platforms other than mainframe hardware (Micro Focus or other file store trickery). This can be accomplished relatively quickly but does run the risk of technology obsolescence for the workaround at some point in the future.

2. Bulk conversion. This method would involve the extract/transform/load of the historical records into the new application platform. Often the conversion is completed in order from newest to oldest (the idea is that the newest historical records would have the highest likelihood of an access need), but all files would be converted to the new application and the old data store destroyed.

3. Forward convert. This method would have files undergo the extract/transform/load conversion into the new application as they are accessed or reopened. It would keep historical records indefinitely or until they are converted, or until the legal retention schedule allows for their destruction (hopefully no file must be kept forever). This could be a cost-efficient approach since the historical files remaining on the VSAM platform would shrink over time based on demand from the district attorney process. The conversion process could be automated and scripted, with a QR step allowing for the records to be deleted from the old platform.

Info-Tech Insight

It is not unusual for organizations to leverage options #2 and #3 above to move the functionality forward while containing the scope creep and costs for the data conversions.
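The forward-convert approach can be sketched as a convert-on-access wrapper. This is a minimal illustration under stated assumptions. Both stores are in-memory dictionaries standing in for the legacy file store and the new platform, and the `ForwardConverter` class, the fixed-width record layout, and the verification rule are all hypothetical.

```python
from typing import Callable, Dict

Record = Dict[str, str]


def parse_fixed_width(raw: str) -> Record:
    """Hypothetical layout: 6-character case id followed by a status field."""
    return {"case_id": raw[:6].strip(), "status": raw[6:].strip()}


def all_fields_present(record: Record) -> bool:
    """Stand-in verification step: every field must be non-empty."""
    return all(value != "" for value in record.values())


class ForwardConverter:
    """Convert a record the first time it is accessed, then retire the old copy."""

    def __init__(self, legacy: Dict[str, str],
                 transform: Callable[[str], Record],
                 verify: Callable[[Record], bool]):
        self.legacy = legacy                      # stand-in for the old store
        self.converted: Dict[str, Record] = {}    # stand-in for the new platform
        self.transform = transform
        self.verify = verify

    def get(self, key: str) -> Record:
        if key in self.converted:                 # already migrated
            return self.converted[key]
        raw = self.legacy[key]                    # extract (KeyError if unknown)
        record = self.transform(raw)              # transform
        if not self.verify(record):               # check before deleting anything
            raise ValueError(f"verification failed for record {key!r}")
        self.converted[key] = record              # load
        del self.legacy[key]                      # retire the legacy copy
        return record


legacy_store = {"A1": "000123OPEN  ", "B2": "000456CLOSED"}
store = ForwardConverter(legacy_store, parse_fixed_width, all_fields_present)
print(store.get("A1"))
print(sorted(legacy_store))   # records not yet accessed stay on the old platform
```

Only records that are actually requested incur conversion cost, and the legacy store shrinks as demand touches it. A bulk conversion would instead loop over every key up front.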

Enterprise class job scheduling

Job scheduling or data center automation?

- Enterprise class job scheduling solutions enable complex, unattended, batched, programmatically conditioned task/job scheduling.

- Data center automation (DCIM) software automates and orchestrates the processes and workflow for infrastructure operations, including provisioning, configuring, and patching of physical, virtual, and cloud servers, and monitoring of tasks involved in maintaining the operations of a data center or infrastructure environment.

- While there may be some overlap and/or confusion between data center automation and enterprise class job scheduling solutions, data center automation (DCIM) software solutions are least likely to have support for non-commodity server platforms and lack robust scheduling functionality.
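To make “programmatically conditioned” scheduling concrete, the sketch below runs a small batch in dependency order and skips any job whose upstream job did not succeed. It uses Python's standard `graphlib` module and is not modeled on any vendor's product. The `run_batch` function and the job names are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, Set


def run_batch(jobs: Dict[str, Callable[[], bool]],
              depends_on: Dict[str, Set[str]]) -> Dict[str, str]:
    """Run jobs in dependency order; skip a job if any upstream job did not succeed.

    Assumes every name mentioned in depends_on is also a key in jobs.
    """
    graph = {name: depends_on.get(name, set()) for name in jobs}
    status: Dict[str, str] = {}
    for name in TopologicalSorter(graph).static_order():
        if any(status[dep] != "ok" for dep in graph[name]):
            status[name] = "skipped"      # condition not met: upstream problem
            continue
        status[name] = "ok" if jobs[name]() else "failed"
    return status


# Hypothetical nightly batch: each callable returns True on success.
jobs = {
    "extract": lambda: True,
    "load": lambda: False,      # simulate a failure
    "report": lambda: True,
    "archive": lambda: True,
}
depends_on = {"load": {"extract"}, "report": {"load"}, "archive": {"extract"}}

result = run_batch(jobs, depends_on)
for name in jobs:
    print(name, result[name])
```

Enterprise schedulers layer calendars, cross-platform agents, retries, and alerting on top of this basic idea. The sketch shows only the dependency and condition handling.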

Note: Enterprise job scheduling is a topic with low member interest or demand. Since our published research is driven by members’ interest and needs, the lack of activity or member demand is a significant influence on our ability to aggregate shared member insight, trends, or best practices in our published agenda.

Enterprise class job scheduling features

The feature set for these tools is long and comprehensive. The feature list below is not exhaustive as specific tools may have additional product capabilities. At a minimum, the solutions offered by the vendors in the list below will have the following capabilities:


Understand your vendors and tools

List and compare the job scheduling features of each vendor.

- This is not presented as an exhaustive list.

- The list relies on observations aggregated from analyst engagements with Info-Tech Research Group members. Those member discussions tend to be heavily tilted toward solutions supporting non-commodity platforms.

- Nothing is implied about a solution suitability or capability by the order of presentation or inclusion or absence in this list.

- Advanced Systems Concepts

- BMC

- Broadcom

- HCL

- Fortra

- Redwood

- SMA Technologies

- StoneBranch

- Tidal Software

- Vinzant Software

Info-Tech Insight

Creating vendor profiles will help quickly filter the solution providers that directly meet your z/Series needs.

Advanced Systems Concepts

ActiveBatch

| Workload Management: | ||

Summary Founded in 1981, ASC’s ActiveBatch “provides a central automation hub for scheduling and monitoring so that business-critical systems, like CRM, ERP, Big Data, BI, ETL tools, work order management, project management, and consulting systems, work together seamlessly with minimal human intervention.”* URL Coverage: Global |

Amazon EC2 Hadoop Ecosystem IBM Cognos DataStage IBM PureData (Netezza) Informatica Cloud Microsoft Azure Microsoft Dynamics AX Microsoft SharePoint Microsoft Team Foundation Server |

Oracle EBS Oracle PeopleSoft SAP BusinessObjects ServiceNow Teradata VMware Windows Linux Unix IBM i |

*Advanced Systems Concepts, Inc.

BMC

Control-M

Workload Management: | ||

Summary Founded in 1980, BMC’s Control-M product “simplifies application and data workflow orchestration on premises or as a service. It makes it easy to build, define, schedule, manage, and monitor production workflows, ensuring visibility, reliability, and improving SLAs.”* URL bmc.com/it-solutions/control-m.html Coverage: Global | AWS Azure Google Cloud Platform Cognos IBM InfoSphere DataStage SAP HANA Oracle EBS Oracle PeopleSoft BusinessObjects | ServiceNow Teradata VMware Windows Linux Unix IBM i IBM z/OS zLinux |

*BMC

Broadcom

Automic Automation

AutoSys Workload Automation

Workload Management: | ||

Summary Broadcom offers Automic Automation and AutoSys Workload Automation, which “gives you the agility, speed and reliability required for effective digital business automation. From a single unified platform, Automic centrally provides the orchestration and automation capabilities needed accelerate your digital transformation and support the growth of your company.”* URL broadcom.com/products/software/automation/automic-automation broadcom.com/products/software/automation/autosys Coverage: Global

| Windows MacOS Linux UNIX AWS Azure Google Cloud Platform VMware z/OS zLinux System i OpenVMS Banner Ecometry | Hadoop Oracle EBS Oracle PeopleSoft SAP BusinessObjects ServiceNow Teradata VMware Windows Linux Unix IBM i |

HCL

Workload Automation

Workload Management: | |||

Summary “HCL Workload Automation streamlined modelling, advanced AI and open integration for observability. Accelerate the digital transformation of modern enterprises, ensuring business agility and resilience with our latest version of one stop automation platform. Orchestrate unattended and event-driven tasks for IT and business processes from legacy to cloud and kubernetes systems.”* URL hcltechsw.com/workload-automation Coverage: Global

| Windows MacOS Linux UNIX AWS Azure Google Cloud Platform VMware z/OS zLinux System i OpenVMS IBM SoftLayer IBM BigInsights | IBM Cognos Hadoop Microsoft Dynamics 365 Microsoft Dynamics AX Microsoft SQL Server Oracle E-Business Suite PeopleSoft SAP ServiceNow Apache Oozie Informatica PowerCenter IBM InfoSphere DataStage Salesforce BusinessObjects BI | IBM Sterling Connect:Direct IBM WebSphere MQ IBM Cloudant Apache Spark |

Fortra

JAMS Scheduler

Workload Management: | ||

Summary Fortra’s “JAMS is a centralized workload automation and job scheduling solution that runs, monitors, and manages jobs and workflows that support critical business processes. JAMS reliably orchestrates the critical IT processes that run your business. Our comprehensive workload automation and job scheduling solution provides a single pane of glass to manage, execute, and monitor jobs—regardless of platforms or applications.”* URL Coverage: Global

| OpenVMS OS/400 Unix Windows z/OS SAP Oracle Microsoft Infor Workday AWS Azure Google Cloud Compute ServiceNow Salesforce | Micro Focus Microsoft Dynamics 365 Microsoft Dynamics AX Microsoft SQL Server MySQL NeoBatch Netezza Oracle PL/SQL Oracle E-Business Suite PeopleSoft SAP SAS Symitar |

*JAMS

Redwood

Redwood SaaS

Workload Management: | ||

Summary Founded in 1993 and delivered as a SaaS solution, ”Redwood lets you orchestrate securely and reliably across any application, service or server, in the cloud or on-premises, all inside a single platform. Automation solutions are at the core of critical business operations such as forecasting, replenishment, reconciliation, financial close, order to cash, billing, reporting, and more. Enterprises in every industry — from manufacturing, utility, retail, and biotech to healthcare, banking, and aerospace.”* URL Coverage: Global

| OpenVMS OS/400 Unix Windows z/OS SAP Oracle Microsoft Infor Workday AWS Azure Google Cloud Compute ServiceNow Salesforce | GitHub Office 365 Slack Dropbox Tableau Informatica SAP BusinessObjects Cognos Microsoft Power BI Amazon QuickSight VMware Xen Kubernetes |

Fortra

Robot Scheduler

Workload Management: | |

Summary “Robot Schedule’s workload automation capabilities allow users to automate everything from simple jobs to complex, event-driven processes on multiple platforms and centralize management from your most reliable system: IBM i. Just create a calendar of when and how jobs should run, and the software will do the rest.”* URL fortra.com/products/job-scheduling-software-ibm-i Coverage: Global

| IBM i (System i, iSeries, AS/400) AIX/UNIX Linux Windows SQL/Server Domino JD Edwards EnterpriseOne SAP Automate Schedule (formerly Skybot Scheduler) |

SMA Technologies

OpCon

Workload Management: | |||

Summary Founded in 1980, SMA offers to “save time, reduce error, and free your IT staff to work on more strategic contributions with OpCon from SMA Technologies. OpCon offers powerful, easy-to-use workload automation and orchestration to eliminate manual tasks and manage workloads across business-critical operations. It's the perfect fit for financial institutions, insurance companies, and other transactional businesses.”* URL Coverage: Global | Windows Linux Unix z/Series IBM i Unisys Oracle SAP Microsoft Dynamics AX Infor M3 Sage Cegid Temenos | FICS Microsoft Azure Data Management Microsoft Azure VM Amazon EC2/AWS Web Services RESTful Docker Google Cloud VMware ServiceNow Commvault Microsoft WSUS Microsoft Orchestrator | Java JBoss Asysco AMT Tuxedo ART Nutanix Corelation Symitar Fiserv DNA Fiserv XP2 |

StoneBranch

Universal Automation Center (UAC)

Workload Management: | |||

Summary Founded in 1999, “the Stonebranch Universal Automation Center (UAC) is an enterprise-grade business automation solution that goes beyond traditional job scheduling. UAC's event-based workload automation solution is designed to automate and orchestrate system jobs and tasks across all mainframe, on-prem, and hybrid IT environments. IT operations teams gain complete visibility and advanced control with a single web-based controller, while removing the need to run individual job schedulers across platforms.”* URL stonebranch.com/it-automation-solutions/enterprise-job-scheduling Coverage: Global | Windows Linux Unix z/Series Apache Kafka AWS Databricks Docker GitHub Google Cloud Informatica | Jenkins Jscape Kubernetes Microsoft Azure Microsoft SQL Microsoft Teams PagerDuty PeopleSoft Pentaho RedHat Ansible Salesforce | SAP ServiceNow Slack SMTP and IMAP Snowflake Tableau VMware |

Tidal Software

Workload Automation

Workload Management: | |||

Summary Founded in 1979, Tidal’s Workload Automation will “simplify management and execution of end-to-end business processes with our unified automation platform. Orchestrate workflows whether they're running on-prem, in the cloud or hybrid environments.”* URL Coverage: Global | CentOS Linux Microsoft Windows Server Open VMS Oracle Cloud Oracle Enterprise Linux Red Hat Enterprise Server Suse Enterprise Tandem NSK Ubuntu UNIX HPUX (PA-RISC, Itanium) Solaris (Sparc, X86) | AIX, iSeries z/Linux z/OS Amazon AWS Microsoft Azure Oracle OCI Google Cloud ServiceNow Kubernetes VMware Cisco UCS SAP R/3 & SAP S/4HANA Oracle E-Business | Oracle ERP Cloud PeopleSoft JD Edwards Hadoop Oracle DB Microsoft SQL SAP BusinessObjects IBM Cognos FTP/FTPS/SFTP Informatica |

Vinzant Software

Global ECS

Workload Management: | |

Summary Founded in 1987, Global ECS can “simplify operations in all areas of production with the GECS automation framework. Use a single solution to schedule, coordinate and monitor file transfers, database operations, scripts, web services, executables and SAP jobs. Maximize efficiency for all operations across multiple business units intelligently and automatically.”* URL Coverage: Global | Windows Linux Unix iSeries SAP R/3 & SAP S/4HANA Oracle, SQL/Server |

Activity

Scale Out or Scale Up

Activities:

- Complete the Scale Up vs. Scale Out TCO Tool.

- Compare total lifecycle costs to determine TCO.

This activity involves the following participants:

IT strategic direction decision makers

IT managers responsible for an existing z/Series platform

Organizations evaluating platforms for mission critical applications

Outcomes of this step:

- Completed Scale Up vs. Scale Out TCO Tool

Info-Tech Insight

This checkpoint process creates transparency around agreement costs with the business and gives the business an opportunity to re-evaluate its requirements for a potentially leaner agreement.

Scale out versus scale up activity

The Scale Up vs. Scale Out TCO Tool provides organizations with a framework for estimating the costs associated with purchasing and licensing for a scale-up and scale-out environment over a multi-year period. Use this tool to:

Info-Tech Insight

Watch out for inaccurate financial information. Ensure that the financials for cost match your maintenance and contract terms.

Use the Scale Up vs. Scale Out TCO Tool to determine your TCO options.

Related Info-Tech Research

Effectively Acquire Infrastructure Services

Acquiring a service is like buying an experience. Don’t confuse the simplicity of buying hardware with buying an experience.

Outsource IT Infrastructure to Improve System Availability, Reliability, and Recovery

There are very few IT infrastructure components you should be housing internally – outsource everything else.

Build Your Infrastructure Roadmap

Move beyond alignment: Put yourself in the driver’s seat for true business value.

Make the most of cloud for your organization.

Drive consensus by outlining how your organization will use the cloud.

Build a Strategy for Big Data Platforms

Know where to start and where to focus attention in the implementation of a big data strategy.

Improve your RFPs to gain leverage and get better results.

Research Authors

Darin Stahl, Principal Research Advisor, Info-Tech Research Group

Darin is a Principal Research Advisor within the Infrastructure Practice, and leveraging 38+ years of experience, his areas of focus include: IT Operations Management, Service Desk, Infrastructure Outsourcing, Managed Services, Cloud Infrastructure, DRP/BCP, Printer Management, Managed Print Services, Application Performance Monitoring/APM, Managed FTP, non-commodity servers (z/Series, mainframe, IBM i, AIX, Power PC).

Troy Cheeseman, Practice Lead, Info-Tech Research Group

Troy has over 25 years of IT management experience and has championed large enterprise-wide technology transformation programs, remote/home office collaboration and remote work strategies, BCP, IT DRP, IT Operations and expense management programs, international right placement initiatives, and large technology transformation initiatives (M&A). Additionally, he has deep experience working with IT solution providers and technology (cloud) start-ups.

Bibliography

“AWS Announces AWS Mainframe Modernization.” Business Wire, 30 Nov. 2021.

de Valence, Phil. “Migrating a Mainframe to AWS in 5 Steps with Astadia?” AWS, 23 Mar. 2018.

Graham, Nyela. “New study shows mainframes still popular despite the rise of cloud—though times are changing…fast?” WatersTechnology, 12 Sept. 2022.

“Legacy applications can be revitalized with API.” MuleSoft, 2022.

Vecchio, Dale. “The Benefits of Running Mainframe Applications on LzLabs Software Defined Mainframe® & Microsoft Azure.” LzLabs Sites, Mar. 2021.


Tymans Group is a brand by Gert Taeymans BV

Gert Taeymans bv

Europe: Koning Albertstraat 136, 2070 Burcht, Belgium — VAT No: BE0685.974.694 — phone: +32 (0) 468.142.754

USA: 4023 KENNETT PIKE, SUITE 751, GREENVILLE, DE 19807 — Phone: 1-917-473-8669

Copyright 2017-2022 Gert Taeymans BV