Further reading

Improve Requirements Gathering

Back to basics: great products are built on great requirements.

Analyst Perspective

A strong process for business requirements gathering is essential for application project success. However, most organizations do not take a strategic approach to optimizing how they conduct business analysis and requirements definition.

"Robust business requirements are the basis of a successful project. Without requirements that correctly articulate the underlying needs of your business stakeholders, projects will fail to deliver value and involve significant rework. In fact, an Info-Tech study found that, of projects that fail, over two-thirds fail due to poorly defined business requirements.

Despite the importance of good business requirements to project success, many organizations struggle to define a consistent and repeatable process for requirements gathering. This results in wasted time and effort from both IT and the business, and generates requirements that are incomplete and of dubious value. Additionally, many business analysts lack the competencies and analytical techniques needed to properly execute the requirements gathering process.

This research will help you get requirements gathering right by developing a set of standard operating procedures across requirements elicitation, analysis, and validation. It will also help you identify and fine-tune the business analyst competencies necessary to make requirements gathering a success."

– Ben Dickie, Director, Enterprise Applications, Info-Tech Research Group

Our understanding of the problem

This Research is Designed For:

- The IT applications director who has accountability for ensuring that requirements gathering procedures are both effective and efficient.

- The designated business analyst or requirements gathering professional who needs a concrete understanding of how to execute upon requirements gathering SOPs.

This Research Will Help You:

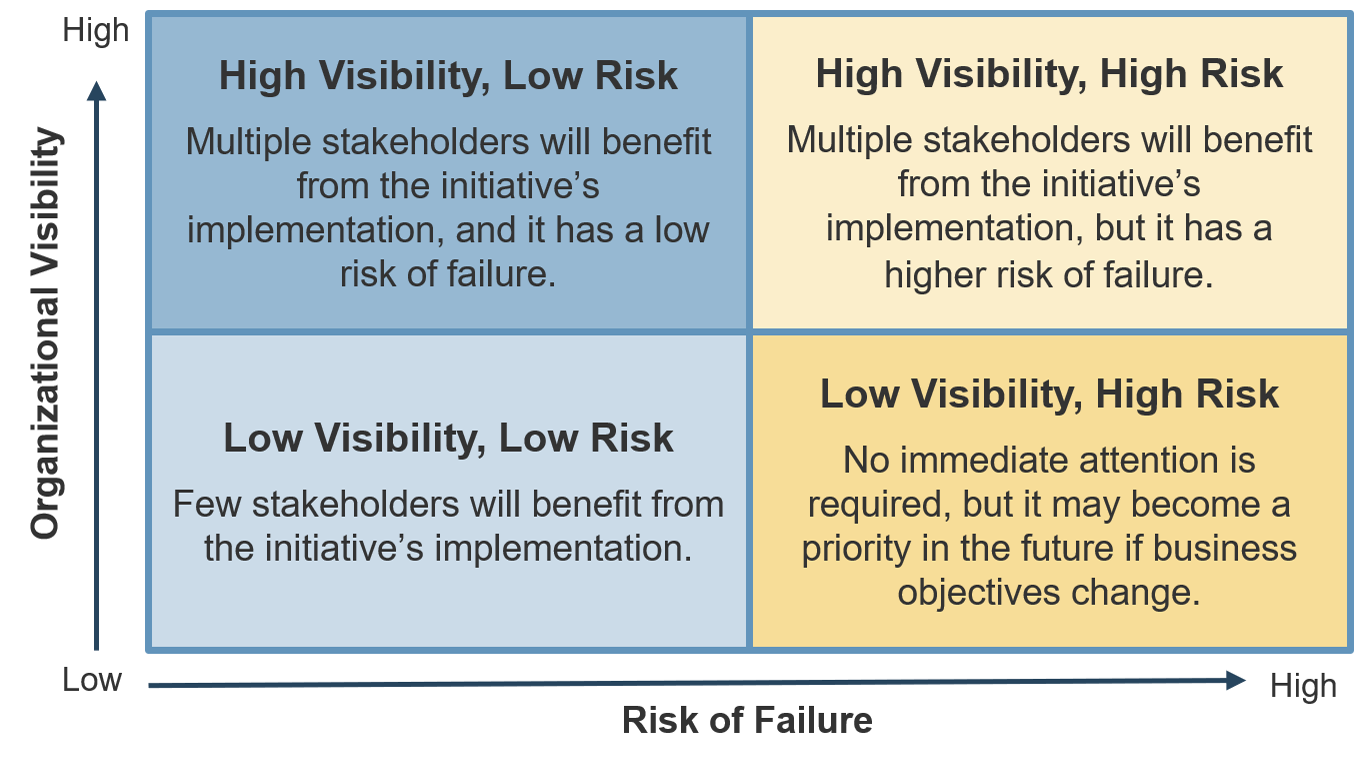

- Diagnose your current state and identify (and prioritize) gaps that exist between your target requirements gathering needs and your current capabilities and processes.

- Build a requirements gathering SOP that prescribes a framework for requirements governance and technology usage, as well as techniques for elicitation, analysis, and validation.

This Research Will Also Assist:

- The business partner/stakeholder who is interested in ways to work with IT to improve upon existing procedures for requirements gathering.

- Systems analysts and developers who need to understand how business requirements are effectively gathered upstream.

This Research Will Help Them:

- Understand the significance and importance of business requirements gathering on overall project success and value alignment.

- Create rules of engagement for assisting IT with the collection of requirements from the right stakeholders in a timely fashion.

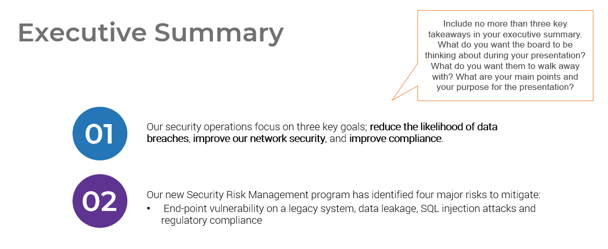

Executive summary

Situation

- Strong business requirements are essential to project success – inadequate requirements are the number one reason that projects fail.

- Organizations need a consistent, repeatable, and prescriptive set of standard operating procedures (SOPs) that dictate how business requirements gathering should be conducted.

Complication

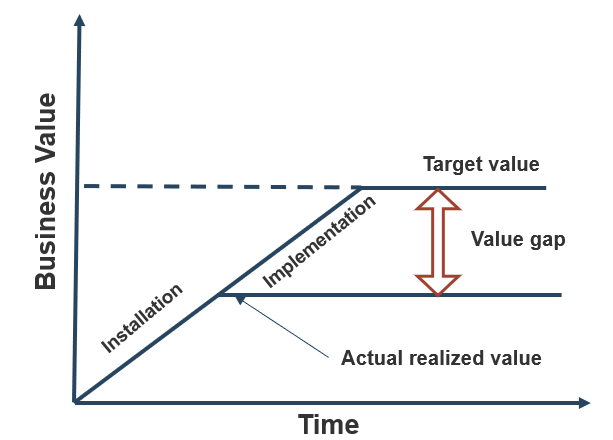

- If proper due diligence for requirements gathering is not conducted, then the applications that IT is deploying won’t meet business objectives, and they will fail to deliver adequate business value.

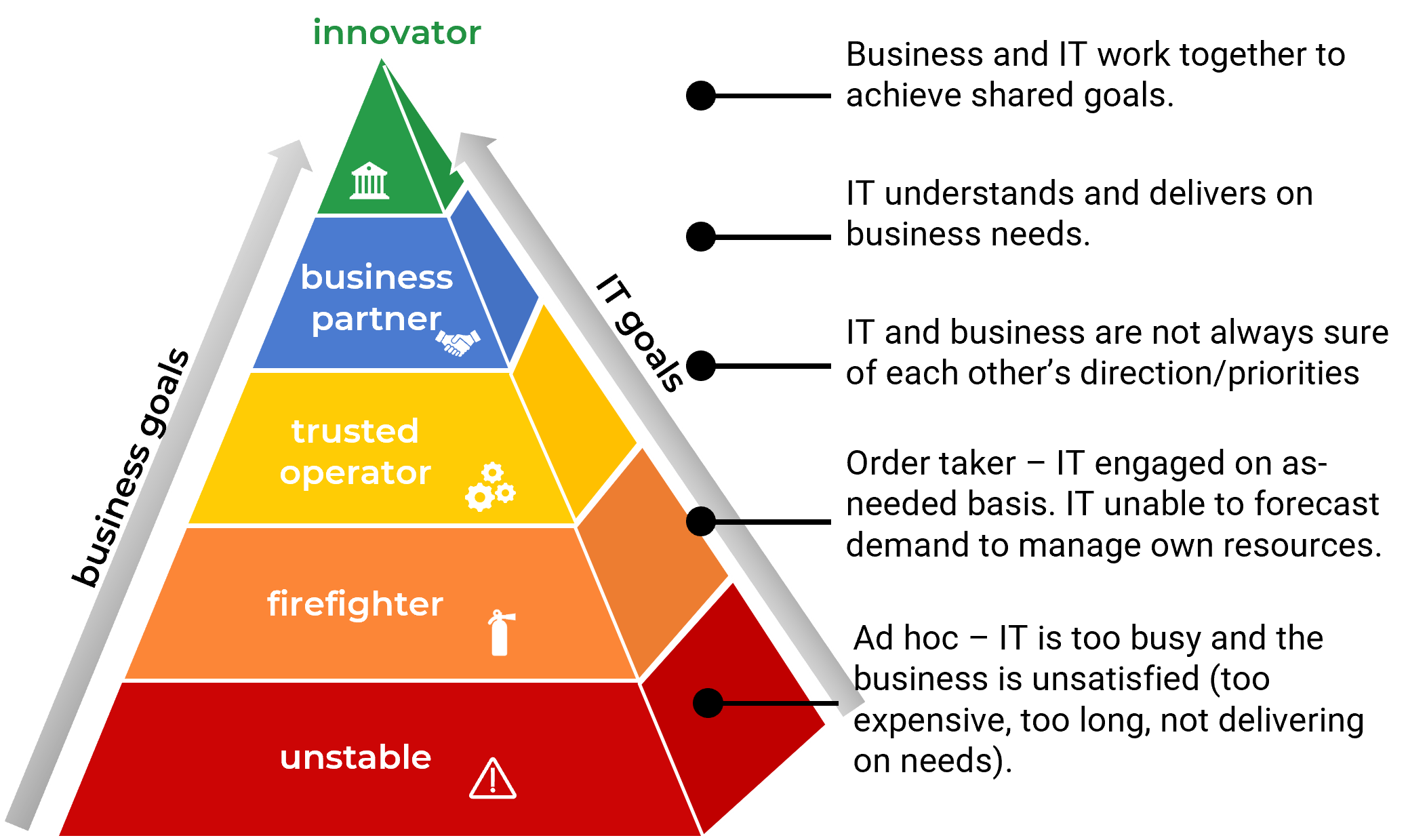

- Inaccurate requirements definition can lead to significant amounts of project rework and hurt the organization’s financial performance. It will also damage the relationship between IT and the business.

Resolution

- To avoid delivering makeshift solutions (paving the cow path), organizations need to gather requirements with the desired future state in mind. Organizations need to keep an open mind when gathering requirements.

- Creating a unified set of SOPs is essential for effectively gathering requirements; these procedures should cover not just elicitation, analysis, and validation, but also include process governance and documentation.

- BAs who conduct requirements gathering must demonstrate proven competencies for stakeholder management, analytical techniques, and the ability to speak the language of both the business and IT.

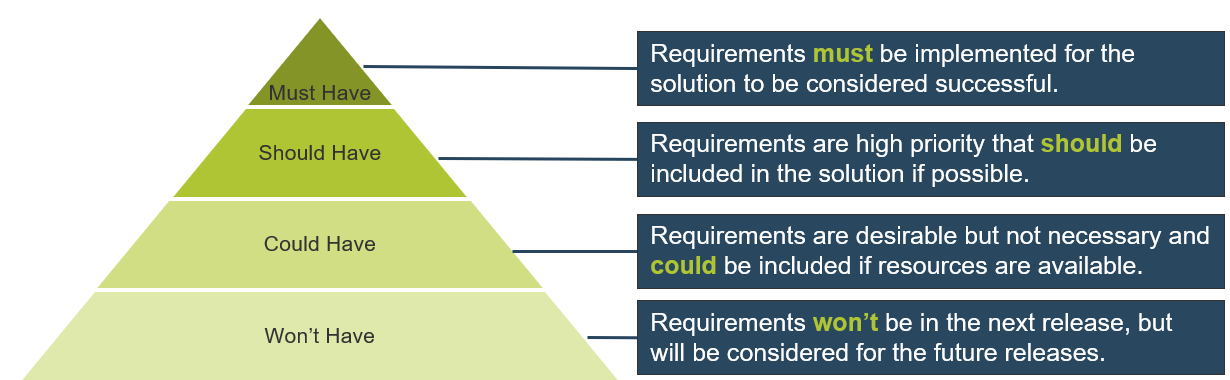

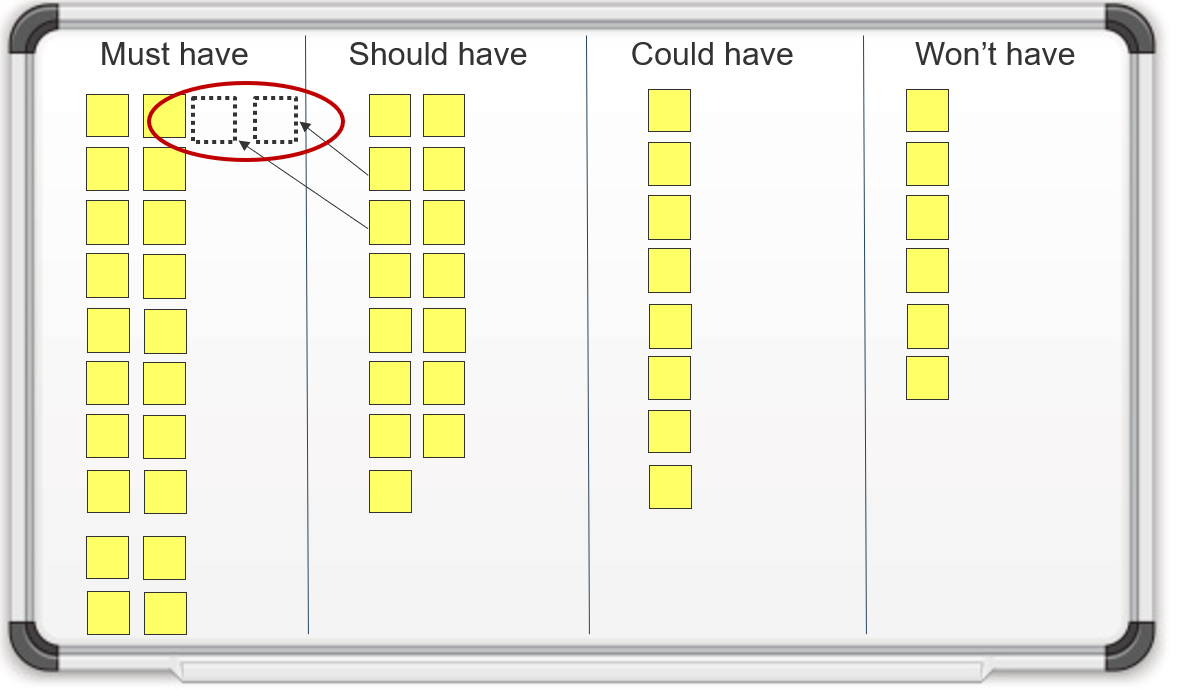

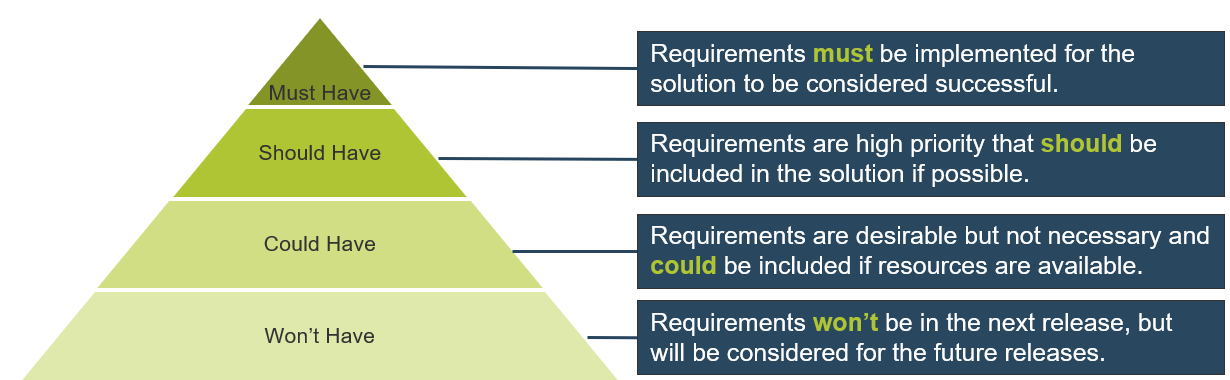

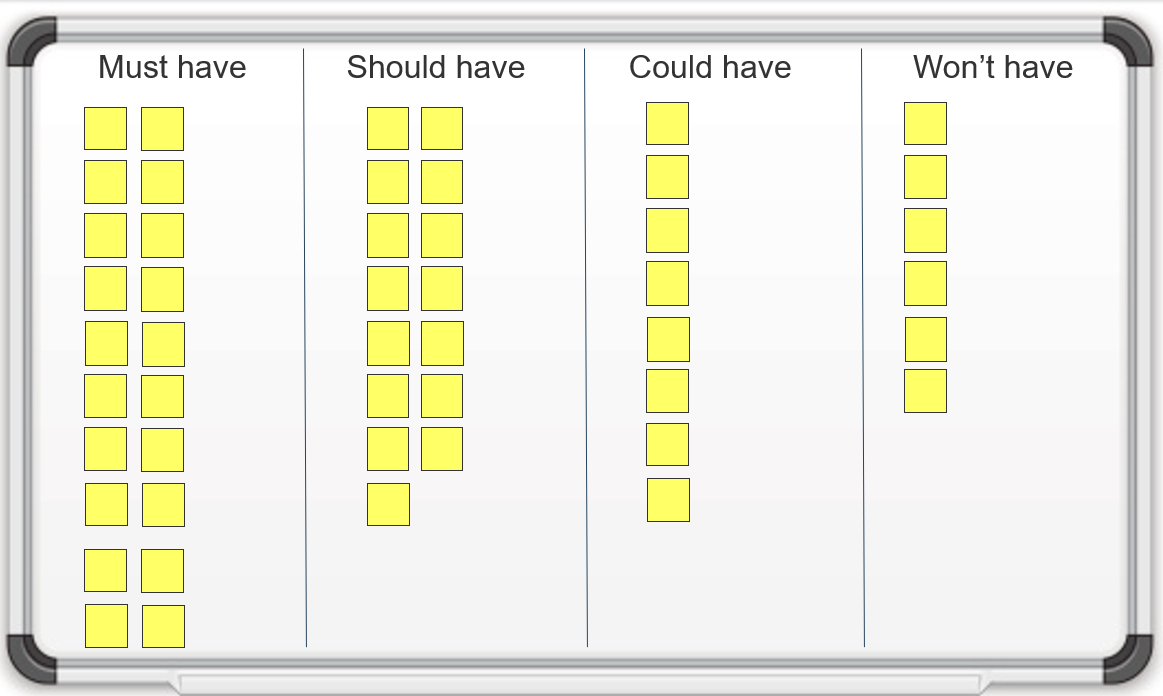

- An improvement in requirements analysis will strengthen the relationship between business and IT, as more and more applications satisfy stakeholder needs. More importantly, the applications delivered by IT will meet all of the must-have and at least some of the nice-to-have requirements, allowing end users to execute their day-to-day responsibilities.

Info-Tech Insight

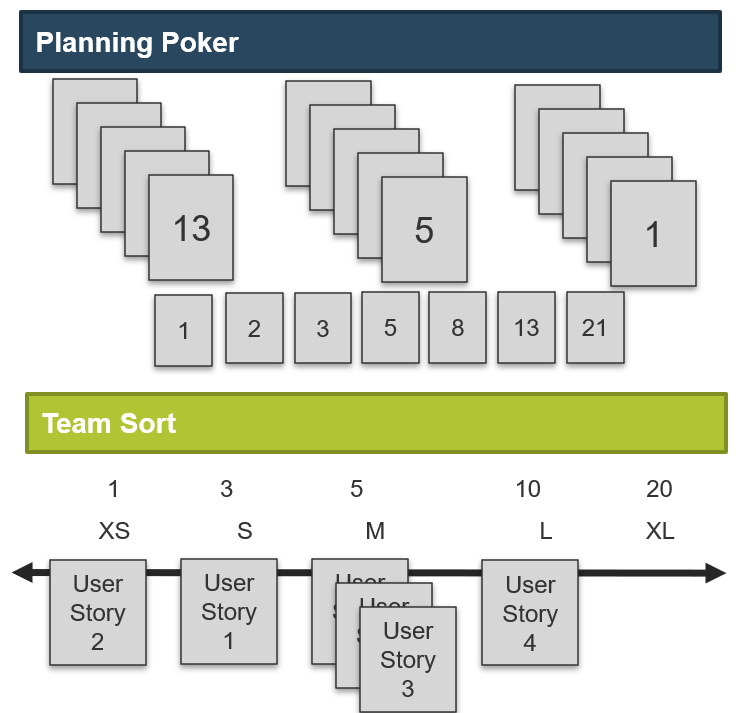

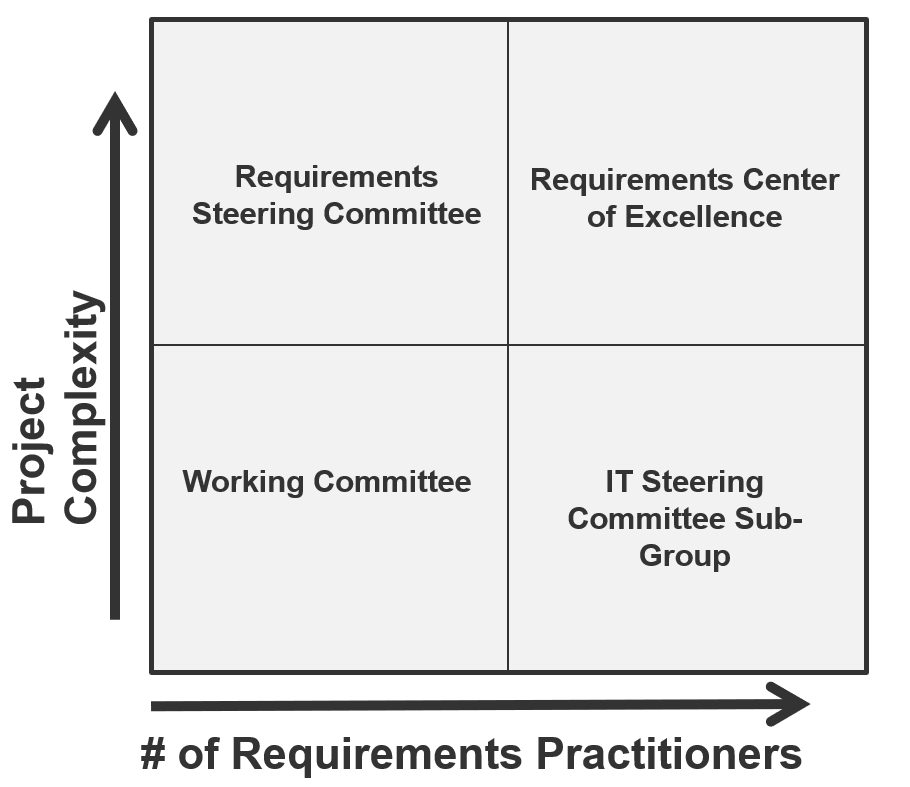

- Requirements gathering SOPs should be prescriptive based on project complexity. Complex projects will require more analytical rigor. Simpler projects can be served by more straightforward techniques like user story development.

- Business analysts (BAs) can make or break the execution of the requirements gathering process. A strong process still needs to be executed well by BAs with the right blend of skills and knowledge.

Understand what constitutes a strong business requirement

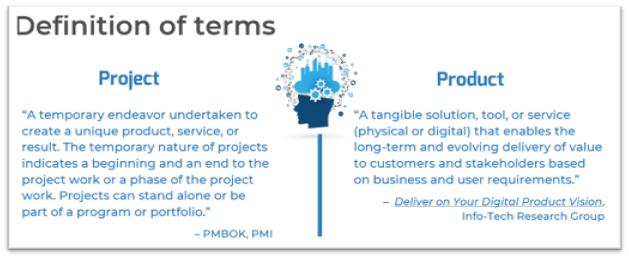

A business requirement is a statement that clearly outlines the functional capability that the business needs from a system or application. There are several attributes to look for in requirements:

- Verifiable: Stated in a way that can be easily tested.

- Unambiguous: Free of subjective terms and can only be interpreted in one way.

- Complete: Contains all relevant information.

- Consistent: Does not conflict with other requirements.

- Achievable: Possible to accomplish within budgetary and technological constraints.

- Traceable: Trackable from inception through to testing.

- Unitary: Addresses only one thing and cannot be decomposed into multiple requirements.

- Agnostic: Doesn’t presuppose a specific vendor or product.

Not all requirements will meet all of the attributes.

In some situations, an insight will reveal new requirements. These requirements may not satisfy all of the attributes listed above, and that’s okay. If a new insight changes the direction of the project, re-evaluate the scope of the project.

Attributes are context specific.

Depending on the scope of the project, certain attributes will carry more weight than others. Weigh the value of each attribute before elicitation and adjust as required. For example, “verifiable” will be a less-valued attribute when developing a client-facing website with no established measurement method or software.
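One way to make attribute weighting concrete is a simple weighted checklist. The sketch below is illustrative only: the `Requirement` class, the `weighted_score` function, the weight values, and the sample requirement are all assumptions made for this example, not part of Info-Tech's tooling.

```python
from dataclasses import dataclass, field

# The eight attributes named in this section.
ATTRIBUTES = (
    "verifiable", "unambiguous", "complete", "consistent",
    "achievable", "traceable", "unitary", "agnostic",
)


@dataclass
class Requirement:
    """Hypothetical record of a requirement and the attributes a reviewer judged it to meet."""
    text: str
    satisfied: set = field(default_factory=set)


def weighted_score(req, weights):
    """Return the share of total attribute weight the requirement satisfies (0.0 to 1.0)."""
    total = sum(weights[a] for a in ATTRIBUTES)
    met = sum(weights[a] for a in ATTRIBUTES if a in req.satisfied)
    return met / total if total else 0.0


# Assumed weights for a client-facing website project: "verifiable" counts for less.
weights = {a: 1.0 for a in ATTRIBUTES}
weights["verifiable"] = 0.5

req = Requirement(
    "Visitors can reset their password by email.",
    satisfied={"unambiguous", "complete", "consistent",
               "achievable", "unitary", "agnostic"},
)
score = weighted_score(req, weights)
print(f"{score:.2f}")
```

A score like this is only a conversation aid for the BA and stakeholders. A requirement that misses a low-weight attribute can still be acceptable, in line with the point that not all requirements will meet all of the attributes.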

Build a firm foundation: requirements gathering is an essential step in any project, but many organizations struggle

Proper requirements gathering is critical for delivering business value from IT projects, but it remains an elusive and perplexing task for most organizations. You need to have a strategy for end-to-end requirements gathering, or your projects will consistently fail to meet business expectations.

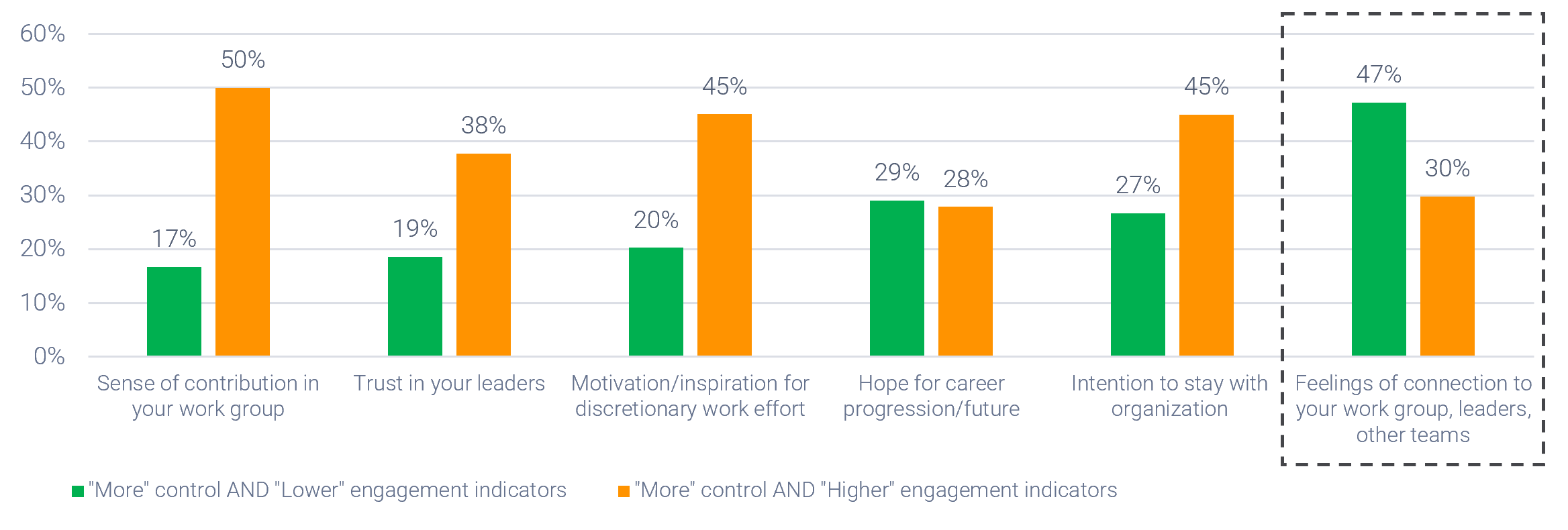

50% of project rework is attributable to problems with requirements. (Info-Tech Research Group)

45% of delivered features are utilized by end users. (The Standish Group)

78% of IT professionals believe the business is “usually” or “always” out of sync with project requirements. (Blueprint Software Systems)

45% of IT professionals admit to being “fuzzy” about the details of a project’s business objectives. (Blueprint Software Systems)

Requirements gathering is truly an organization-spanning issue, and it falls directly on the IT directors who oversee projects to put prudent SOPs in place for managing the requirements gathering process. Despite its importance, the majority of organizations have challenges with requirements gathering.

What happens when requirements are no longer effective?

- Poor requirements can have a very visible and negative impact on deployed apps.

- IT receives the blame for any project shortcomings or failures.

- IT loses its credibility and ability to champion future projects.

- Late projects use IT resources longer than planned.

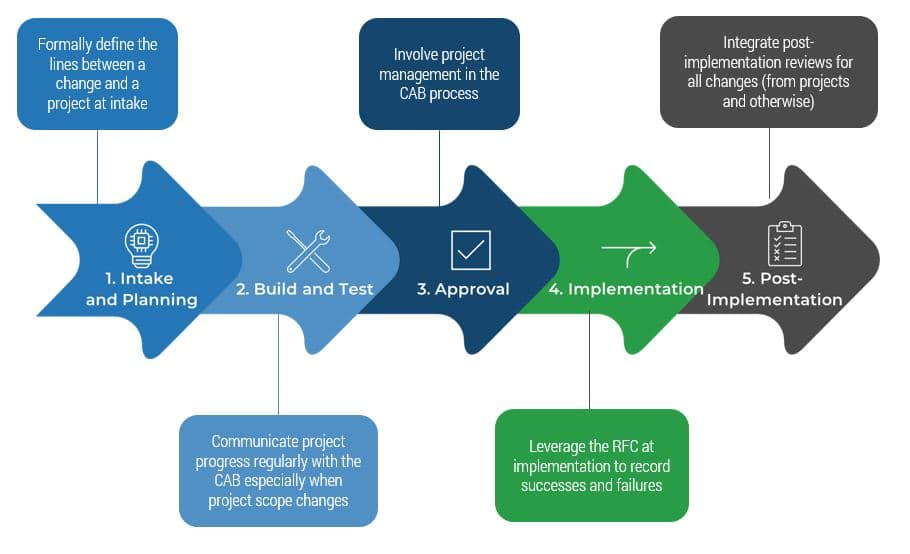

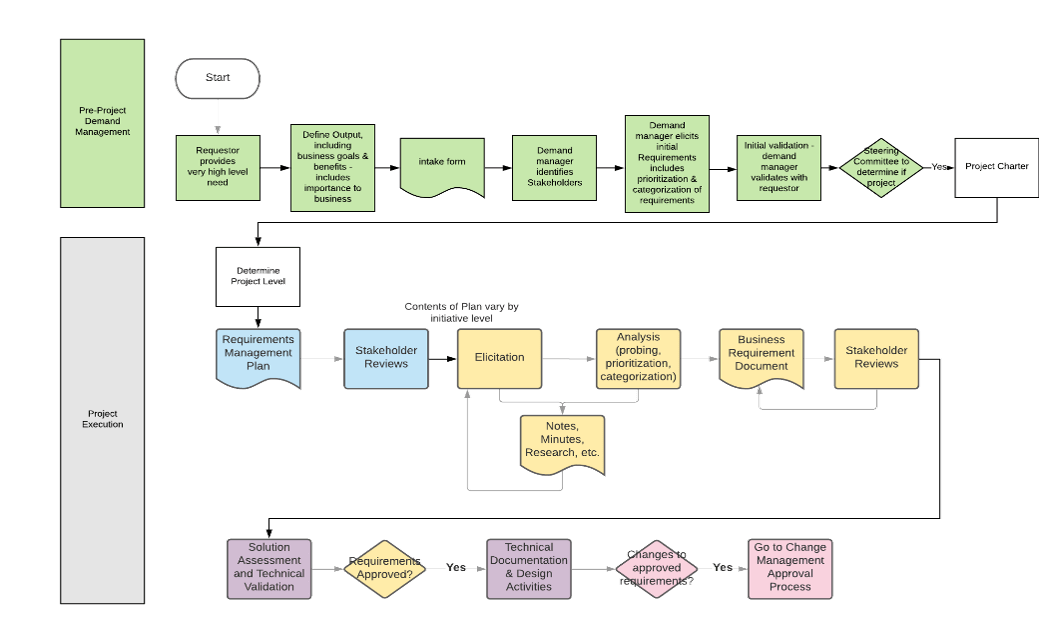

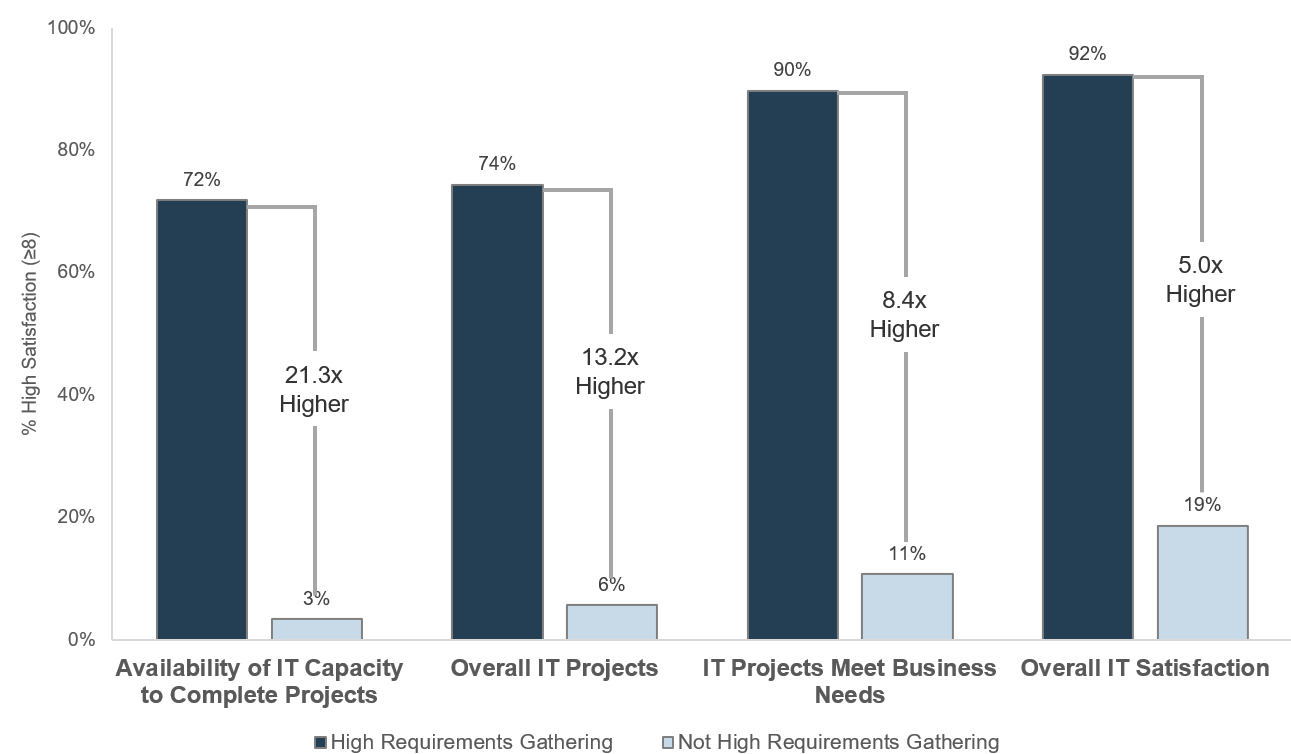

Requirements gathering is a core component of the overall project lifecycle that must be given its due diligence

PMBOK’s Five Phase Project Lifecycle

Initiate – Plan: Requirements Gathering Lives Here – Execute – Control – Close

Inaccurate requirements are the second most common cause of project failure (Project Management Institute ‒ Smartsheet).

Requirements gathering is a critical stage of project planning.

Depending on whether you take an Agile or Waterfall project management approach, it can be extended into the initiate and execute phases of the project lifecycle.

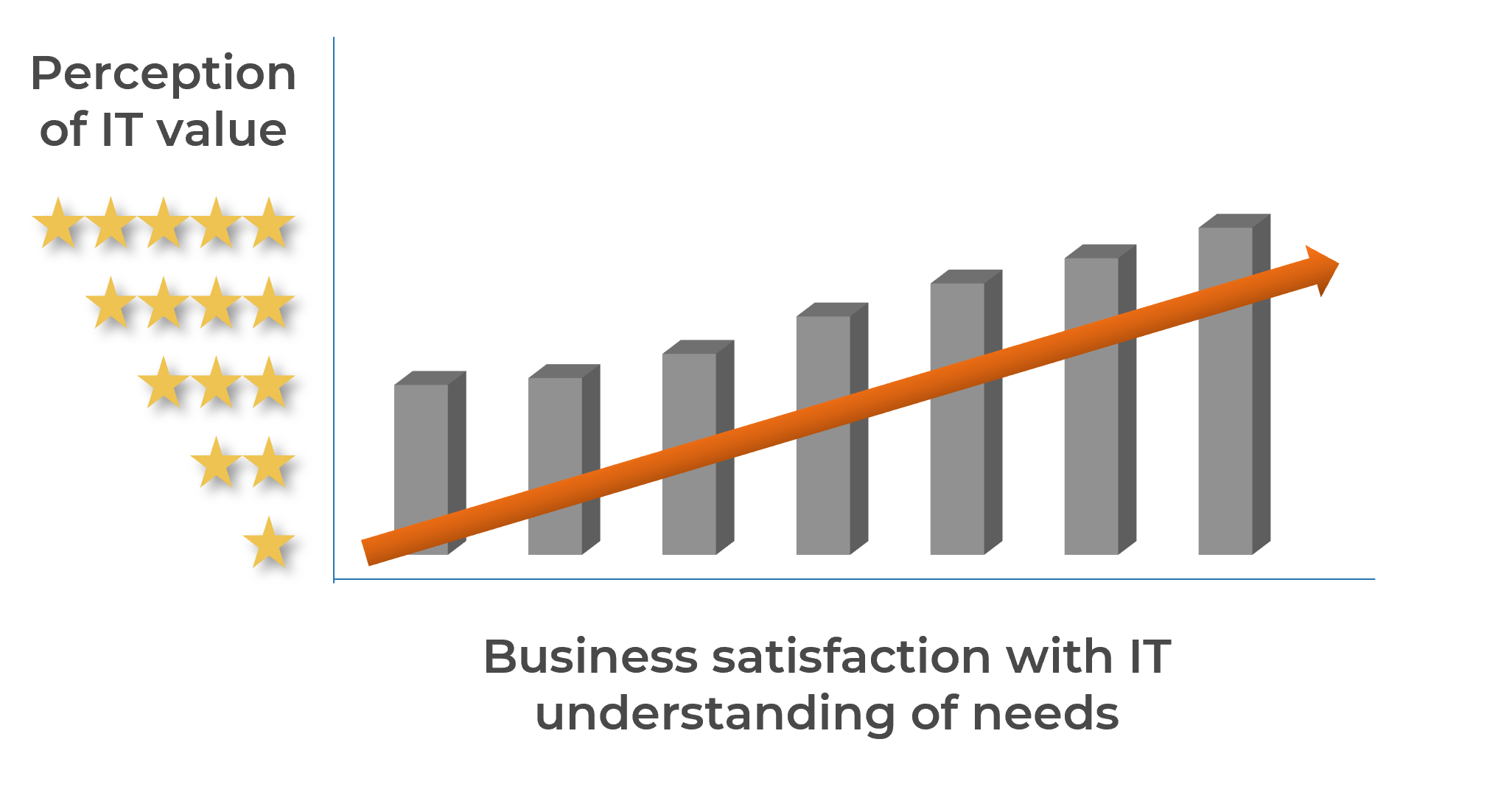

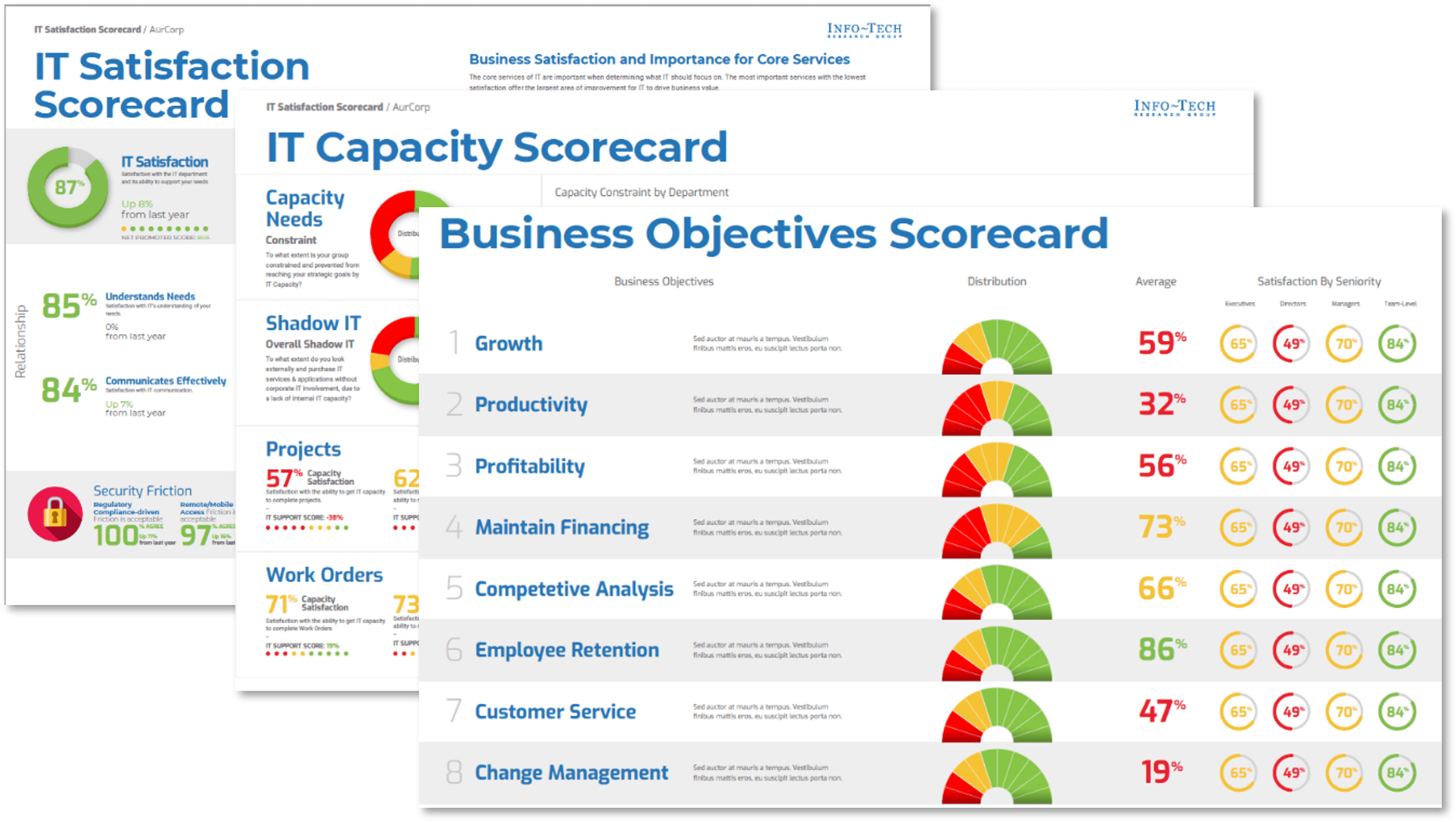

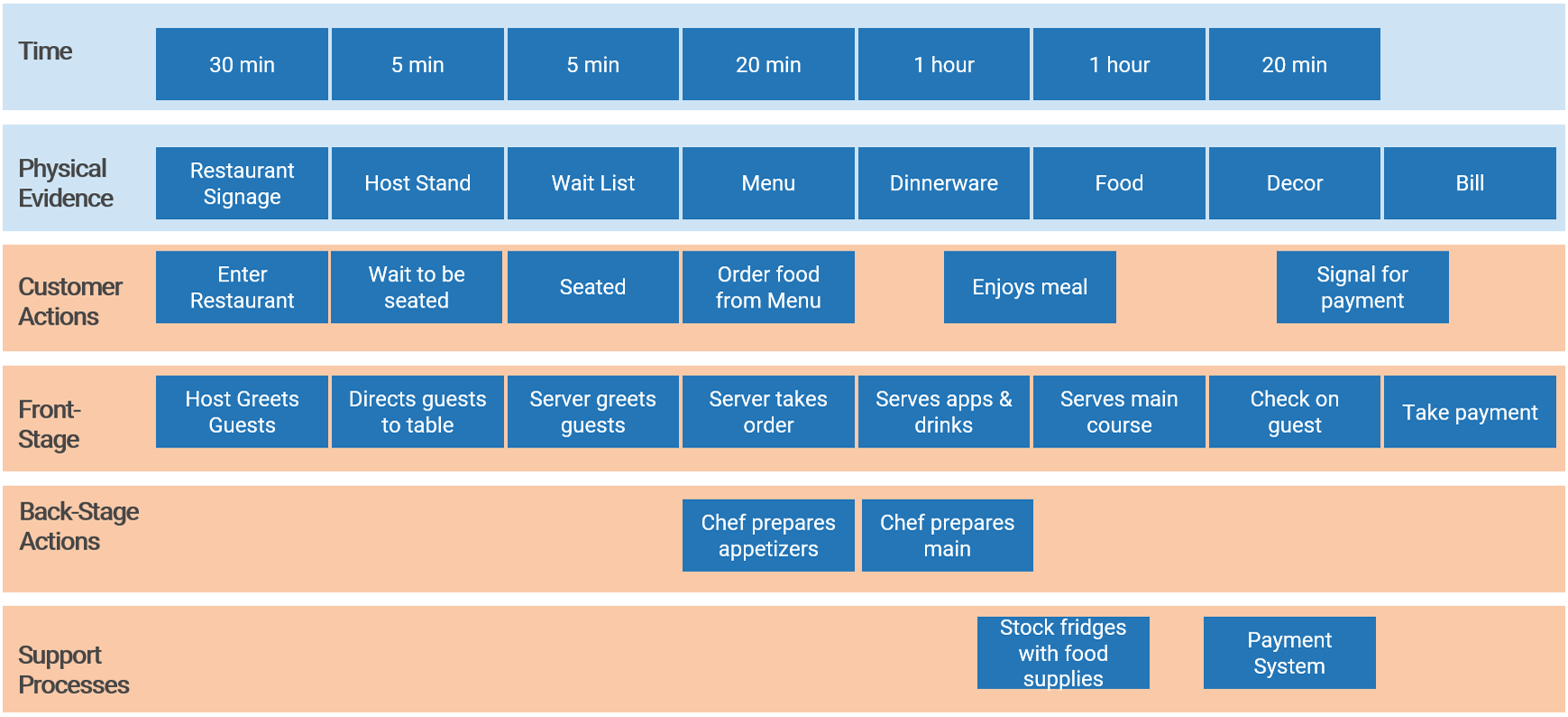

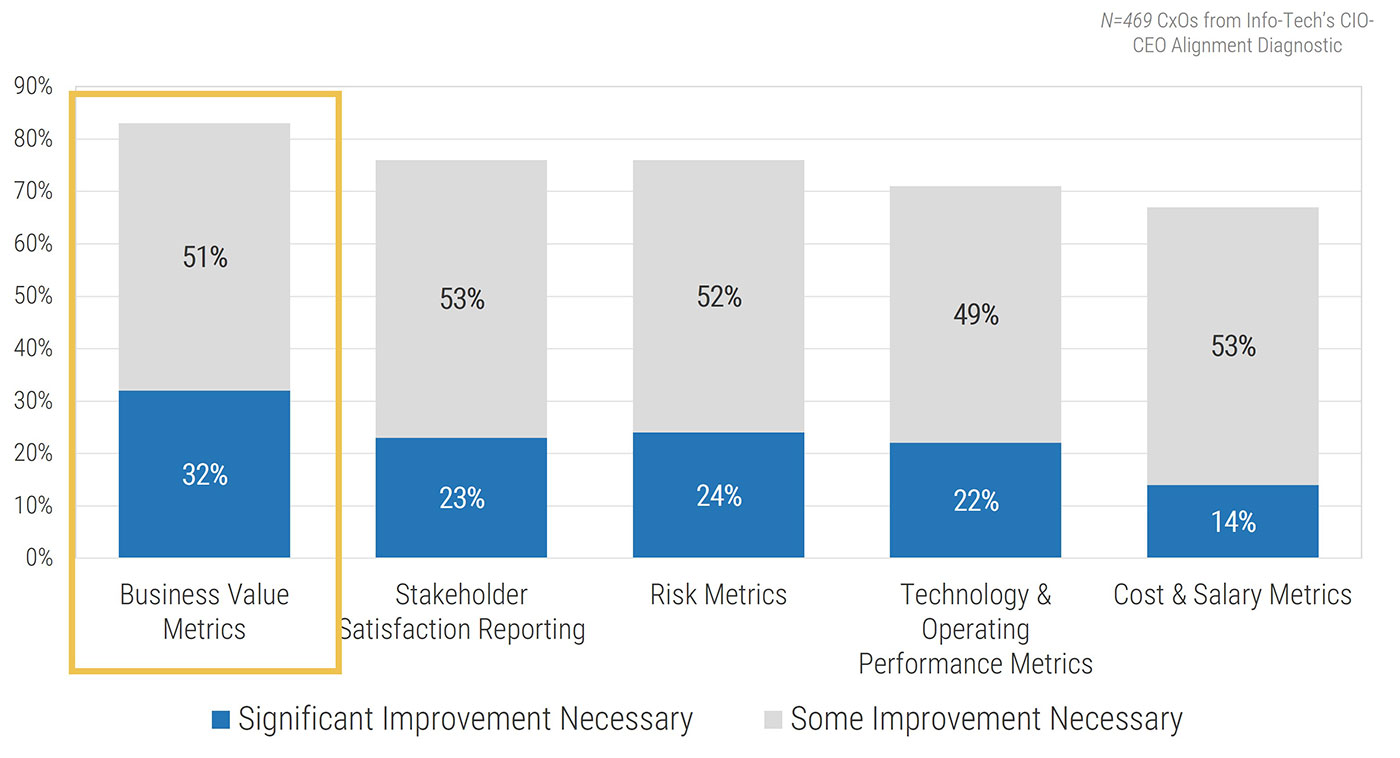

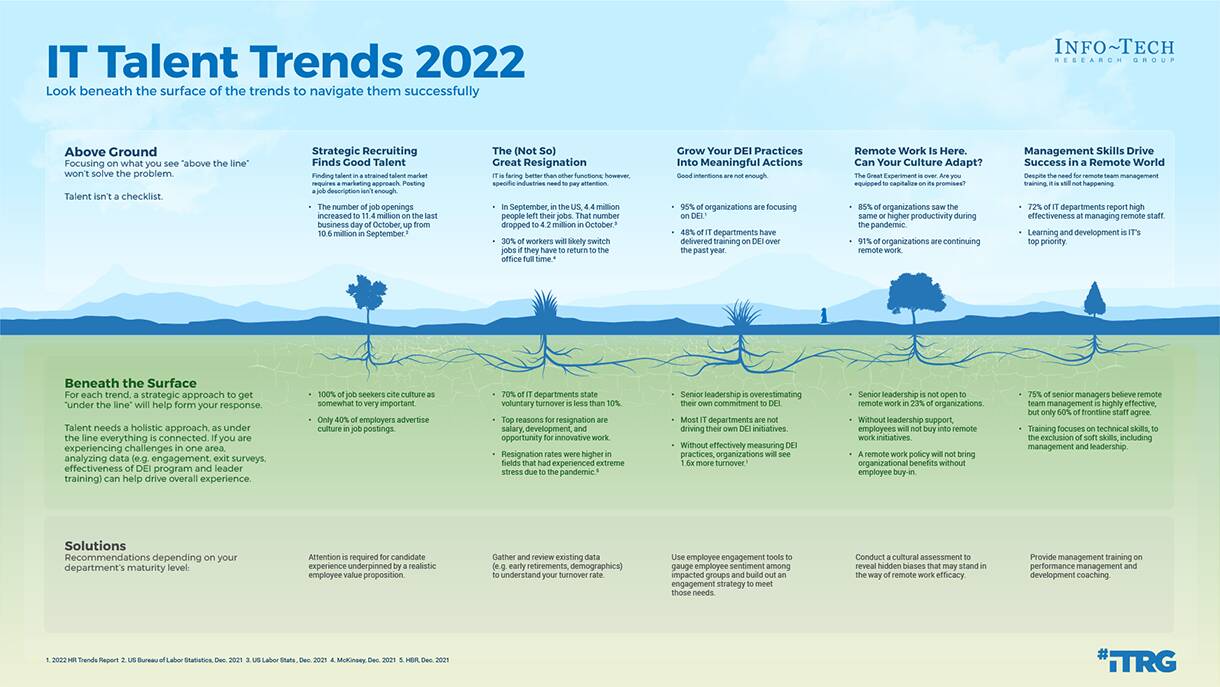

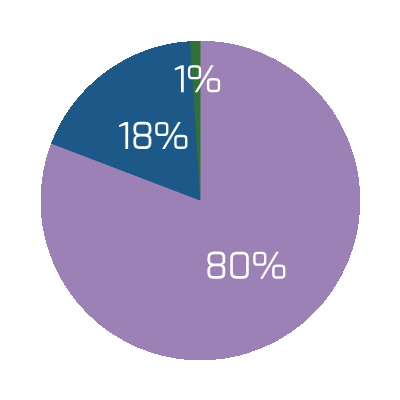

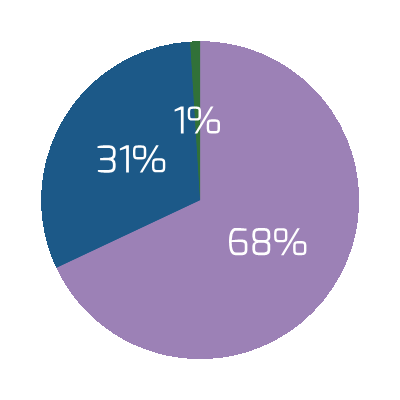

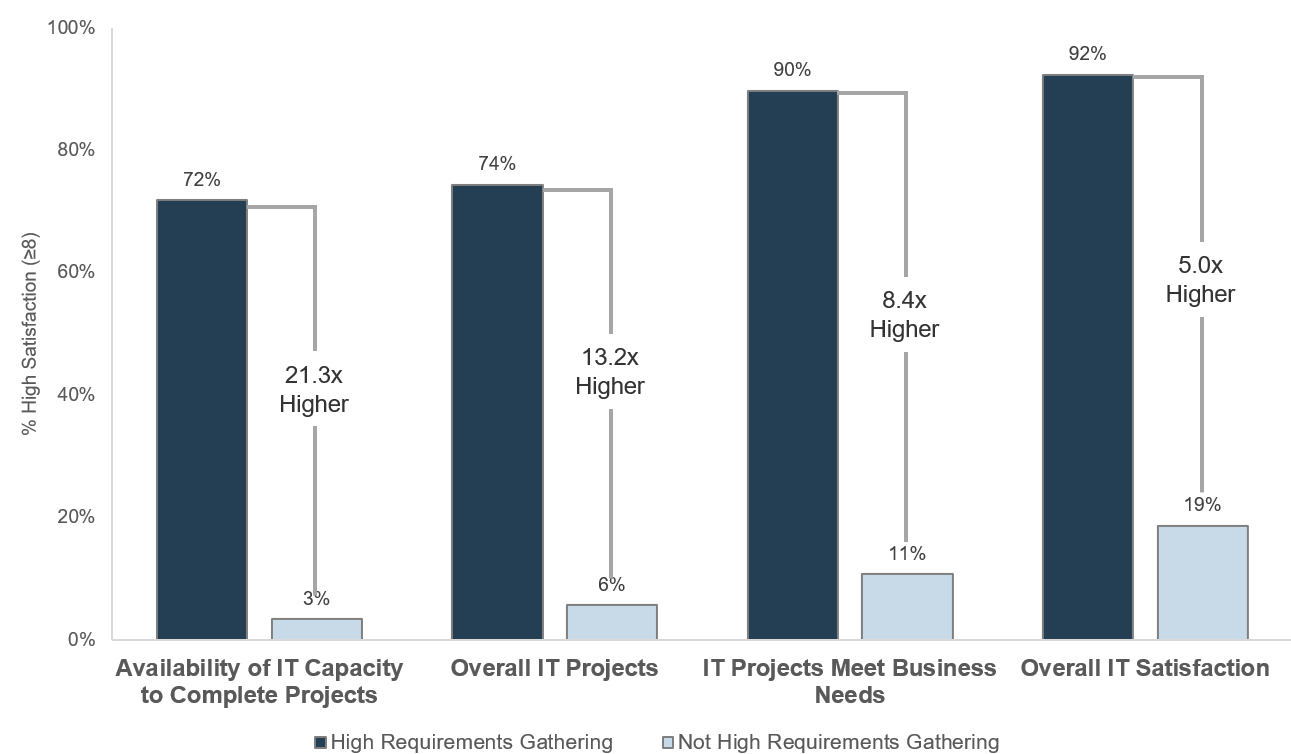

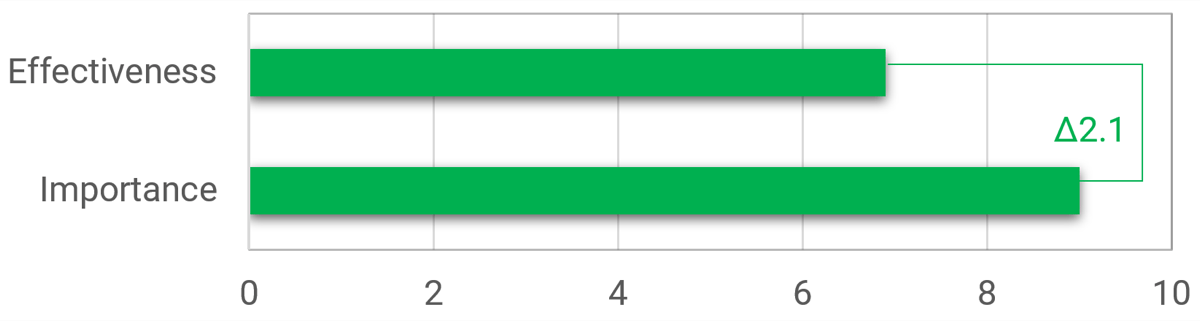

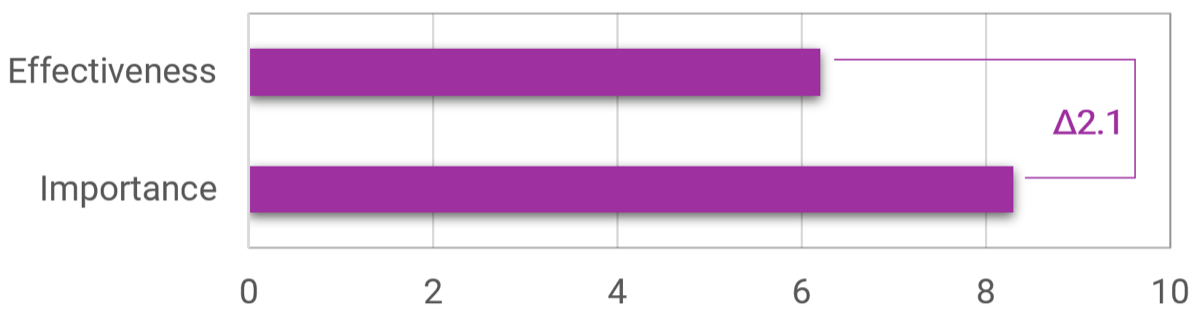

Strong stakeholder satisfaction with requirements gathering results in higher satisfaction in other areas

Organizations that had high satisfaction with requirements gathering were more likely to be highly satisfied with the other areas of IT. In fact, 72% of organizations that had high satisfaction with requirements gathering were also highly satisfied with the availability of IT capacity to complete projects.

Note: High satisfaction was defined as a score greater than or equal to 8. Every organization that scored below 8 on the area questions was classified as not high satisfaction.

N=395 organizations from Info-Tech’s CIO Business Vision diagnostic
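The classification rule in the note can be expressed as a short calculation. This is a minimal sketch under stated assumptions: the field names (`requirements_gathering`, `it_capacity`) and the sample scores are invented for illustration and are not data from the CIO Business Vision diagnostic.

```python
# Threshold taken from the note above: high satisfaction is a score of 8 or more.
HIGH_SATISFACTION_THRESHOLD = 8


def is_high(score):
    """Classify a satisfaction score as high (True) or not high (False)."""
    return score >= HIGH_SATISFACTION_THRESHOLD


def share_also_high(orgs, area):
    """Among organizations with high requirements gathering satisfaction,
    return the fraction that are also highly satisfied in `area`."""
    high_rg = [o for o in orgs if is_high(o["requirements_gathering"])]
    if not high_rg:
        return None
    return sum(is_high(o[area]) for o in high_rg) / len(high_rg)


# Made-up scores for four organizations, for illustration only.
orgs = [
    {"requirements_gathering": 9, "it_capacity": 8},
    {"requirements_gathering": 8, "it_capacity": 6},
    {"requirements_gathering": 5, "it_capacity": 9},
    {"requirements_gathering": 8.5, "it_capacity": 9},
]
share = share_also_high(orgs, "it_capacity")
print(f"{share:.0%}")
```

The 72% figure quoted above is a share of this kind: the denominator is only the organizations with high requirements gathering satisfaction, not all 395 respondents.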

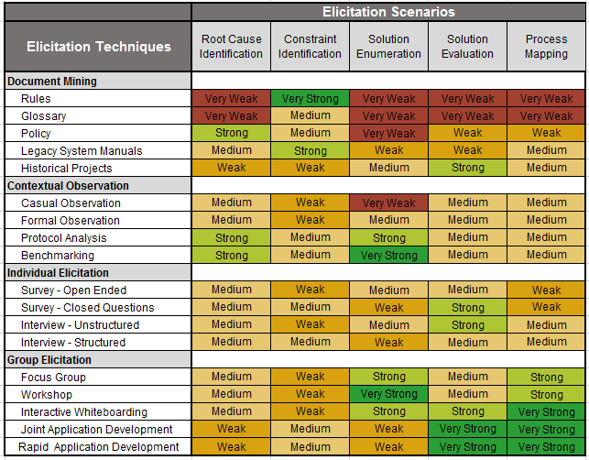

Requirements gathering efforts are filled with challenges; review these pitfalls to avoid in your optimization efforts

The challenges that afflict requirements gathering are multifaceted and often systemic in nature. There isn’t a single cure that will fix all of your requirements gathering problems, but an awareness of frequently encountered challenges will give you a basis for where to consider establishing better SOPs. Commonly encountered challenges include:

Process Challenges

- Requirements may be poorly documented, or not documented at all.

- Elicitation methods may be inappropriate (e.g. using a survey when collaborative whiteboarding is needed).

- Elicitation methods may be poorly executed.

- IT and business units may not be communicating requirements in the same terms/language.

- Requirements that conflict with one another may not be identified during analysis.

- Requirements cannot be traced from origin to testing.

Stakeholder Challenges

- Stakeholders may be unaware of the requirements needed for the ideal solution.

- Stakeholders may have difficulty properly articulating their desired requirements.

- Stakeholders may have difficulty gaining consensus on the ideal solution.

- Relevant stakeholders may not be consulted on requirements.

- Sign-off may not be received from the proper stakeholders.

70% of projects fail due to poor requirements. (Info-Tech Research Group)

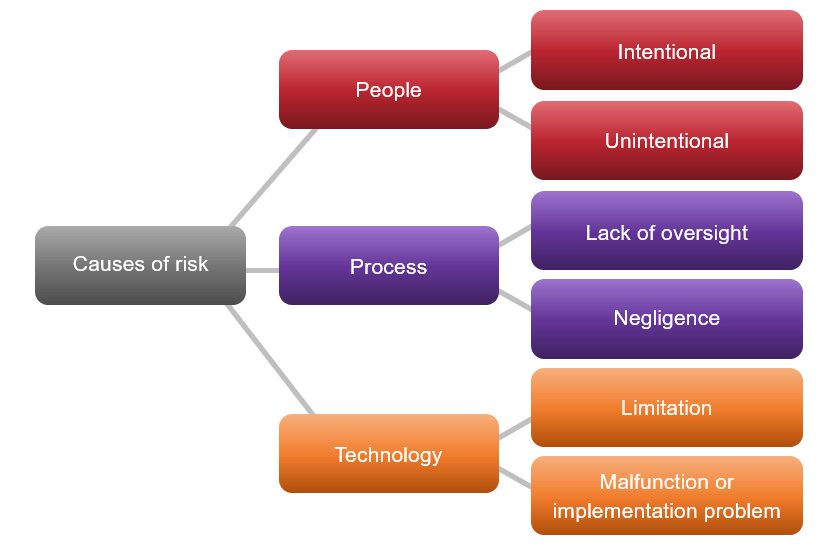

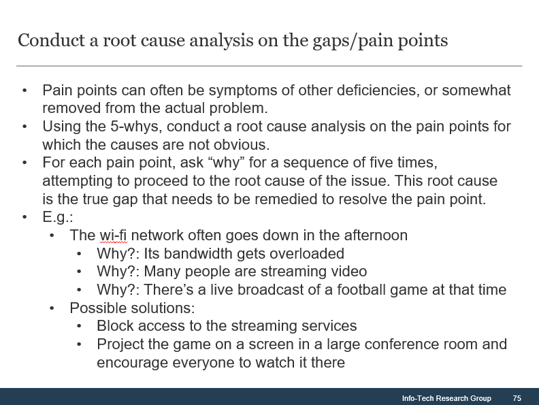

Address the root cause of poor requirements to increase project success

Root Causes of Poor Requirements Gathering:

- Requirements gathering procedures don’t exist.

- Requirements gathering procedures exist but aren’t followed.

- There isn't enough time allocated to the requirements gathering phase.

- There isn't enough involvement or investment secured from business partners.

- There is no senior leadership involvement or mandate to fix requirements gathering.

- There are inadequate efforts put towards obtaining and enforcing sign-off.

Outcomes of Poor Requirements Gathering:

- Rework due to poor requirements leads to costly overruns.

- Final deliverables are of poor quality.

- Final deliverables are implemented late.

- Predicted gains from deployed applications are not realized.

- There are low feature utilization rates by end users.

- There are high levels of end-user dissatisfaction.

- There are high levels of project sponsor dissatisfaction.

Info-Tech Insight

Requirements gathering is the number one failure point for most development or procurement projects that don’t deliver value. This has been and continues to be the case as most organizations still don't get requirements gathering right. Overcoming organizational cynicism can be a major obstacle when it is time to optimize the requirements gathering process.

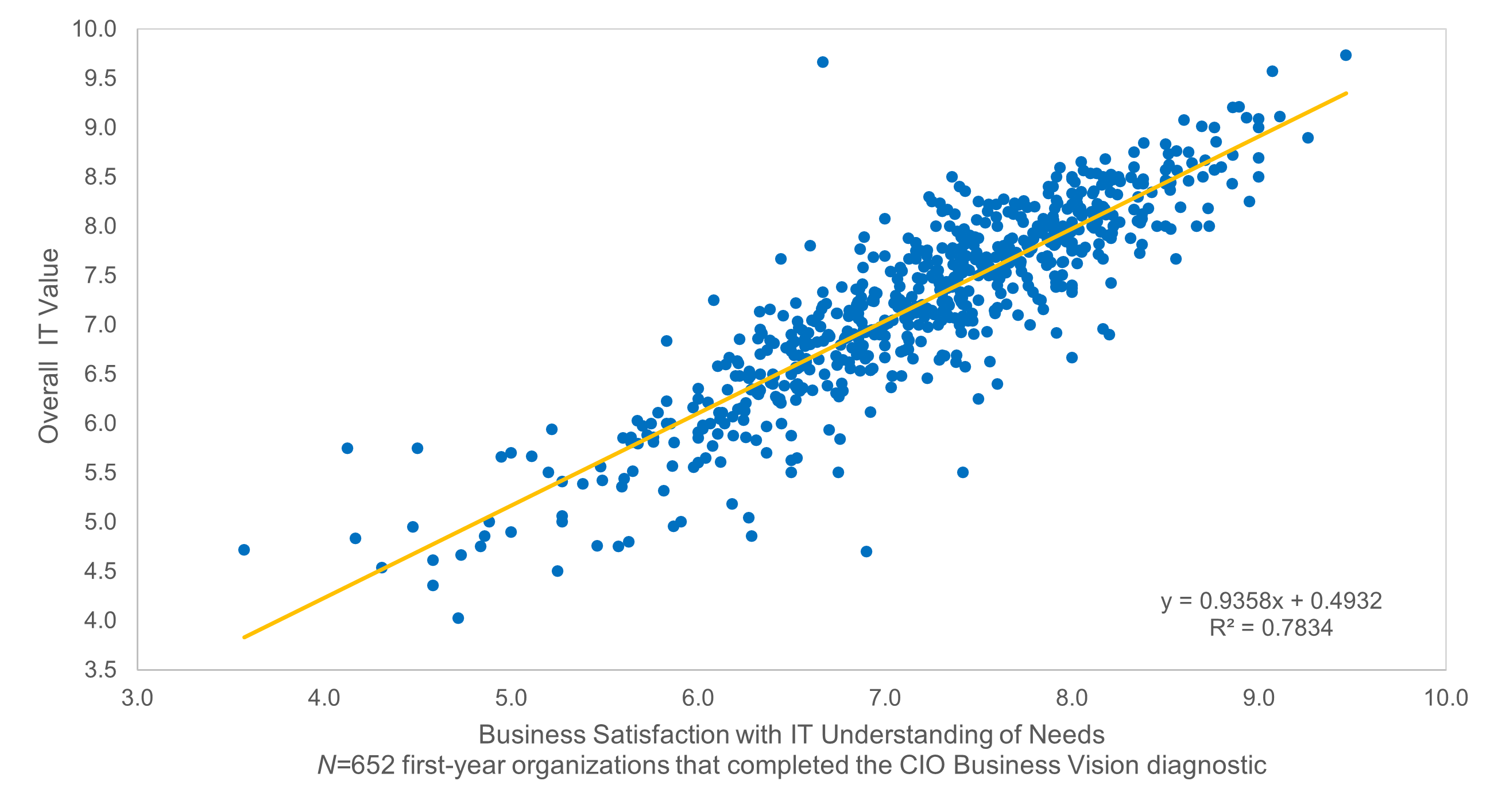

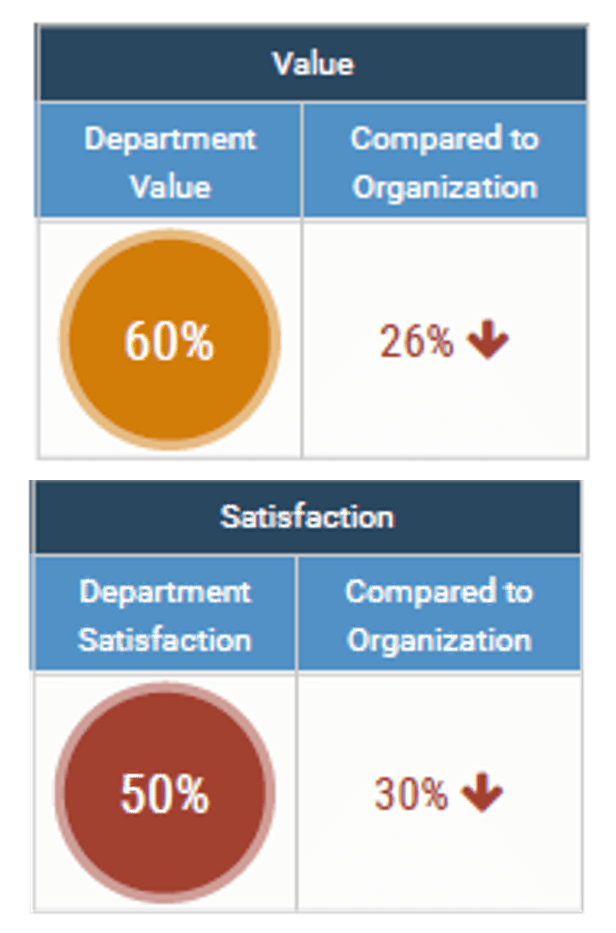

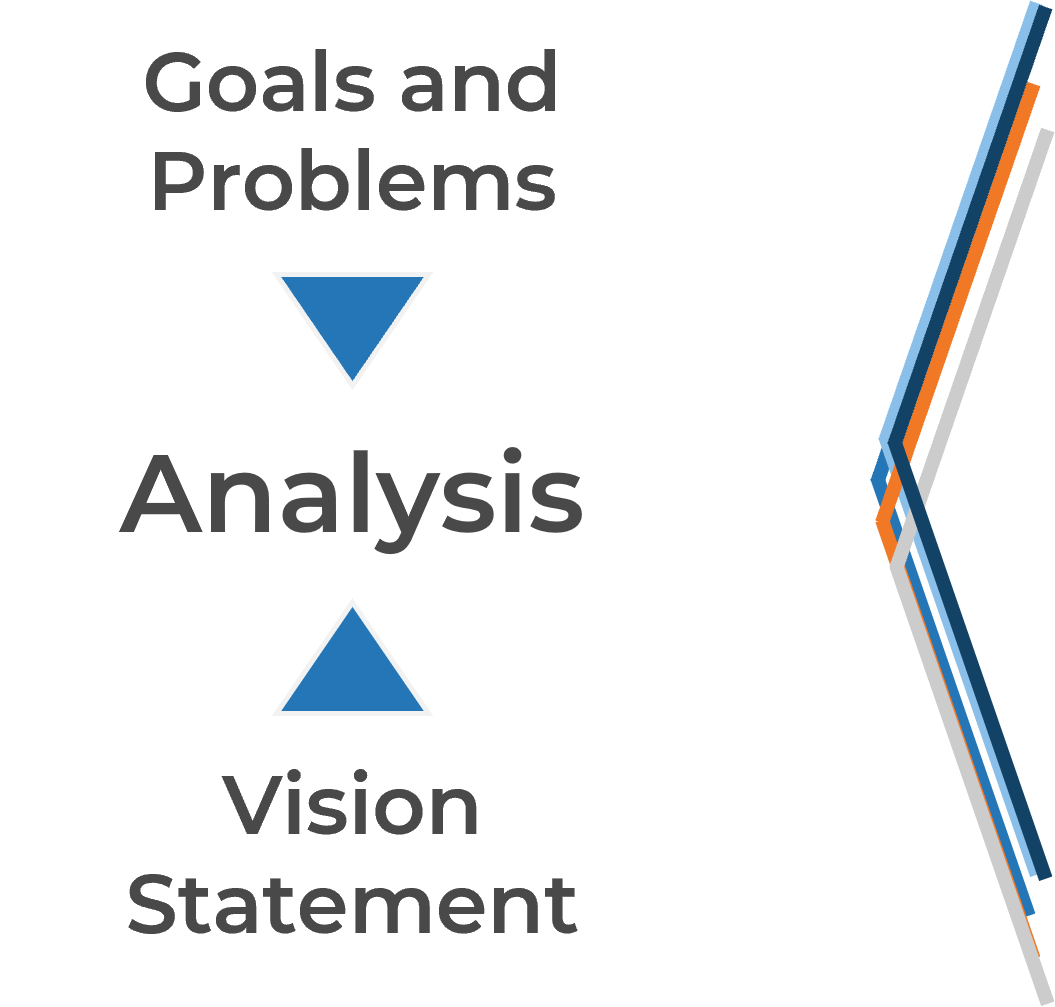

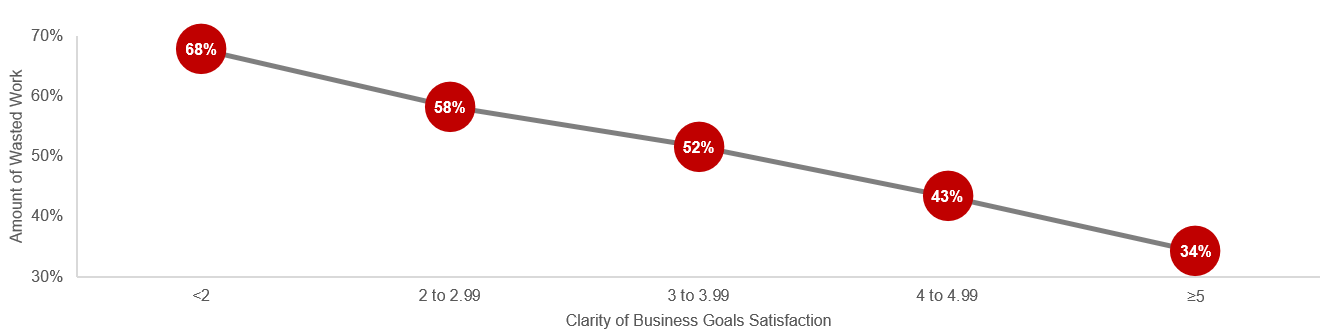

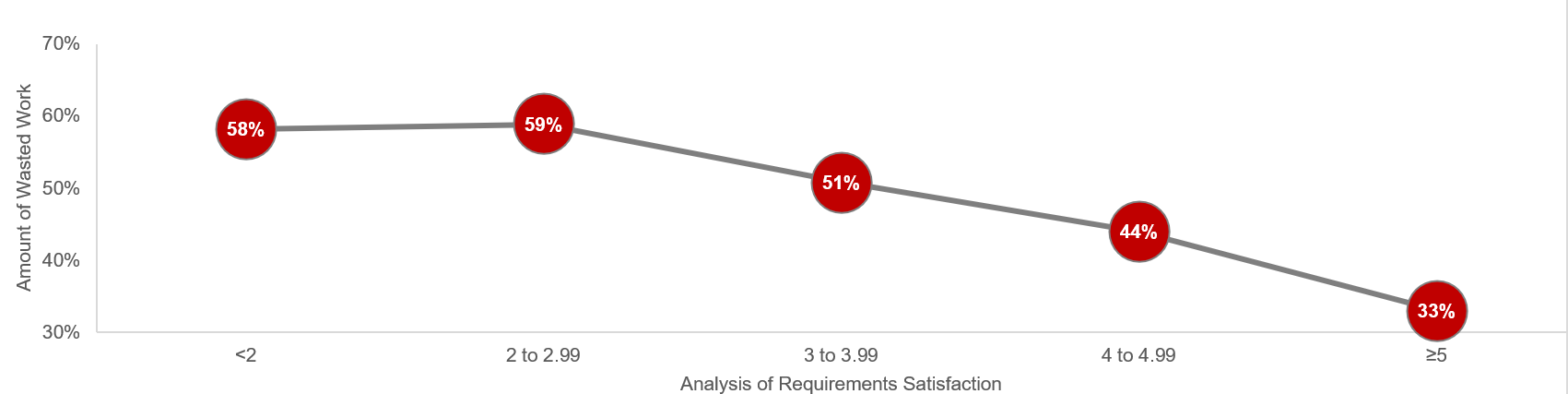

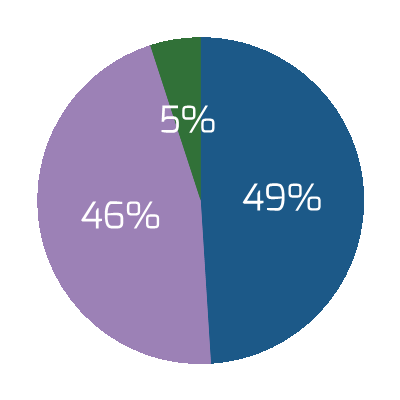

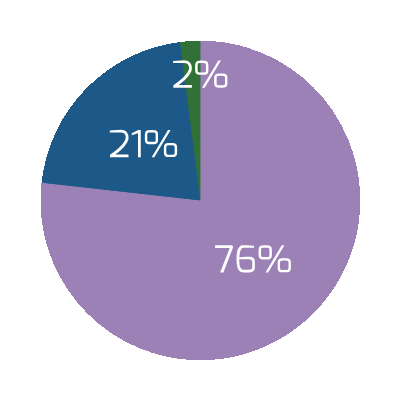

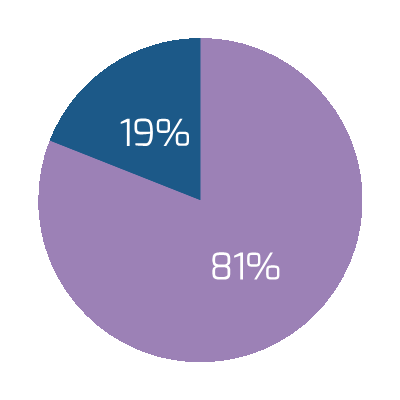

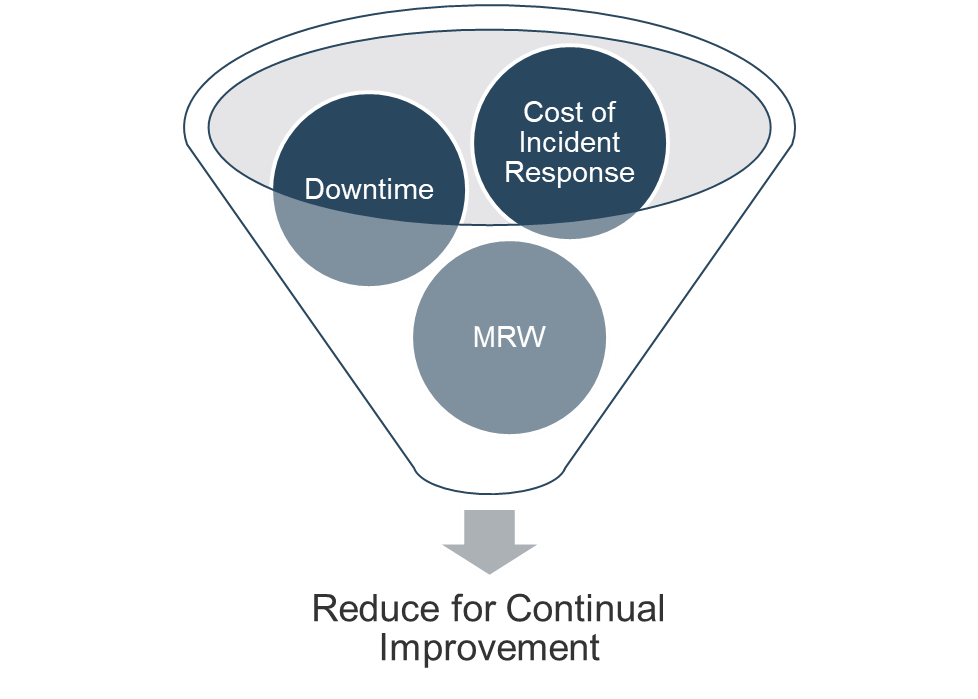

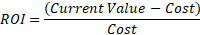

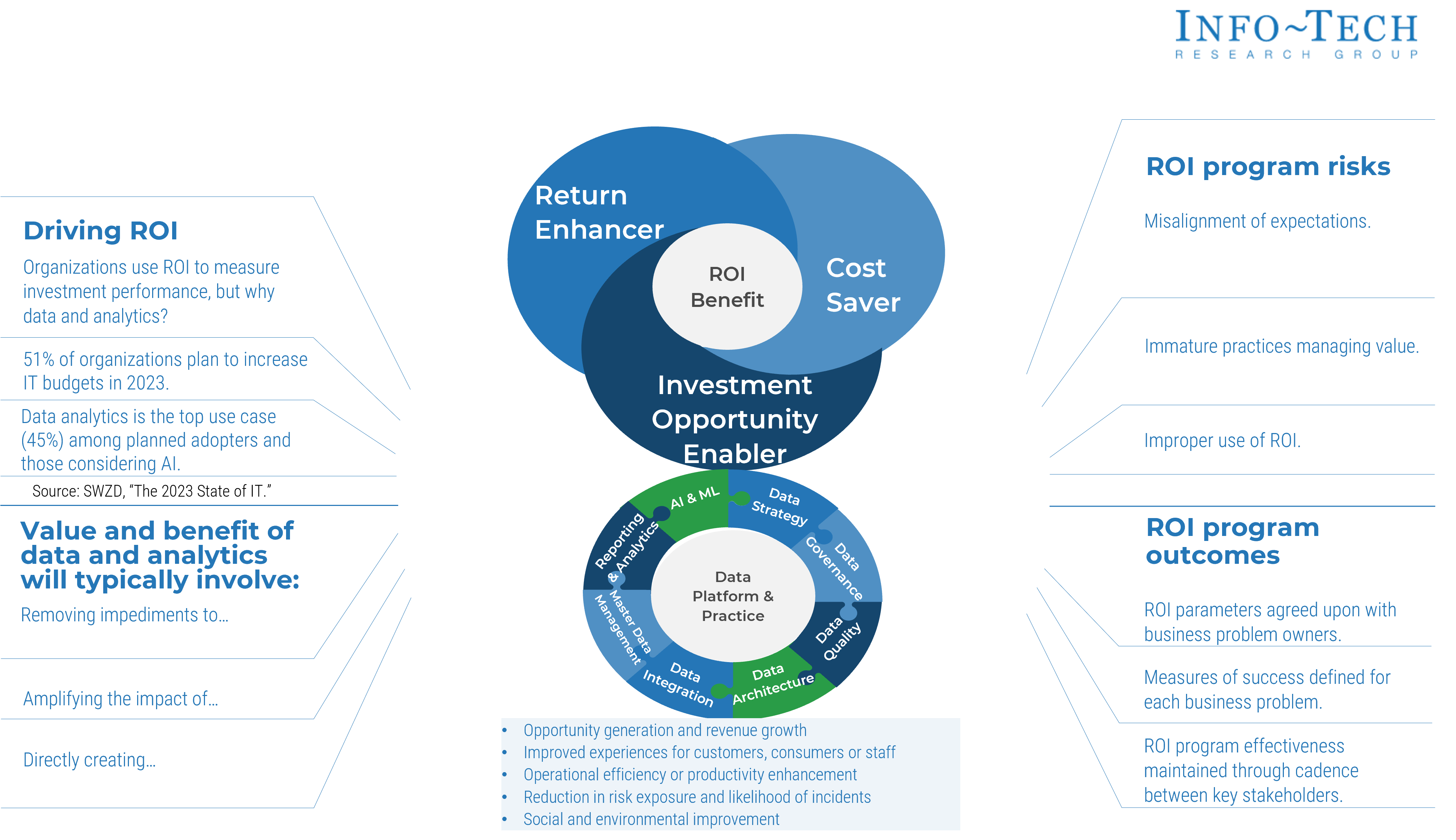

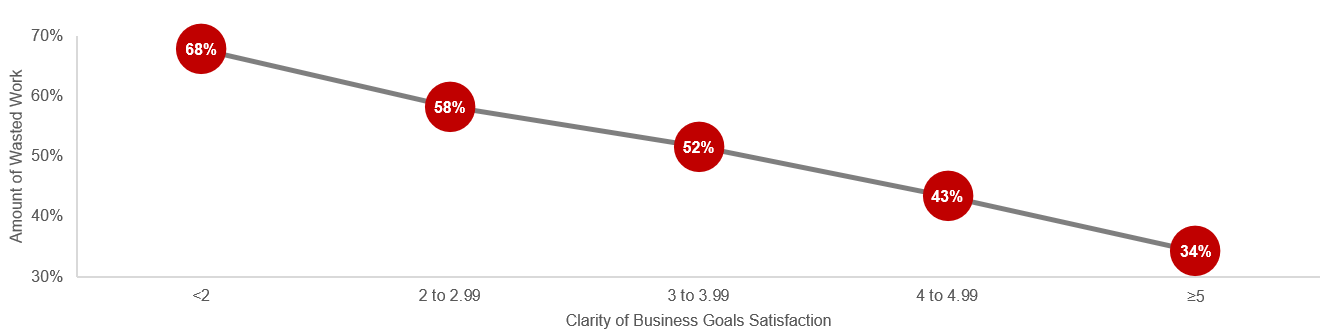

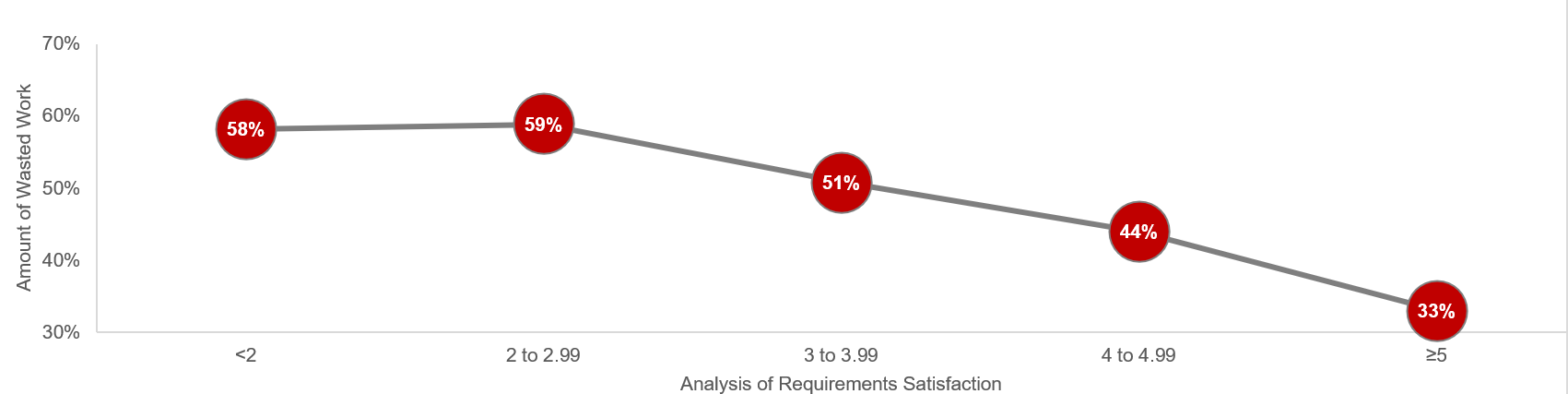

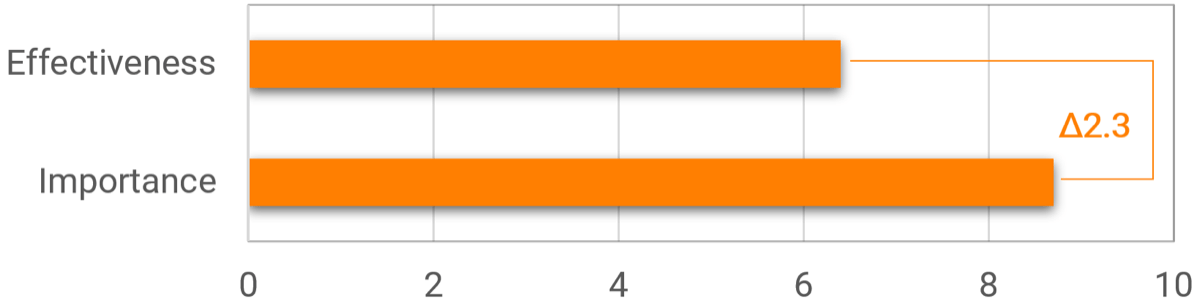

Reduce wasted project work with clarity of business goals and analysis of requirements

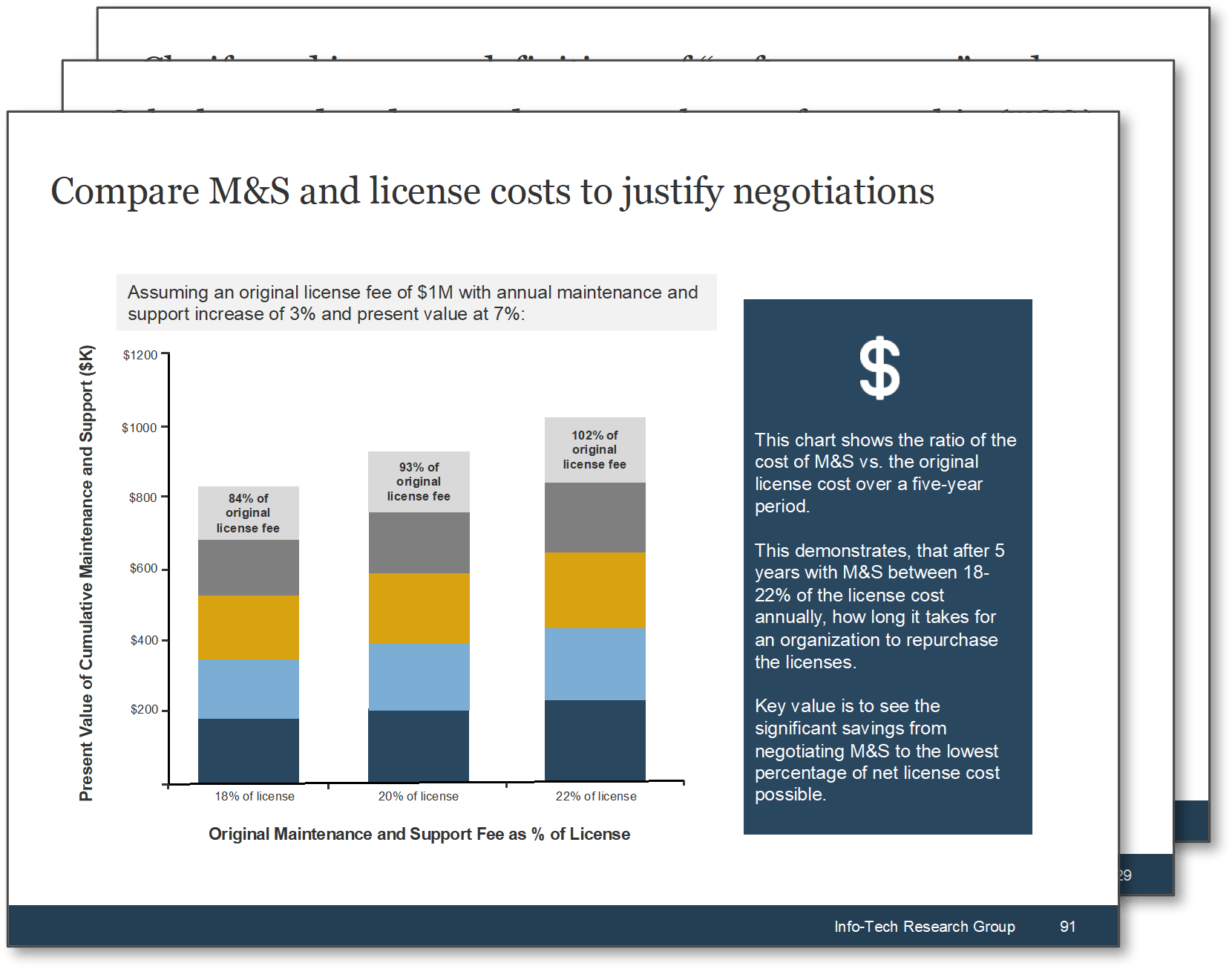

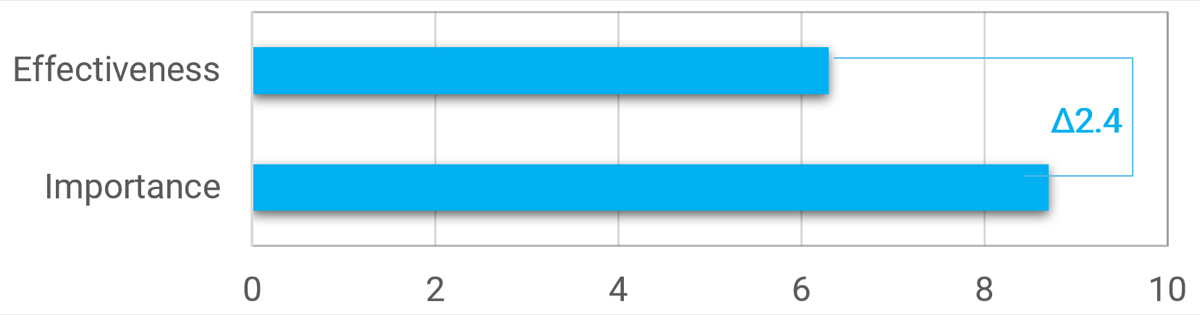

You can reduce the amount of wasted work by making sure you have clear business goals. In fact, you could see an improvement of as much as 50% by going from a low level of satisfaction with clarity of business goals (less than 2) to a high level of satisfaction (greater than or equal to 5).

Likewise, you could see an improvement of as much as 43% by going from a low level of satisfaction with analysis of requirements (less than 2) to a high level of satisfaction (greater than or equal to 5).

Note: Waste is measured by the number of cancelled projects; suboptimal assignment of resources; time spent analyzing, fixing, and redeploying; inefficiency; and unassigned resources.

N=200 teams from the Project Portfolio Management diagnostic

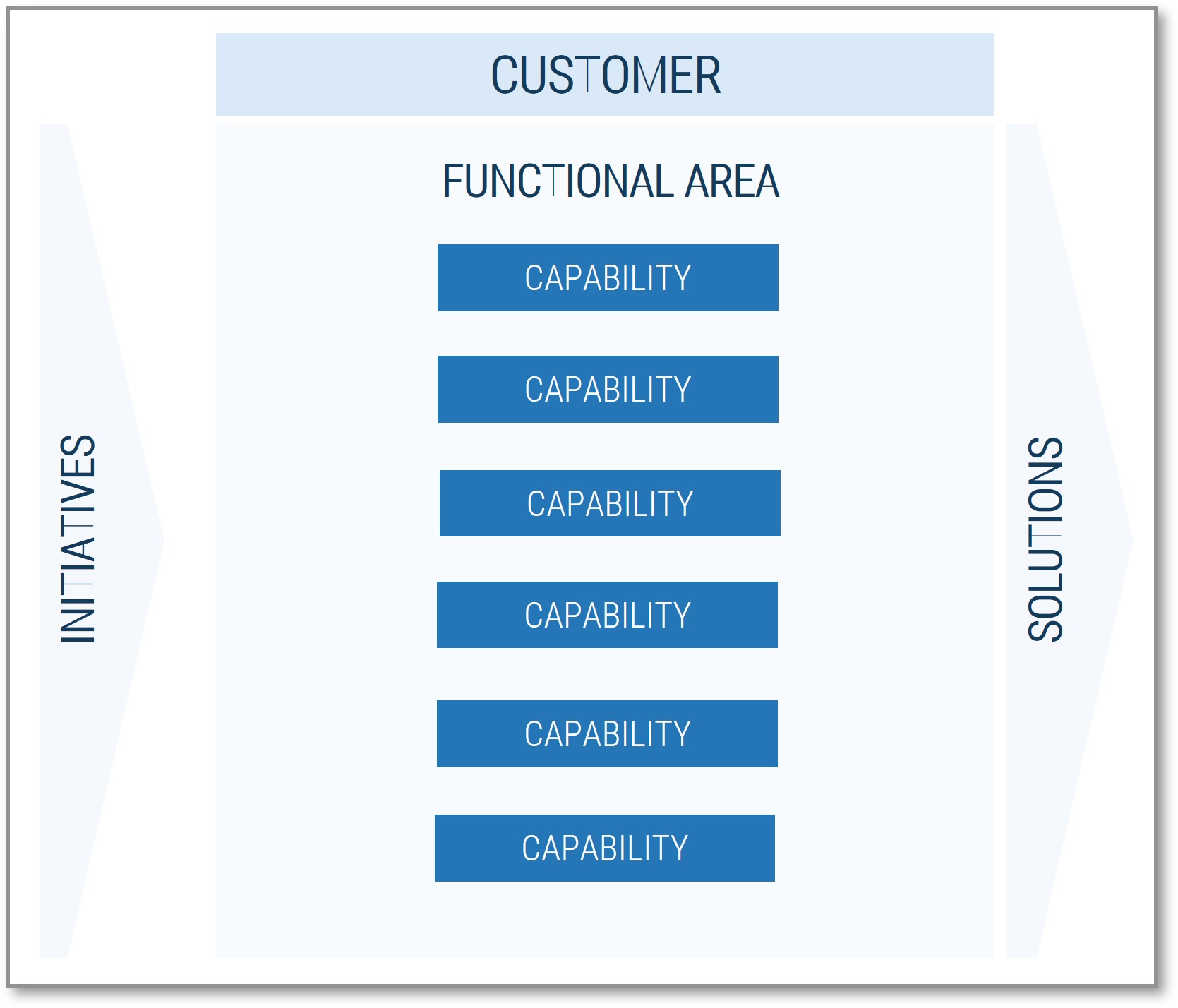

Effective requirements gathering supports other critical elements of project management success

Good intentions and hard work aren’t enough to make a project successful. As you proceed with a project, step back and assess the critical success factors. Make sure that the important inputs and critical activities of requirements gathering are supporting, not inhibiting, project success.

- Streamlined Project Intake

- Strong Stakeholder Management

- Defined Project Scope

- Effective Project Management

- Environmental Analysis

Don’t improvise: have a structured, end-to-end approach for successfully gathering useful requirements

Creating a unified SOP guide for requirements elicitation, analysis, and validation is a critical step for requirements optimization; it gives your BAs a common frame of reference for conducting requirements gathering.

- The key to requirements optimization is to establish a strong set of SOPs that provide direction on how your organization should be executing requirements gathering processes. This SOP guide should be a holistic document that walks your BAs through a requirements gathering project from beginning to end.

- An SOP that is put aside is useless; it must be well communicated to BAs. It should be treated as the veritable manifesto of requirements management in your organization.

Info-Tech Insight

Having a standardized approach to requirements management is critical, and SOPs should be the responsibility of a group. The SOP guide should cover all of the major bases of requirements management. In addition to providing a walk-through of the process, an SOP also clarifies requirements governance.

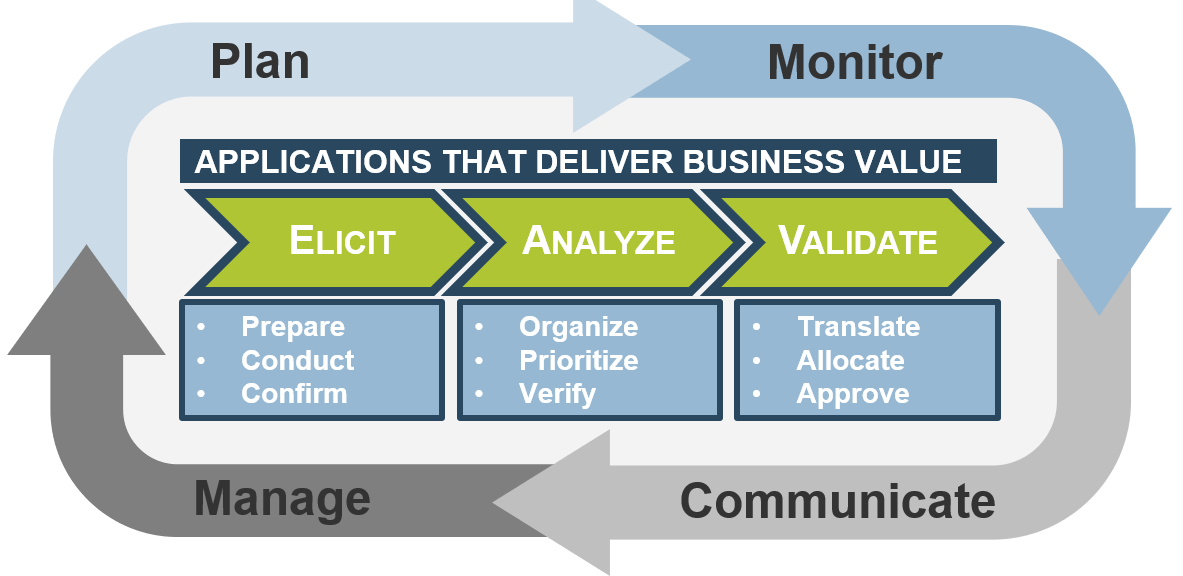

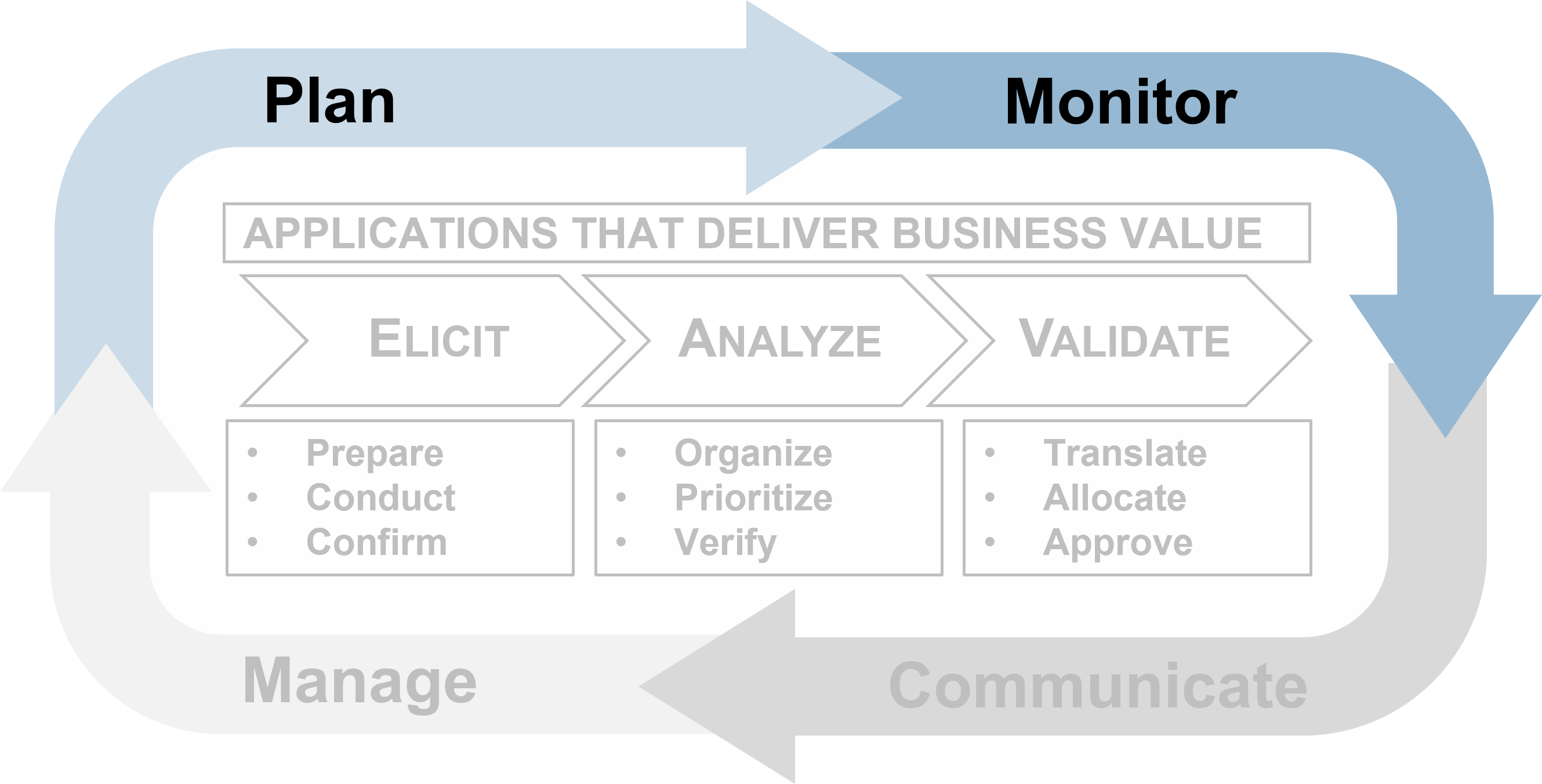

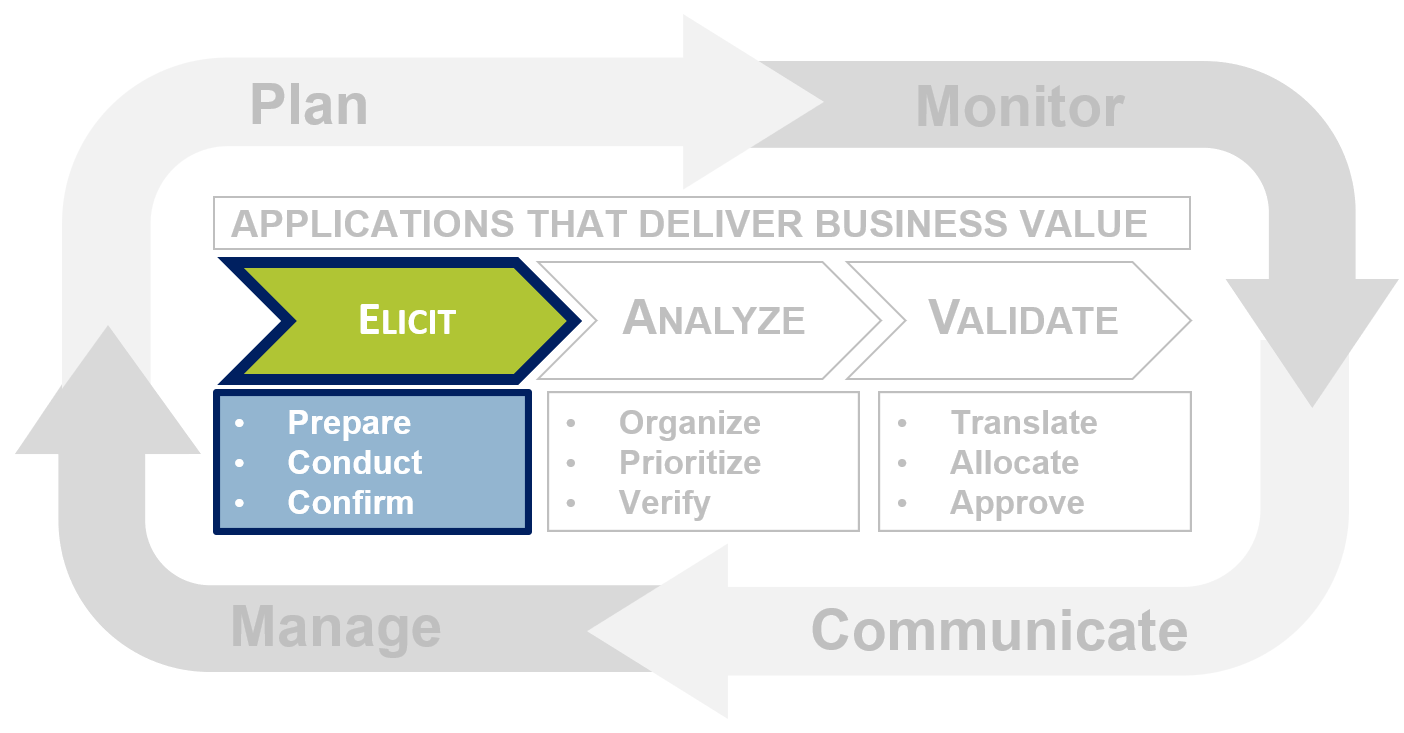

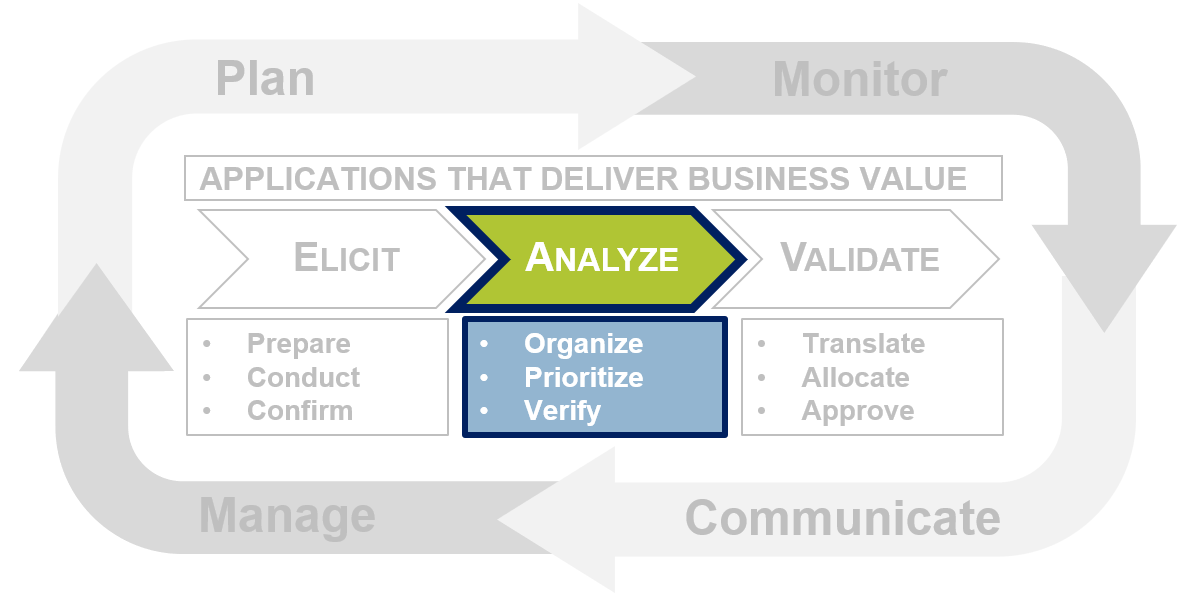

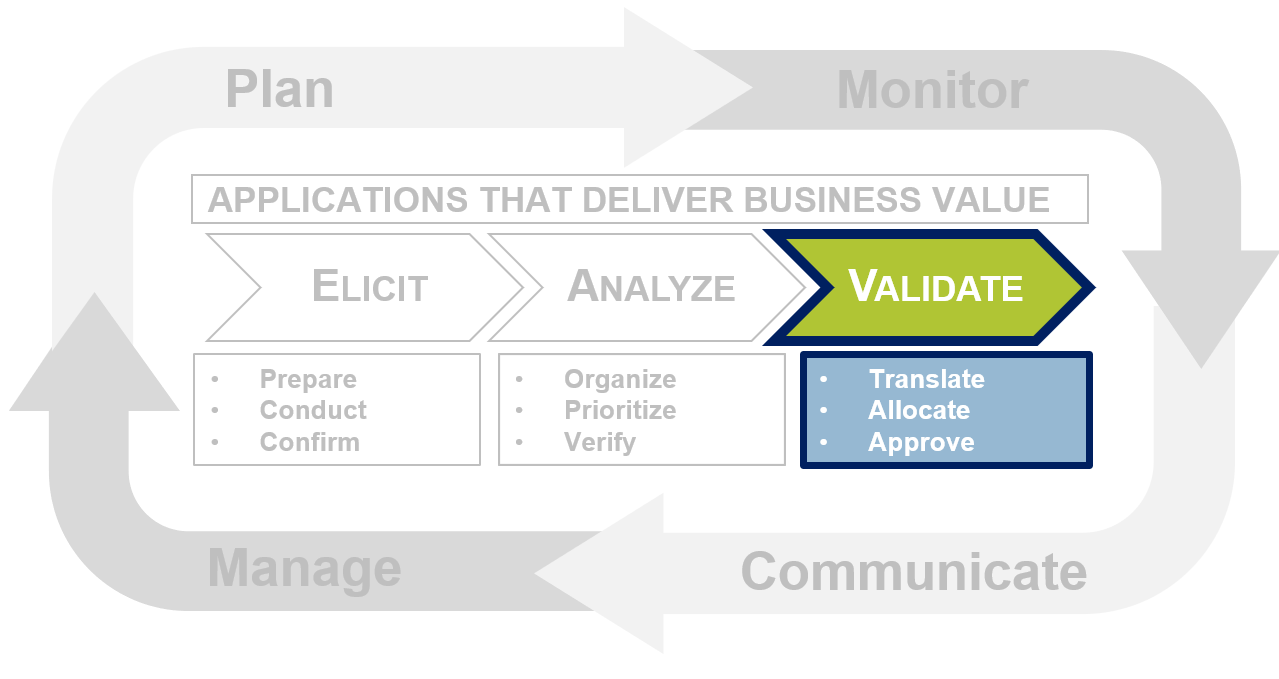

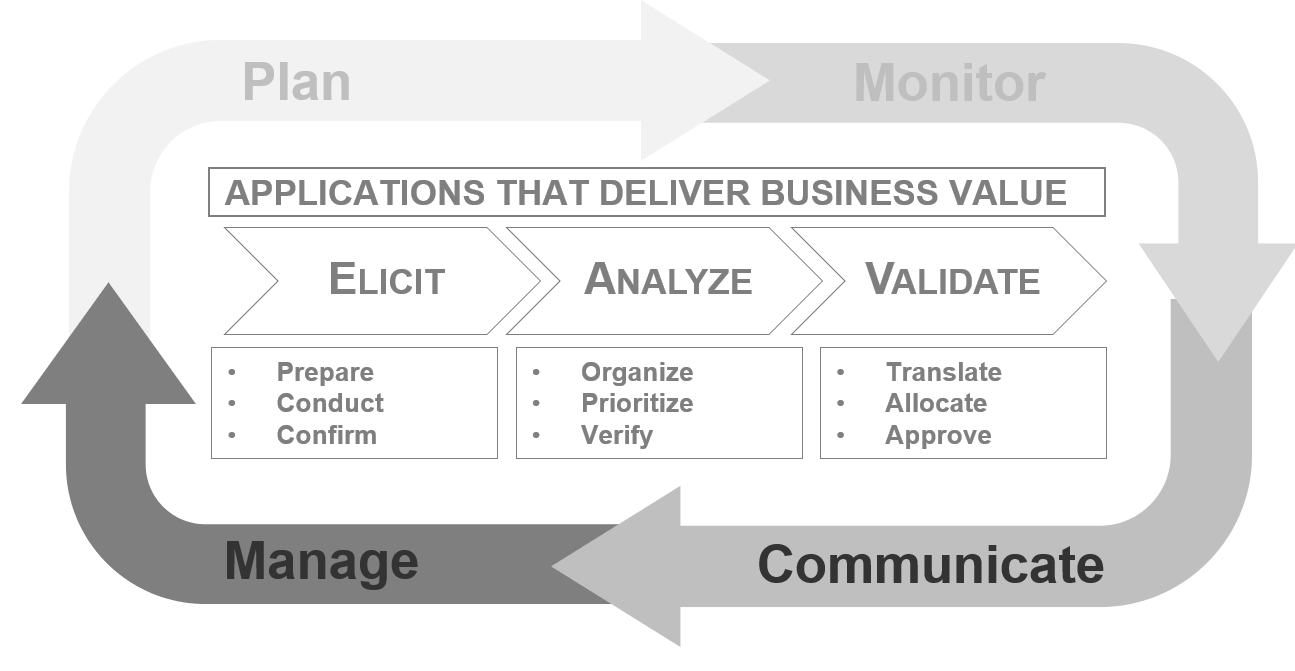

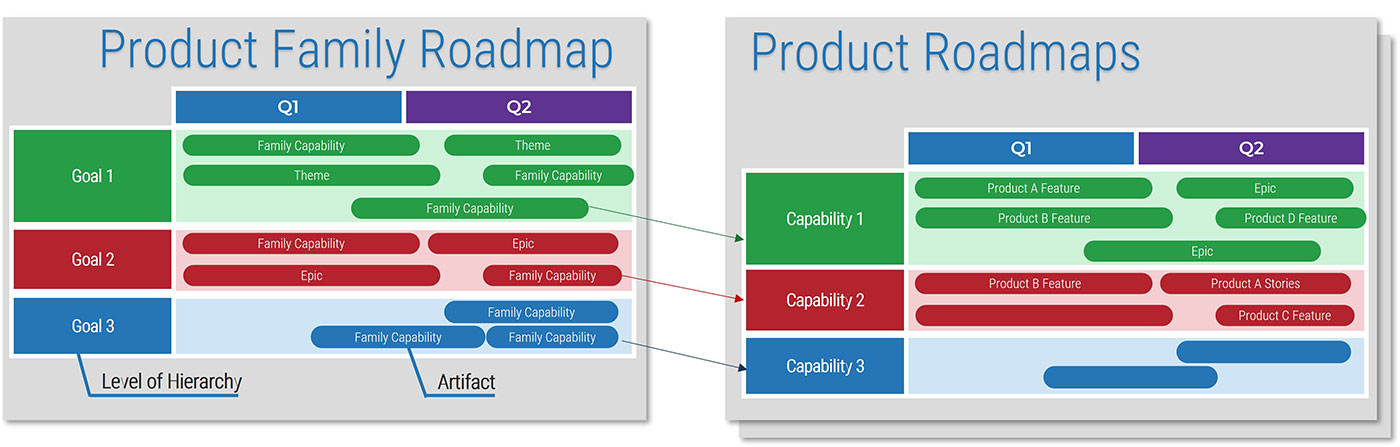

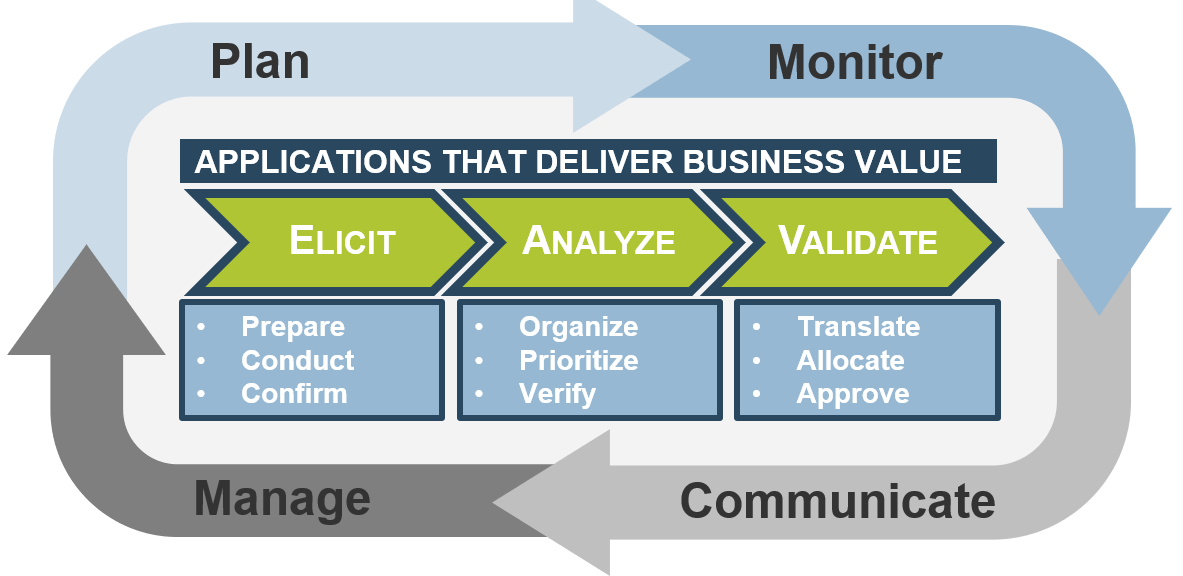

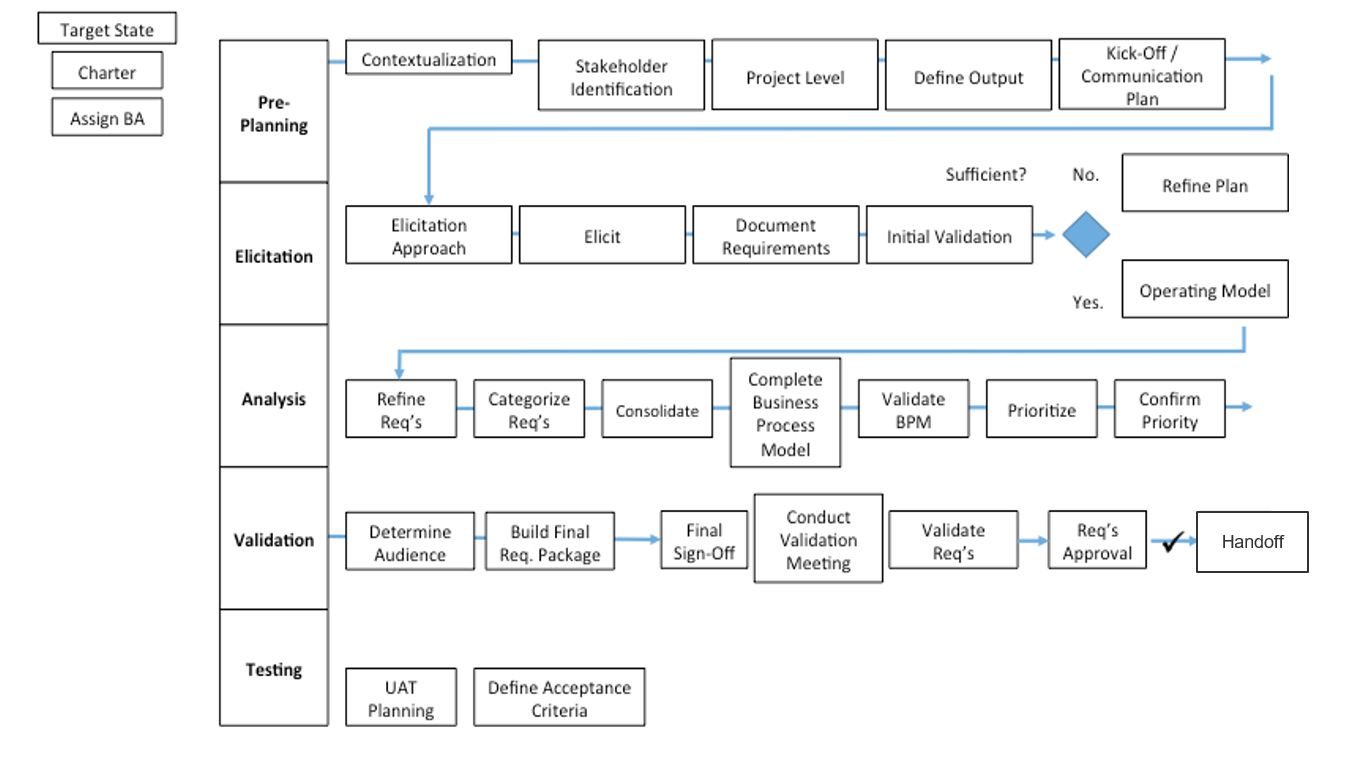

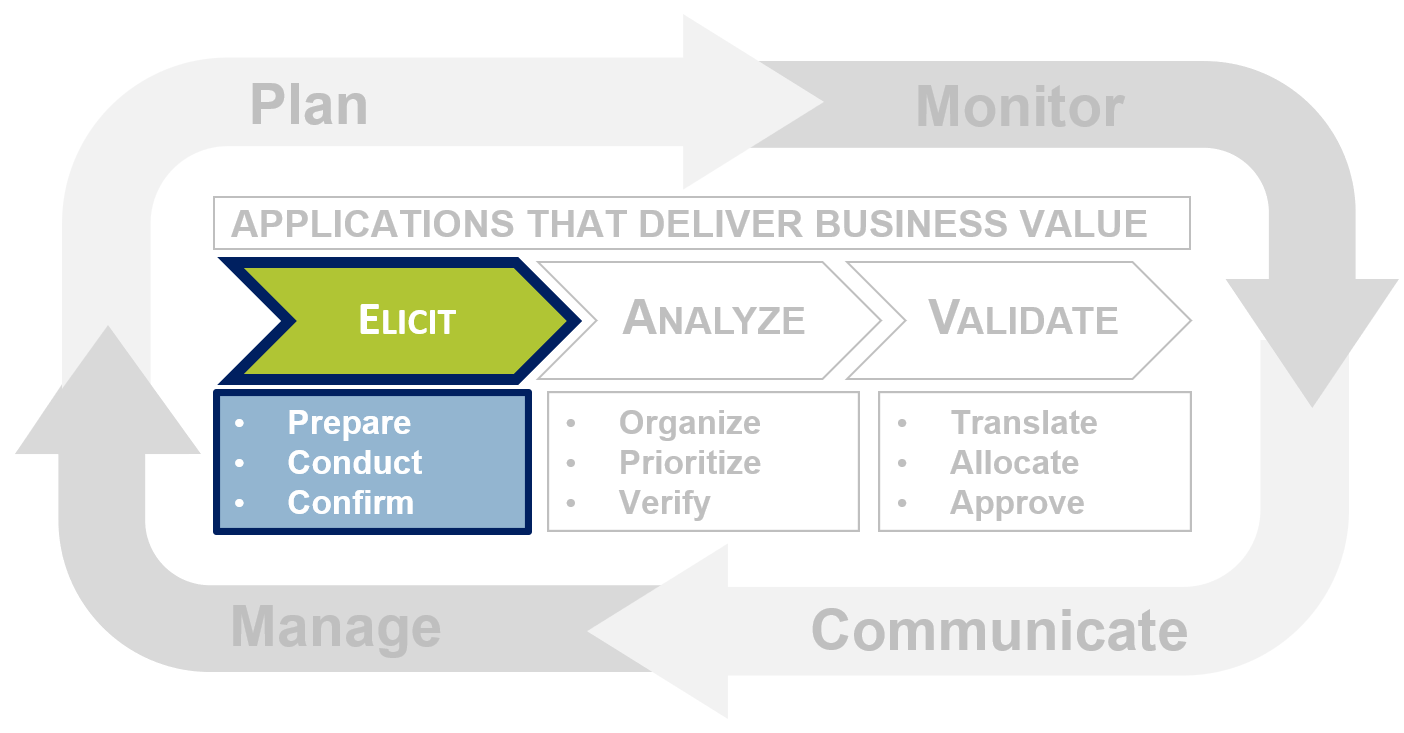

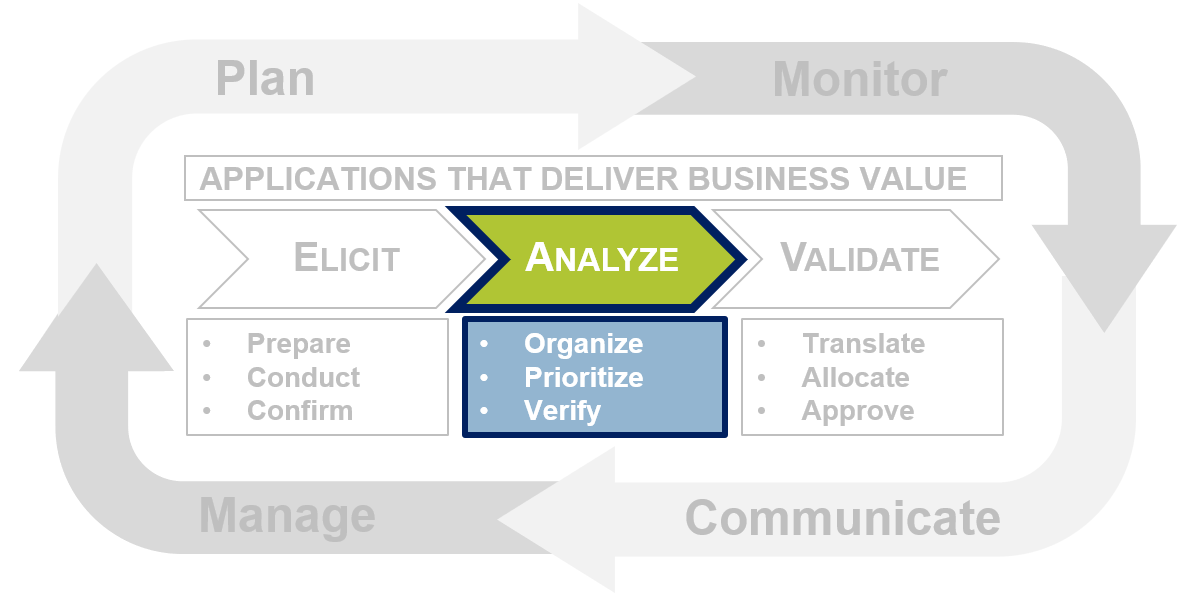

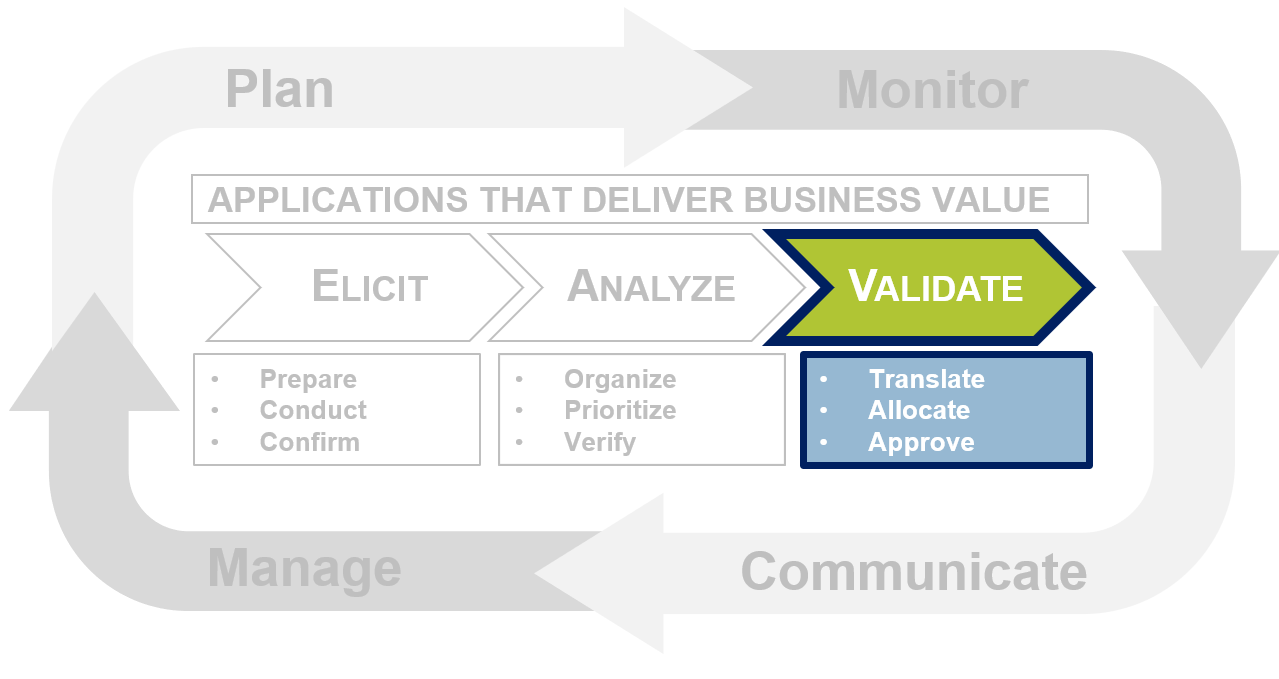

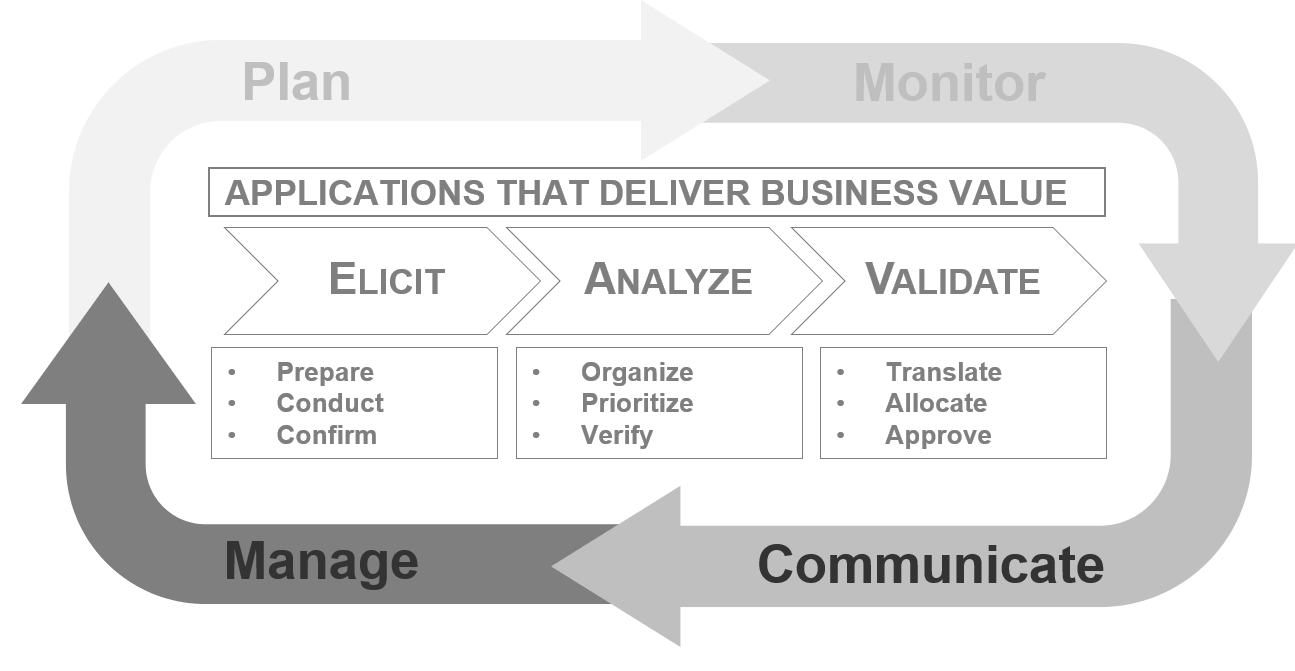

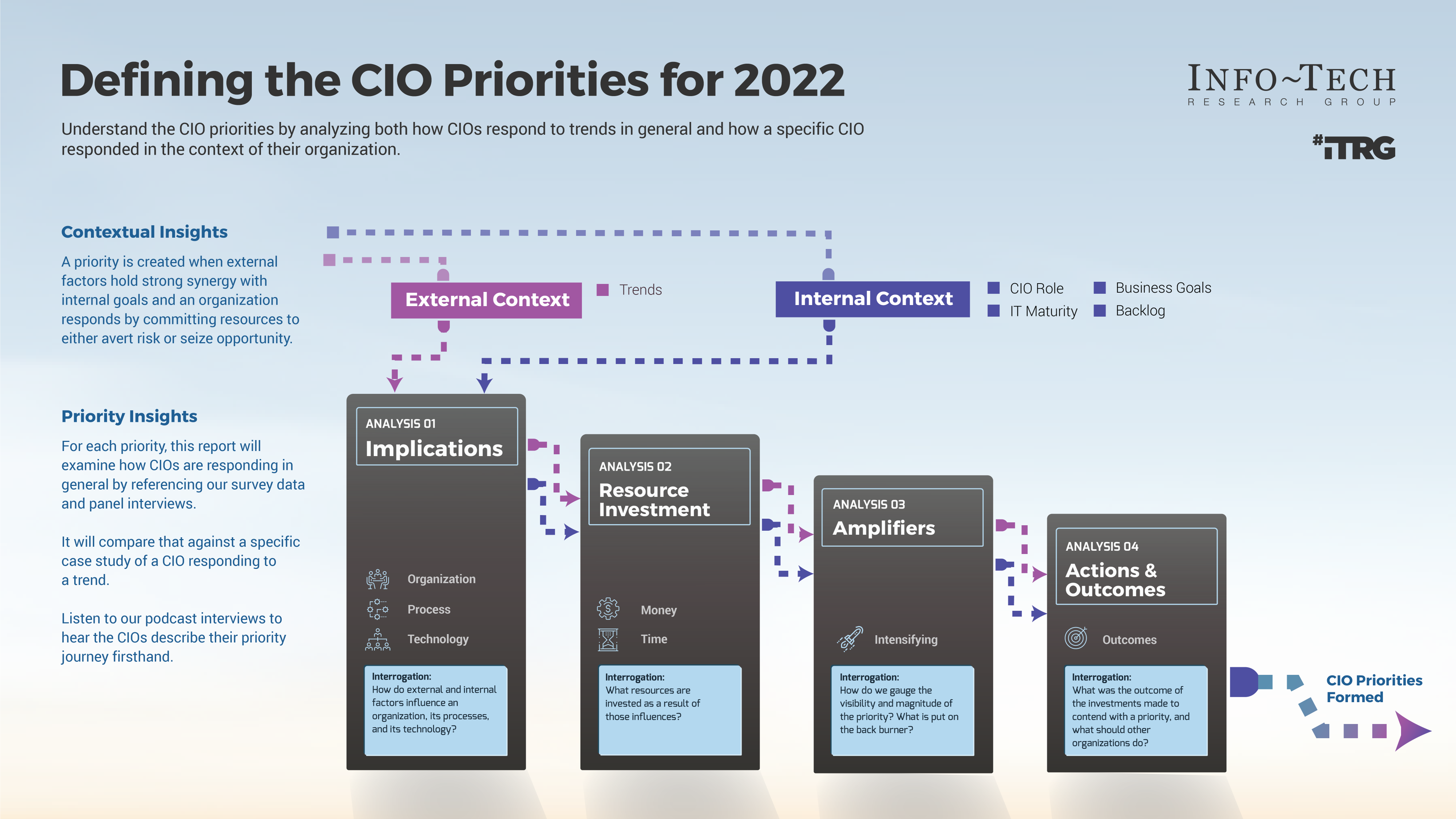

Leverage Info-Tech’s proven Requirements Gathering Framework as the basis for building requirements processes

Info-Tech’s Requirements Gathering Framework is a comprehensive approach to requirements management that can be scaled to any size of project or organization. This framework has been extensively road-tested with our clients to ensure that it balances the needs of IT and business stakeholders to give a holistic, end-to-end approach for requirements gathering. It covers the foundational issues (elicitation, analysis, and validation) and prescribes techniques for planning, monitoring, communicating, and managing the requirements gathering process.

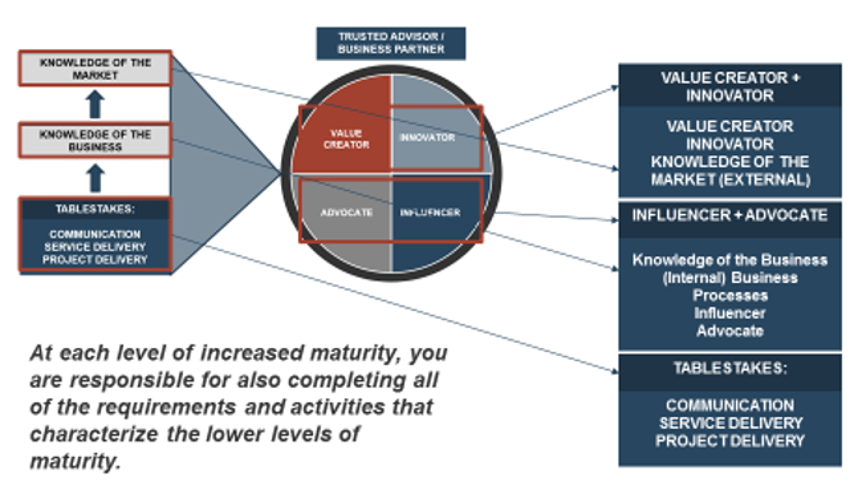

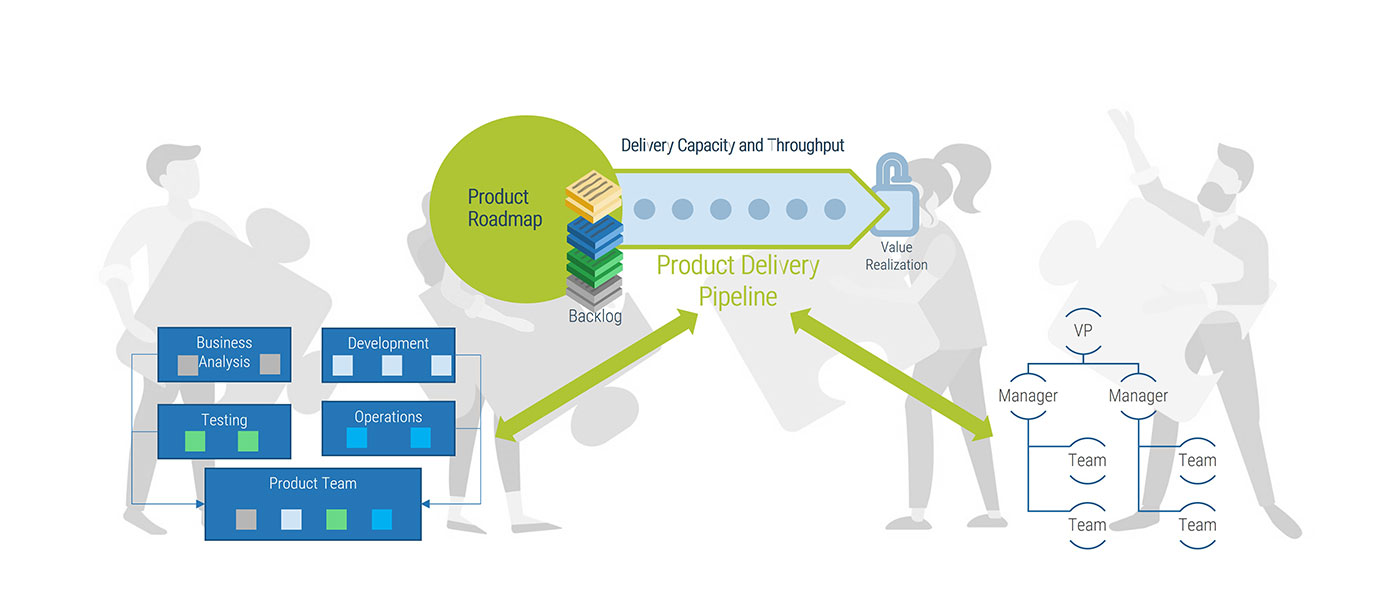

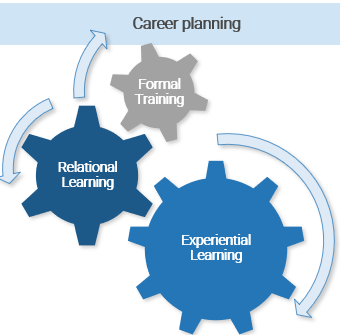

Don’t forget resourcing: the best requirements gathering process will still fail if you don’t develop BA competencies

When creating the process for requirements gathering, think about how it will be executed by your BAs, and what the composition of your BA team should look like. A strong BA needs to serve as an effective translator, being able to speak the language of both the business and IT.

- To ensure alignment of your BAs to the requirements gathering process, undertake a formal skills assessment to identify areas where analysts are strong, and areas that should be targeted for training and skills development.

- Training of BAs on the requirements gathering process and development of intimate familiarity with SOPs is essential; you need to get BAs on the same page to ensure consistency and repeatability of the requirements process.

- Consider implementing a formal mentorship and/or job shadowing program between senior and junior BAs. Many of our members report that leveraging senior BAs to bootstrap the competencies of more junior team members is a proven approach to building skillsets for requirements gathering.

What are some core competencies of a good BA?

- Strong stakeholder management.

- Proven track record in facilitating elicitation sessions.

- Ability to bridge the gulf between IT and the business by speaking both languages.

- Ability to ask relevant probing questions to uncover latent needs.

- Experience with creating project operating models and business process diagrams.

- Ability to set and manage expectations throughout the process.

Throughout this blueprint, look for the “BA Insight” box to learn how steps in the requirements gathering process relate to the skills needed by BAs to facilitate the process effectively.

A mid-sized local government overhauls its requirements gathering approach and sees strong results

CASE STUDY

Industry

Government

Source

Info-Tech Research Group Workshop

The Client

The organization was a local government responsible for providing services to approximately 600,000 citizens in the southern US. Its IT department was tasked with deploying applications and systems (such as HRIS) that support the various initiatives and mandate of the local government.

The Requirements Gathering Challenge

The IT department recognized that a strong requirements gathering process was essential to delivering value to its stakeholders. However, there was no codified process in place – each BA unilaterally decided how they would conduct requirements gathering at the start of each project. IT recognized that to enhance both the effectiveness and efficiency of requirements gathering, it needed to put in place a strong, prescriptive set of SOPs.

The Improvement

Working with a team from Info-Tech, the IT leadership and BA team conducted a workshop to develop a new set of SOPs that provided clear guidance for each stage of the requirements process: elicitation, analysis, and validation. As a result, business satisfaction and value alignment increased.

The Requirements Gathering SOP and BA Playbook, a codified set of SOPs for requirements gathering, gave BAs a clear playbook.

Info-Tech offers various levels of support to best suit your needs

DIY Toolkit

“Our team has already made this critical project a priority, and we have the time and capability, but some guidance along the way would be helpful.”

Guided Implementation

“Our team knows that we need to fix a process, but we need assistance to determine where to focus. Some check-ins along the way would help keep us on track.”

Workshop

“We need to hit the ground running and get this project kicked off immediately. Our team has the ability to take this over once we get a framework and strategy in place.”

Consulting

“Our team does not have the time or the knowledge to take this project on. We need assistance through the entirety of this project.”

Diagnostics and consistent frameworks used throughout all four options

Build a Strong Approach to Business Requirements Gathering – project overview

|

|

1. Build the Target State for Requirements Gathering

|

2. Define the Elicitation Process

|

3. Analyze and Validate Requirements

|

4. Create a Requirements Governance Action Plan

|

| Best-Practice Toolkit

|

1.1 Understand the Benefits of Requirements Optimization

1.2 Determine Your Target State for Requirements Gathering

|

2.1 Determine Elicitation Techniques

2.2 Structure Elicitation Output

|

3.1 Create Analysis Framework

3.2 Validate Business Requirements

|

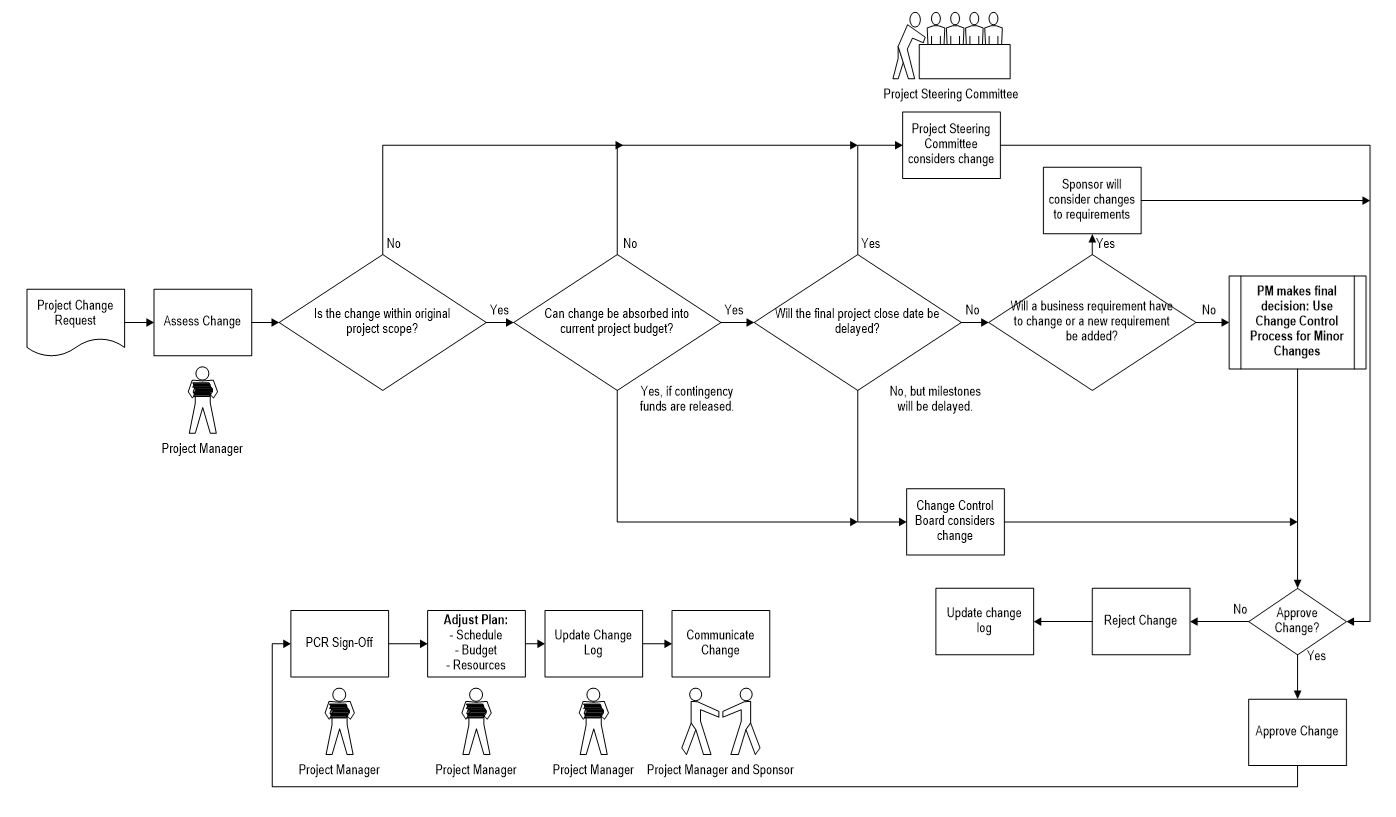

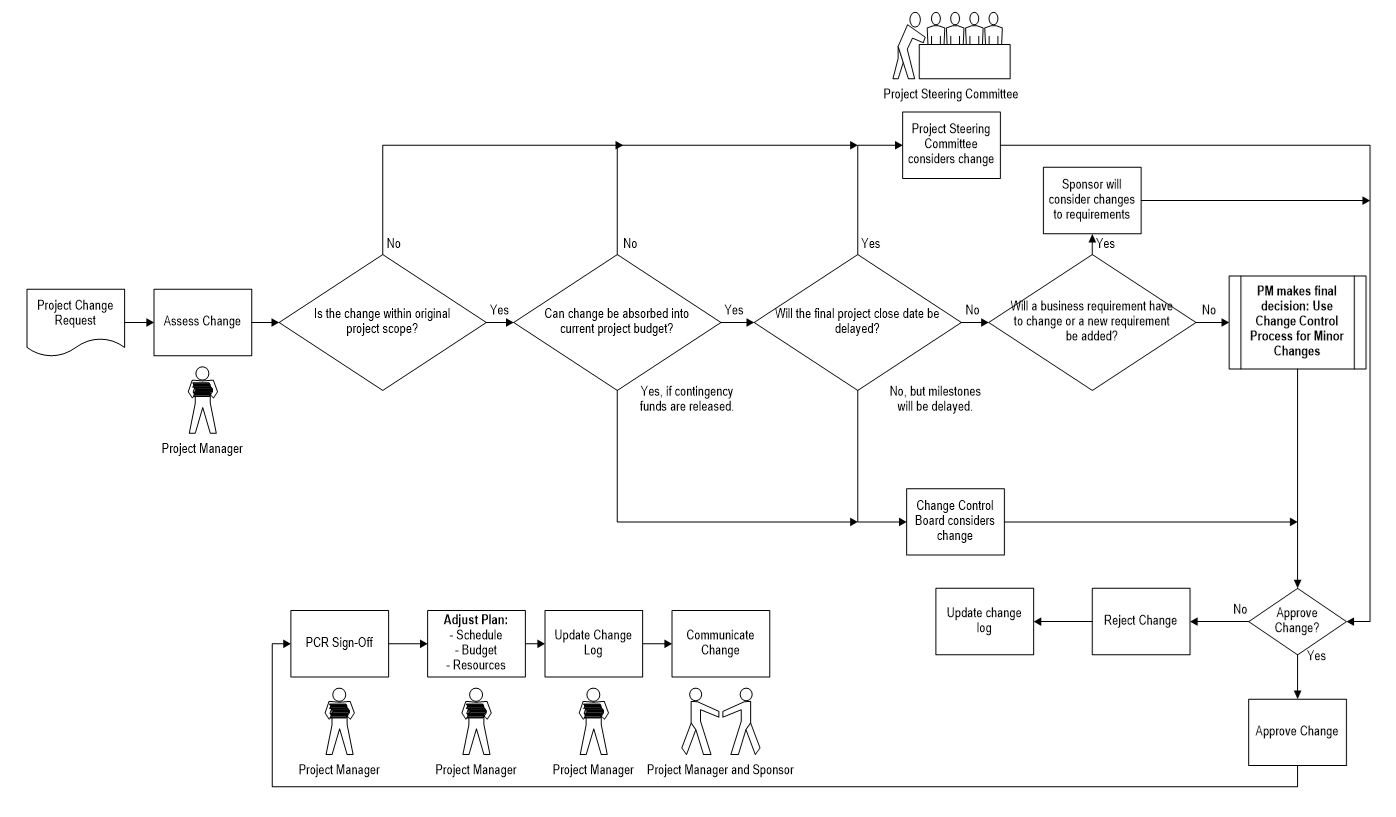

4.1 Create Control Processes for Requirements Changes

4.2 Build Requirements Governance and Communication Plan

|

| Guided Implementations

|

- Review Info-Tech’s requirements gathering methodology.

- Assess current state for requirements gathering – pains and challenges.

- Determine target state for business requirements gathering – areas of opportunity.

|

- Assess elicitation techniques and determine best fit to projects and business environment.

- Review options for structuring the output of requirements elicitation (i.e. SIPOC).

|

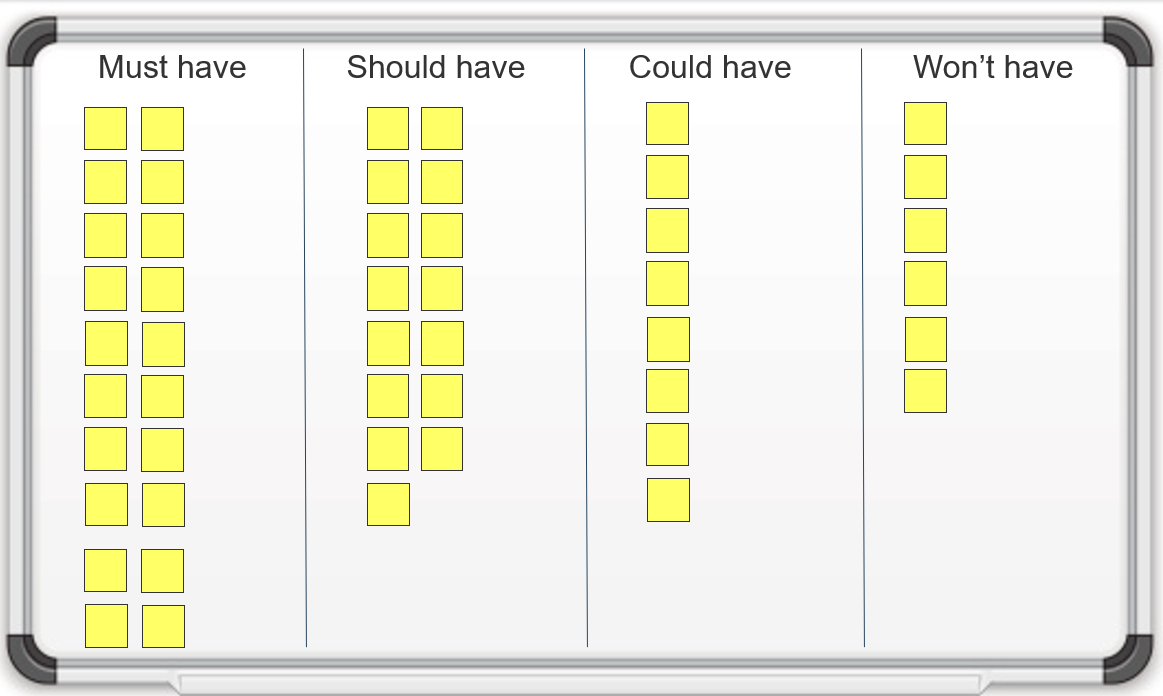

- Create policies for requirements categorization and prioritization.

- Establish best practices for validating the BRD with project stakeholders.

|

- Discuss how to handle changes to requirements, and establish a formal change control process.

- Review options for ongoing governance of the requirements gathering process.

|

| Onsite Workshop

|

Module 1: Define the Current and Target State

|

Module 2: Define the Elicitation Process

|

Module 3: Analyze and Validate Requirements

|

Module 4: Governance and Continuous Improvement Process

|

|

|

Phase 1 Results: Clear understanding of target needs for the requirements process.

|

Phase 2 Results: Best practices for conducting and structuring elicitation.

|

Phase 3 Results: Standardized frameworks for analysis and validation of business requirements.

|

Phase 4 Results: Formalized change control and governance processes for requirements.

|

Workshop overview

Contact your account representative or email Workshops@InfoTech.com for more information.

|

|

Workshop Day 1

|

Workshop Day 2

|

Workshop Day 3

|

Workshop Day 4

|

Workshop Day 5

|

| Activities

|

Define Current State and Target State for Requirements Gathering

- Understand current state and document existing requirement process steps.

- Identify stakeholder, process, outcome, and reigning challenges.

- Conduct target state analysis.

- Establish requirements gathering metrics.

- Identify project levels 1/2/3/4.

- Match control points to project levels 1/2/3/4.

- Conduct project scoping and identify stakeholders.

|

Define the Elicitation Process

- Understand elicitation techniques and which ones to use.

- Document and confirm elicitation techniques.

- Create a requirements gathering elicitation plan for your project.

- Practice using interviews with business stakeholders to build use case models.

- Practice using table-top testing with business stakeholders to build use case models.

- Build the operating model for your project

|

Analyze and Validate Requirements

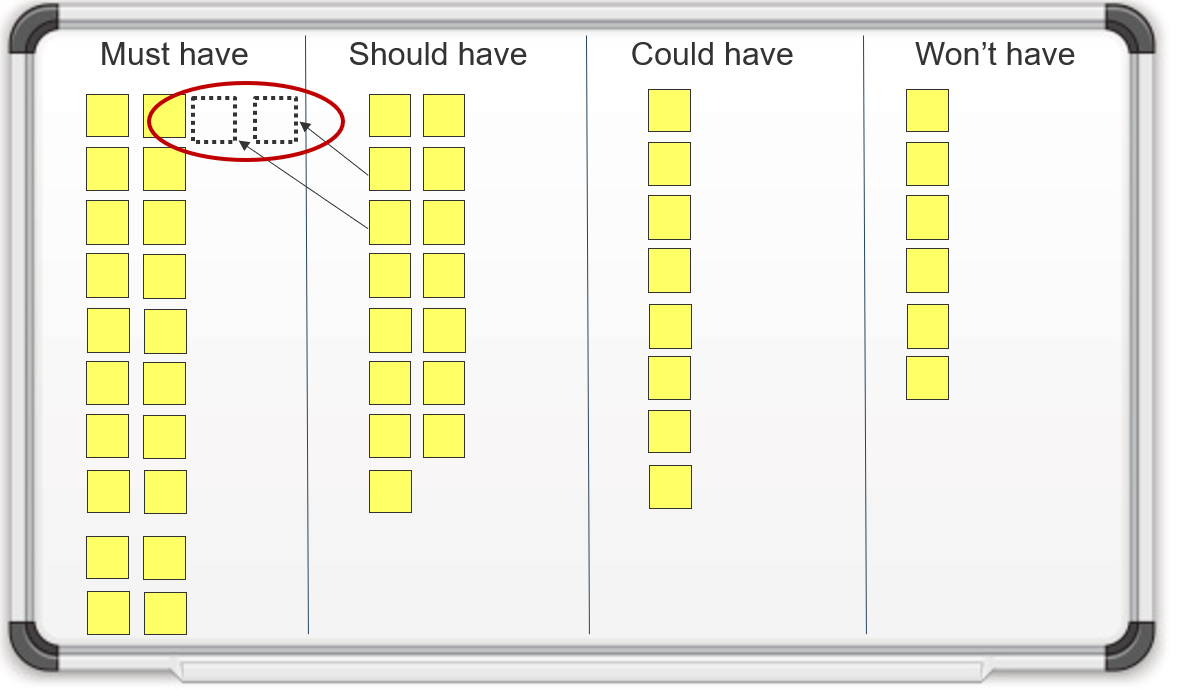

- Categorize gathered requirements for use.

- Consolidate similar requirements and eliminate redundancies.

- Practice prioritizing requirements.

- Rightsize the requirements documentation template.

- Present the business requirements document (BRD) to business stakeholders.

- Identify testing opportunities.

|

Establish Change Control Processes

- Review existing CR process.

- Review change control process best practices & optimization opportunities.

- Build guidelines for escalating changes.

- Confirm your requirements gathering process for project levels 1/2/3/4.

|

Establish Ongoing Governance for Requirements Gathering

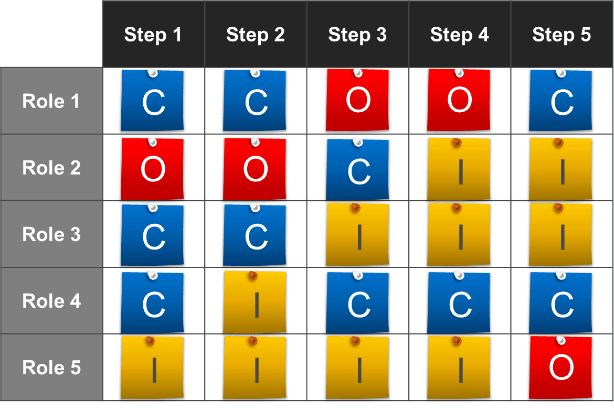

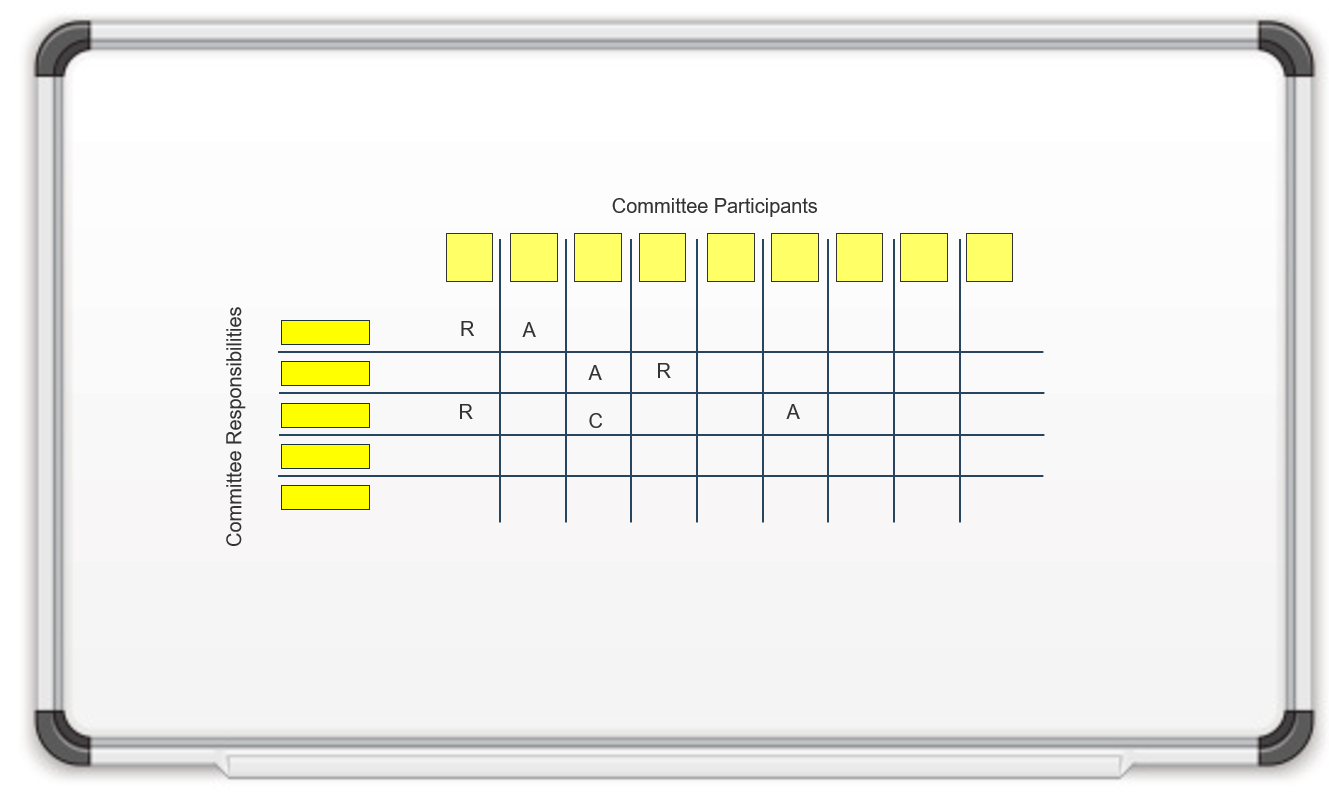

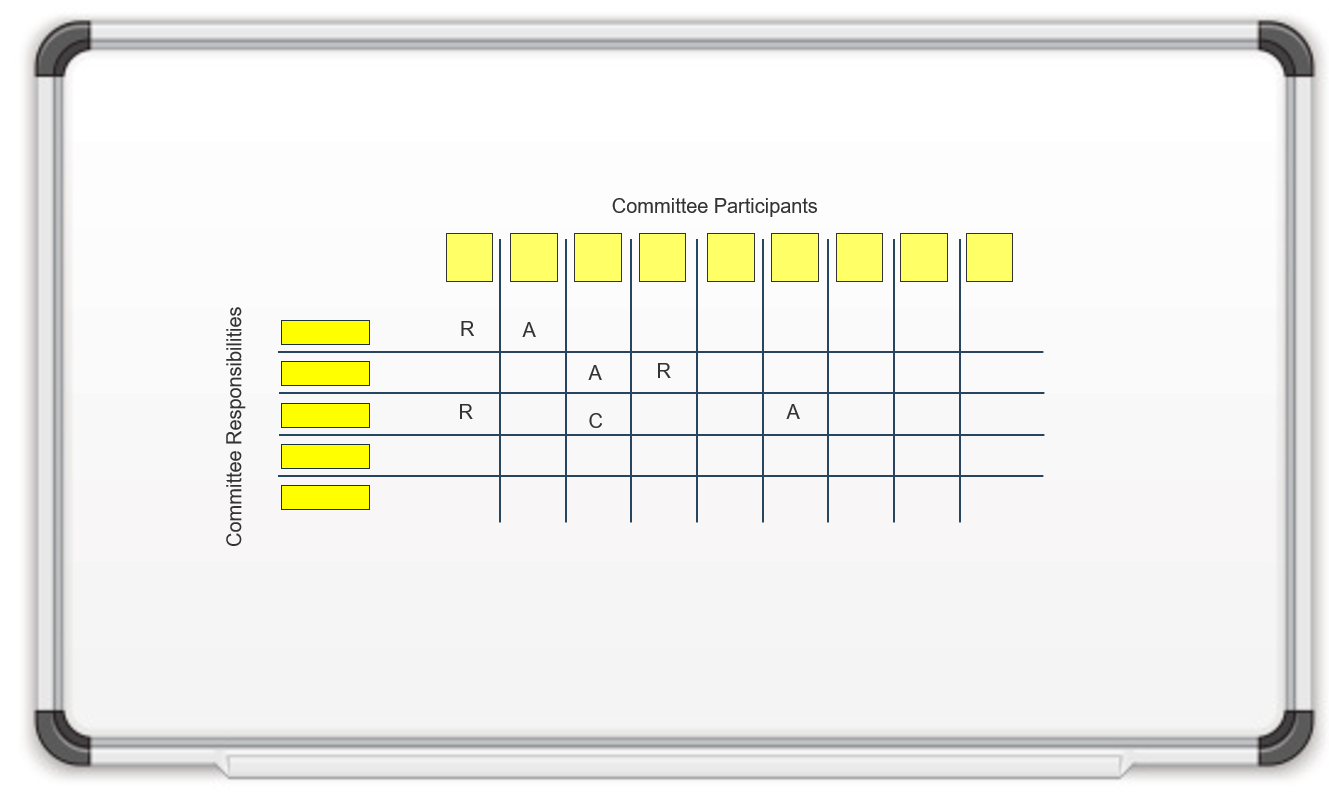

- Define RACI for the requirements gathering process.

- Define the requirements gathering governance process.

- Define RACI for requirements gathering governance.

- Define the agenda and cadence for requirements gathering governance.

- Identify and analyze stakeholders for communication plan.

- Create communication management plan.

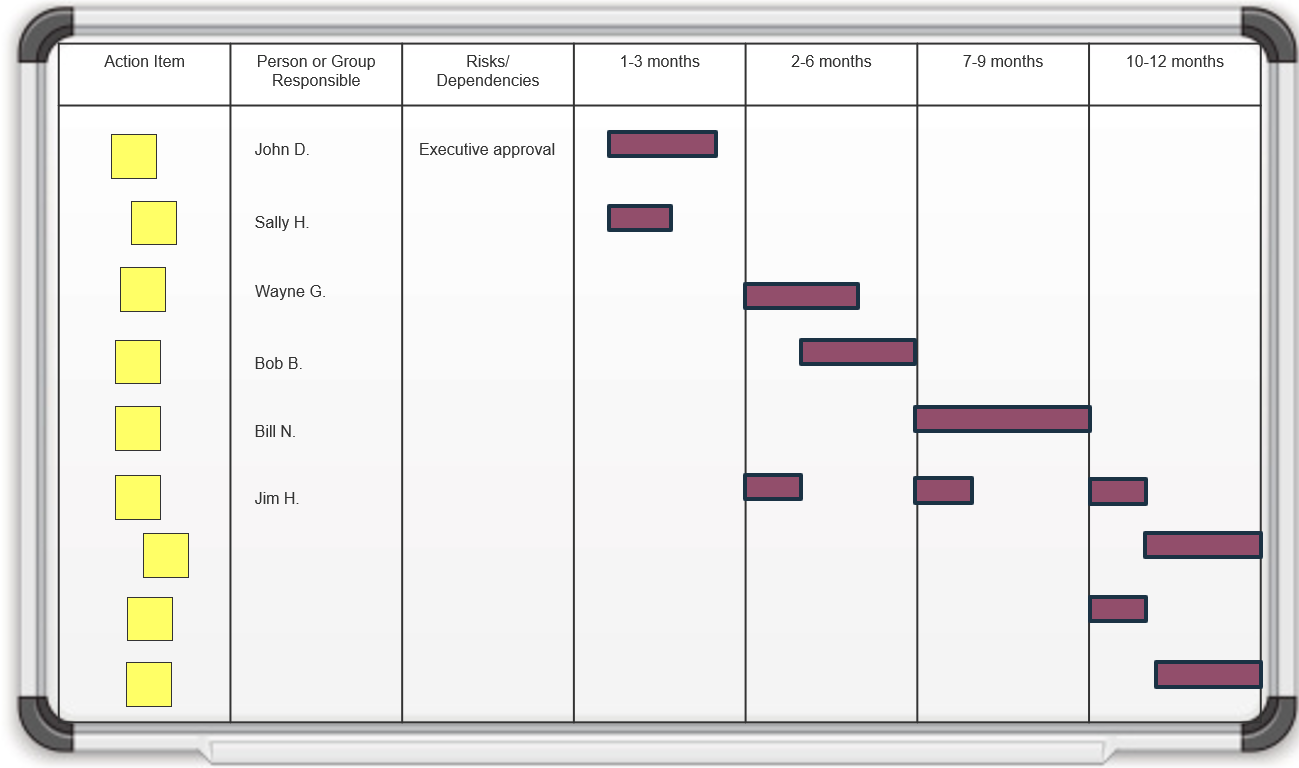

- Build the action plan.

|

| Deliverables

|

- Requirements gathering maturity assessment

- Project level selection tool

- Requirements gathering documentation tool

|

- Project elicitation schedule

- Project operating model

- Project use cases

|

- Requirements gathering documentation tool

- Requirements gathering testing checklist

|

- Requirements traceability matrix

- Requirements gathering communication tracking template

|

- Requirements gathering action plan

|

Phase 1: Build the Target State for the Requirements Gathering Process

Phase 1 outline

Call 1-888-670-8889 or email GuidedImplementations@InfoTech.com for more information.

Complete these steps on your own, or call us to complete a guided implementation. A guided implementation is a series of 2-3 advisory calls that help you execute each phase of a project. They are included in most advisory memberships.

Guided Implementation 1: Build the Target State

Proposed Time to Completion: 2 weeks

Step 1.1: Understand the Benefits of Requirements Optimization

Start with an analyst kick-off call:

- Review Info-Tech’s requirements gathering methodology.

Then complete these activities…

With these tools & templates:

Requirements Gathering SOP and BA Playbook

Step 1.2: Determine Your Target State for Requirements Gathering

Review findings with analyst:

- Assess current state for requirements gathering – pains and challenges.

- Determine target state for business requirements gathering – areas of opportunity.

Then complete these activities…

- Identify your business process model.

- Define project levels.

- Match control points to project level.

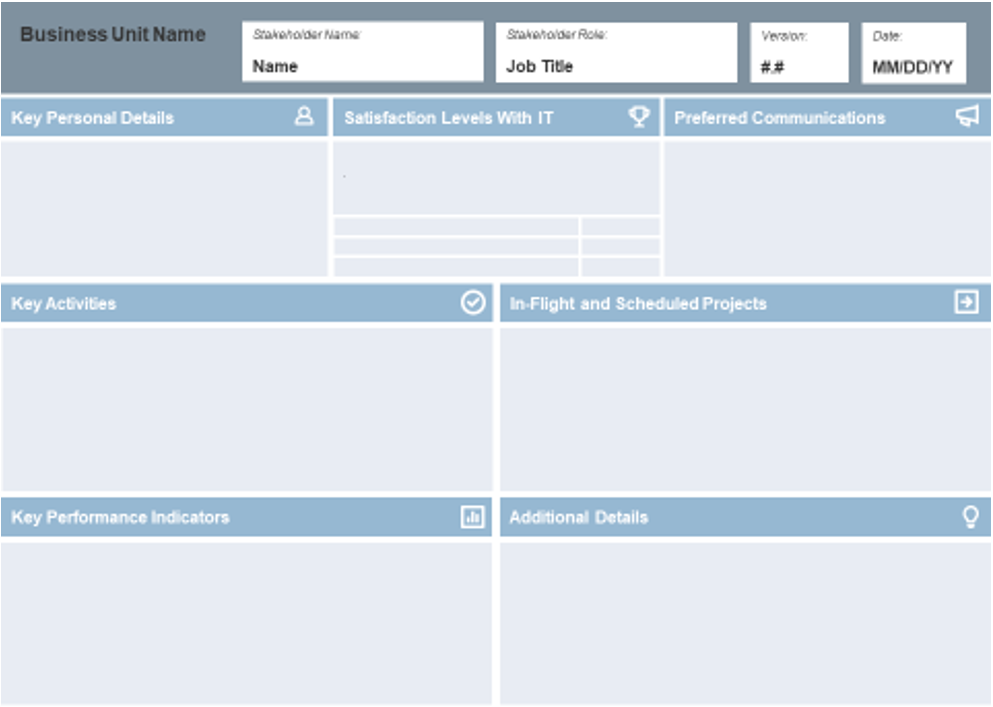

- Identify and analyze stakeholders.

With these tools & templates:

- Requirements Gathering Maturity Assessment

- Project Level Selection Tool

- Business Requirements Analyst job description

- Requirements Gathering Communication Tracking Template

Phase 1 Results & Insights:

Clear understanding of target needs for the requirements process.

Step 1.1: Understand the Benefits of Requirements Optimization


This step will walk you through the following activities:

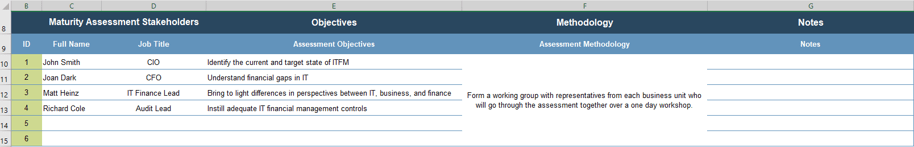

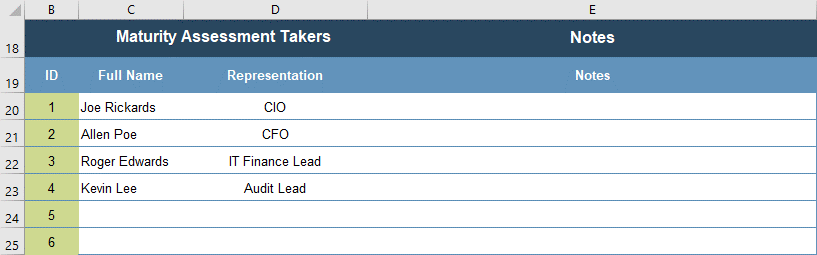

- Identifying challenges with requirements gathering and identifying objectives for the workshop.

This step involves the following participants:

- Business stakeholders

- BAs

Outcomes of this step

- Stakeholder objectives identified.

Requirements optimization is powerful, but it’s not free; gauge the organizational capital you’ll need to make it a success

Optimizing requirements management is not something that can be done in isolation, and it’s not necessarily going to be easy. Improving your requirements will translate into better value delivery, but it takes real commitment from IT and its business partners.

There are four “pillars of commitment” that will be necessary to succeed with requirements optimization:

- Senior Management Organizational Capital: Before organizations can establish revised SOPs for requirements gathering, they’ll need a strong champion in senior management to ensure that updated elicitation and sign-off techniques do not offend people. A powerful sponsor can lead to success, especially if they are in the business.

- End-User Organizational Capital: To overcome cynicism, you need to focus on convincing end users that there is something to be gained from participating in requirements gathering (and the broader process of requirements optimization). Frame the value by focusing on how good requirements mean better apps (e.g. faster, cheaper, fewer errors, less frustration).

- Staff Resourcing: You can have a great SOP, but if you don’t have the right resources to execute on it you’re going to have difficulty. Requirements gathering needs dedicated BAs (or equivalent staff) who are trained in best practices and can handle elicitation, analysis, and validation successfully.

- Dedicated Cycle Time: IT and the business both need to be willing to demonstrate the value of requirements optimization by giving requirements gathering the time it needs to succeed. If these parties are convinced by the concept in theory, but still try to rush moving to the development phase, they’re destined for failure.

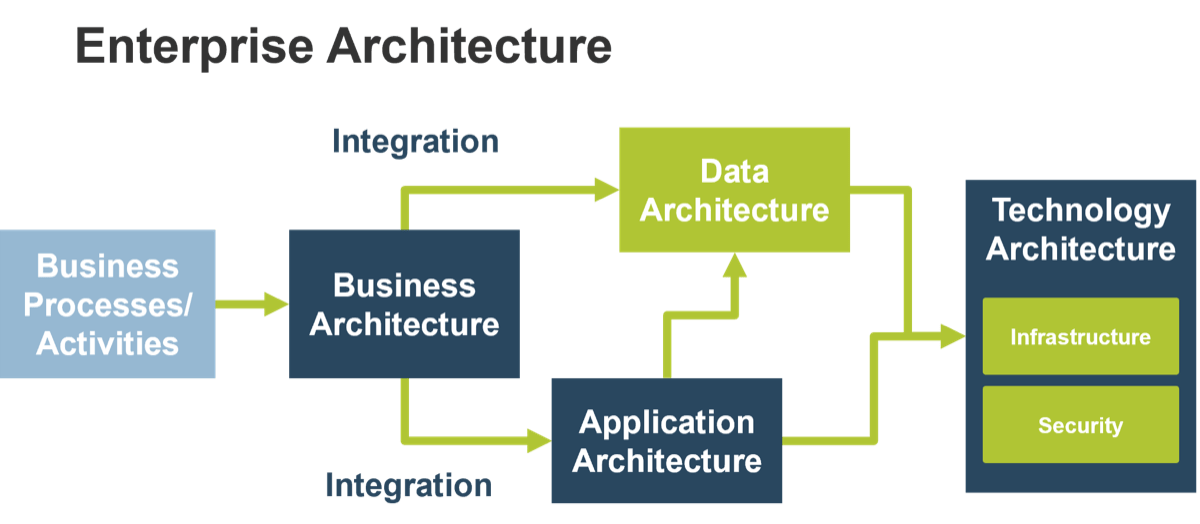

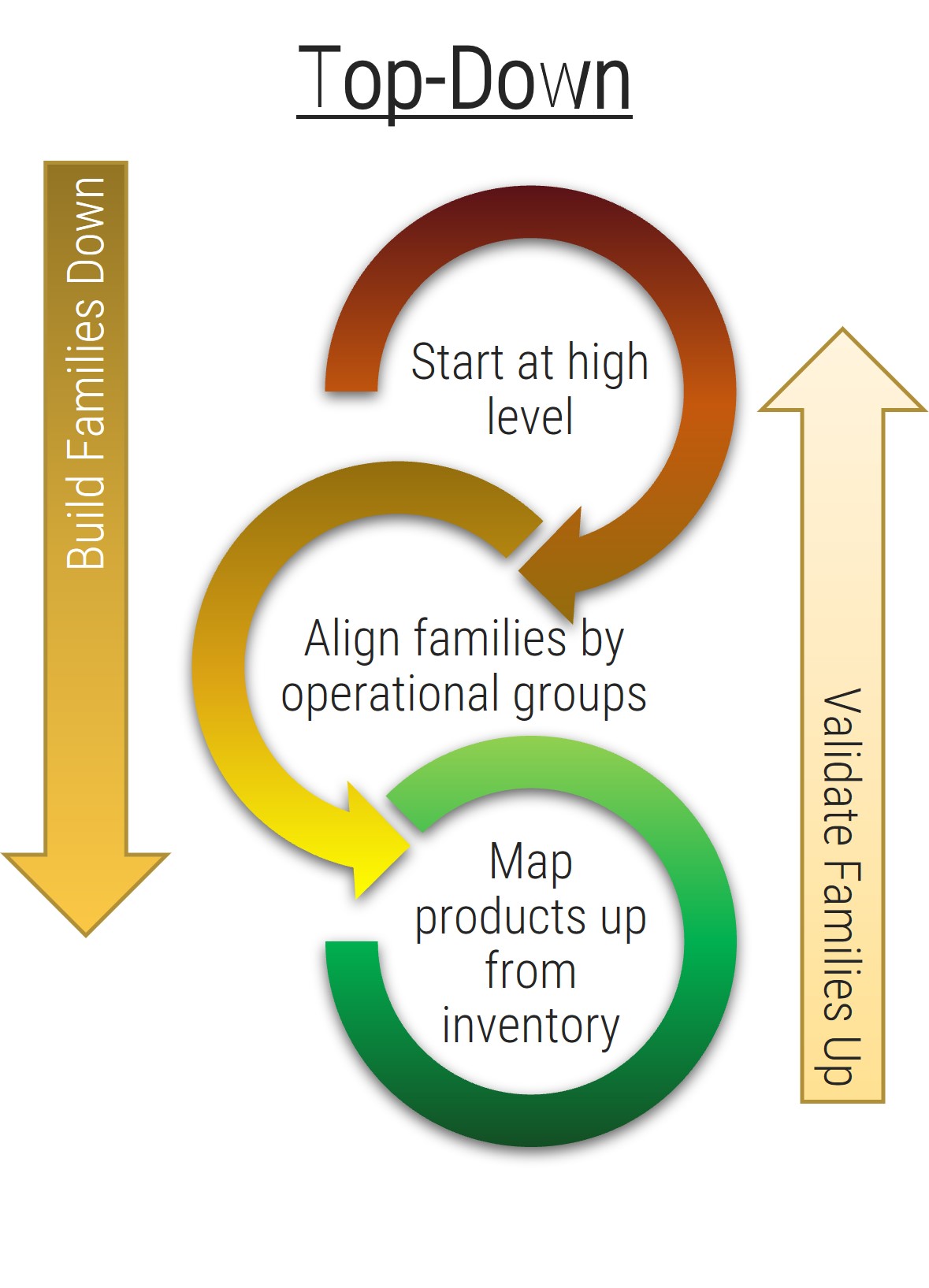

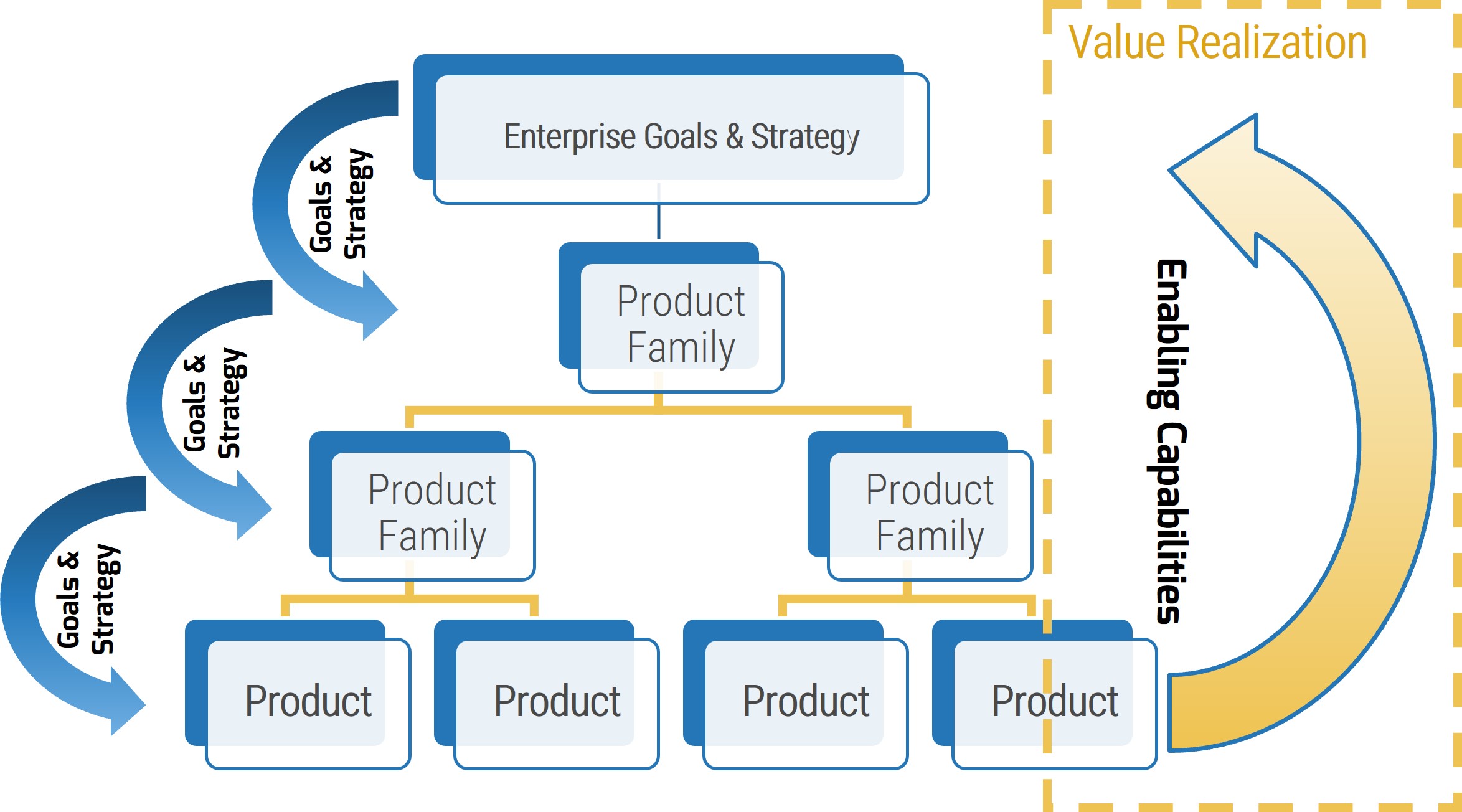

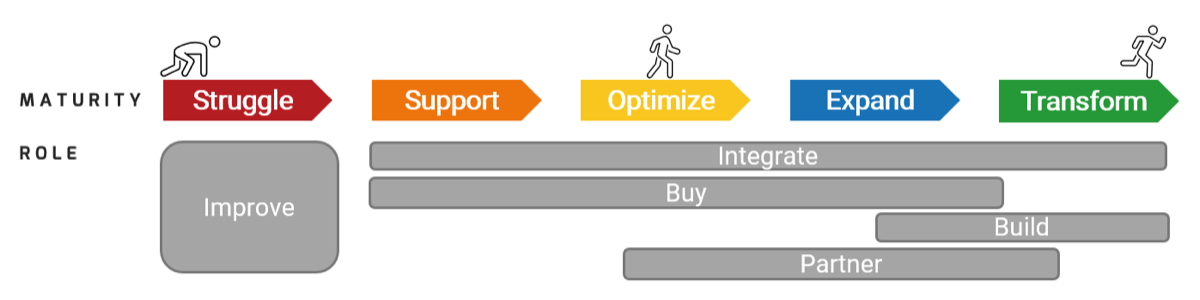

Rethink your approach to requirements gathering: start by examining the business process, then tackle technology

When gathering business requirements, it’s critical not to assume that layering technology onto a process will automatically solve your problems.

Proper requirements gathering views projects holistically (i.e. not just as an attempt to deploy an application or technology, but as an endeavor to enable new or re-engineered business processes). Neglecting to see requirements gathering in the context of business process enablement leads to failure.

- Far too often, organizations automate an existing process without putting much thought into finding a better way to do things.

- Most organizations focus on identifying a series of small improvements to make to a process and realize limited gains.

- The best way to generate transformational gains is to reinvent how the process should be performed and work backwards from there.

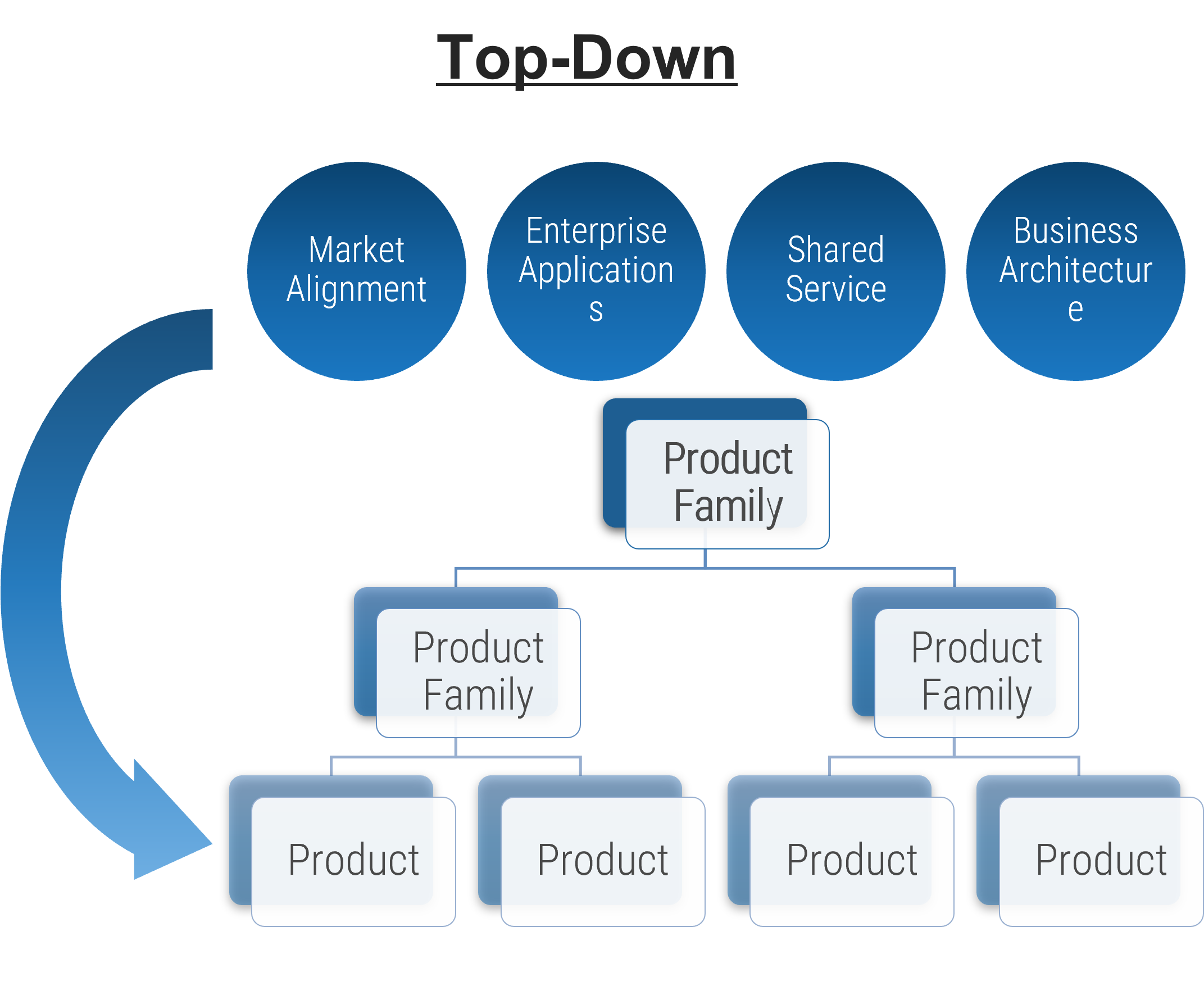

- You should take a top-down approach and begin by speaking with senior management about the business case for the project and their vision for the target state.

- You should elicit requirements from the rank-and-file employees while centering the discussion and requirements around senior management’s target state. Don’t turn requirements gathering into a griping session about deficiencies with a current application.

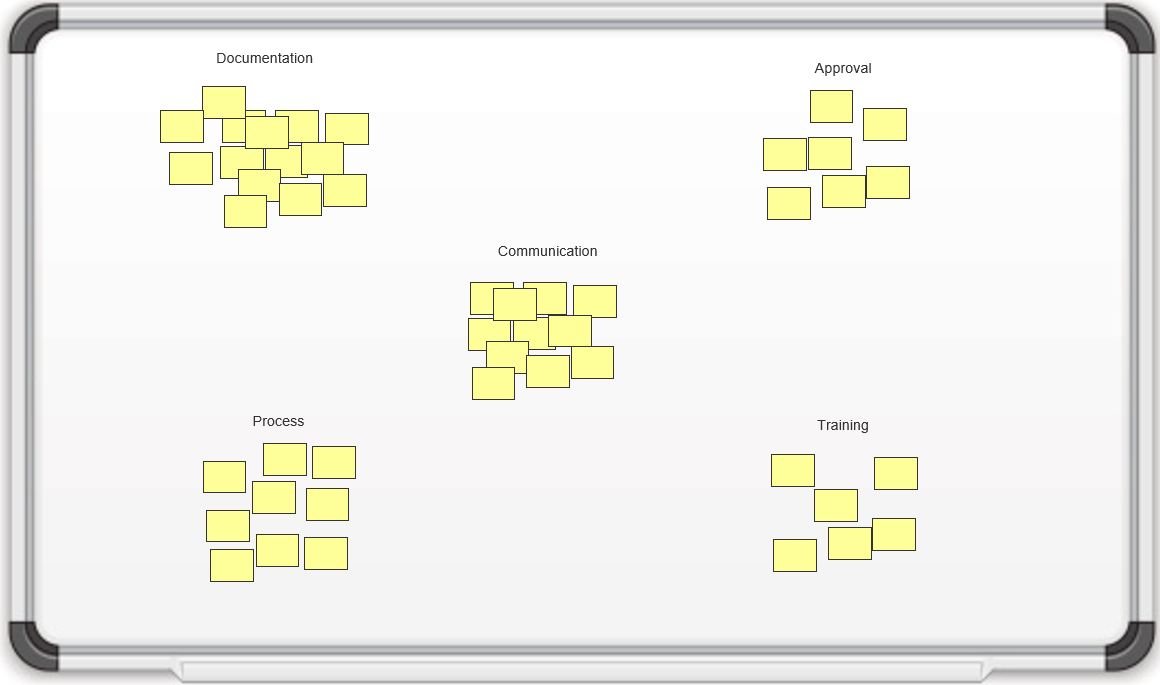

Requirements gathering fireside chat

1.1.1 – 45 minutes

Output

Materials

- Whiteboard, markers, sticky notes

Participants

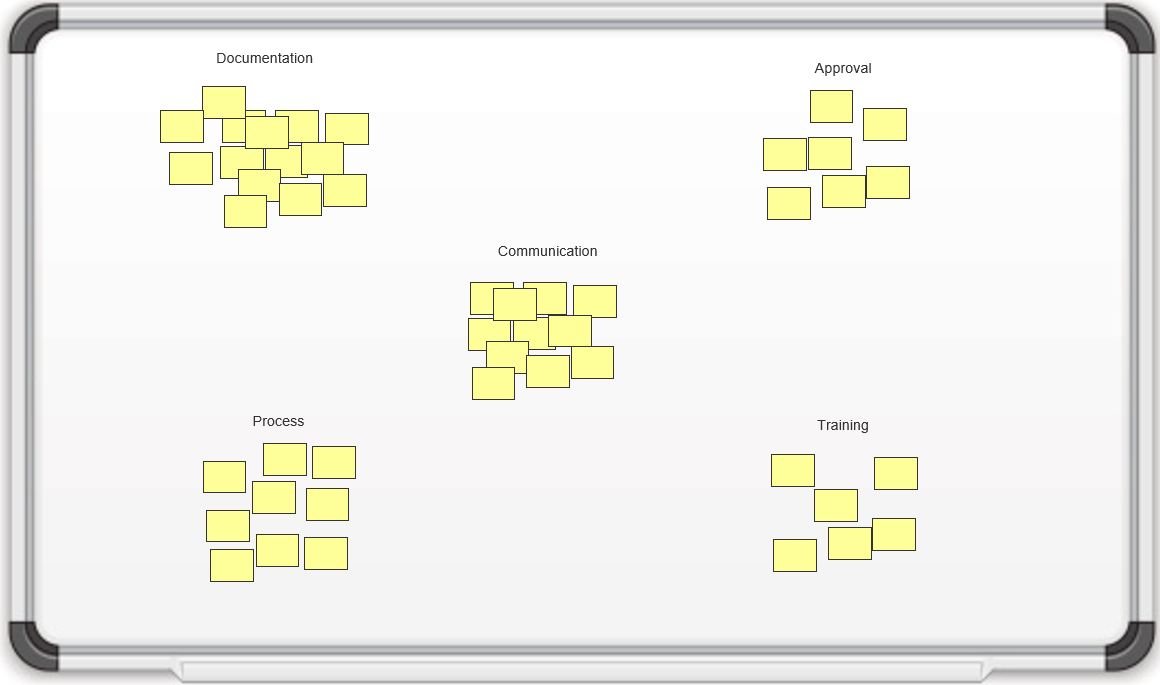

Identify the challenges you’re experiencing with requirements gathering, and identify objectives.

- Hand out sticky notes to participants, and ask the group to work independently to think of challenges that exist with regard to requirements gathering. (Hint: consider stakeholder challenges, process challenges, outcome challenges, and training challenges.) Ask participants to write their current challenges on sticky notes, and place them on the whiteboard.

- As a group, review all sticky notes and group challenges into themes.

- For each theme you uncover, work as a group to determine the objective that will overcome these challenges throughout the workshop and write this on the whiteboard.

- Discuss how these challenges will be addressed in the workshop.

Don’t improvise: have a structured, prescriptive end-to-end approach for successfully gathering useful requirements

Creating a unified SOP guide for requirements elicitation, analysis, and validation is a critical step for requirements optimization; it gives your BAs a common frame of reference for conducting requirements gathering.

- The key to requirements optimization is to establish a strong set of SOPs that provide direction on how your organization should be executing requirements gathering processes. This SOP guide should be a holistic document that walks your BAs through a requirements gathering project from beginning to end.

- An SOP that is put aside is useless; it must be well communicated to BAs. It should be treated as the veritable manifesto of requirements management in your organization.

Info-Tech Insight

Having a standardized approach to requirements management is critical, and SOPs should be the responsibility of a group. The SOP guide should cover all of the major bases of requirements management. In addition to providing a walk-through of the process, an SOP also clarifies requirements governance.

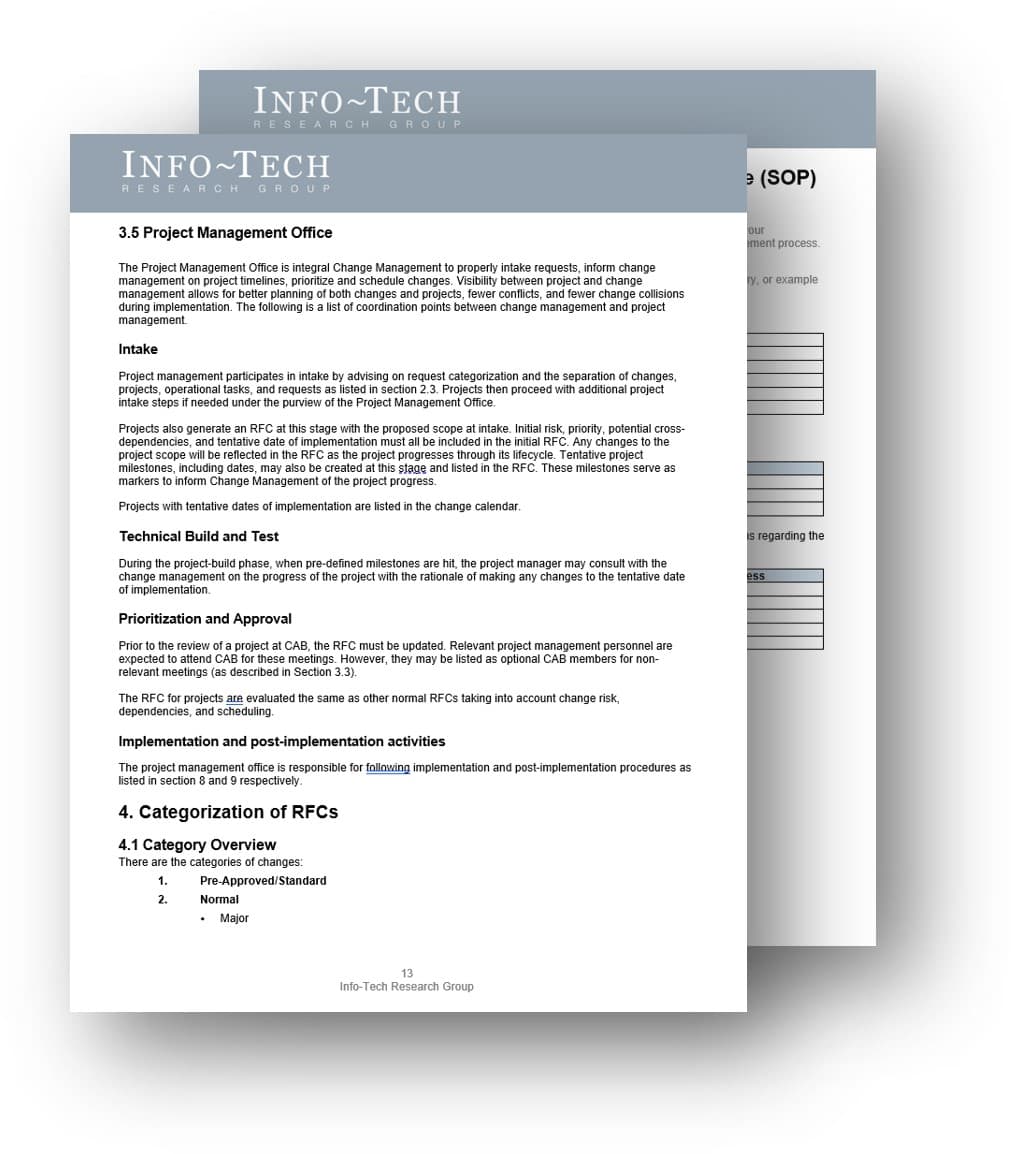

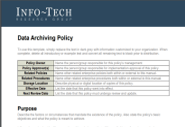

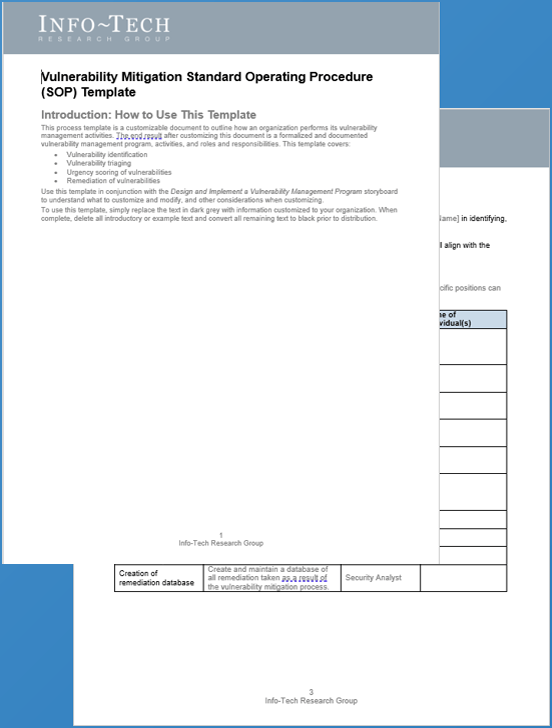

Use Info-Tech’s Requirements Gathering SOP and BA Playbook to assist with requirements gathering optimization

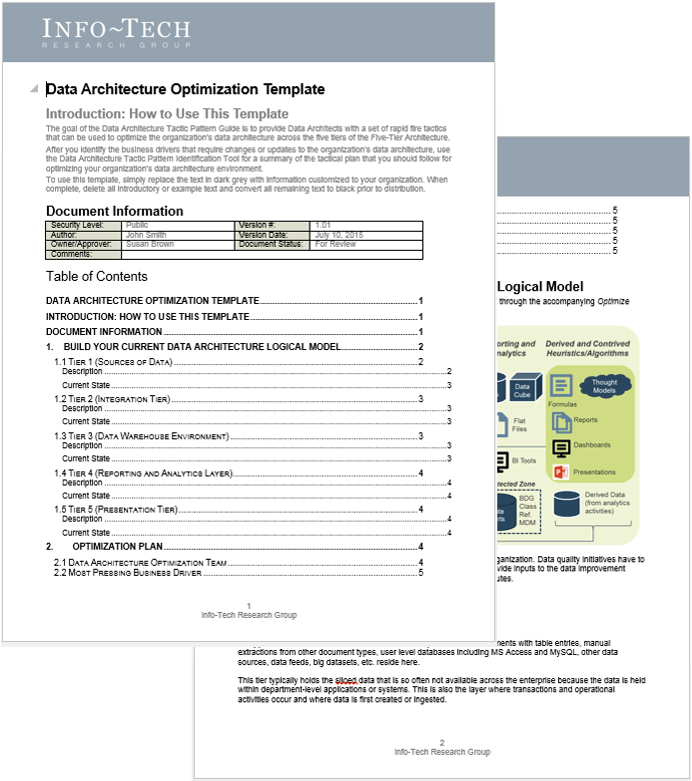

Info-Tech’s Requirements Gathering SOP and BA Playbook template forms the basis of this blueprint. It’s a structured document that you can fill out with defined procedures for how requirements should be gathered at your organization.

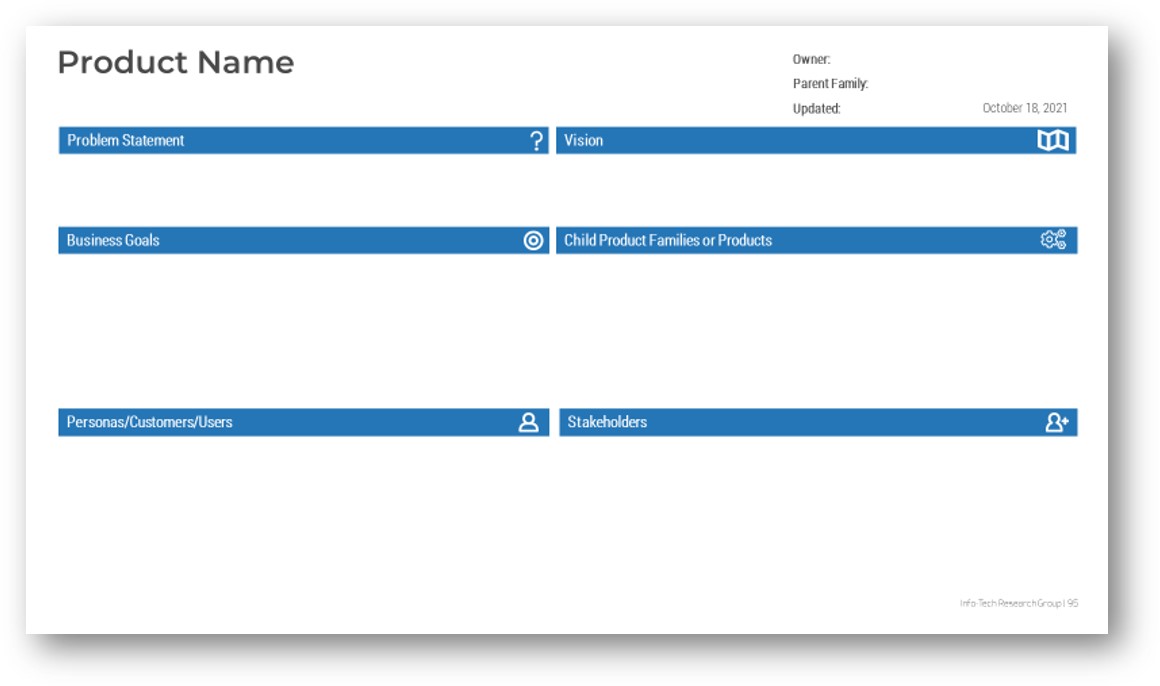

Info-Tech’s Requirements Gathering SOP and BA Playbook template provides a number of sections that you can populate to provide direction for requirements gathering practitioners. Sections provided include: Organizational Context, Governance Procedures, Resourcing Model, Technology Strategy, Knowledge Management, Elicitation SOPs, Analysis SOPs, and Validation SOPs.

The template has been pre-populated with an example of requirements management procedures. Feel free to customize it to fit your specific needs.

Download the Requirements Gathering SOP and BA Playbook template.

Step 1.2: Determine Your Target State for Requirements Gathering

Phase 1

1.1 Understand the Benefits of Requirements Optimization

1.2 Determine Your Target State for Requirements Gathering

Phase 2

2.1 Determine Elicitation Techniques

2.2 Structure Elicitation Output

Phase 3

3.1 Create Analysis Framework

3.2 Validate Business Requirements

Phase 4

4.1 Create Control Processes for Requirements Changes

4.2 Build Requirements Governance and Communication Plan

This step will walk you through the following activities:

- Conduct a current and target state analysis.

- Identify requirements gathering business process model.

- Establish requirements gathering performance metrics.

- Define project levels – level 1/2/3/4.

- Match control points to project level.

- Conduct initial brainstorming on the project.

This step involves the following participants:

Outcomes of this step:

- Requirements gathering maturity summary.

- Requirements gathering business process model.

- Identification of project levels.

- Identification of control points.

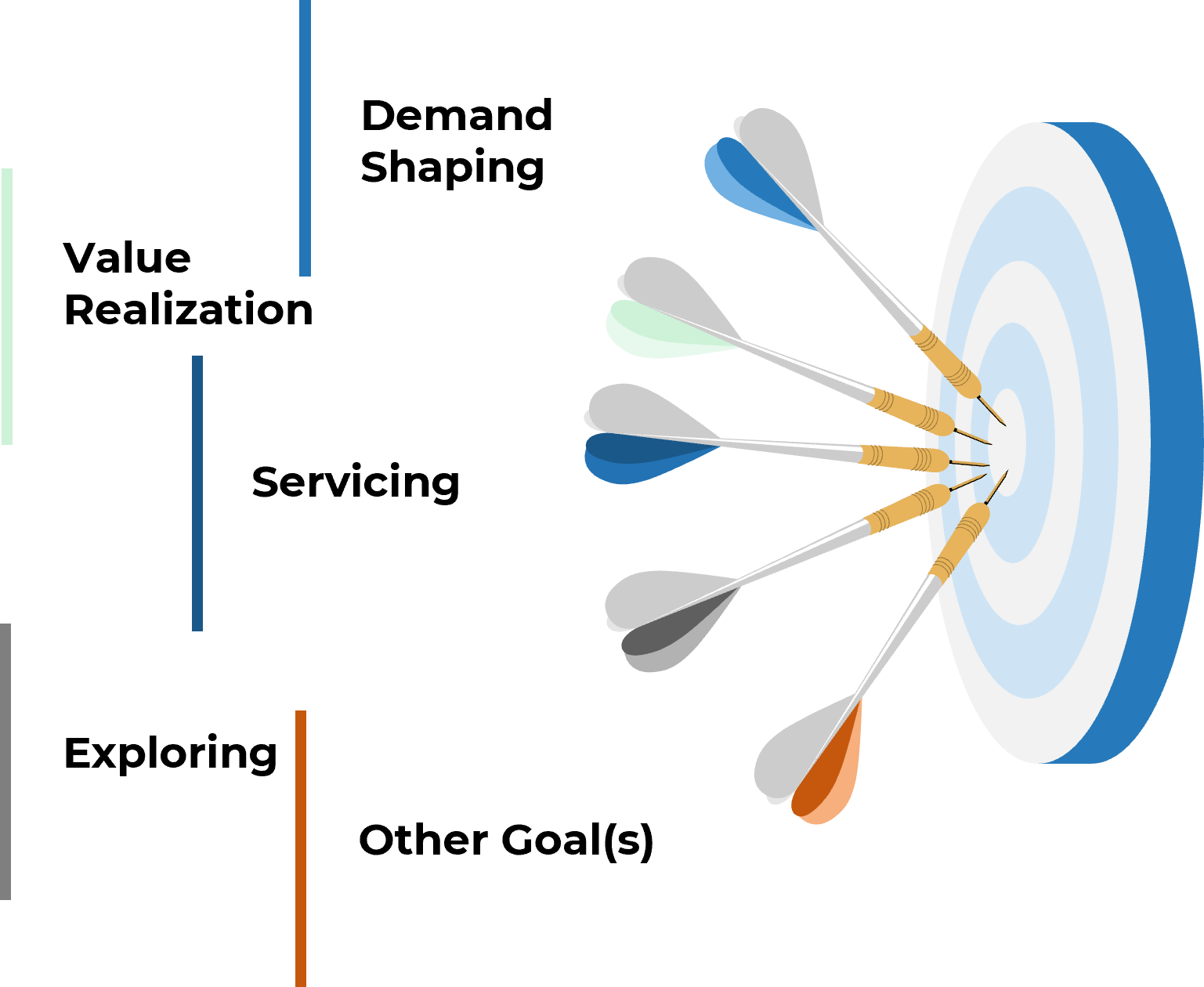

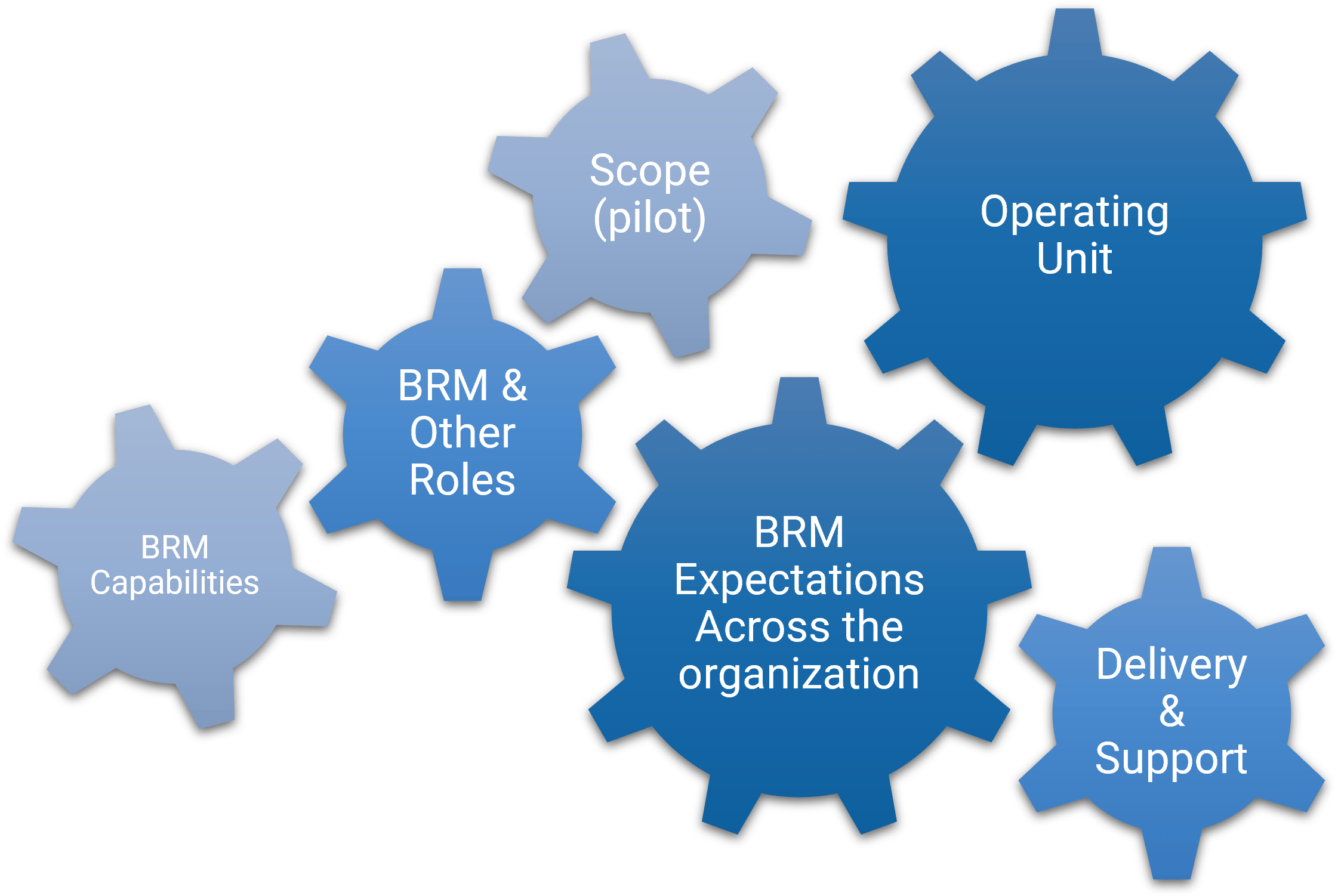

Plan for requirements gathering

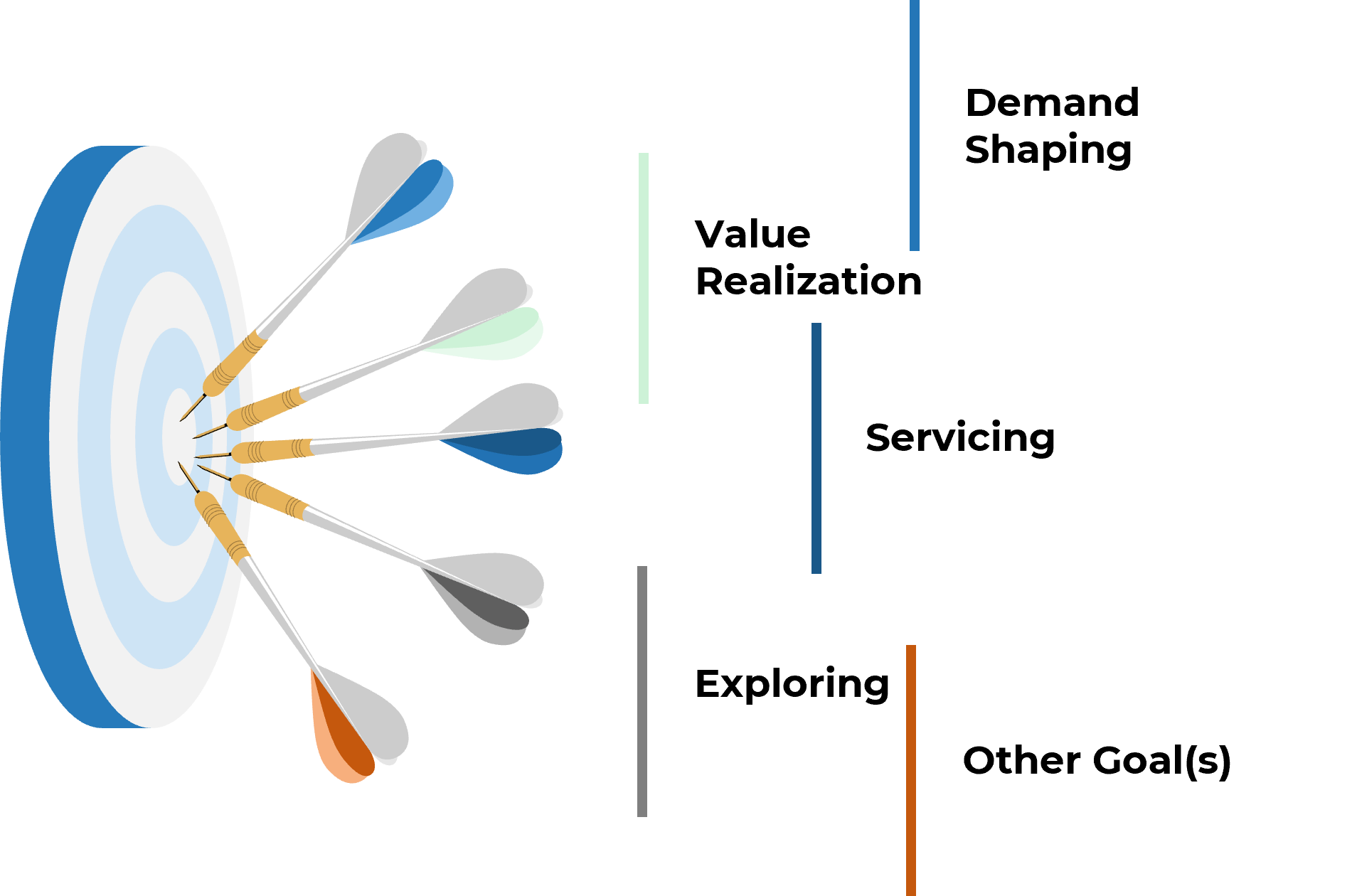

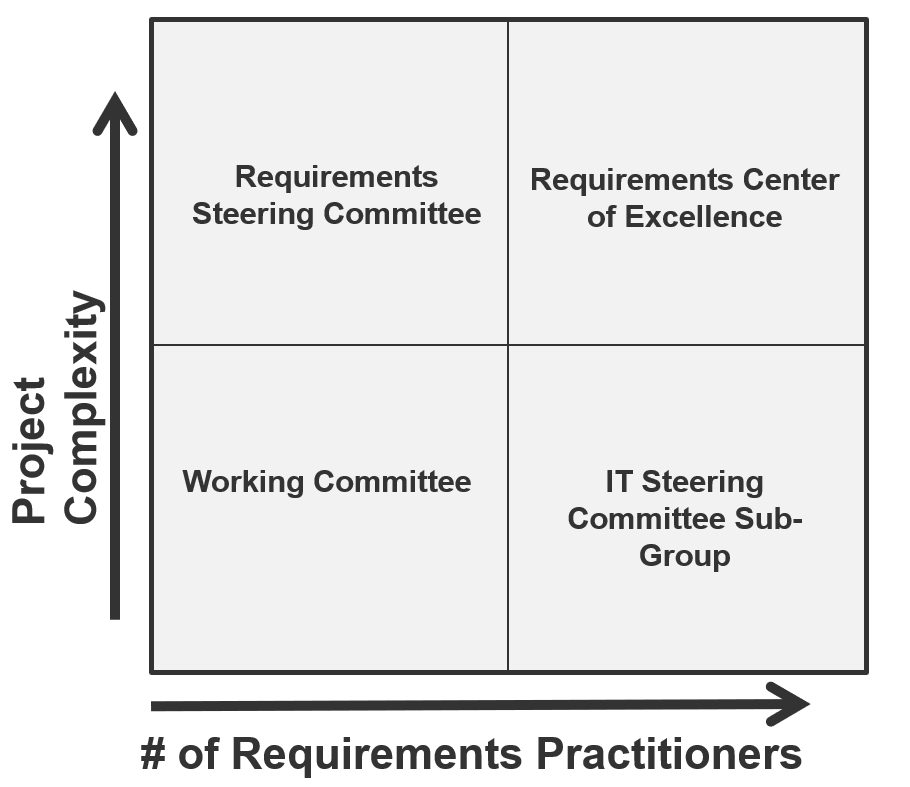

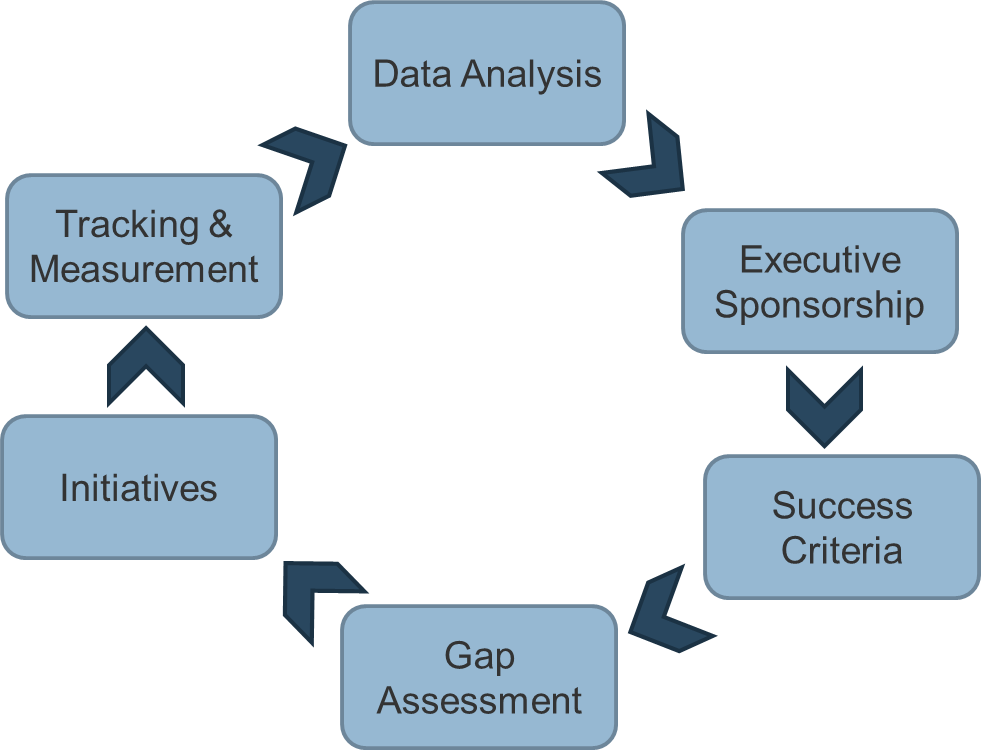

Establishing an overarching plan for requirements governance is the first step in building an SOP. You must also decide who will actually execute the requirements gathering processes, and what technology they will use to accomplish this. Planning for governance, resourcing, and technology is something that should be done repeatedly and at a higher strategic level than the more sequential steps of elicitation, analysis, and validation.

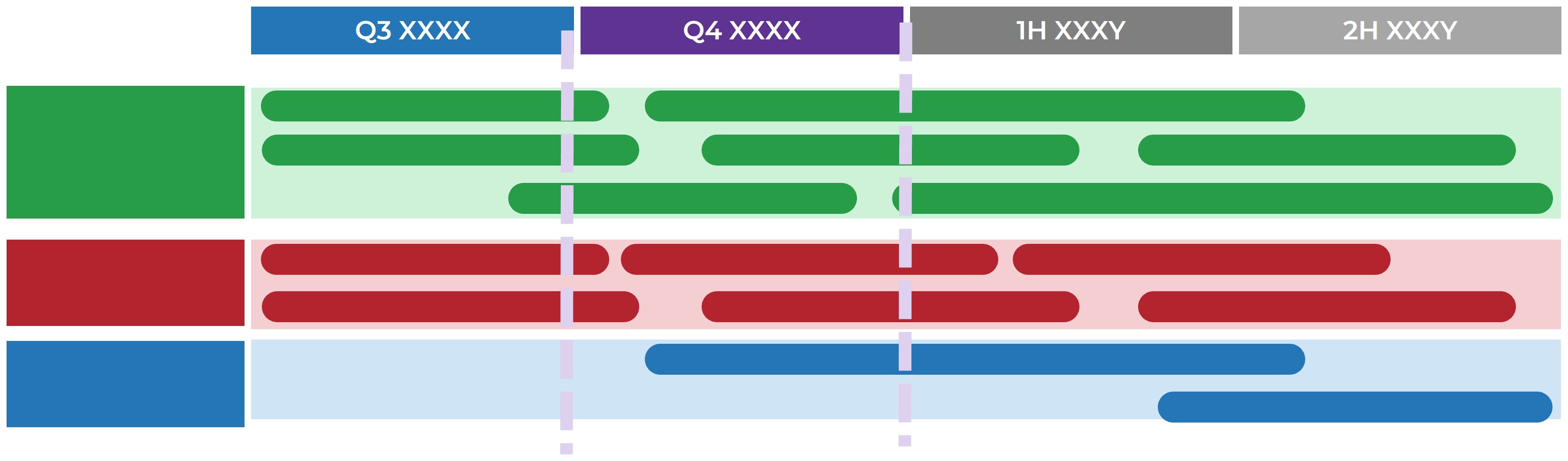

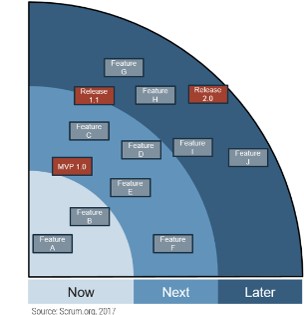

Establish your target state for requirements gathering processes to have a cogent roadmap of what needs to be done

Visualize how you want requirements to be gathered in your organization. Do not let elements of the current process restrict your thinking.

- First, articulate the impetus for optimizing requirements management and establish clear goals.

- Use these goals to drive the target state.

For example:

- If the goal is to improve the accuracy of requirements, then restructure the validation process.

- If the goal is to improve the consistency of requirements gathering, then create SOPs or use electronic templates and tools.

Refrain from only making small changes to improve the existing process. Think about the optimal way to structure the requirements gathering process.

Define the attributes of a good requirement to help benchmark the type of outputs that you’re looking for

Attributes of Good Requirements

Verifiable – It is stated in a way that can be tested.

Unambiguous – It is free of subjective terms and can only be interpreted in one way.

Complete – It contains all relevant information.

Consistent – It does not conflict with other requirements.

Achievable – It is possible to accomplish given the budgetary and technological constraints.

Traceable – It can be tracked from inception to testing.

Unitary – It addresses only one thing and cannot be decomposed into multiple requirements.

Accurate – It is based on proven facts and correct information.

Other Considerations:

Organizations can also track a requirement owner, rationale, priority level (must have vs. nice to have), and current status (approved, tested, etc.).
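The attributes and tracking fields above can be captured in a simple requirement record. The following is a minimal sketch, not part of the Info-Tech template: the class and field names, the `DRAFT` status, and the list of subjective terms are all illustrative assumptions. The ambiguity check is a rough substring match and will not catch every subjective phrase.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Priority(Enum):
    MUST_HAVE = "must have"
    NICE_TO_HAVE = "nice to have"


class Status(Enum):
    DRAFT = "draft"        # assumed starting status, not named in the text
    APPROVED = "approved"
    TESTED = "tested"


# Hypothetical list of subjective terms; extend it to suit your organization.
SUBJECTIVE_TERMS = ("fast", "easy", "user-friendly", "flexible", "intuitive")


@dataclass
class Requirement:
    req_id: str
    statement: str
    owner: str
    rationale: str
    priority: Priority
    status: Status = Status.DRAFT

    def subjective_terms(self) -> List[str]:
        """Return subjective terms found in the statement (rough ambiguity check)."""
        text = self.statement.lower()
        return [term for term in SUBJECTIVE_TERMS if term in text]


req = Requirement(
    req_id="REQ-001",
    statement="The system must be fast and easy to use.",
    owner="Sales Ops",
    rationale="Reduce order entry time",
    priority=Priority.MUST_HAVE,
)
print(req.subjective_terms())  # ['fast', 'easy']
```

A statement that returns an empty list is not guaranteed to be unambiguous; the check only flags candidates for a BA to review.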

Info-Tech Insight

Requirements must be solution agnostic – they should focus on the underlying need rather than the technology required to satisfy it. It is easy to fall into the technology solution trap.

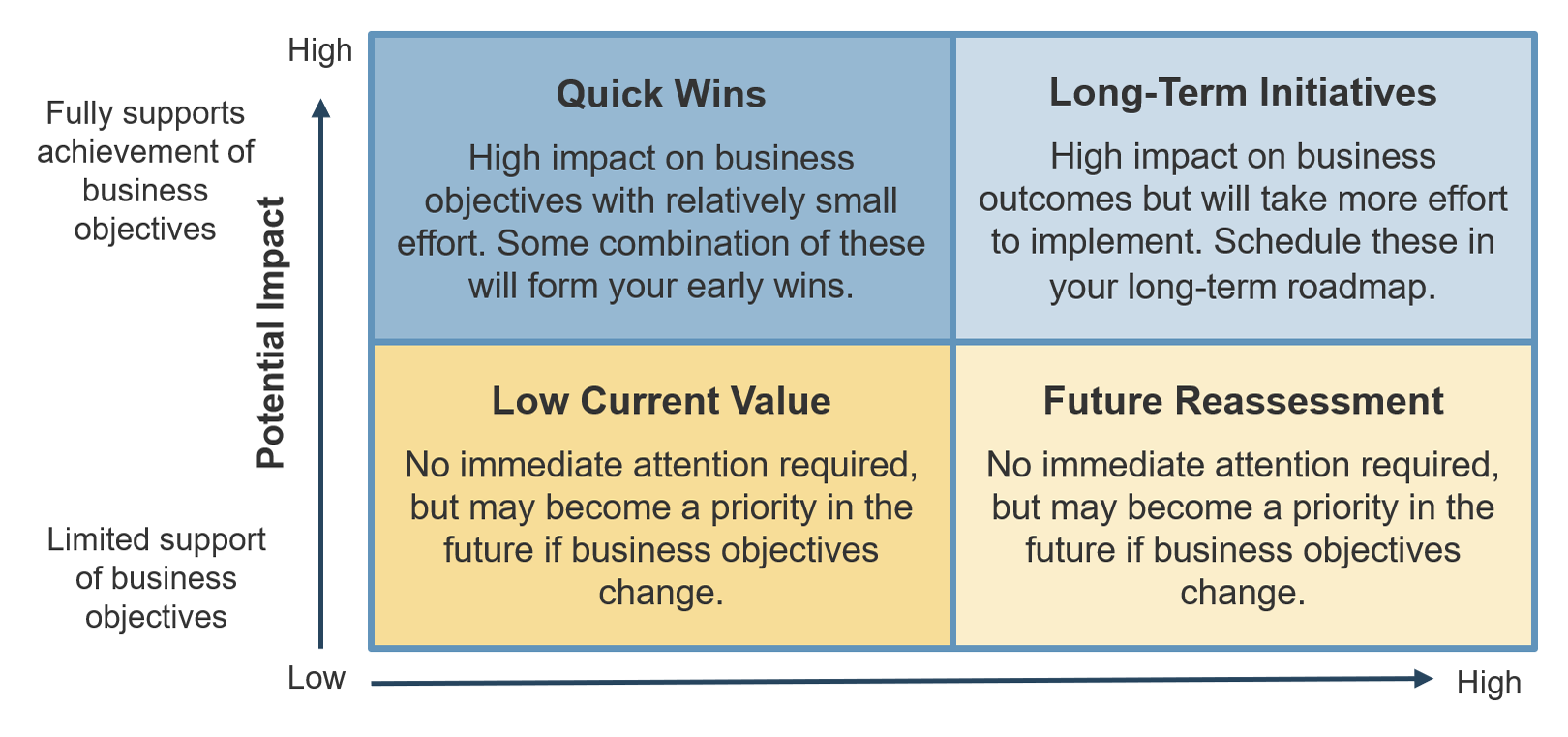

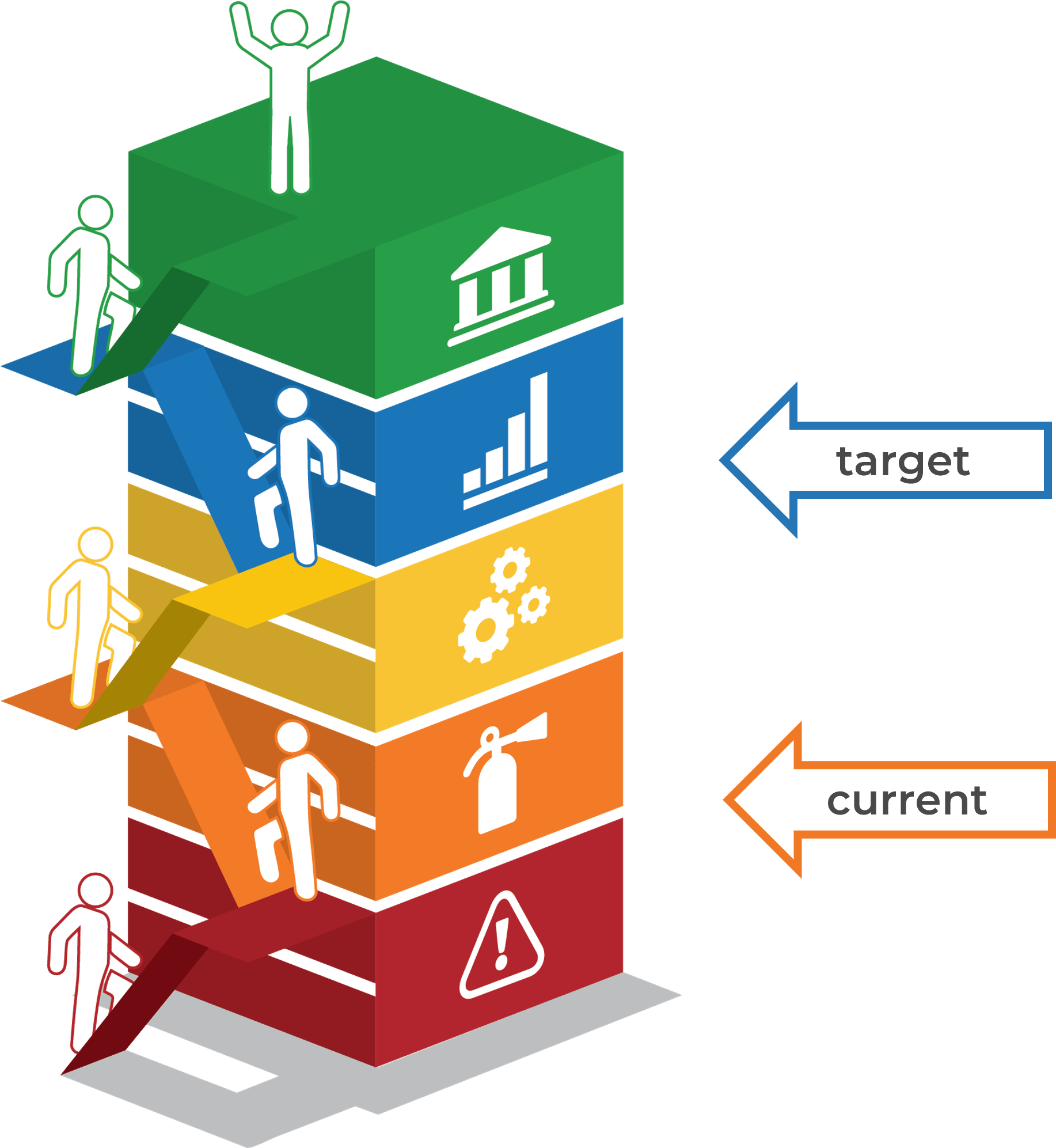

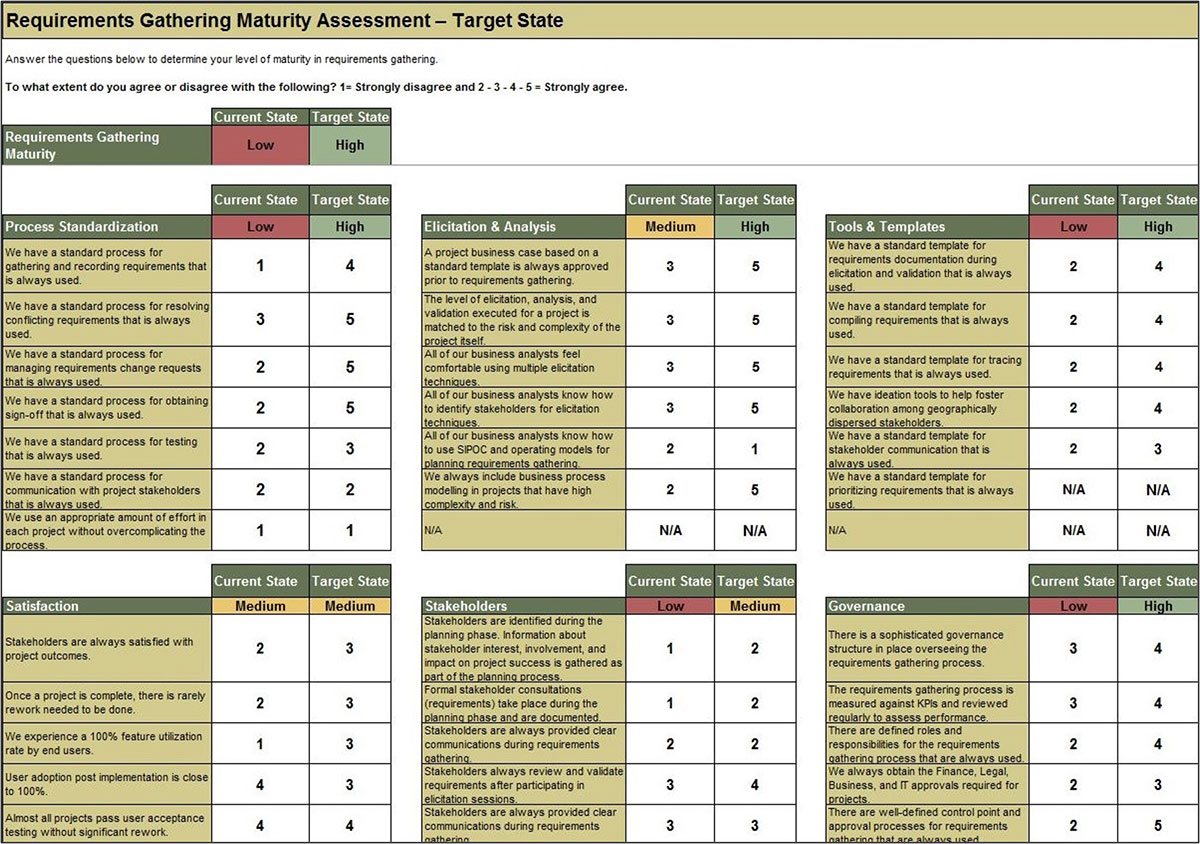

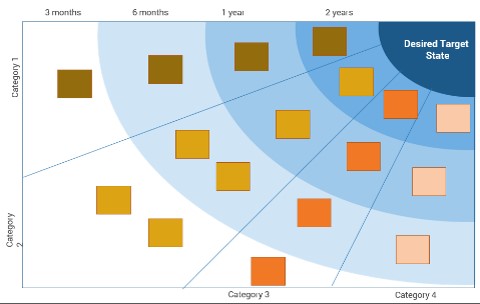

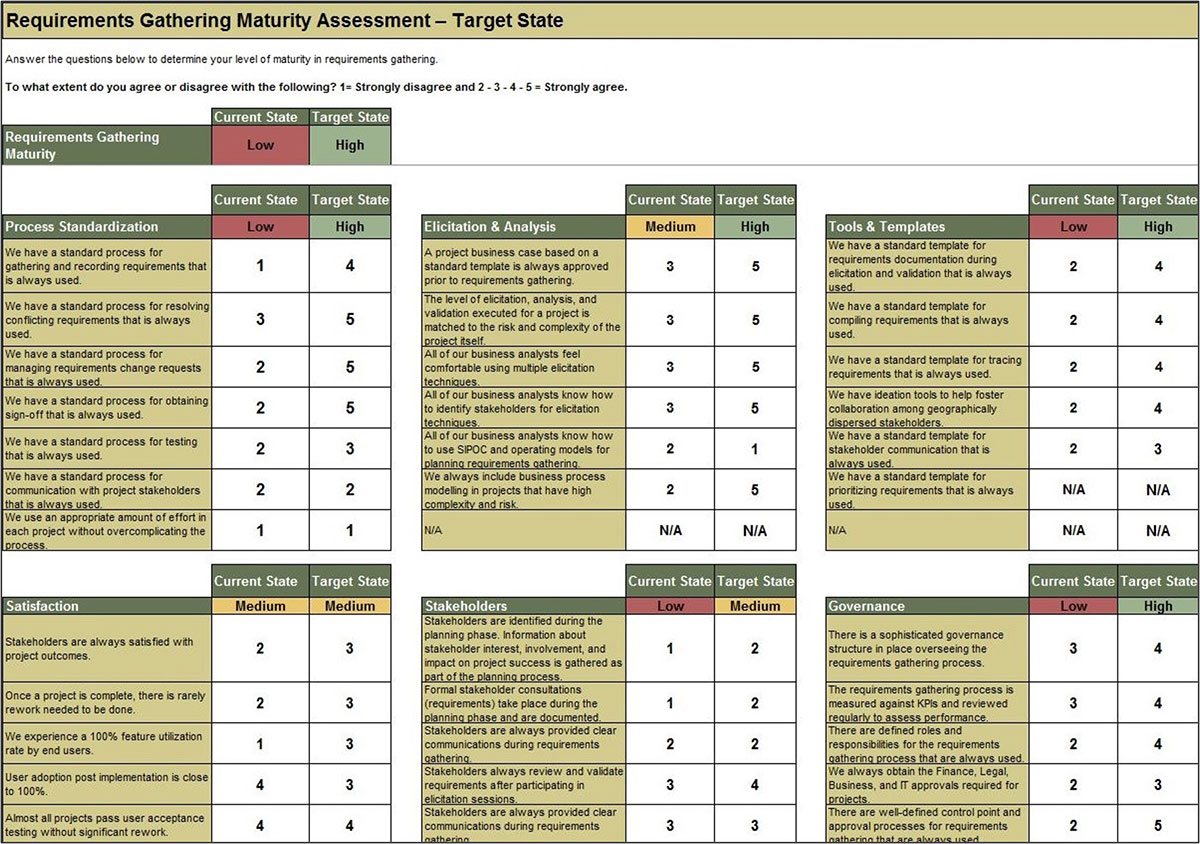

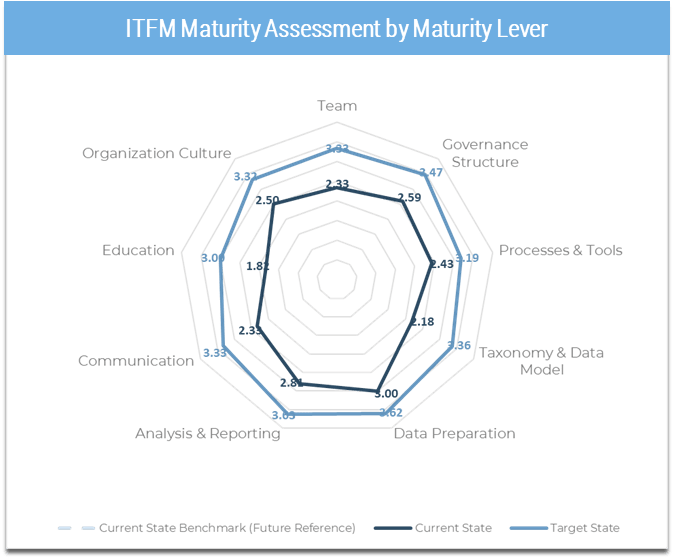

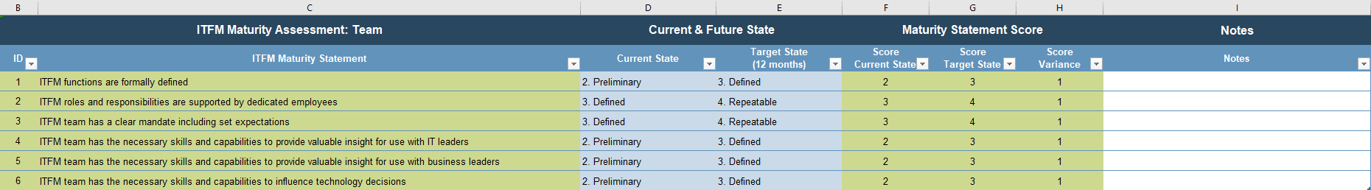

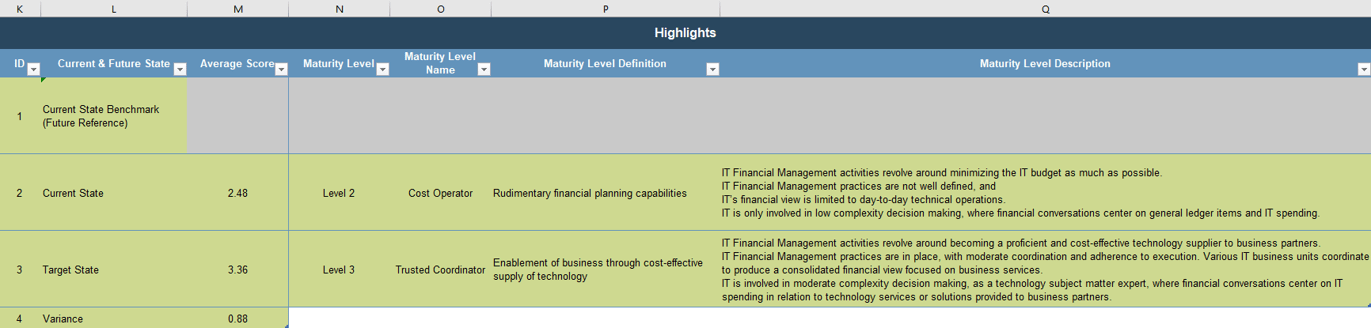

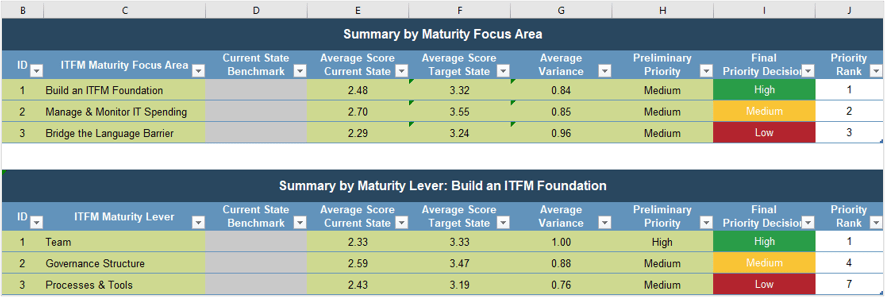

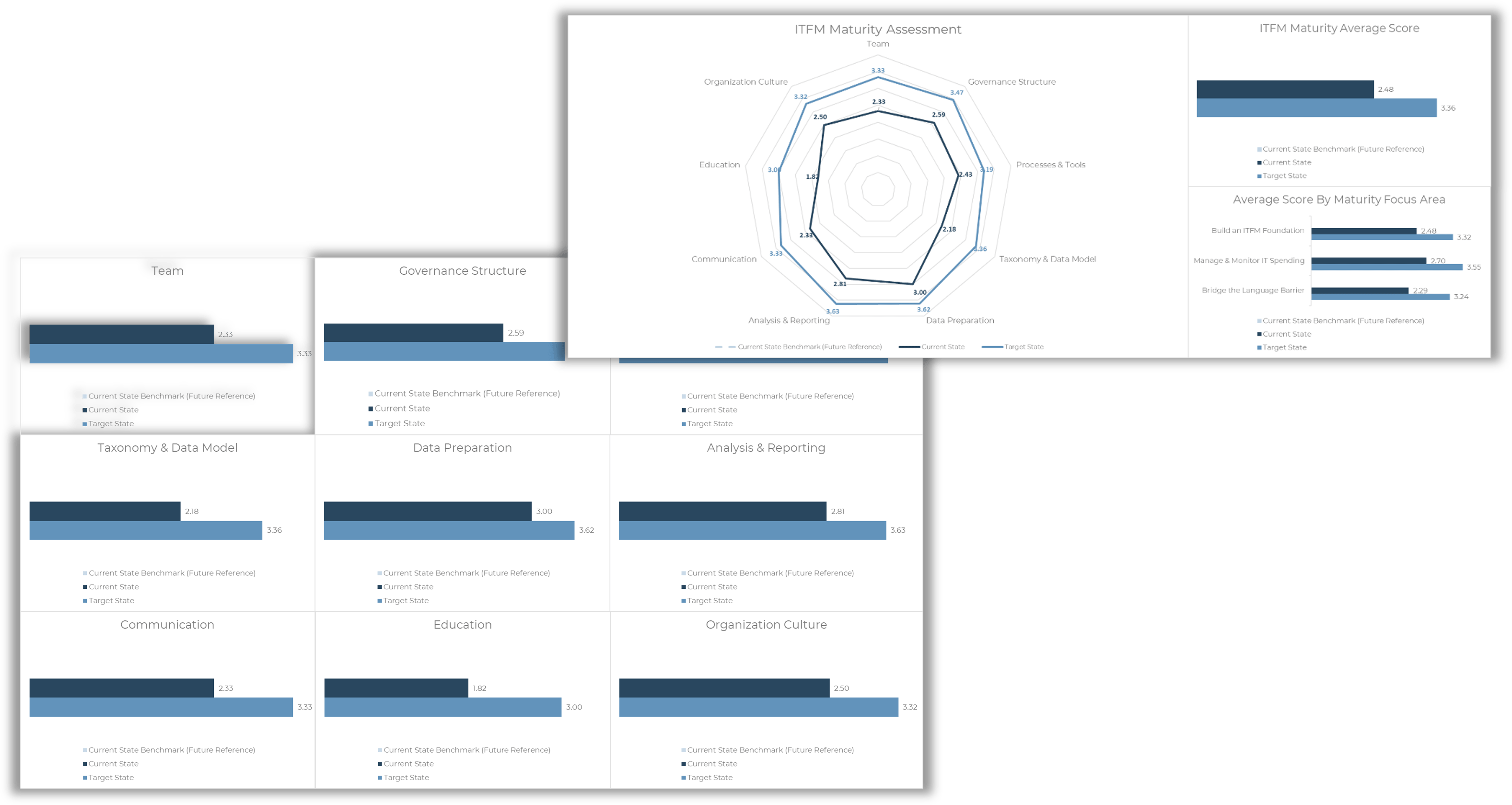

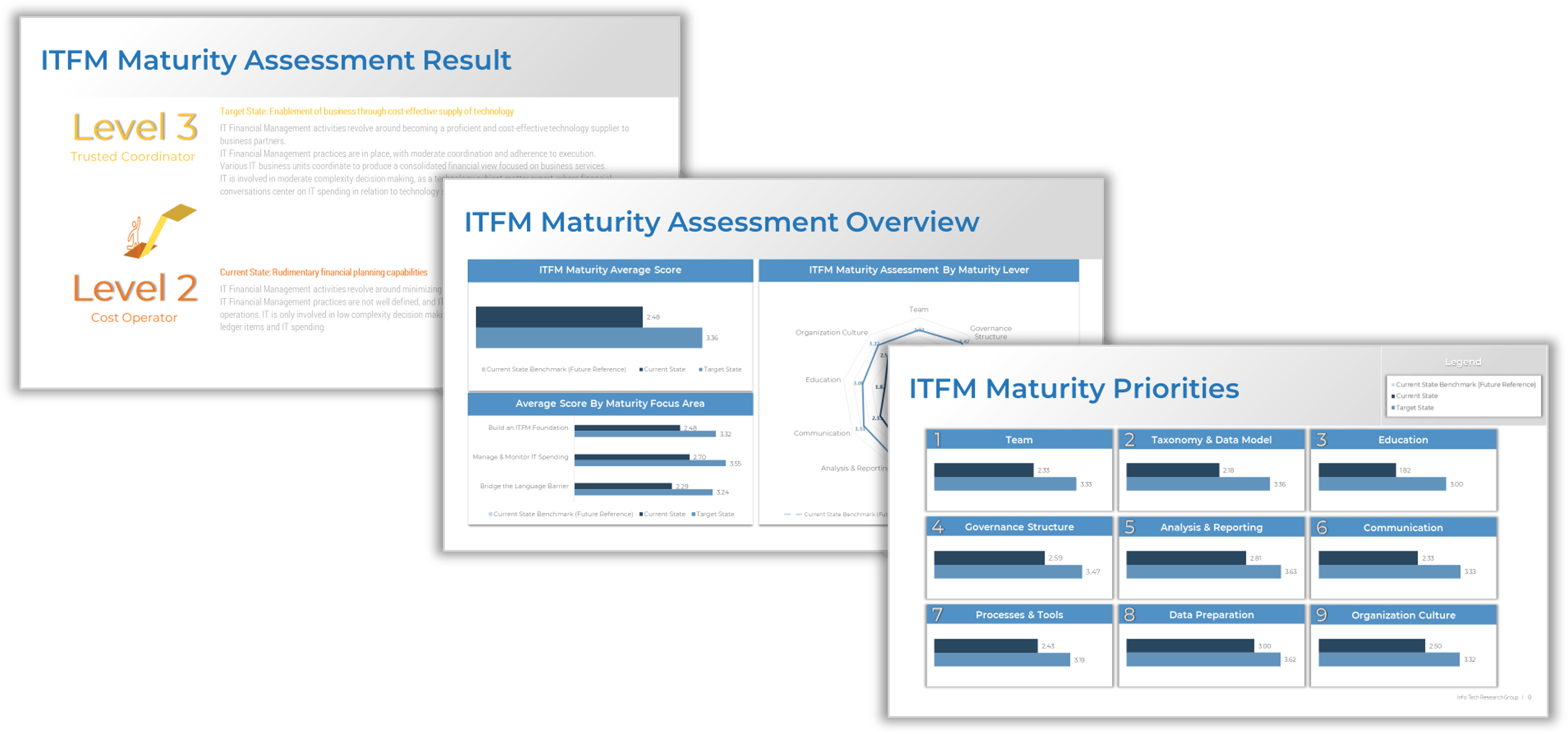

Use Info-Tech’s Requirements Gathering Maturity Assessment tool to help conduct current and target state analysis

Use the Requirements Gathering Maturity Assessment tool to help assess the maturity of your requirements gathering function in your organization, and identify the gaps between the current state and the target state. This will help focus your organization's efforts in closing the gaps that represent high-value opportunities.

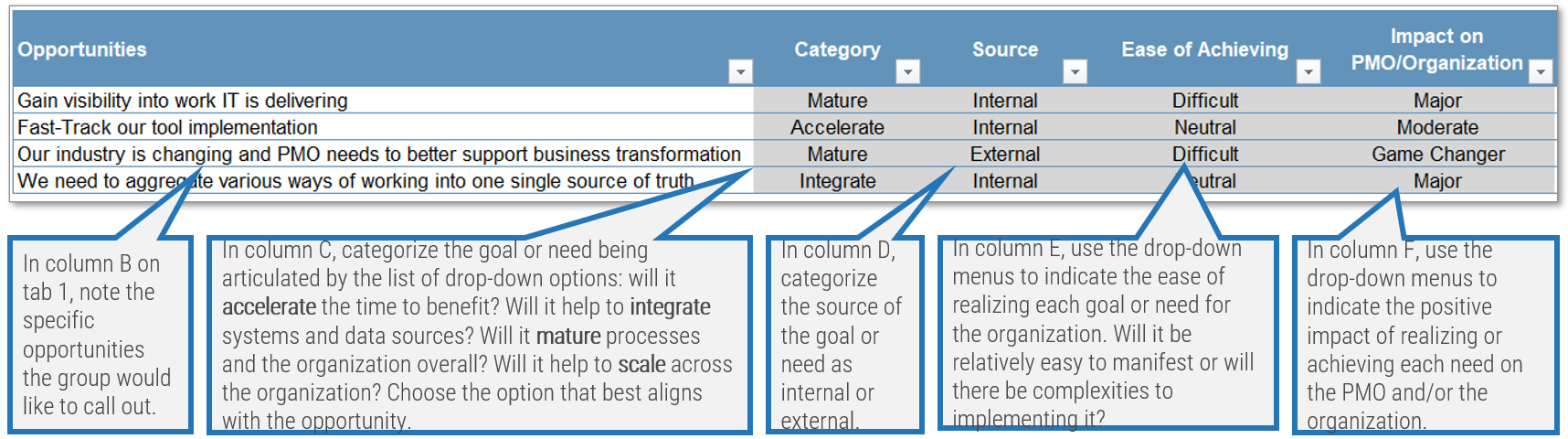

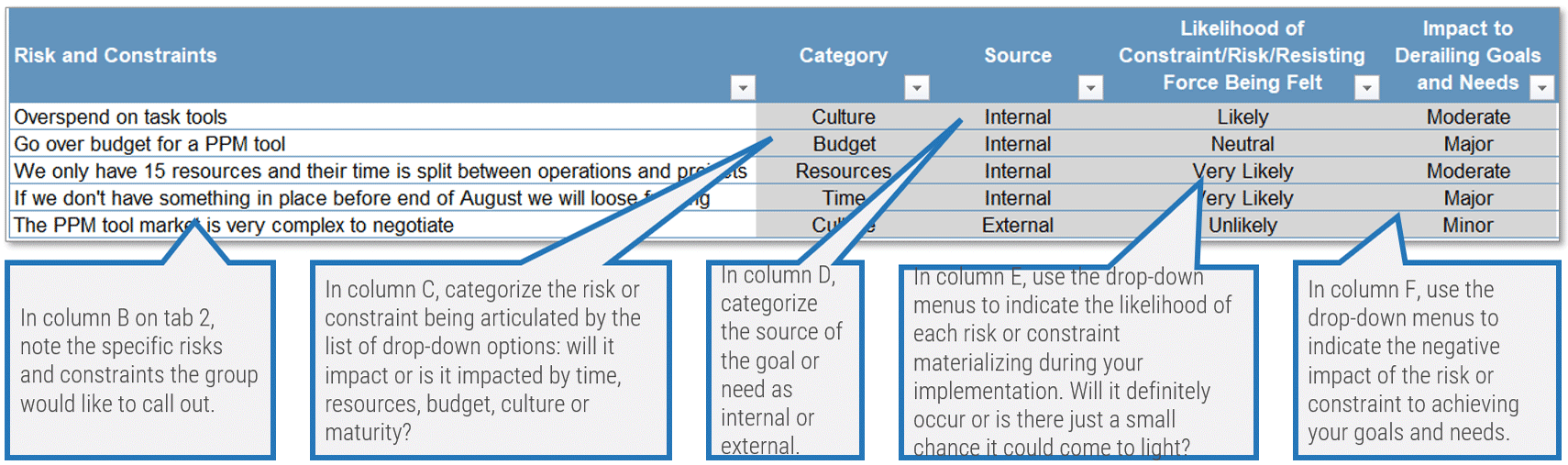

- On tab 2. Current State, use the drop-down responses to provide the answer that best matches your organization, where 1 = Strongly disagree and 5 = Strongly agree. On tab 3. Target State, answer the same questions in relation to where your organization would like to be.

- Based on your responses, tab 4. Maturity Summary will display a visual of the gap between the current and target state.
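The gap calculation behind the maturity summary can be sketched in a few lines. This is an illustration only, assuming one averaged 1-5 score per category; the category names and scores below are hypothetical, and the actual tool may compute and display the gap differently.

```python
def maturity_gaps(current, target):
    """Return (category, gap) pairs sorted from largest to smallest gap."""
    gaps = {category: target[category] - current[category] for category in current}
    return sorted(gaps.items(), key=lambda item: item[1], reverse=True)


# Hypothetical average scores per category on the 1-5 agreement scale.
current_state = {"Elicitation": 2.0, "Analysis": 3.0, "Validation": 1.5}
target_state = {"Elicitation": 4.0, "Analysis": 3.5, "Validation": 4.0}

for category, gap in maturity_gaps(current_state, target_state):
    print(f"{category}: gap of {gap}")
```

Sorting by gap size puts the categories that need the most attention first, which matches the goal of focusing effort on high-value opportunities.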

Conduct a current and target state analysis

1.2.1 – 1 hour

Complete the Requirements Gathering Maturity Assessment tool to define your target state, and identify the gaps in your current state.

Input

- Current and target state maturity rating

Output

- Requirements gathering maturity summary

Materials

Participants

- For each component of requirements gathering, write out a series of questions to evaluate your current requirements gathering practices. Use the Requirements Gathering Maturity Assessment tool to assist you in drafting questions.

- Review the questions in each category, and agree on a rating from 1 to 5 for current maturity, where 1 = Strongly disagree and 5 = Strongly agree. (Note: a score of 5 will likely be very rare in any category, even for the target state.)

- Once the assigned categories have been completed, have groups present their assessment to all, and ensure that there is consensus. Once consensus has been reached, input the information into the Current State tab of the tool to reveal the overall current state of maturity score for each category.

- Now that the current state is complete, go through each category and define the target state goals.

- Document any gaps or action items that need to be addressed.

Example: Conduct a current and target state analysis

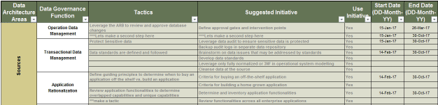

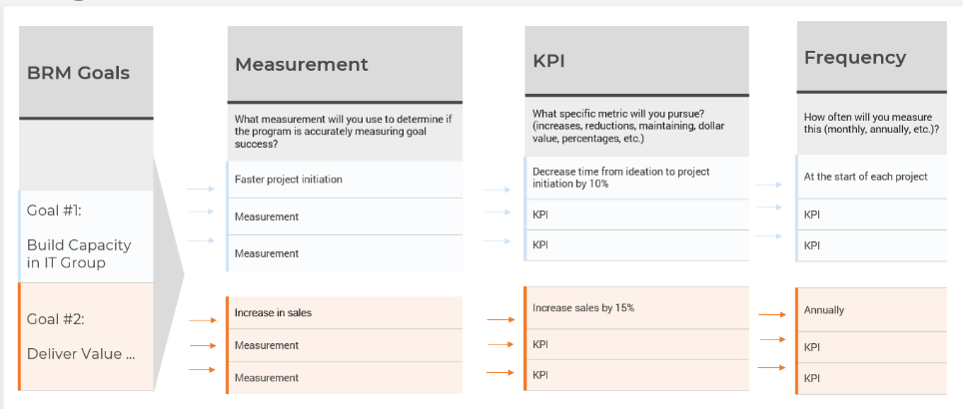

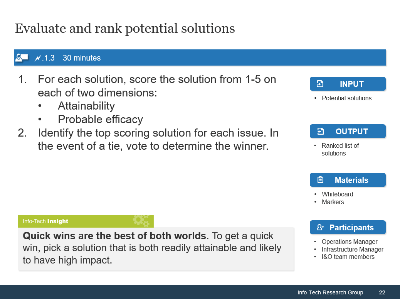

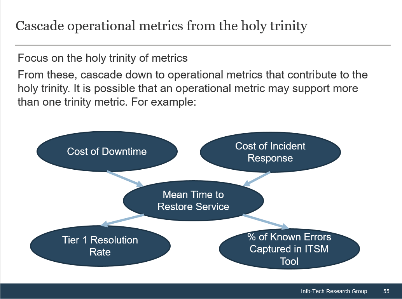

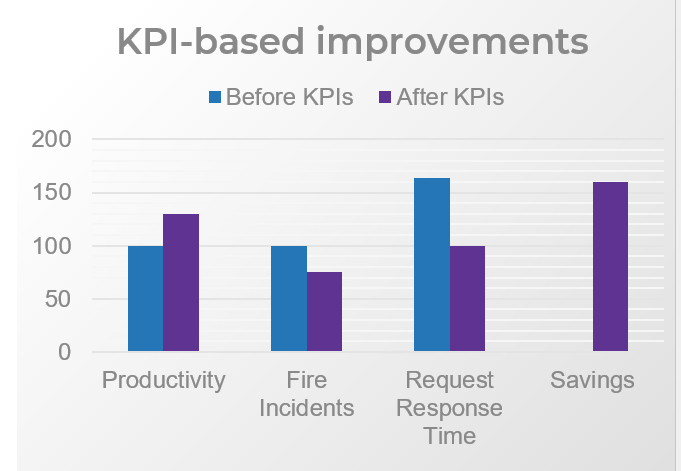

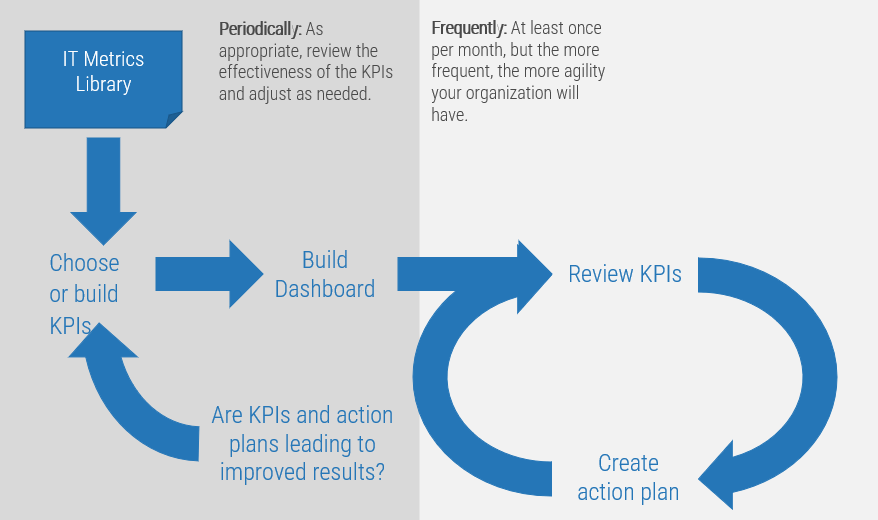

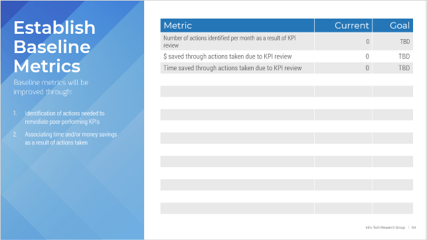

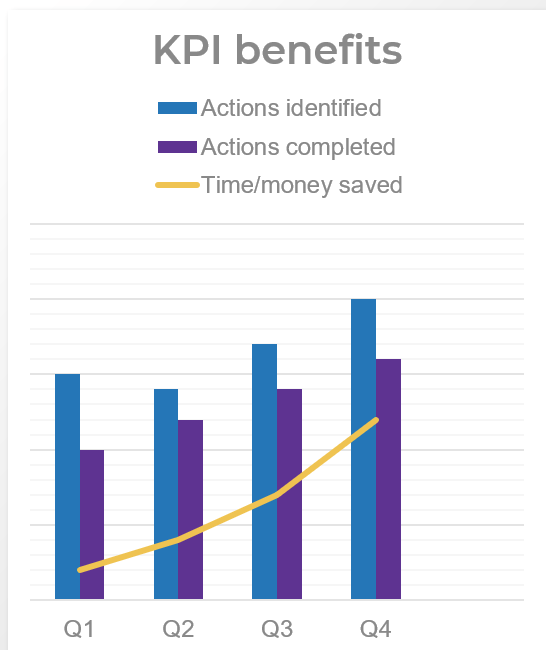

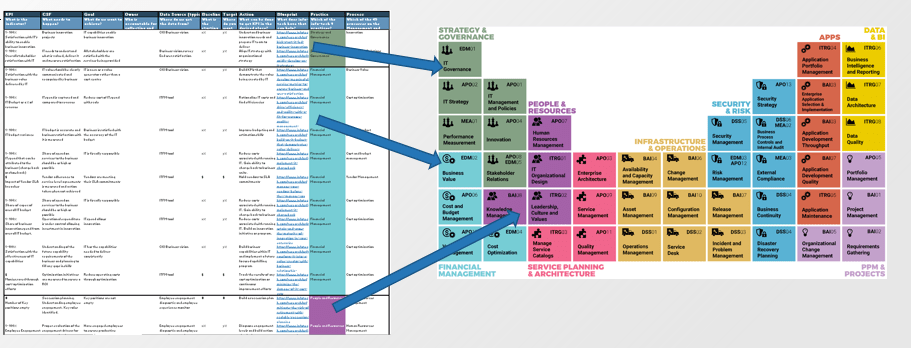

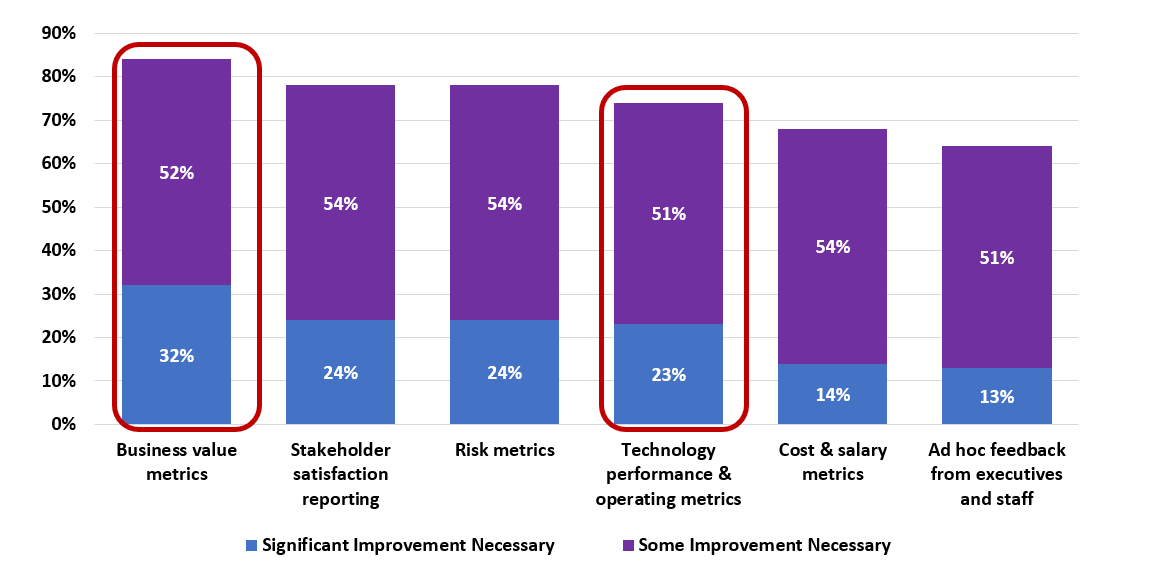

Select the project-specific KPIs that will be used to track the value of requirements gathering optimization

You need to ensure your requirements gathering procedures are having the desired effect and adjust course when necessary. Establishing an upfront list of key performance indicators that will be benchmarked and tracked is a crucial step.

- Without following up on requirements gathering by tracking project metrics and KPIs, organizations will not be able to accurately gauge if the requirements process re-engineering is having a tangible, measurable effect. They will also not be able to determine what changes (if any) need to be made to SOPs based on project performance.

- This is a crucial step that many organizations overlook. Creating a retroactive list of KPIs is inadequate, since you must benchmark pre-optimization project metrics in order to assess and isolate the value generated by reducing errors and cycle time and increasing value of deployed applications.

Establish requirements gathering performance metrics

1.2.2 – 30 minutes

Input

Output

- Target performance metrics

Materials

Participants

- Identify the following information for the last six months to one year:

- Average number of reworks to requirements.

- Number of change requests.

- Percent of feature utilization by end users.

- User adoption rate.

- Number of breaches in regulatory requirements.

- Percent of final deliverables implemented on time.

- End-user satisfaction score (if possible).

- As a group, look at each metric in turn and set your target metrics for six months to one year for each of these categories.

Document the output from this exercise in section 2.2 of the Requirements Gathering SOP and BA Playbook.
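One way to track these metrics against their pre-optimization benchmark is sketched below. The `Kpi` class, its field names, and all numbers are hypothetical; the key idea is that each metric records its baseline, its target, and whether a higher or lower value is the desired direction.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    name: str
    baseline: float          # pre-optimization benchmark
    target: float            # goal for the next six months to one year
    higher_is_better: bool   # direction of improvement for this metric

    def target_met(self, actual: float) -> bool:
        """True when the actual value reaches the target in the desired direction."""
        if self.higher_is_better:
            return actual >= self.target
        return actual <= self.target

    def improvement(self, actual: float) -> float:
        """Positive when the actual value has improved relative to the baseline."""
        delta = actual - self.baseline
        return delta if self.higher_is_better else -delta


# Hypothetical figures for two of the metrics listed above.
reworks = Kpi("Average number of reworks to requirements", 4.0, 2.0, higher_is_better=False)
adoption = Kpi("User adoption rate (%)", 60.0, 80.0, higher_is_better=True)

print(reworks.target_met(3.0), reworks.improvement(3.0))      # False 1.0
print(adoption.target_met(85.0), adoption.improvement(85.0))  # True 25.0
```

Recording the baseline up front is what makes the later comparison possible; a retroactive list of KPIs would have no benchmark to measure against.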

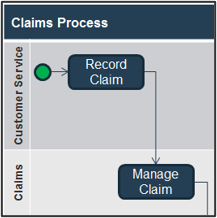

Visualize your current and target state process for requirements gathering with a business process model

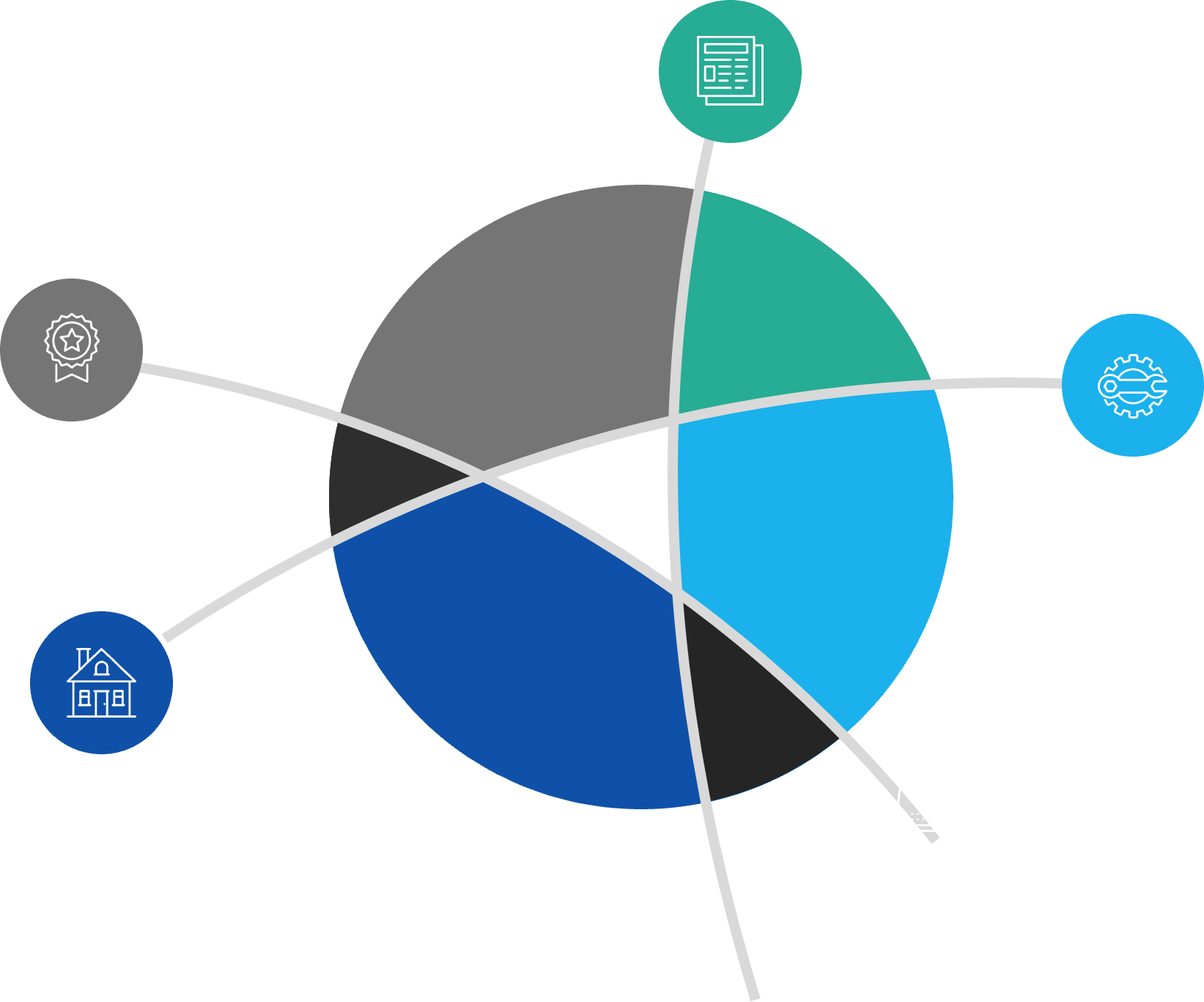

A business process model (BPM) is a simplified depiction of a complex process. These visual representations allow all types of stakeholders to quickly understand a process and how it affects them, and enable more effective decision making. Consider these areas for your model:

Stakeholder Analysis

- Identify who the right stakeholders are

- Plan communication

- Document stakeholder responsibilities in a RACI

Elicitation Techniques

- Get the right information from stakeholders

- Document it in the appropriate format

- Define business need

- Enterprise analysis

Documentation

- How are outputs built?

- Process flows

- Use cases

- Business rules

- Traceability matrix

- System requirements

Validation & Traceability

- Make sure requirements are accurate and complete

- Trace business needs to requirements

Managing Requirements

- Organizing and prioritizing

- Gap analysis

- Managing scope

- Communicating

- Managing changes

Supporting Tools

- Templates to standardize

- Checklists

- Software to automate the process

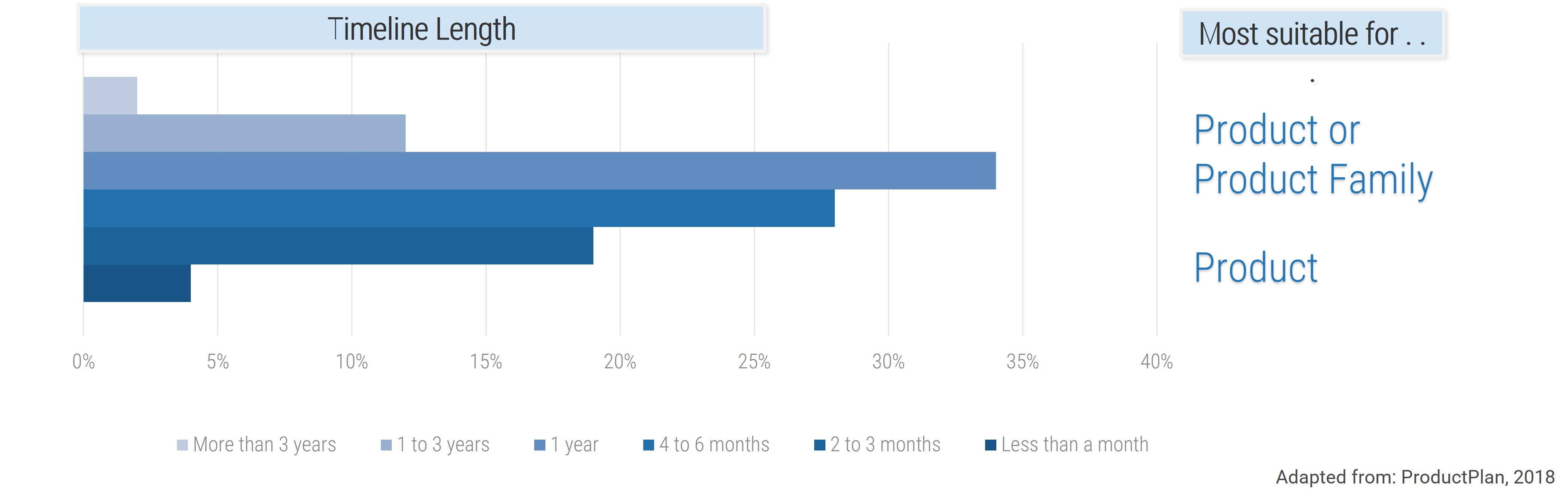

Your requirements gathering process will vary based on the project level

It’s important to determine the project levels up front, as each project level will have a specific degree of elicitation, analysis, and validation that will need to be completed. That being said, not all organizations will have four levels.

Level 4

- Very high risk and complexity.

- Projects that result in a transformative change in the way you do business. Level 4 projects affect all lines of business, multiple technology areas, and have significant costs and/or risks.

- Example: Implement ERP

Level 3

- High risk and complexity.

- Projects that affect multiple lines of business and have significant costs and/or risks.

- Example: Implement CRM

Level 2

- Medium risk and complexity.

- Projects with broader exposure to the business that present a moderate level of risk to business operations.

- Example: Deploy Office 365

Level 1

- Low risk and complexity.

- Routine/straightforward projects with limited exposure to the business and low risk of negative business impact.

- Example: SharePoint Update

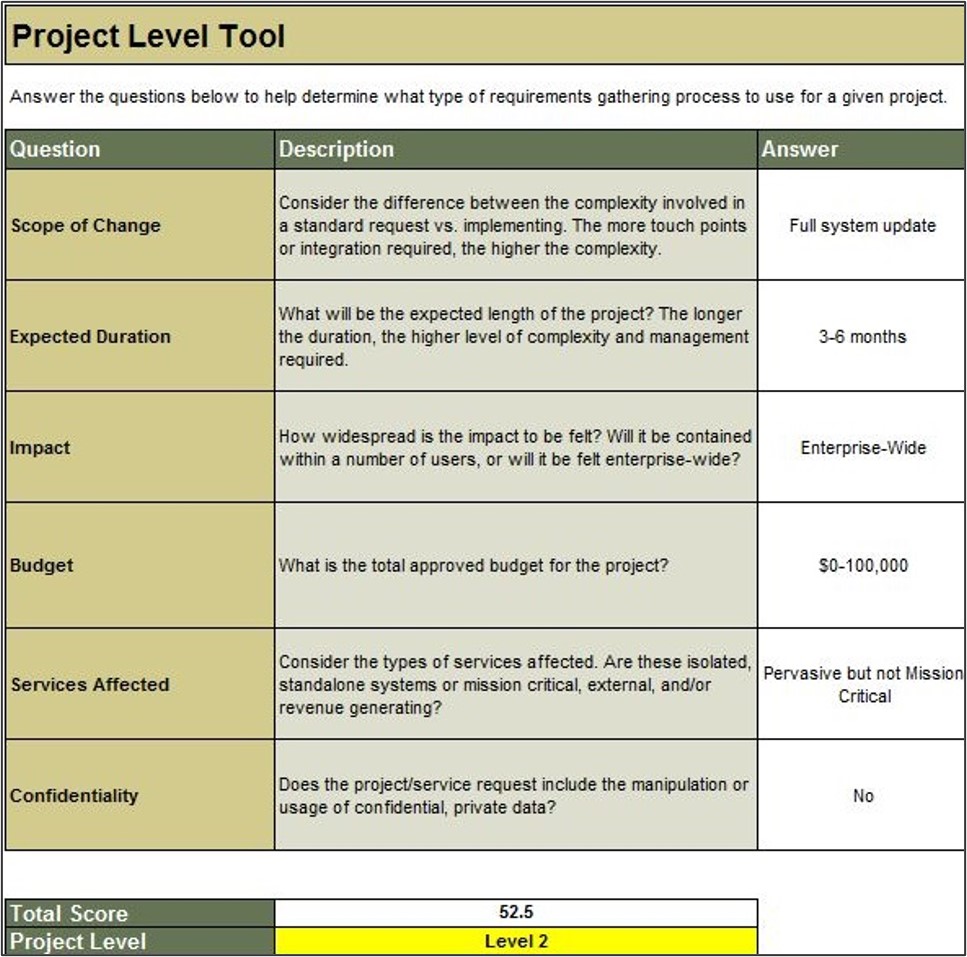

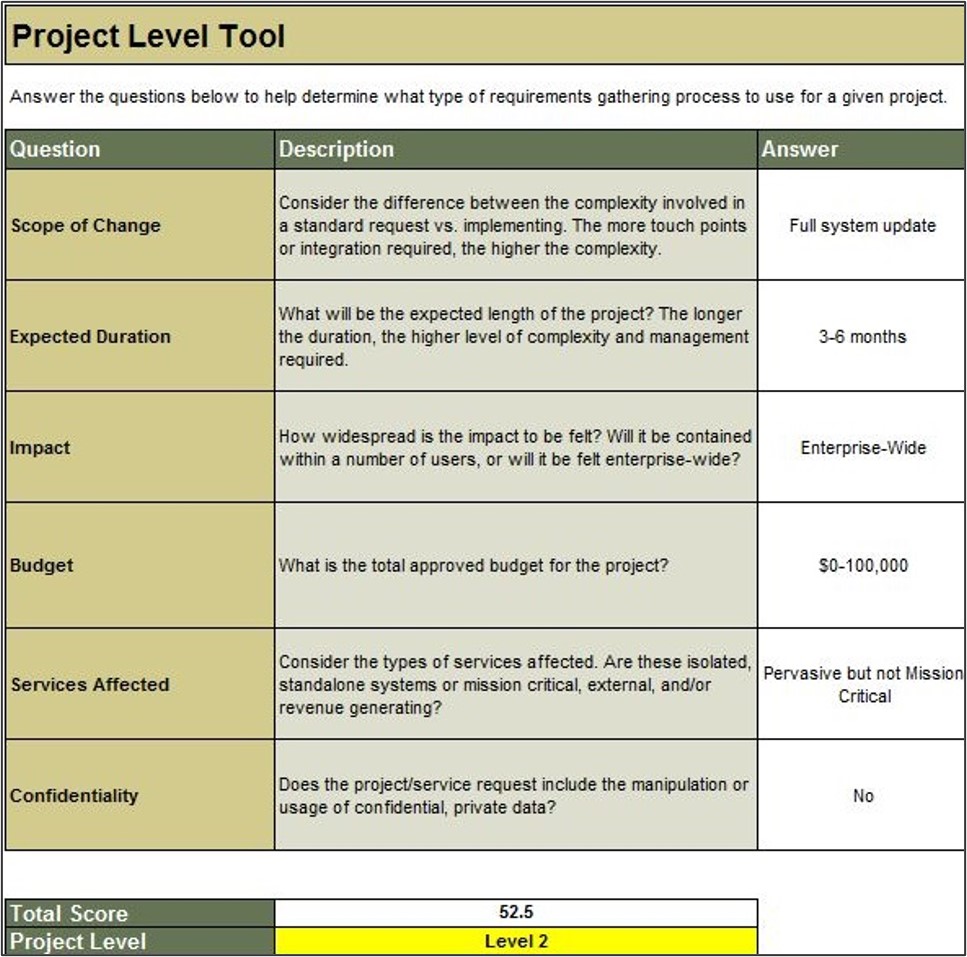

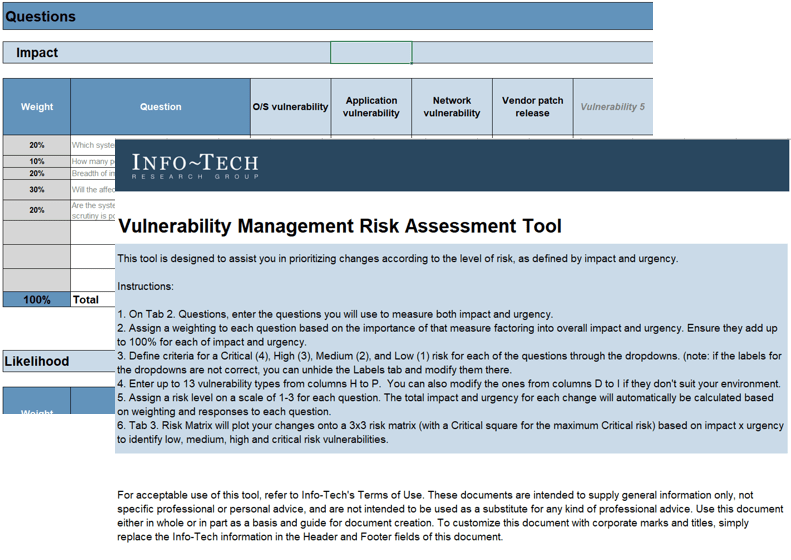

Use Info-Tech’s Project Level Selection Tool to classify your project level and complexity

1.3 Project Level Selection Tool

The Project Level Selection Tool will classify your projects into four levels, enabling you to evaluate the risk and complexity of a particular project and match it with an appropriate requirements gathering process.

Project Level Input

- Consider the weighting criteria for each question and make any needed adjustments to better reflect how your organization values each criterion.

- Review the option levels 1-4 for each of the six questions, and make any modifications necessary to better suit your organization.

- Review the points assigned to each of the four buckets for each of the six questions, and make any modifications needed.

Project Level Selection

- Use this tab to evaluate the project level of each new project.

- To do so, answer each of the questions in the tool.

Define project levels – Level 1/2/3/4

1.2.3 – 1 hour

Input

- Project level assessment criteria

Output

- Identification of project levels

Materials

Participants

Define the project levels to determine the appropriate requirements gathering process for each.

- Begin by asking participants to review the six criteria for assessing project levels as identified in the Project Level Selection Tool. Have participants review the list and ensure agreement around the factors. Create a chart on the board using Level 1, Level 2, Level 3, and Level 4 as column headings.

- Create a row for each of the chosen factors. Begin by filling in the chart with criteria for a level 4 project: What constitutes a level 4 project according to these six factors?

- Repeat the exercise for Level 3, Level 2, and Level 1. When complete, you should have a chart that defines the four project levels at your organization.

- Input this information into the tool, and ask participants to review the weighting factors and point allocations and make modifications where necessary.

- Input the details from one of the projects participants had selected prior to the workshop beginning and determine its project level. Discuss whether this level is accurate, and make any changes needed.

Document the output from this exercise in section 2.3 of the Requirements Gathering SOP and BA Playbook.

Define project levels

1.2.3 – 1 hour

| Category | Level 4 | Level 3 | Level 2 | Level 1 |
| --- | --- | --- | --- | --- |
| Scope of Change | Full system update | Full system update | Multiple modules | Minor change |
| Expected Duration | 12 months + | 6 months + | 3-6 months | 0-3 months |
| Impact | Enterprise-wide, globally dispersed | Enterprise-wide | Department-wide | Low users/single division |
| Budget | $1,000,000+ | $500,000-1,000,000 | $100,000-500,000 | $0-100,000 |
| Services Affected | Mission critical, revenue impacting | Mission critical, revenue impacting | Pervasive but not mission critical | Isolated, non-essential |
| Confidentiality | Yes | Yes | No | No |

Define project levels

1.2.3 – 1 hour

The tool comprises six questions, each of which is linked to at least one type of project risk.

Using the answers provided, the tool will calculate a level for each risk category. Overall project level is a weighted average of the individual risk levels, based on the importance weighting of each type of risk set by the project manager.

This tool is an excerpt from Info-Tech’s exhaustive Project Level Assessment Tool.
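The weighted-average calculation described above can be sketched as follows. This is an illustration under stated assumptions, not the tool's actual logic: the risk category names, the weights, and the rule for rounding the average to a whole level (round half up, clamped to 1-4) are all hypothetical.

```python
def overall_project_level(risk_levels, weights):
    """Weighted average of per-risk levels, rounded to a whole level from 1 to 4."""
    total_weight = sum(weights[name] for name in risk_levels)
    if total_weight <= 0:
        raise ValueError("Weights must sum to a positive number")
    weighted_average = (
        sum(risk_levels[name] * weights[name] for name in risk_levels) / total_weight
    )
    # Assumed rounding rule: round half up, then clamp to the 1-4 range.
    return min(4, max(1, int(weighted_average + 0.5)))


# Hypothetical risk categories, each already scored as a level from 1 to 4.
risk_levels = {"financial": 3, "operational": 4, "technical": 2}
# Hypothetical importance weightings set by the project manager.
weights = {"financial": 2, "operational": 3, "technical": 1}

print(overall_project_level(risk_levels, weights))  # 3
```

Here the weighted average is (3×2 + 4×3 + 2×1) / 6 ≈ 3.33, which rounds to Level 3. Raising the weight on operational risk would pull the result toward Level 4.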

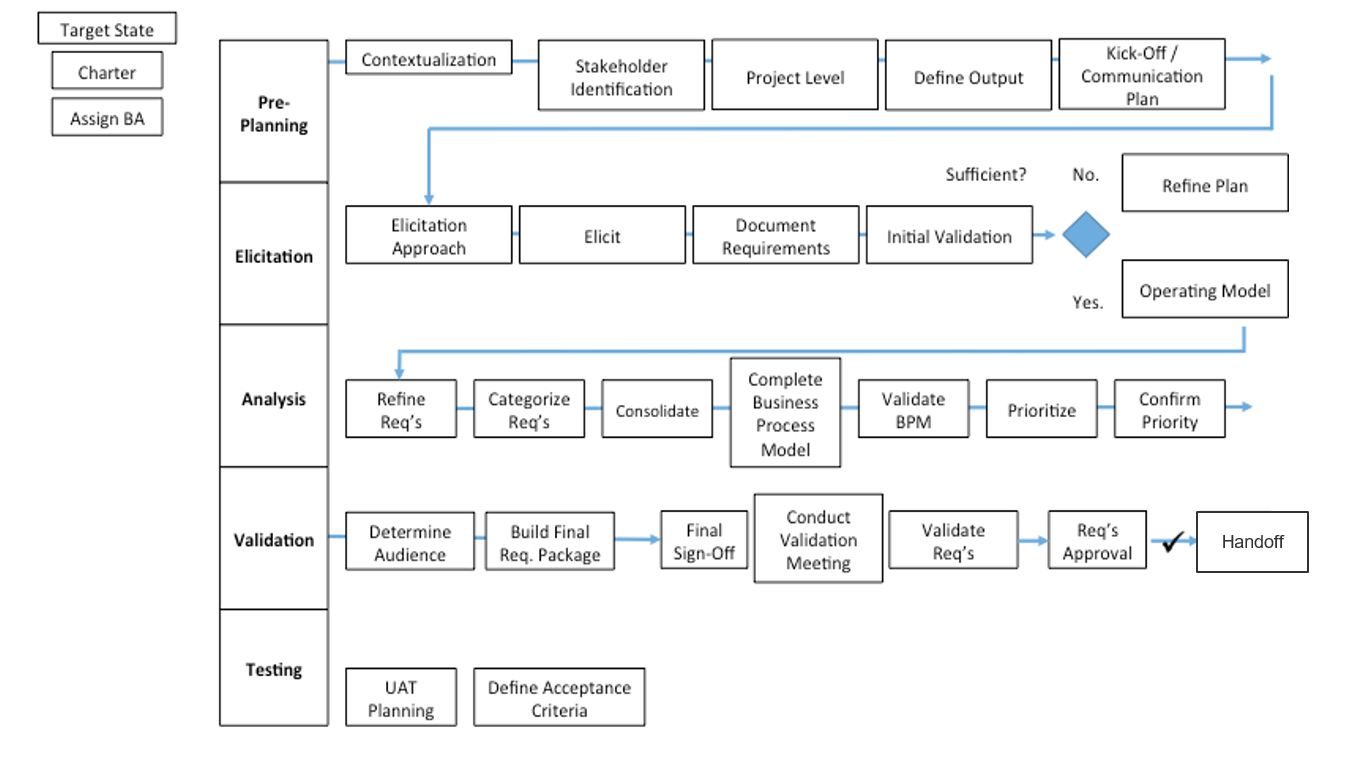

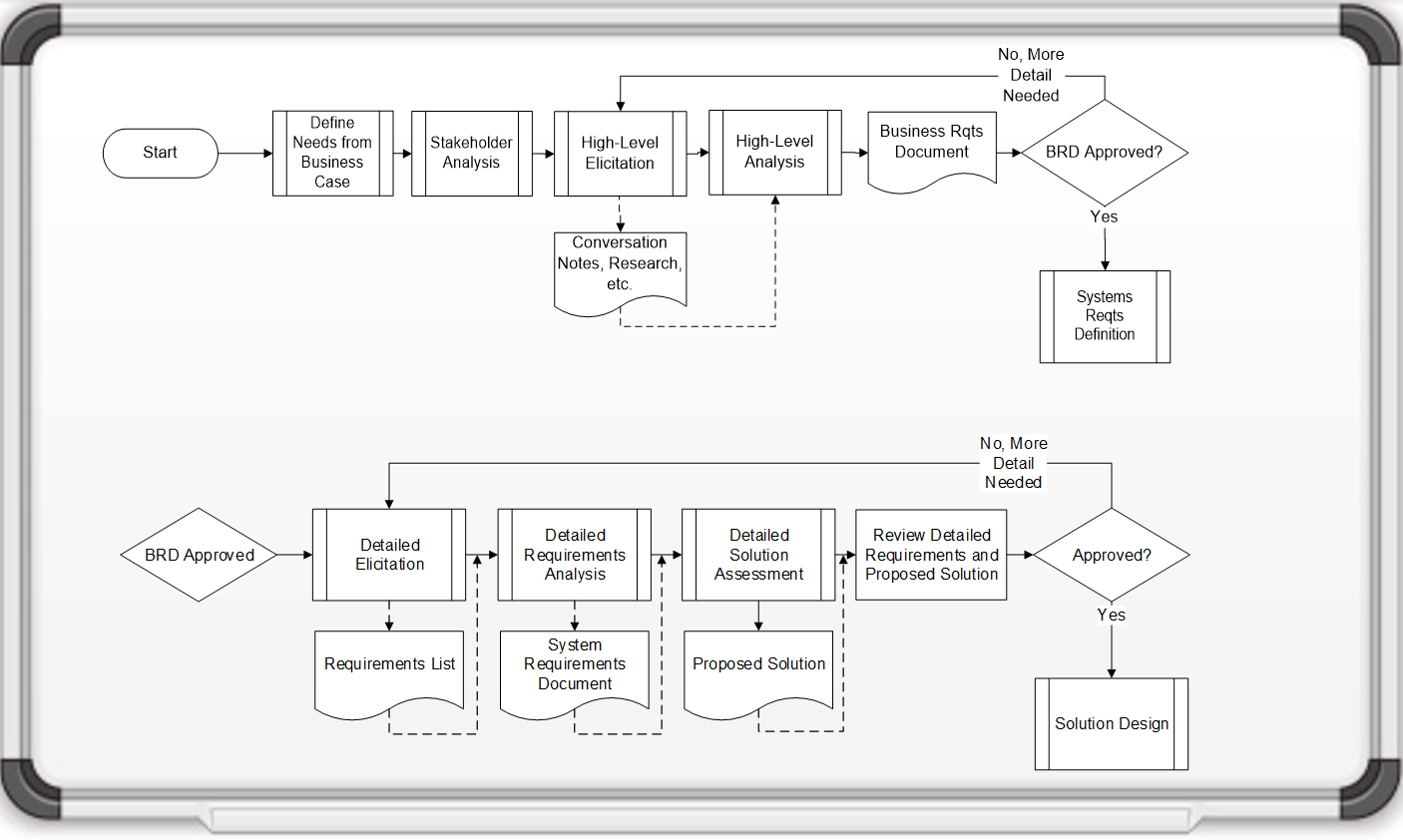

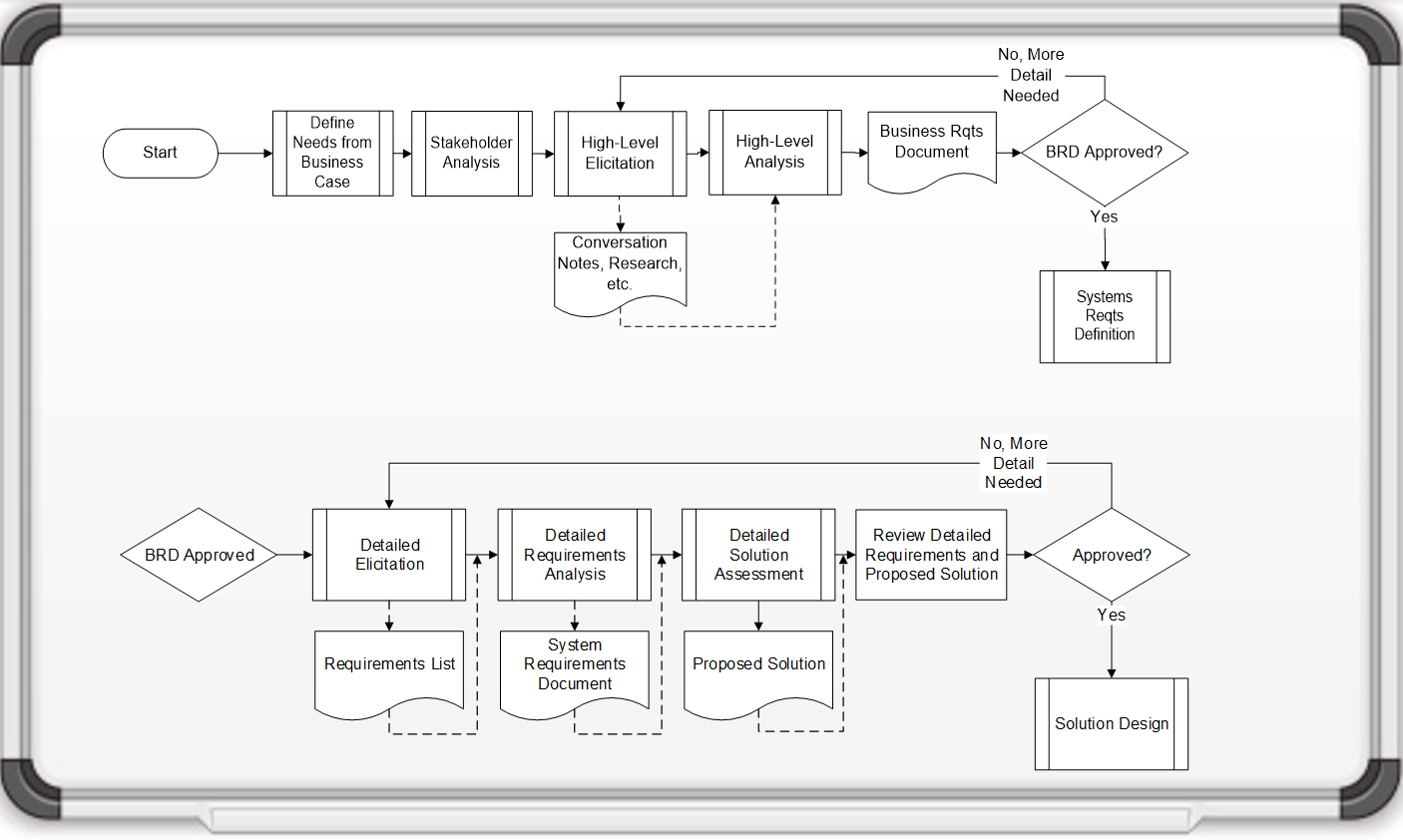

Build your initial requirements gathering business process models: create different models based on project complexity

1.2.4 – 30 minutes

Input

- Current requirements gathering process flow

Output

- Requirements gathering business process model

Materials

Participants

Brainstorm the ideal target business process flows for your requirements gathering process (by project level).

- As a group, create a process flow on the whiteboard that covers the entire requirements gathering lifecycle, incorporating the feedback from exercise 1.2.1. Draw the process with input from the entire group.

- After the process flow is complete, compare it to the best practice process flow on the following slide. You may want to create different process flows based on project level (i.e. a process model for Level 1 and 2 requirements gathering, and a process model for how to collect requirements for Level 3 and 4). As you work through the blueprint, revisit and refine these models – this is the initial brainstorming!

Document the output from this exercise in section 2.4 of the Requirements Gathering SOP and BA Playbook.

Example: requirements gathering business process model

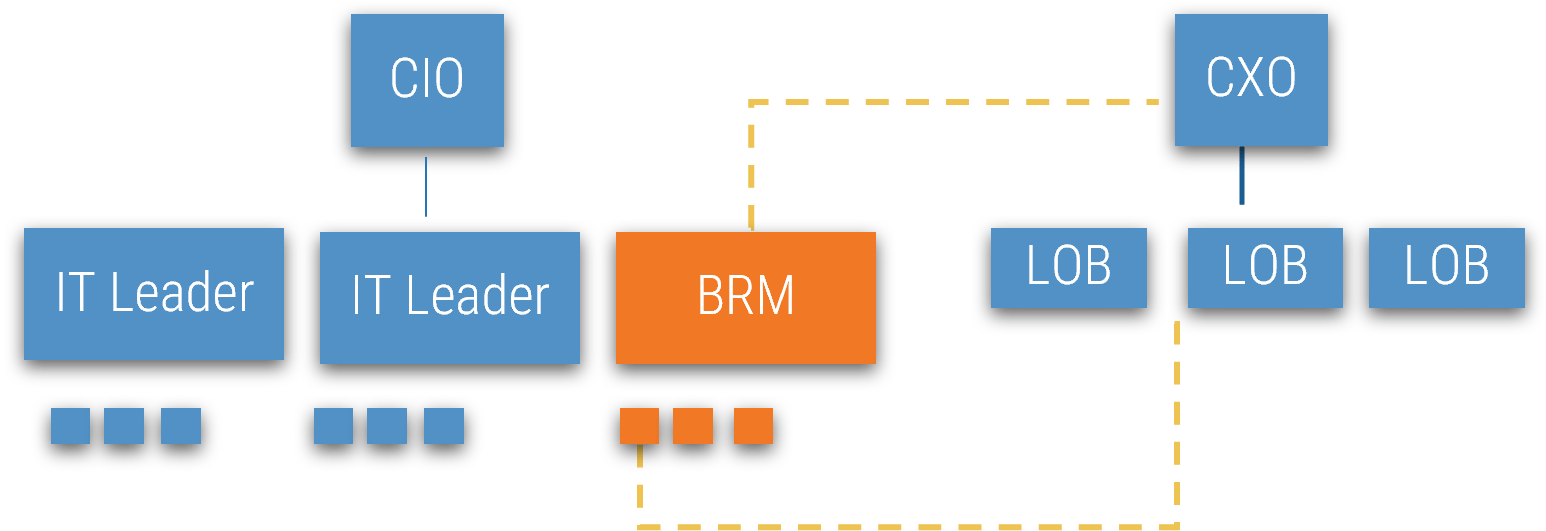

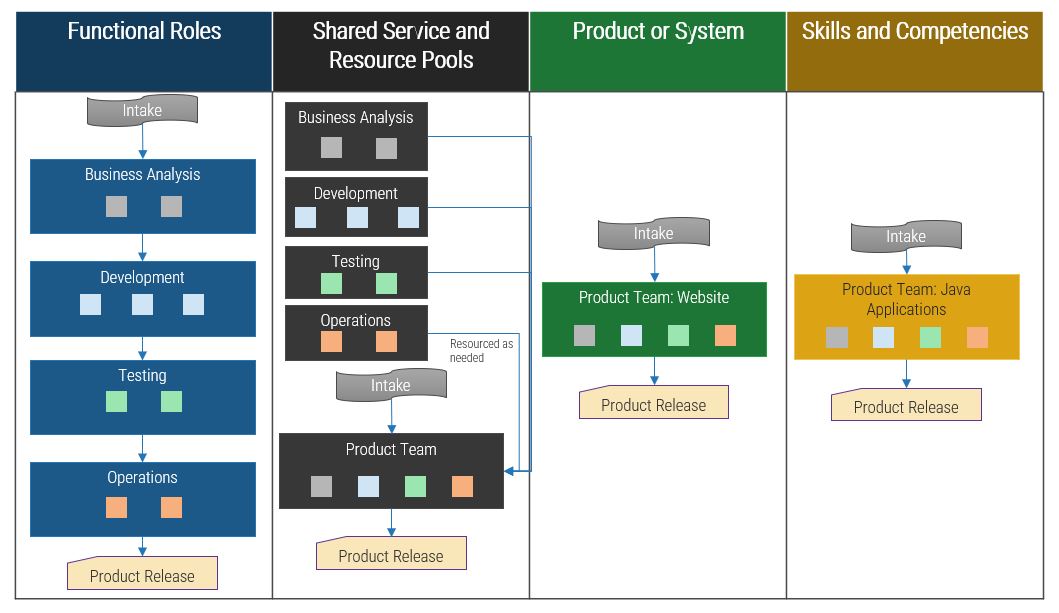

Develop your BA team to accelerate collecting, analyzing, and translating requirements

Having an SOP is important, but it should be the basis for training the people who will actually execute the requirements gathering process. Your BA team is critical for requirements gathering – they need to know the SOPs in detail, and you need to have a plan for recruiting those with an excellent skill set.

- The designated BA(s) for the project have responsibility for end-to-end requirements management – they are responsible for executing the SOPs outlined in this blueprint, including elicitation, analysis, and validation of requirements during the project.

- Designated BAs must work collaboratively with their counterparts in the business and IT (e.g. developer teams or procurement professionals) to ensure that the approved requirements are met in a timely and cost-effective manner.

The ideal candidates for requirements gathering are technically savvy analysts (but not necessarily computer science majors) from the business who are already fluent with the business’ language and cognizant of the day-to-day challenges that take place. Organizationally, these BAs should be in a group that bridges IT and the business (such as an RGCOE or PMO) and be specialists rather than generalists in the requirements management space.

A BA resourcing strategy is included in the SOP. Customize it to suit your needs.

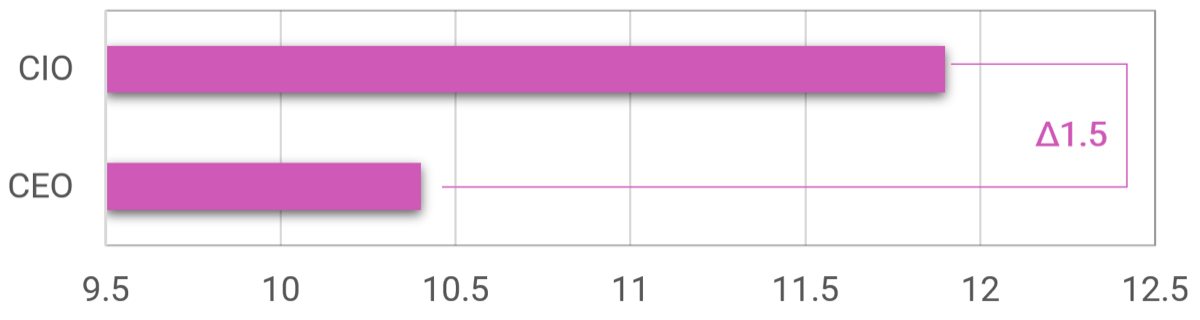

"Make sure your people understand the business they are trying to provide the solution for as well if not better than the business folks themselves." – Ken Piddington, CIO, MRE Consulting

Use Info-Tech’s Business Requirements Analyst job description template for sourcing the right talent

1.4 Business Requirements Analyst

If you don’t have a trained group of in-house BAs who can execute your requirements gathering process, consider sourcing the talent from internal candidates or calling for qualified applicants. Our Business Requirements Analyst job description template can help you quickly get the word out.

- Sometimes, you will have a dedicated set of BAs, and sometimes you won’t. In the latter case, the template covers:

- Job Title

- Description of Role

- Responsibilities

- Target Job Skills

- Target Job Qualifications

- The template is primarily designed for external hiring, but can also be used to find qualified internal candidates.

Info-Tech Deliverable

Download the Business Requirements Analyst job description template.

Standardizing process begins with establishing expectations

CASE STUDY

Industry Government

Source Info-Tech Workshop

Challenge

A mid-sized US municipality was challenged with managing stakeholder expectations for projects, including the collection and analysis of business requirements.

The lack of a consistent approach to requirements gathering was causing the IT department to lose credibility with department level executives, impacting the ability of the team to engage project stakeholders in defining project needs.

Solution

The City contracted Info-Tech to help build an SOP to govern and train all BAs on a consistent requirements gathering process.

The teams first set about establishing a consistent approach to defining project levels, defining six questions to be asked for each project. This framework would be used to assess the complexity, risk, and scope of each project, thereby defining the appropriate level of rigor and documentation required for each initiative.

Results

Once the project levels were defined, the team established a formalized set of steps, tools, and artifacts to be created for each phase of the project. These tools helped the team present a consistent approach to each project to the stakeholders, helping improve credibility and engagement for eliciting requirements.

The project level should set the level of control

Choose a level of control that facilitates success without slowing progress.

| No control | Right-sized control | Over-engineered control |
| --- | --- | --- |
| Final deliverable may not satisfy business or user requirements. | Control points and communication are set at appropriate stage-gates to allow for deliverables to be evaluated and assessed before proceeding to the next phase. | Excessive controls can result in too much time spent on stage-gates and approvals, which creates delays in the schedule and causes milestones to be missed. |

Info-Tech Insight

Throughout the requirements gathering process, you need checks and balances to ensure that the projects are going according to plan. Now that we know our stakeholder, elicitation, and prioritization processes, we will set up the control points for each project level.

Plan your communication with stakeholders

Determine how you want to receive and distribute messages to stakeholders.

| Communication Milestones | Audience | Artifact | Final Goal |
| --- | --- | --- | --- |
| Project Initiation | Project Sponsor | Project Charter | Communicate Goals and Scope of Project |
| Elicitation Scheduling | Selected Stakeholders (SMEs, Power Users) | Proposed Solution | Schedule Elicitation Sessions |
| Elicitation Follow-Up | Selected Stakeholders | Elicitation Notes | Confirm Accuracy of Notes |
| First Pass Validation | Selected Stakeholders | Consolidated Requirements | Validate Aggregated Requirements |
| Second Pass Validation | Selected Stakeholders | Prioritized Requirements | Validate Requirements Priority |
| Eliminated Requirements | Affected Stakeholders | Out of Scope Requirements | Affected Stakeholders Understand Impact of Eliminated Requirements |
| Solution Selection | High Authority/Expertise Stakeholders | Modeled Solutions | Select Solution |
| Selected Solution | High Authority/Expertise Stakeholders and Project Sponsor | Requirements Package | Communicate Solution |
| Requirements Sign-Off | Project Sponsor | Requirements Package | Obtain Sign-Off |

Setting control points – approvals and sign-offs

# – Control Point: A decision requiring specific approval or sign-off from defined stakeholders involved with the project. Control points result in accepted or rejected deliverables/documents.

A – Plan Approval: This control point requires a review of the requirements gathering plan, stakeholders, and elicitation techniques.

B – Requirements Validation: This control point requires a review of the requirements documentation that indicates project and product requirements.

C – Prioritization Sign-Off: This requires sign-off from the business and/or user groups. This might be sign-off to approve a document, prioritization, or confirm that testing is complete.

D – IT or Peer Sign-Off: This requires sign-off from IT to approve technical requirements or confirm that IT is ready to accept a change.
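Matching control points to project levels amounts to a lookup from level to the set of required sign-offs. The sketch below uses the four control points defined above, but the level-to-control-point mapping is purely hypothetical; each organization should decide its own mapping in exercise 1.2.5.

```python
# Control points A-D as defined above.
CONTROL_POINTS = {
    "A": "Plan Approval",
    "B": "Requirements Validation",
    "C": "Prioritization Sign-Off",
    "D": "IT or Peer Sign-Off",
}

# Hypothetical mapping: higher project levels require more control points.
CONTROL_POINTS_BY_LEVEL = {
    1: ["B"],
    2: ["A", "B"],
    3: ["A", "B", "C"],
    4: ["A", "B", "C", "D"],
}


def required_control_points(level):
    """Return the names of the control points required for a project level."""
    if level not in CONTROL_POINTS_BY_LEVEL:
        raise ValueError(f"Unknown project level: {level}")
    return [CONTROL_POINTS[code] for code in CONTROL_POINTS_BY_LEVEL[level]]


print(required_control_points(2))  # ['Plan Approval', 'Requirements Validation']
```

Keeping the mapping in one place makes it easy to check that low-level projects are not over-engineered and high-level projects are not under-controlled.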

Match control points to project level and identify these in your requirements business process models

1.2.5 – 45 minutes

Input

- Activity 1.2.4 business process diagram

Output

Materials

- Whiteboard

- Markers

- Sticky notes

Participants

- Business stakeholders

- BAs

Define all of the key control points, required documentation, and involved stakeholders.

- On the board, post the initial business process diagram built in exercise 1.2.4. Have participants suggest appropriate control points. Write the control point number on a sticky note and place it where the control point should be.

- Now that we have identified the control points, consider each control point and define who will be involved in each one, who provides the approval to move forward, the documentation required, and the overall goal.

Document the output from this exercise in section 6.1 of the Requirements Gathering SOP and BA Playbook.

A savvy BA should clarify and confirm project scope prior to embarking on requirements elicitation

Before commencing requirements gathering, it’s critical that your practitioners have a clear understanding of the initial business case and rationale for the project that they’re supporting. This is vital for providing the business context that elicitation activities must be geared towards.

- Prior to commencing the requirements gathering phase, the designated BA should obtain a clear statement of scope or initial project charter from the project sponsor. It’s also advisable for the BA to have an in-person meeting with the project sponsor(s) to understand the overarching strategic or tactical impetus for the project. This initial meeting should be less about eliciting requirements and more about understanding why the project is moving forward, and the business processes it seeks to enable or re-engineer (the target state).

- During this meeting, the BA should seek to develop a clear understanding of the strategic rationale for why the project is being undertaken (the anticipated business benefits) and why it is being undertaken at this time. If the sponsor has any business process models they can share, this would be a good time to review them.

During requirements gathering, BAs should steer clear of solutions and focus on capturing requirements. Focus on traceable, hierarchical, and testable requirements. Focusing on solution design means you are out of requirements mode.

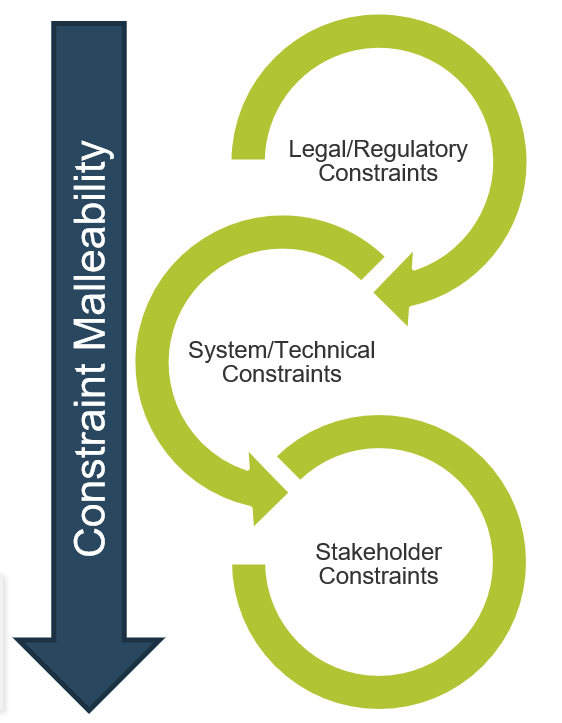

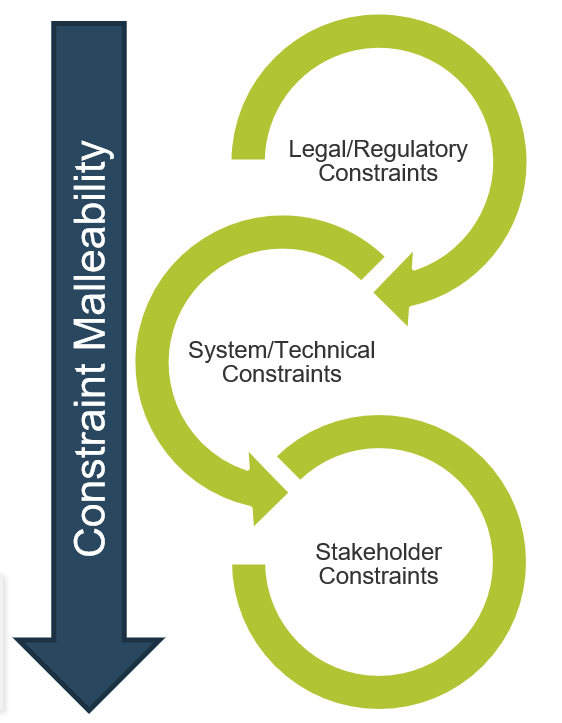

Identify constraints early and often, and ensure that they are adequately communicated to project sponsors and end users

Constraints come in many forms (e.g. financial, regulatory, and technological). Identifying these constraints prior to entering requirements gathering enables you to remain alert; you can separate what is possible from what is impossible, and set stakeholder expectations accordingly.

- Most organizations don’t inventory their constraints until after they’ve gathered requirements. This is dangerous, as clients may inadvertently signal to end users or stakeholders that an infeasible requirement is something they will pursue. As a result, stakeholders are disappointed when they don’t see it materialize.

- Organizations need to put advance effort into constraint identification and management. Too much time is wasted pursuing requirements that aren't feasible given existing internal (e.g. budget and system) and external (e.g. legislative or regulatory) constraints.

- Organizations need to manage diverse stakeholders for requirements analysis. Communication will not always be solely with internal teams, but also with suppliers, customers, vendors, and system integrators.

Stakeholder management is a critical aspect of the BA’s role. Part of the BA’s responsibility is prioritizing solutions and demonstrating to stakeholders the level of effort required and the value attained.

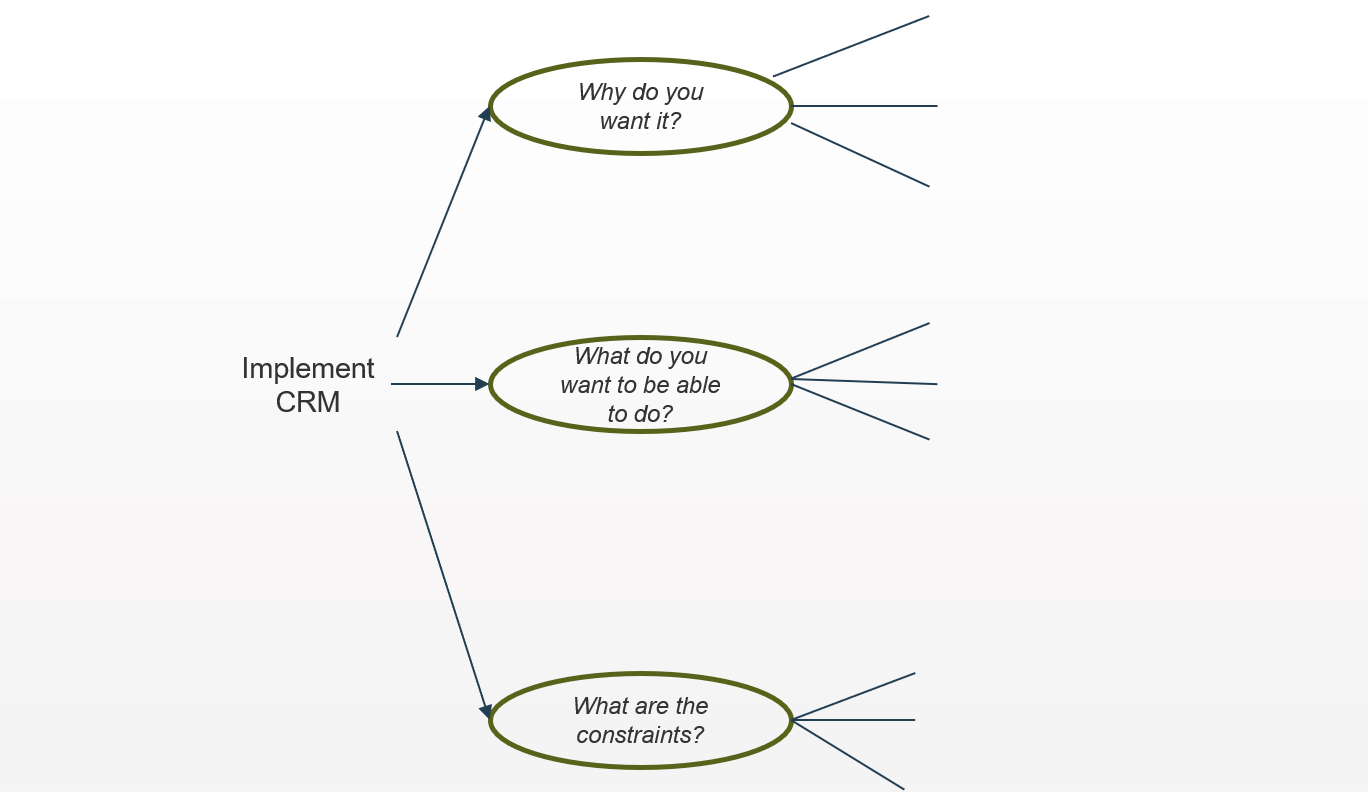

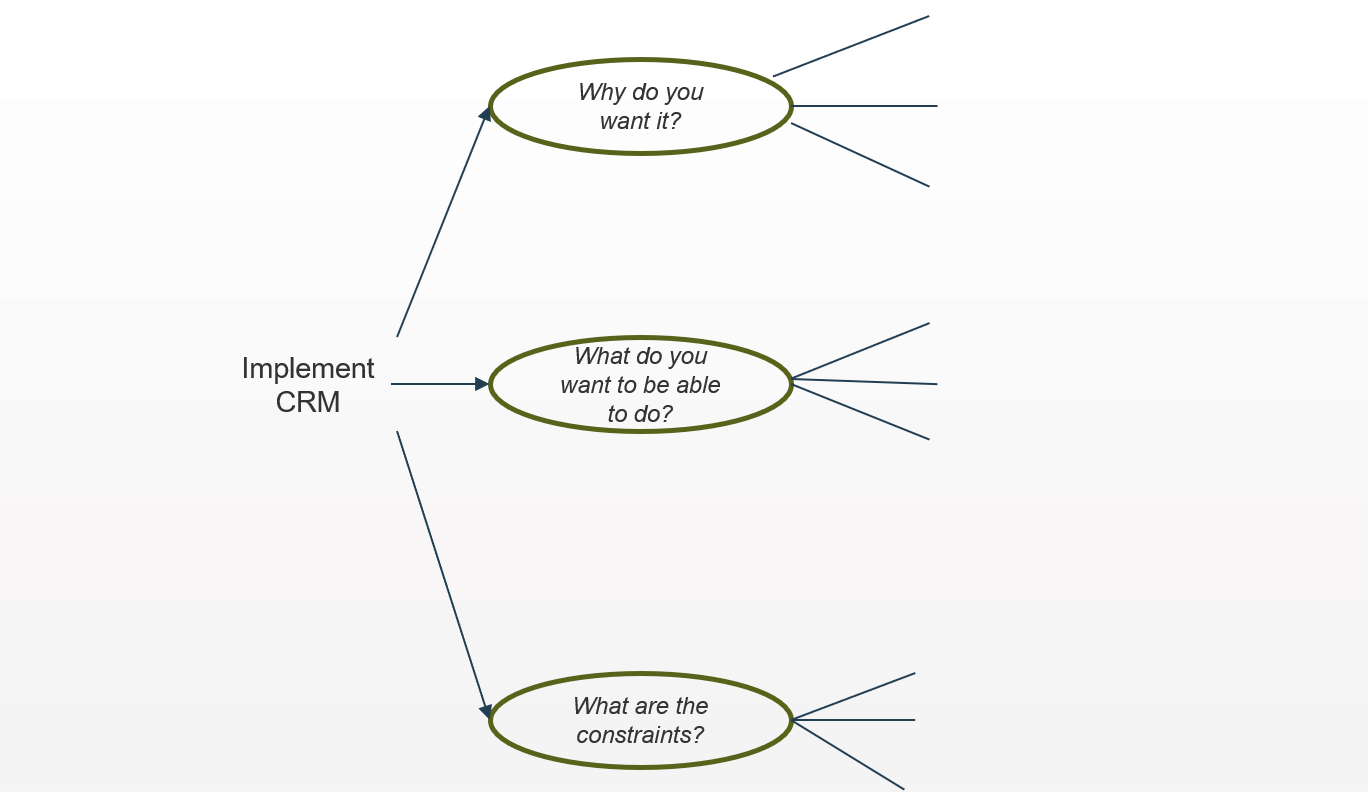

Conduct initial brainstorming on the scope of a selected enterprise application project (real or a sample of your choice)

1.2.6 – 30 minutes

Input

Output

Materials

Participants

Begin the requirements gathering process by conducting some initial scoping on why we are doing the project, the goals, and the constraints.

- Share the project intake form/charter with each member of the group, and give them a few minutes to read over the project details.

- On the board write the project topic and three sub-topics:

- Why does the business want this?

- What do you want customers (end users) to be able to do?

- What are the constraints?

- As a group, brainstorm answers to each of these questions and write them on the board.

Example: Conduct initial brainstorming on the project

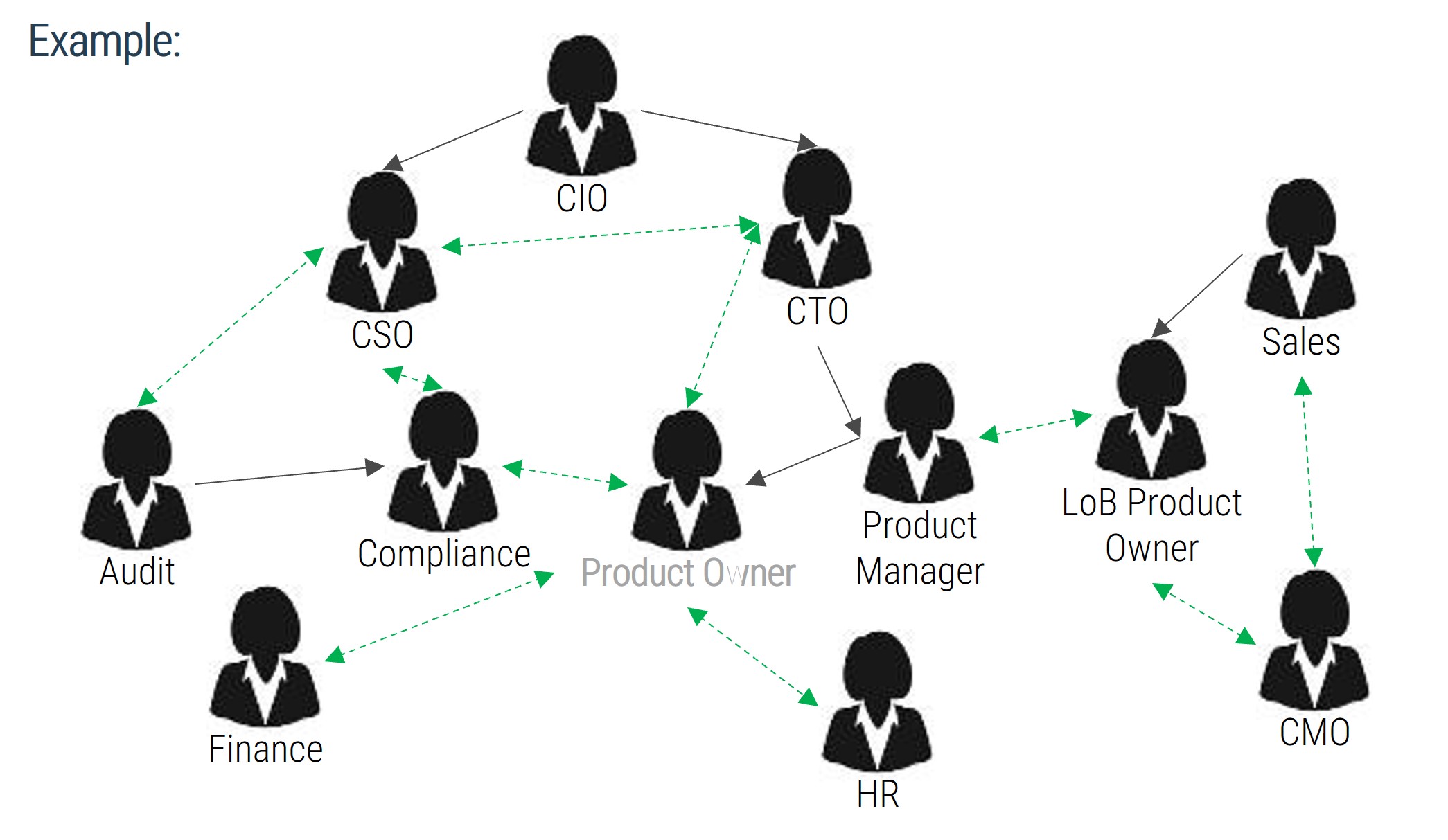

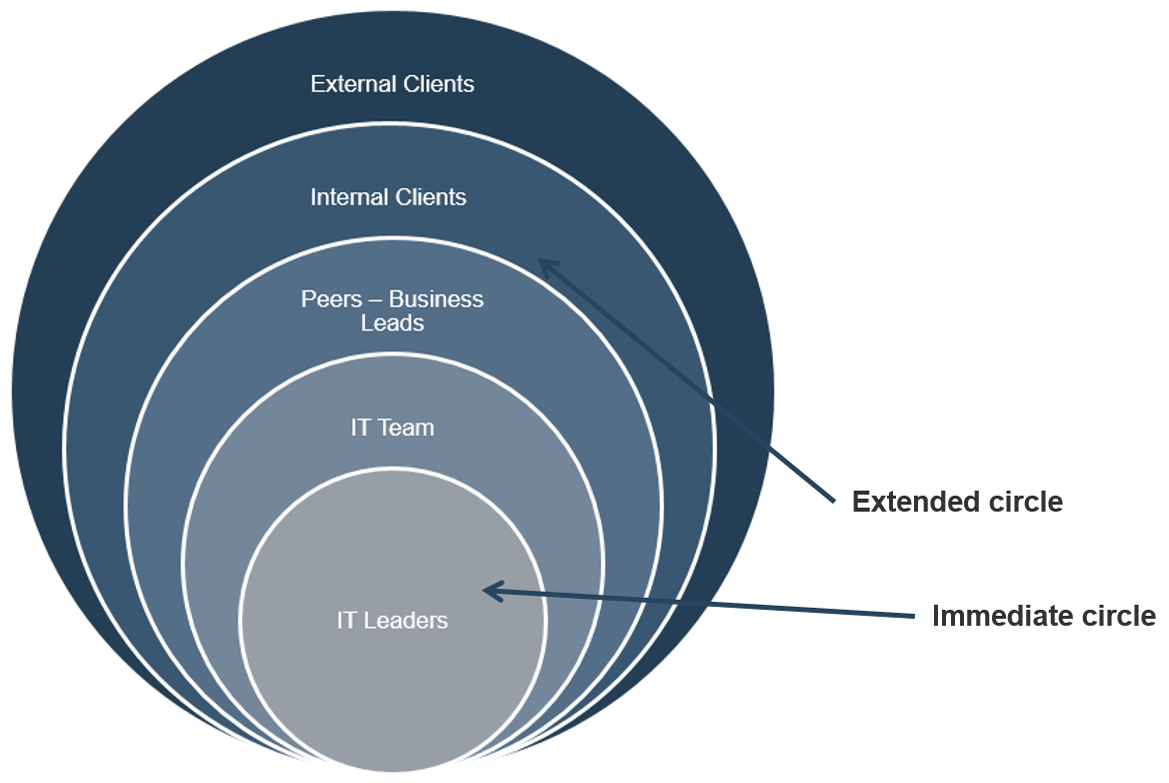

Identify stakeholders that must be consulted during the elicitation part of the process; get a good spectrum of subject matter experts (SMEs)

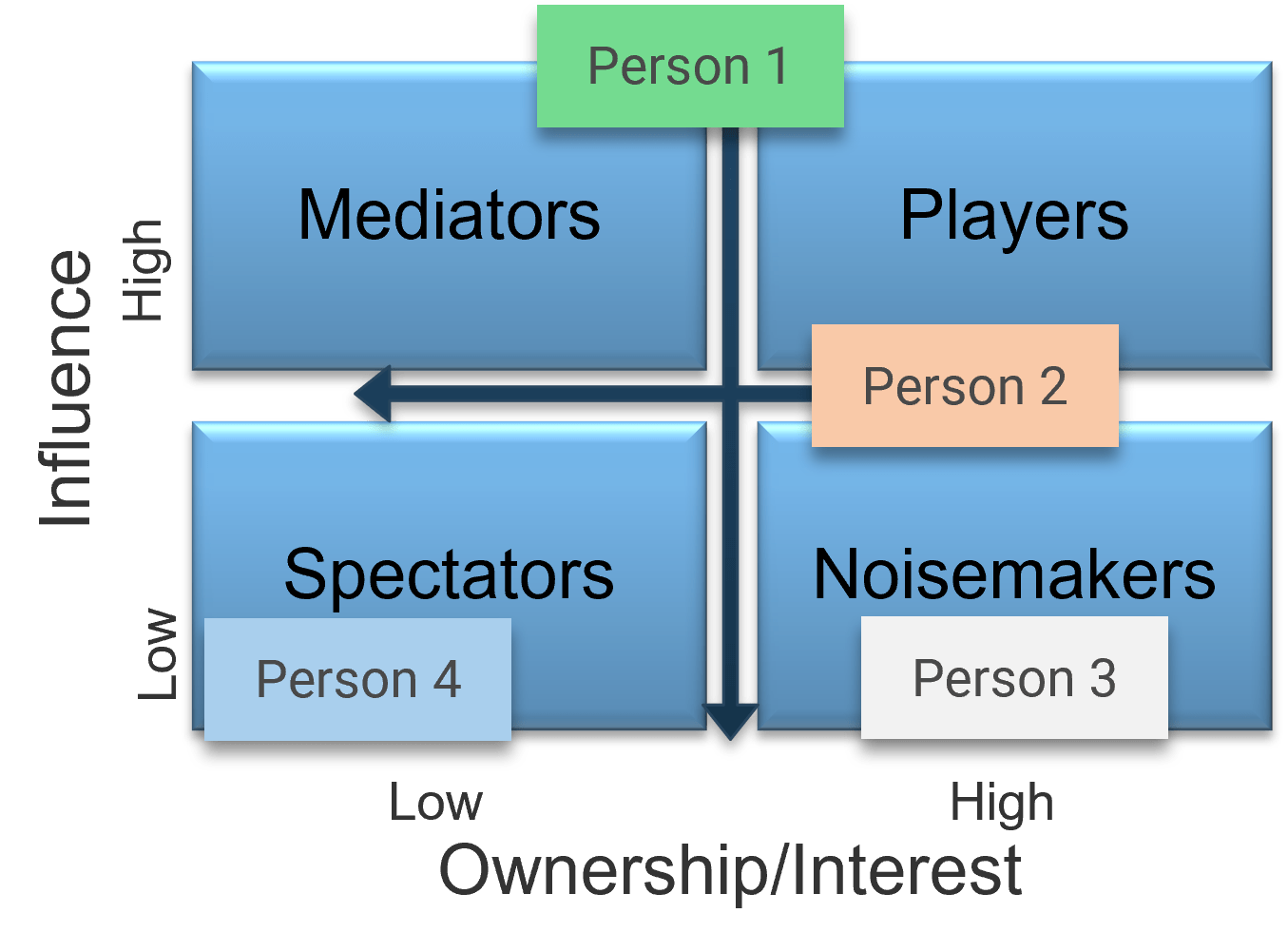

Before you can dive into most elicitation techniques, you need to know who you’re going to speak with – not all stakeholders hold the same value.

There are two broad categories of stakeholders:

Customers: Those who ask for a system/project/change but do not necessarily use it. These are typically executive sponsors, project managers, or interested stakeholders. They are customers in the sense that they may provide the funding or budget for a project, and may have requests for features and functionality, but they won’t have to use it in their own workflows.

Users: Those who may not ask for a system but must use it in their routine workflows. These are your end users, those who will actually interact with the system. Users don’t necessarily have to be people – they can also be other systems that will require inputs or outputs from the proposed solution. Understand their needs to best drive more granular functional requirements.

"The people you need to make happy at the end of the day are the people who are going to help you identify and prioritize requirements." – Director of IT, Municipal Utilities Provider

Need a hand with stakeholder identification? Leverage Info-Tech’s Stakeholder Planning Tool to catalog and prioritize the stakeholders your BAs will need to contact during the elicitation phase.

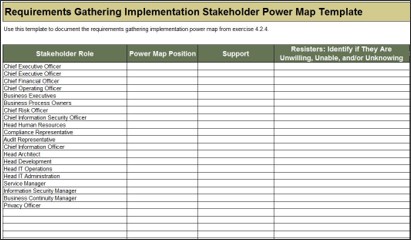

Exercise: Identify and analyze stakeholders for the application project prior to beginning formal elicitation

1.2.7 – 45 minutes

Input

Output

Materials

- Whiteboard

- Markers

- Sticky notes

Participants

Practice the process for identifying and analyzing key stakeholders for requirements gathering.

- As a group, generate a complete list of the project stakeholders. Consider who is involved in the problem and who will be impacted by the solution, and record the names of these stakeholders/stakeholder groups on a sticky note. Categories include:

- Who is the project sponsor?

- Who are the user groups?

- Who are the project architects?

- Who are the specialty stakeholders (SMEs)?

- Who is your project team?

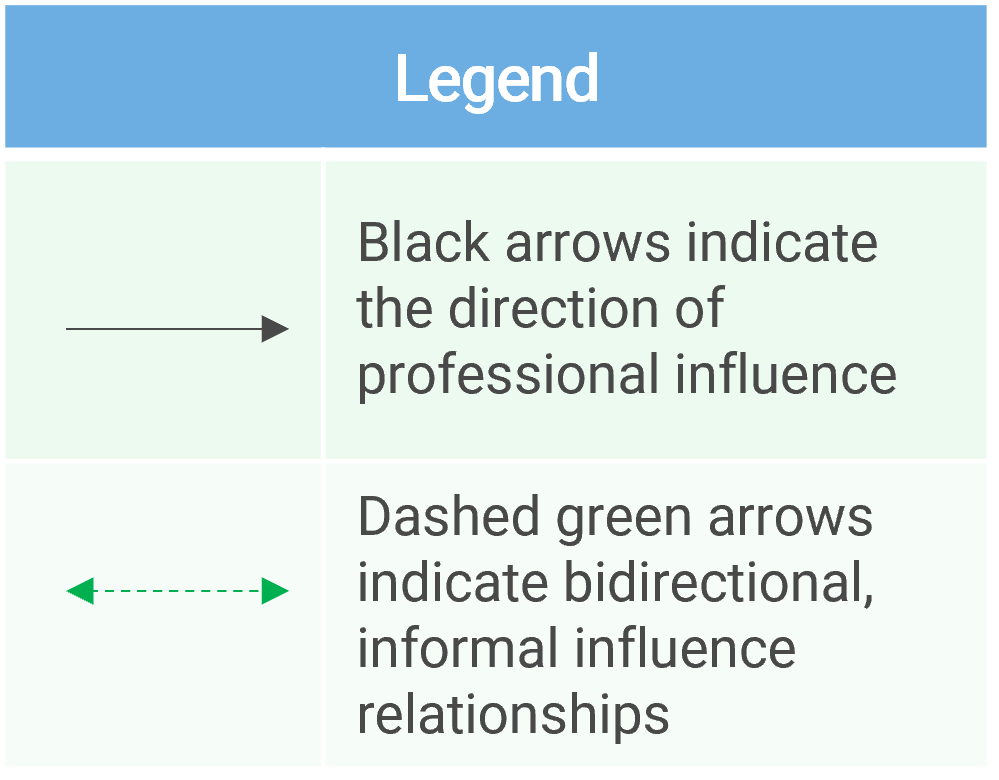

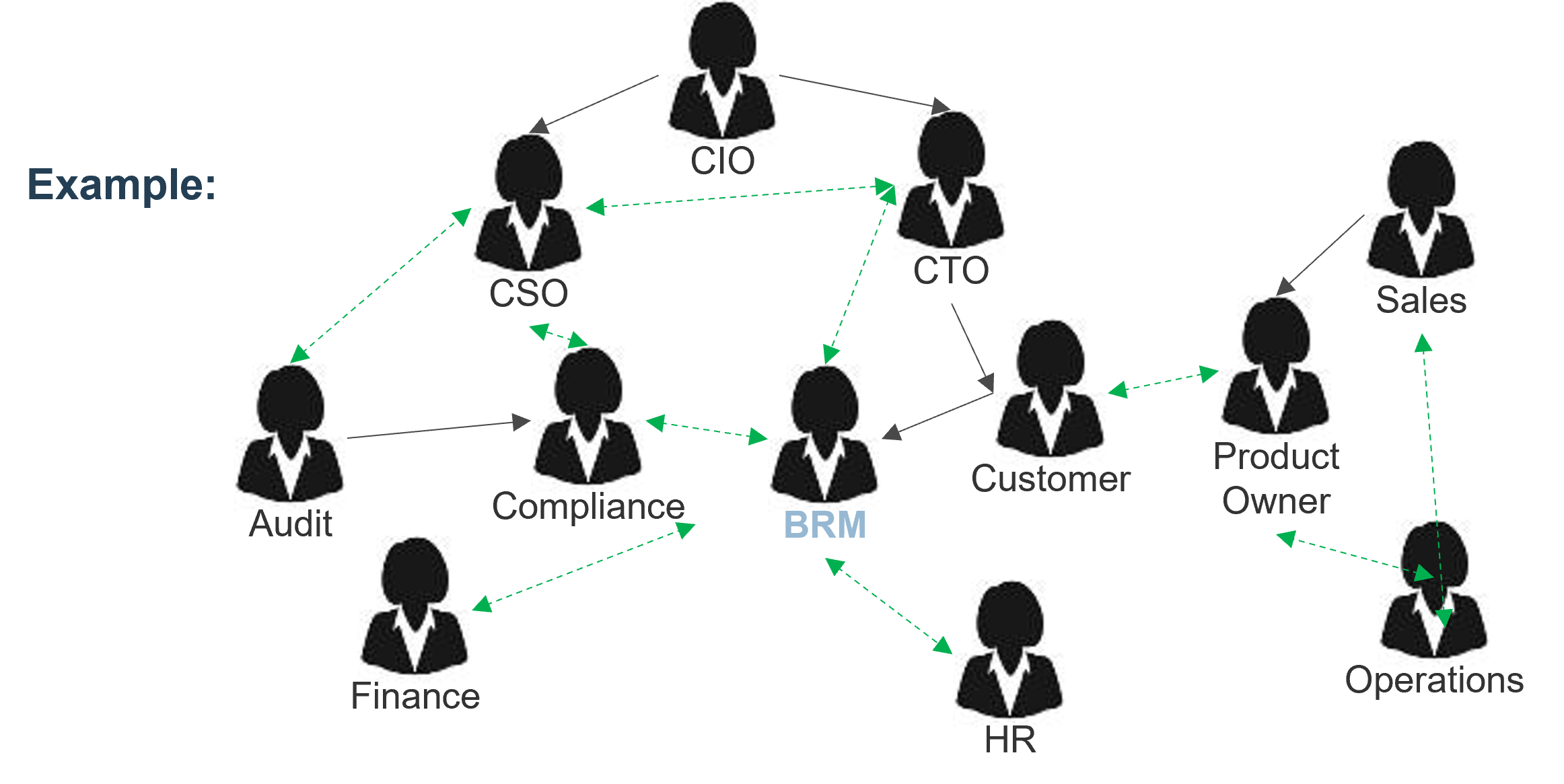

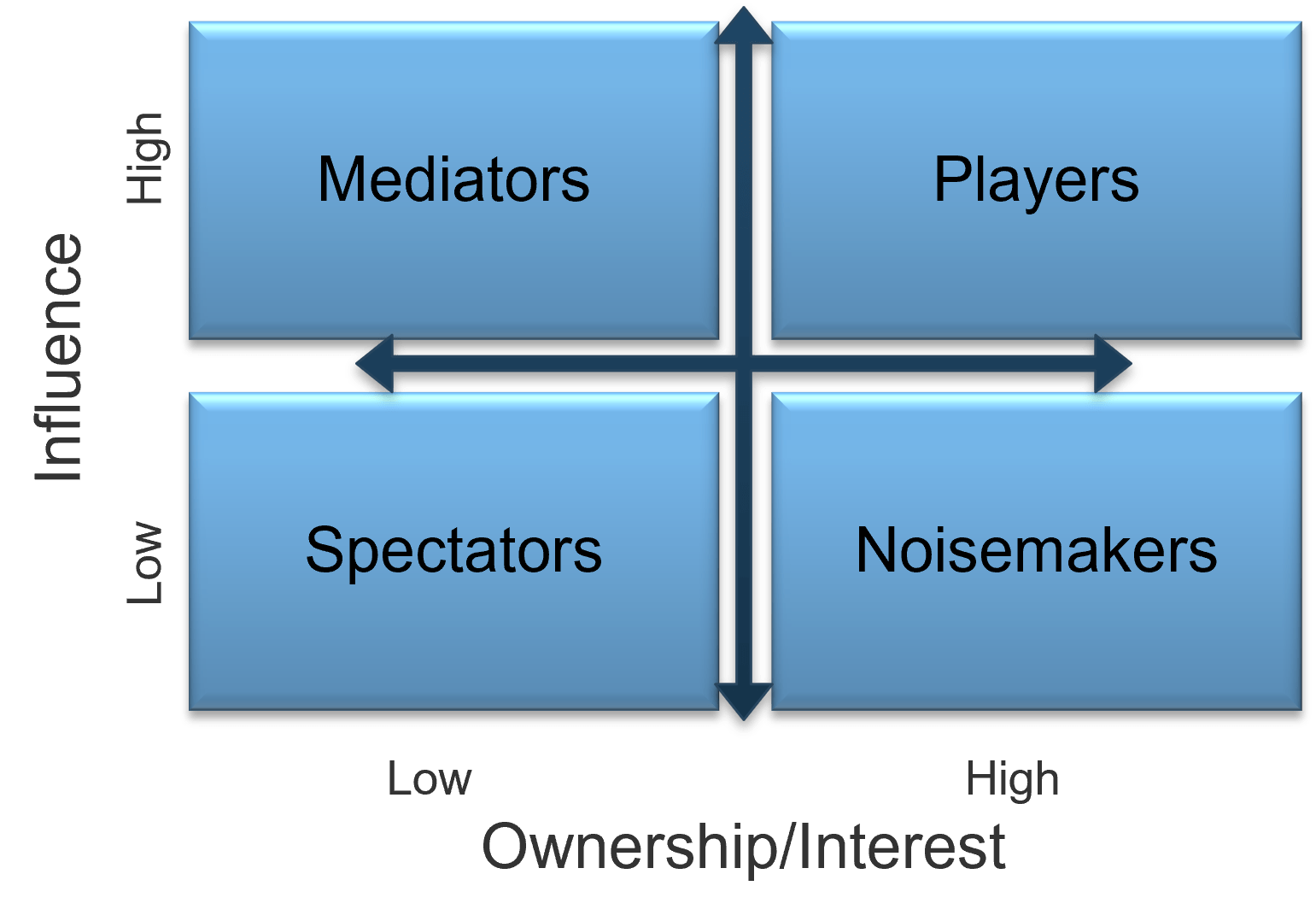

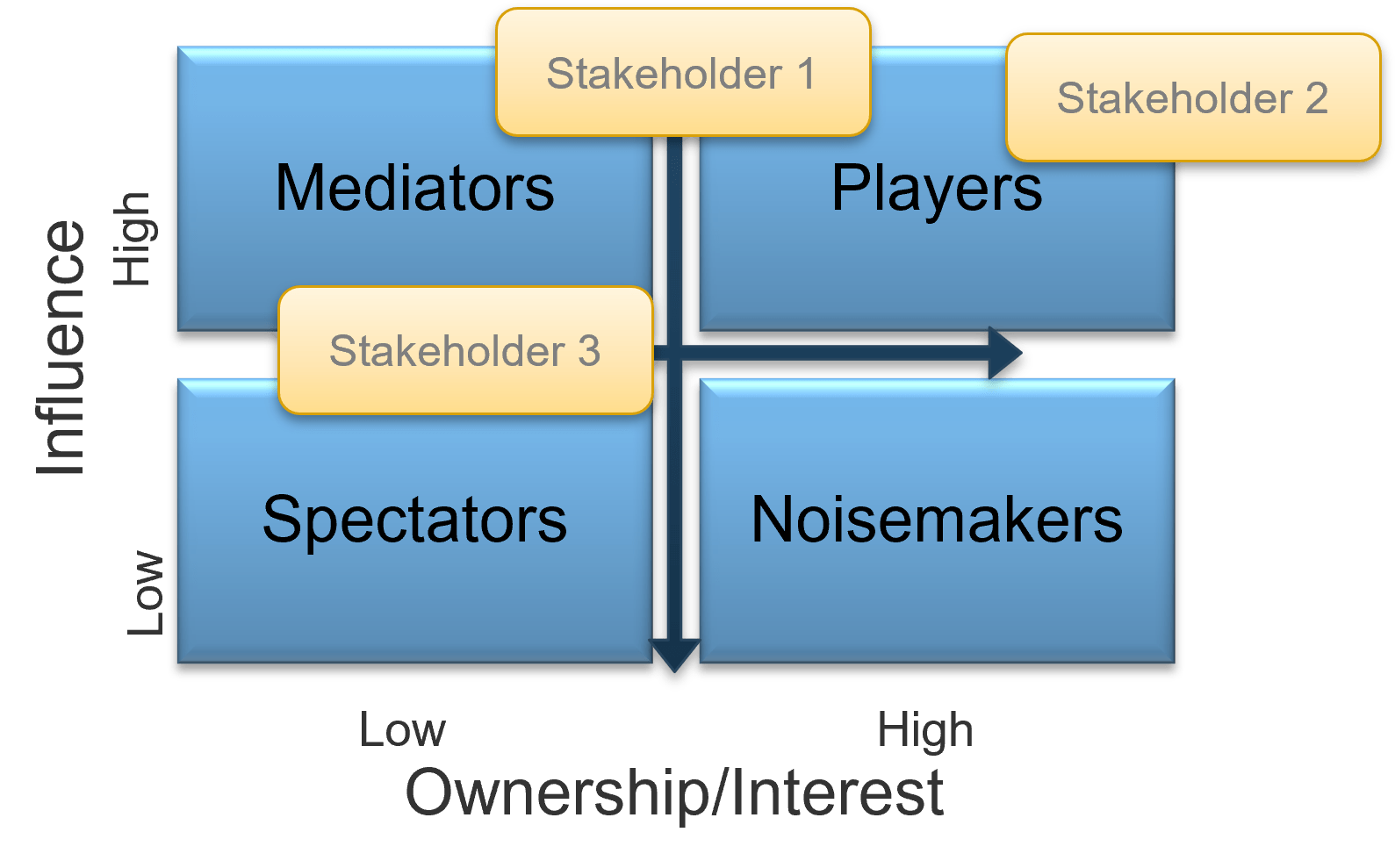

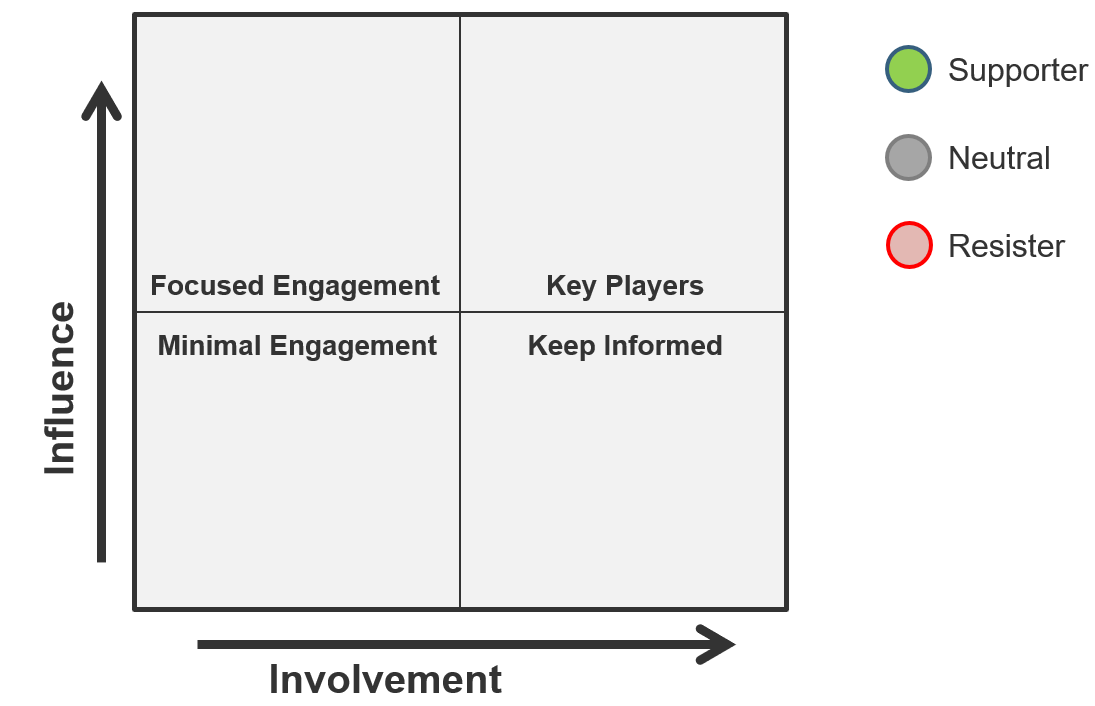

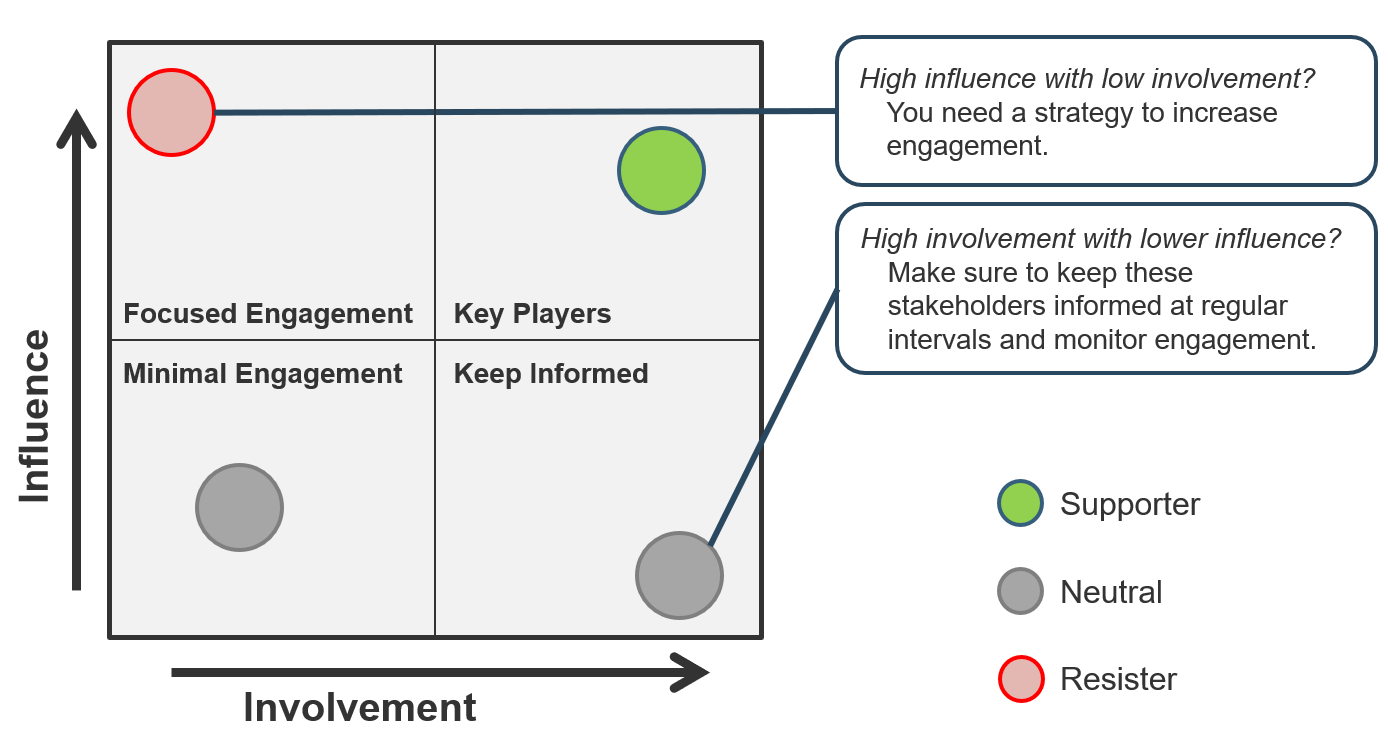

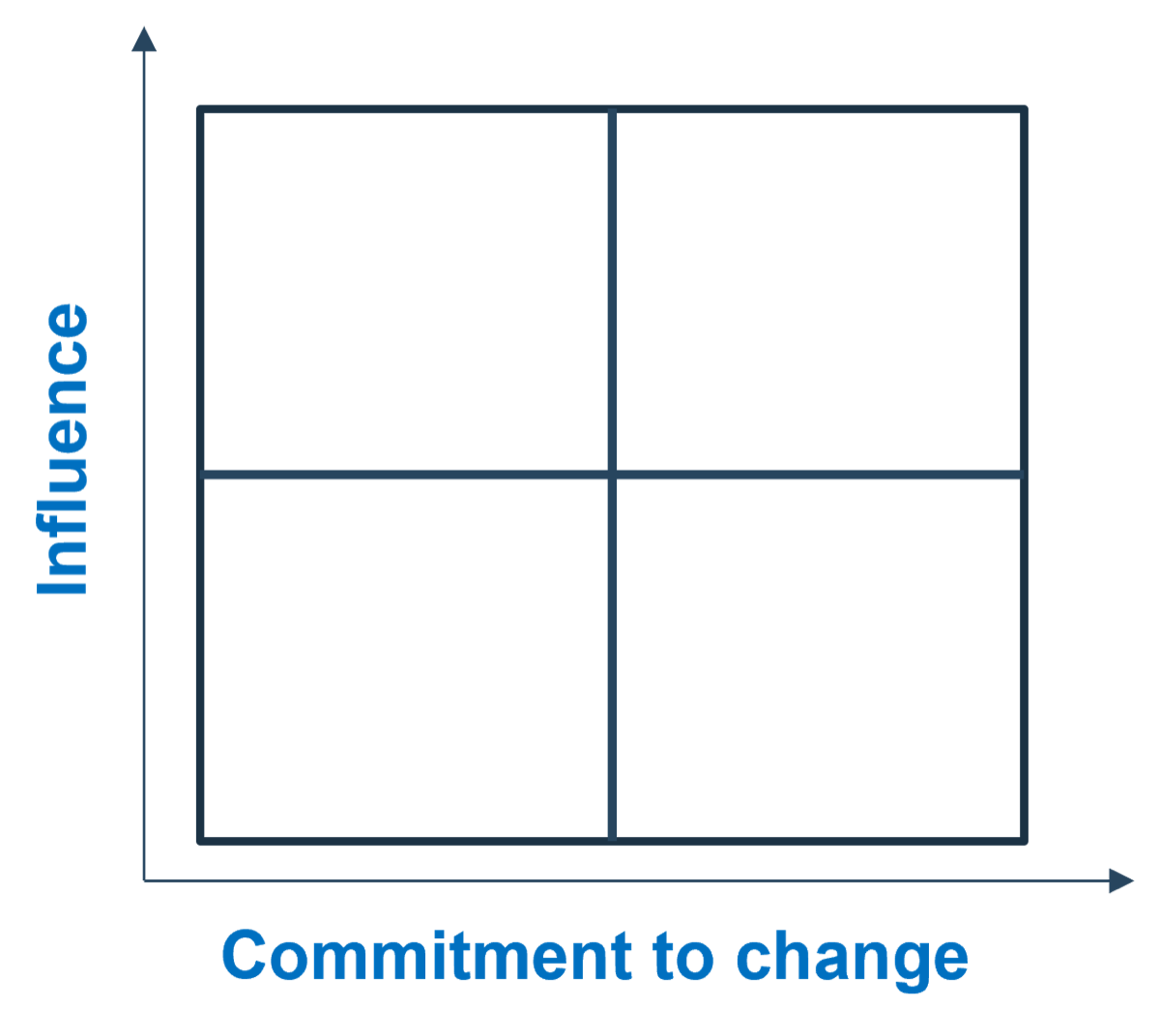

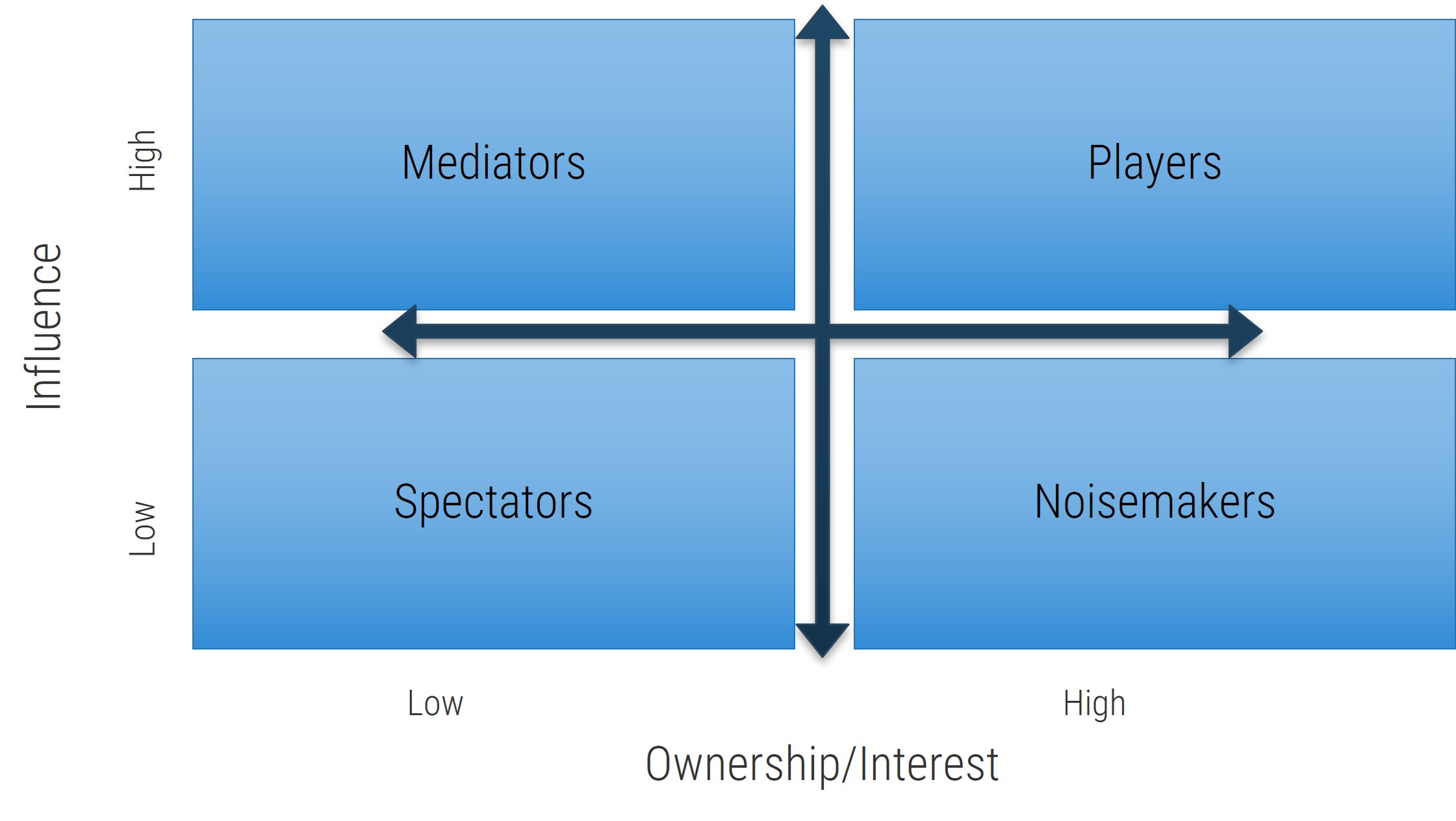

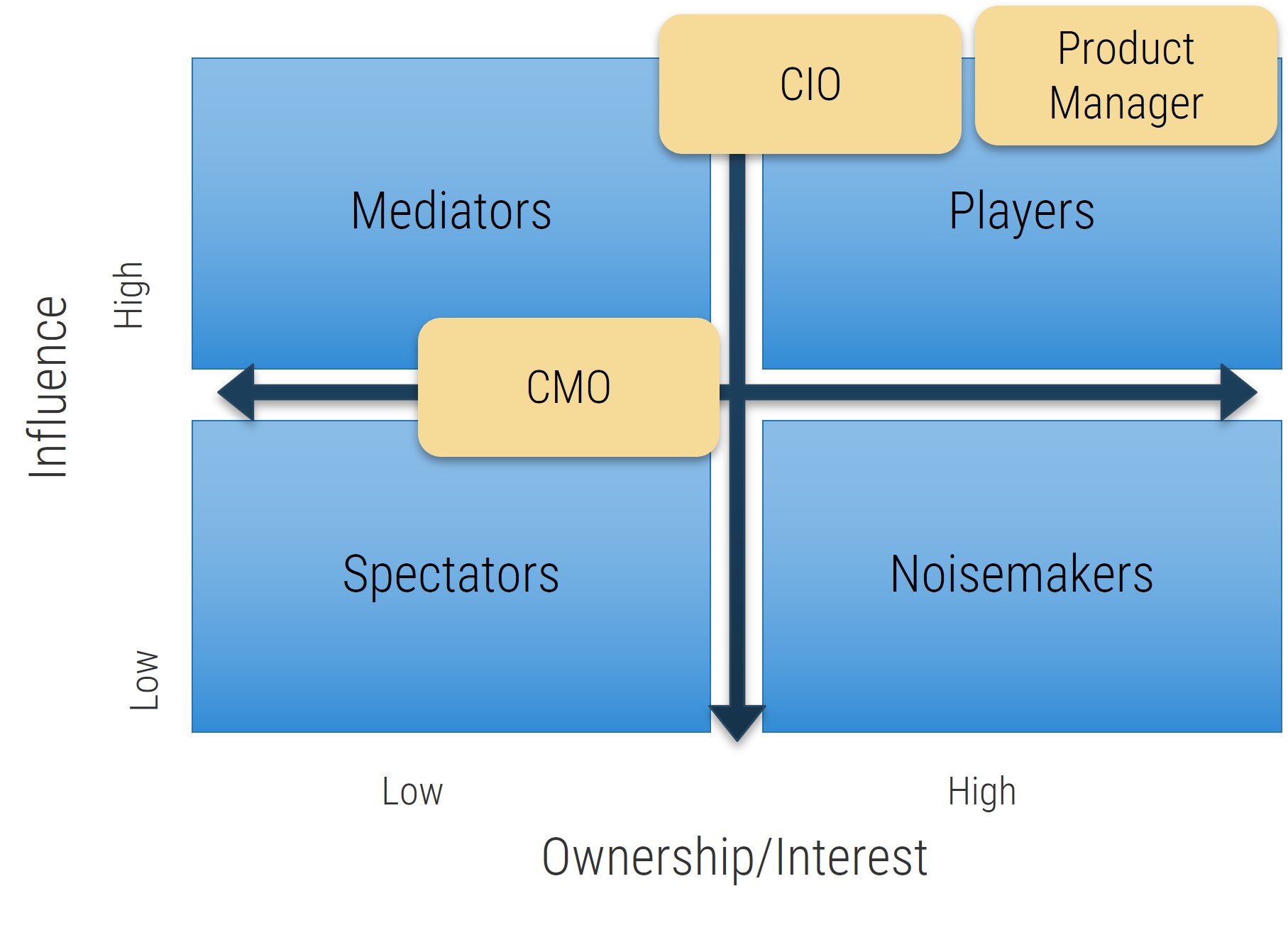

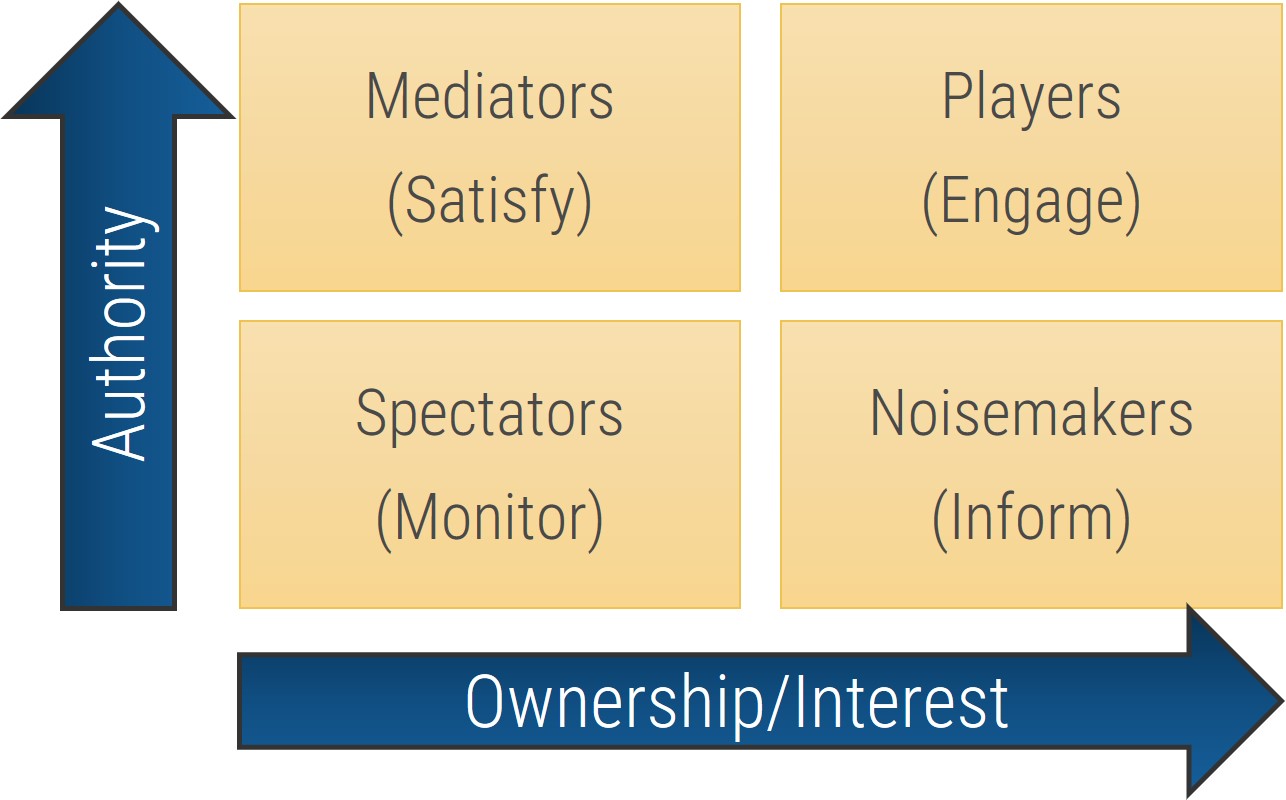

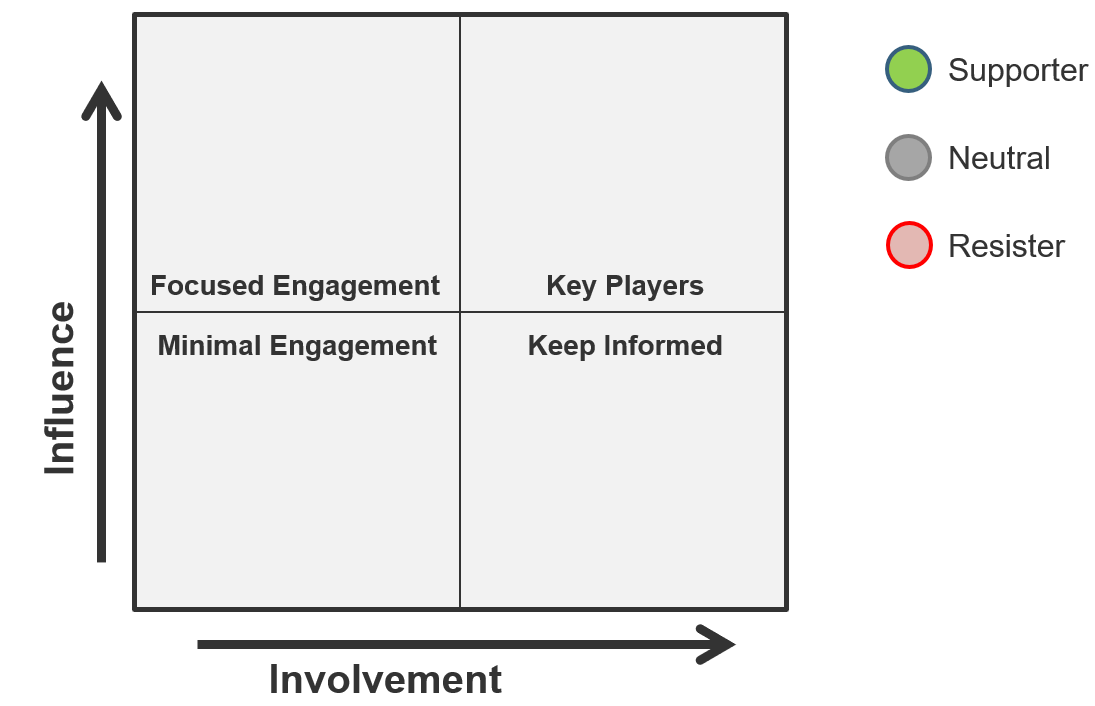

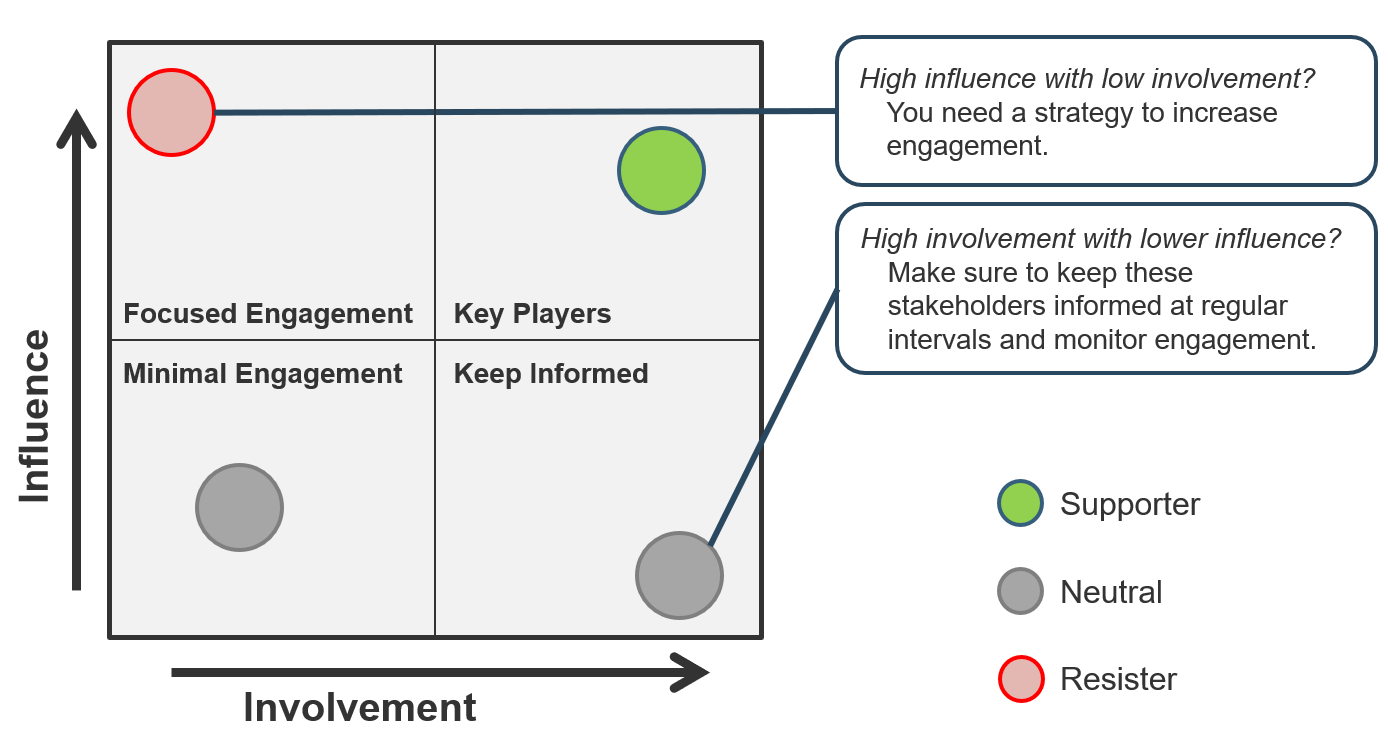

- Now that you’ve compiled a complete list, review each stakeholder group and plot its level of influence against its level of involvement in the project: place each sticky note on a 2x2 grid to create a stakeholder power map.

- At the end of the day, record this list in the Requirements Gathering Communication Tracking Template.
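The power-mapping step above can also be sketched in code once the sticky notes are transcribed. This is a minimal illustration, not part of the Info-Tech template: the 1-10 scoring scale, the midpoint of 5, and the quadrant labels are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stakeholder:
    name: str
    influence: int    # 1 (low) to 10 (high); the scale is an assumption
    involvement: int  # 1 (low) to 10 (high); the scale is an assumption


def quadrant(s: Stakeholder, midpoint: int = 5) -> str:
    """Return the cell of the 2x2 grid that a stakeholder falls into."""
    influence = "high" if s.influence > midpoint else "low"
    involvement = "high" if s.involvement > midpoint else "low"
    return f"{influence} influence / {involvement} involvement"


def power_map(stakeholders):
    """Group stakeholder names by quadrant, preserving input order."""
    grid = {}
    for s in stakeholders:
        grid.setdefault(quadrant(s), []).append(s.name)
    return grid


if __name__ == "__main__":
    people = [
        Stakeholder("Project sponsor", influence=9, involvement=3),
        Stakeholder("End users", influence=4, involvement=8),
    ]
    for cell, names in power_map(people).items():
        print(cell, "->", names)
```

Scores at or below the midpoint are treated as "low", so a stakeholder rated exactly 5 on both axes lands in the low/low cell; adjust the threshold to match how your team reads the grid.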

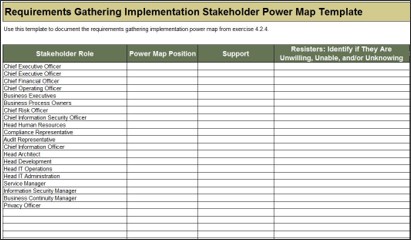

Use Info-Tech’s Requirements Gathering Communication Tracking Template

1.5 Requirements Gathering Communication Tracking Template

Use the Requirements Gathering Communication Tracking Template for structuring and managing ongoing communications among key requirements gathering implementation stakeholders.

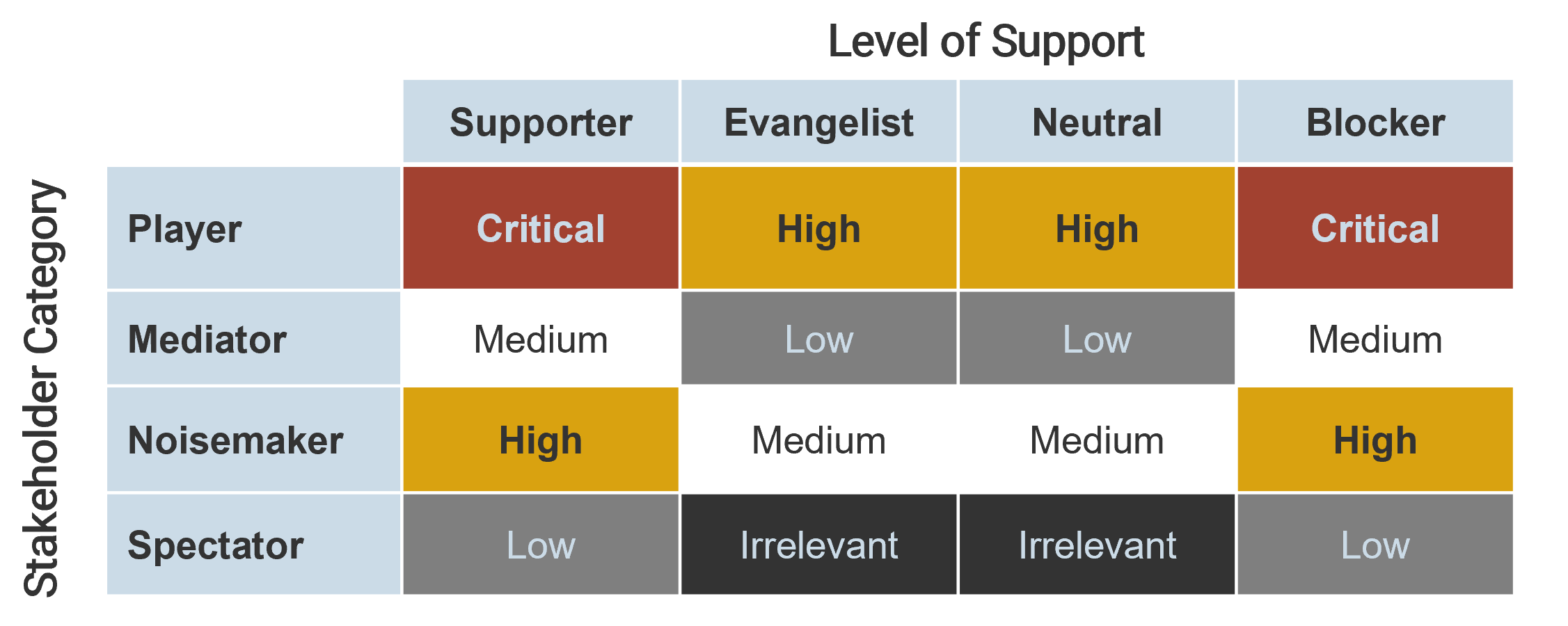

Use the Stakeholder Power Map tab to:

- Identify the stakeholder's name and role.

- Identify their position on the power map using the drop-down menu.

- Identify their level of support.

- Identify resisters' reasons for resisting as: unwilling, unable, and/or unknowing.

- Identify which committees they currently sit on, and which they will sit on in the future state.

- Identify any key objections the stakeholder may have.

Use the Communication Management Plan tab to:

- Identify the vehicle/communication medium (status update, meeting, training, etc.).

- Identify the audience for the communication.

- Identify the purpose for communication.

- Identify the frequency.

- Identify who is responsible for the communication.

- Identify how the communication will be distributed, and the level of detail.
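For teams that track this information outside the spreadsheet, the fields listed for the two tabs can be modeled as simple records. The sketch below is an assumption-laden illustration: the class and field names are hypothetical and merely mirror the bullets above, not the template's actual column headings.

```python
from dataclasses import dataclass, field
from enum import Enum


class ResistanceReason(Enum):
    UNWILLING = "unwilling"
    UNABLE = "unable"
    UNKNOWING = "unknowing"


@dataclass
class PowerMapEntry:
    """One row of the Stakeholder Power Map tab (field names are hypothetical)."""
    name: str
    role: str
    power_map_position: str
    level_of_support: str
    resistance_reasons: set = field(default_factory=set)
    current_committees: list = field(default_factory=list)
    future_committees: list = field(default_factory=list)
    key_objections: list = field(default_factory=list)


@dataclass
class CommunicationPlanEntry:
    """One row of the Communication Management Plan tab (field names are hypothetical)."""
    vehicle: str
    audience: str
    purpose: str
    frequency: str
    owner: str
    distribution: str
    level_of_detail: str


def missing_fields(entry) -> list:
    """Return the names of text fields that were left blank."""
    return [k for k, v in vars(entry).items() if isinstance(v, str) and not v.strip()]


if __name__ == "__main__":
    row = CommunicationPlanEntry(
        vehicle="Status update", audience="Project sponsor", purpose="",
        frequency="Weekly", owner="Lead BA", distribution="Email",
        level_of_detail="",
    )
    print(missing_fields(row))
```

A completeness check like `missing_fields` is one way to catch rows where the purpose or level of detail was never filled in before the plan is circulated.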

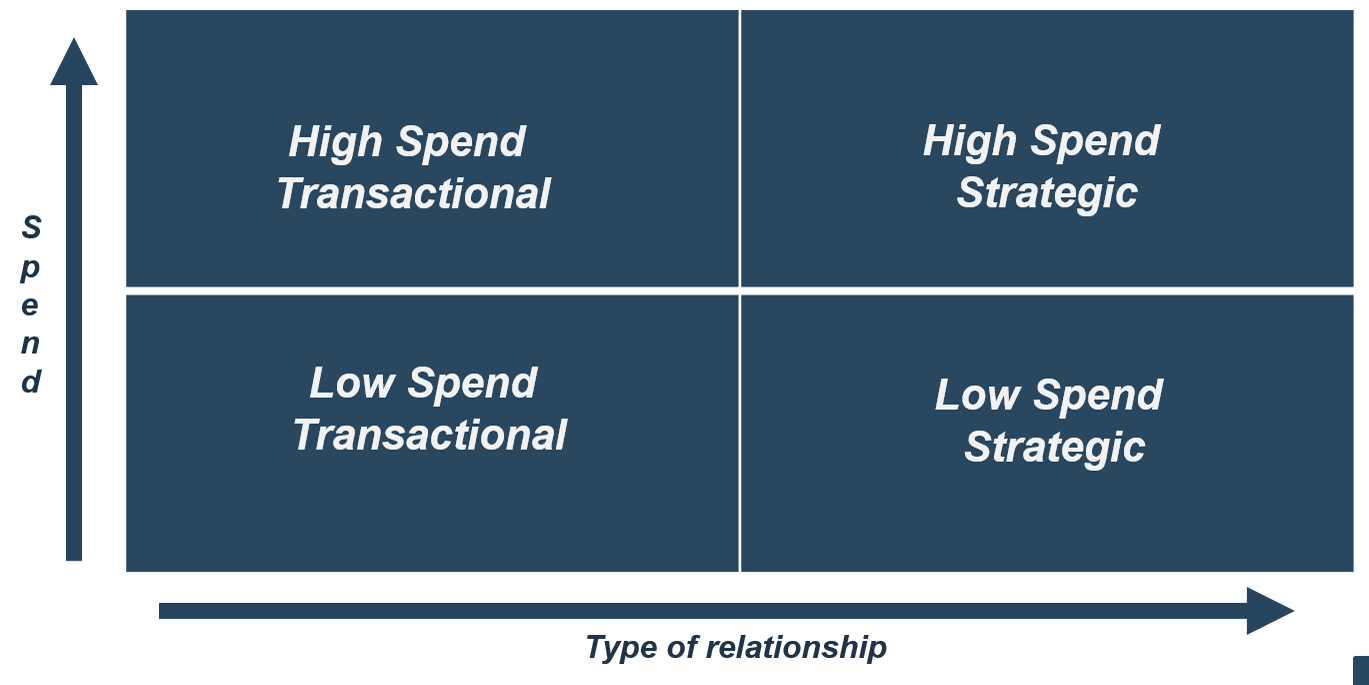

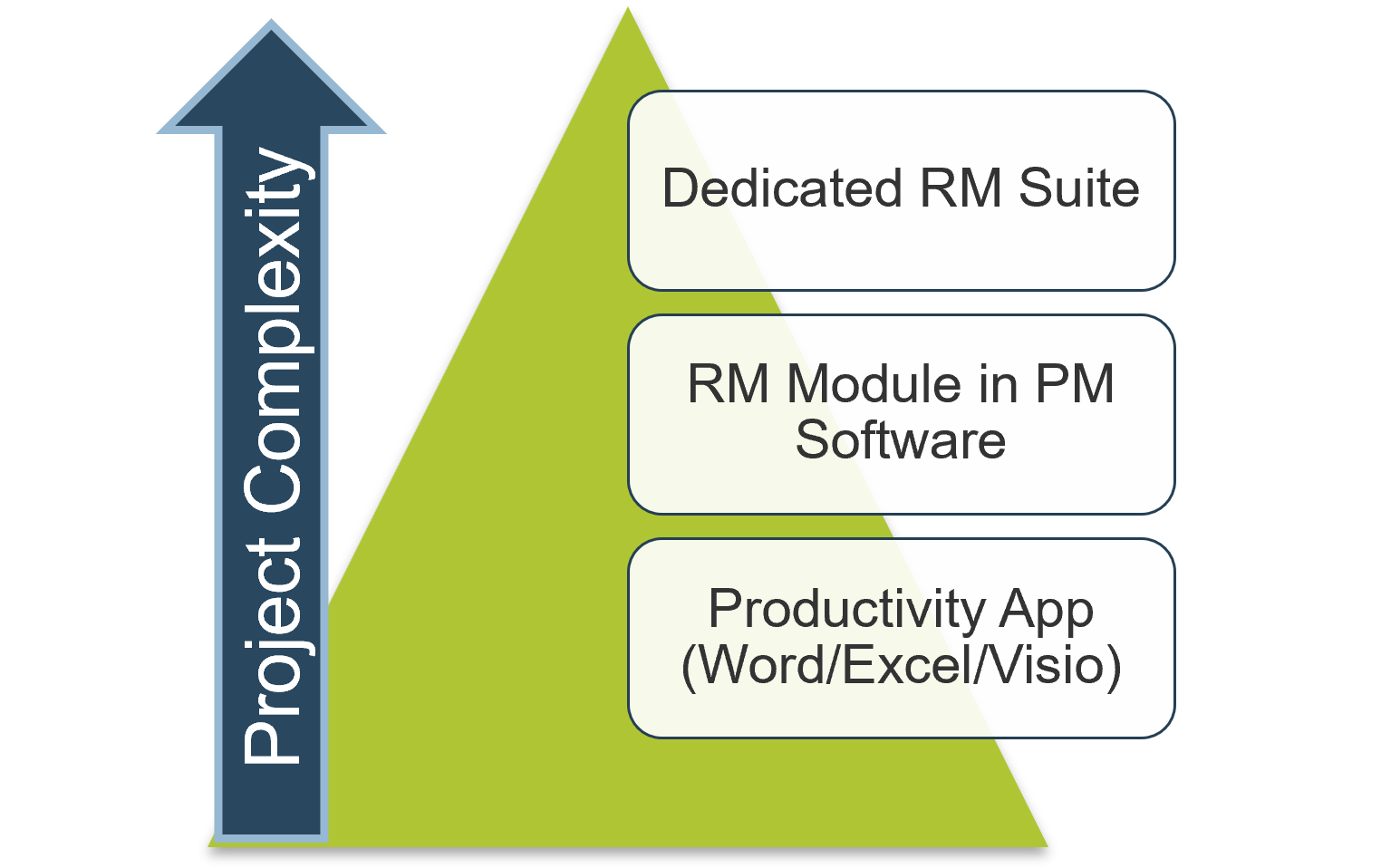

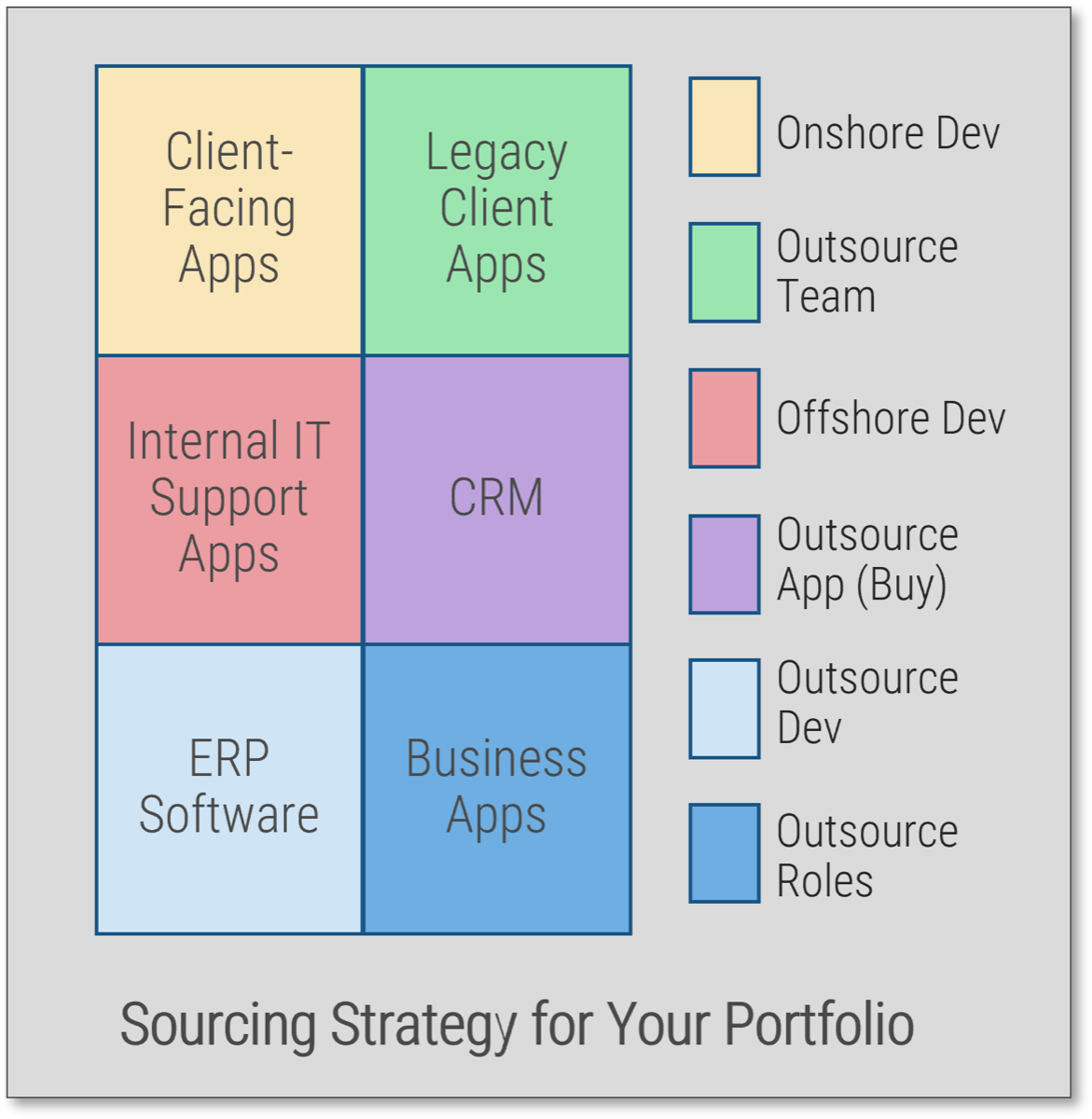

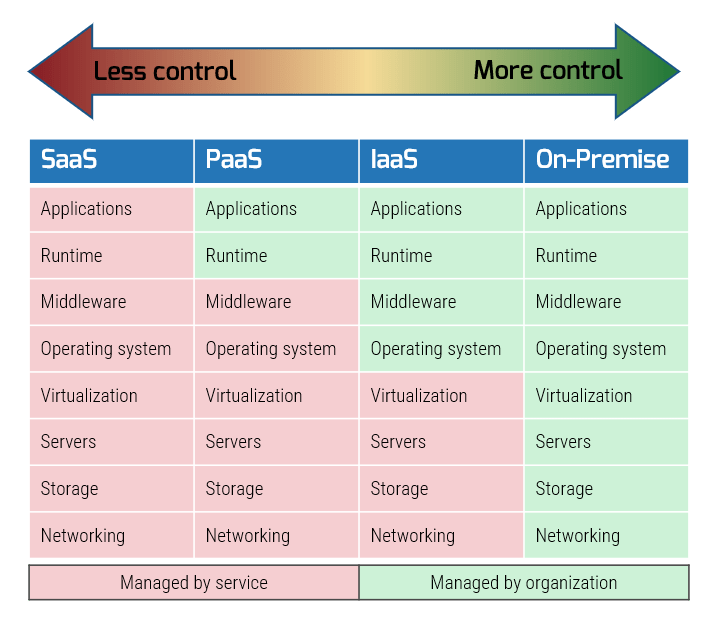

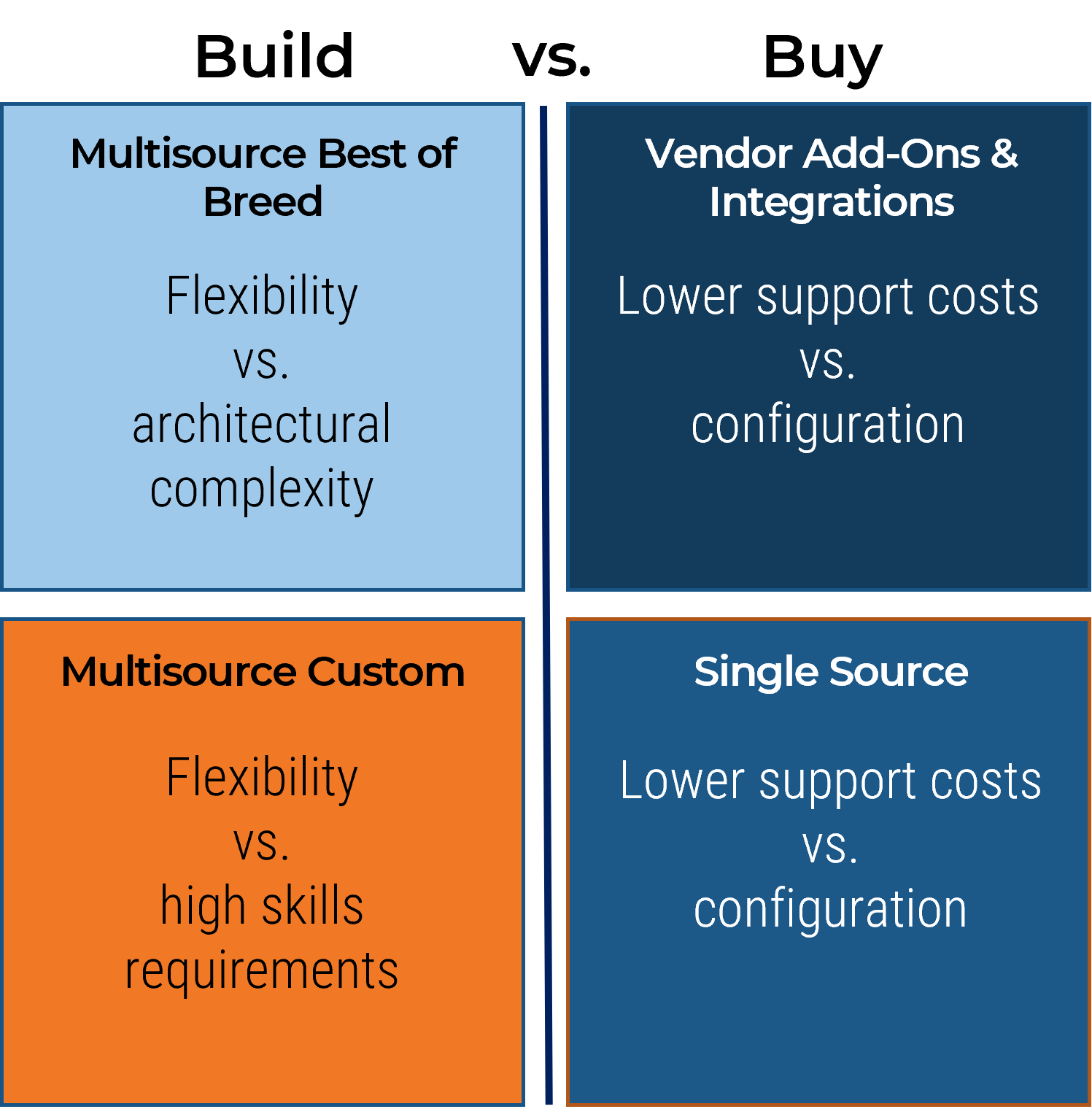

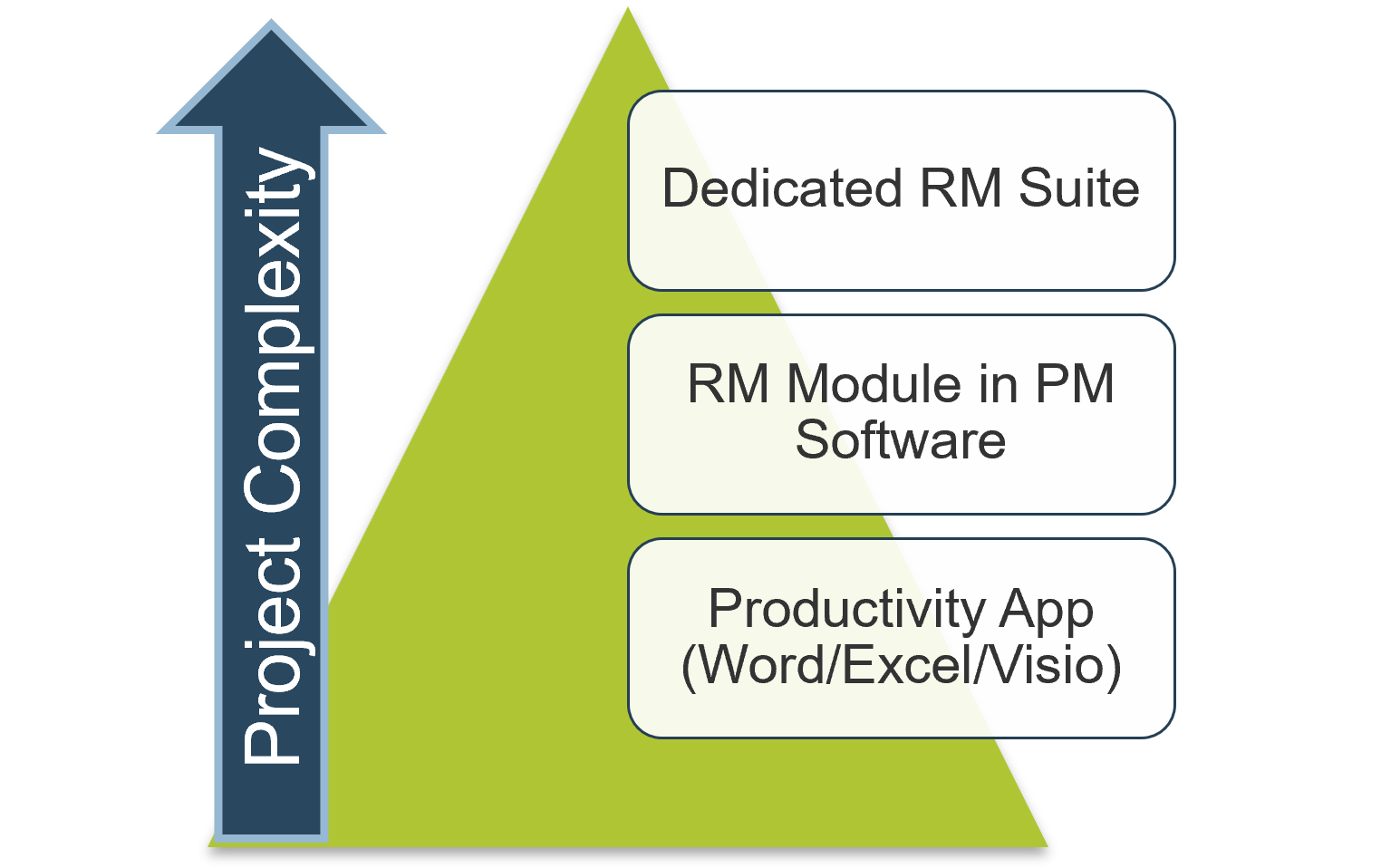

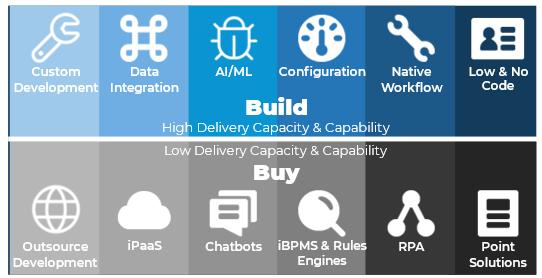

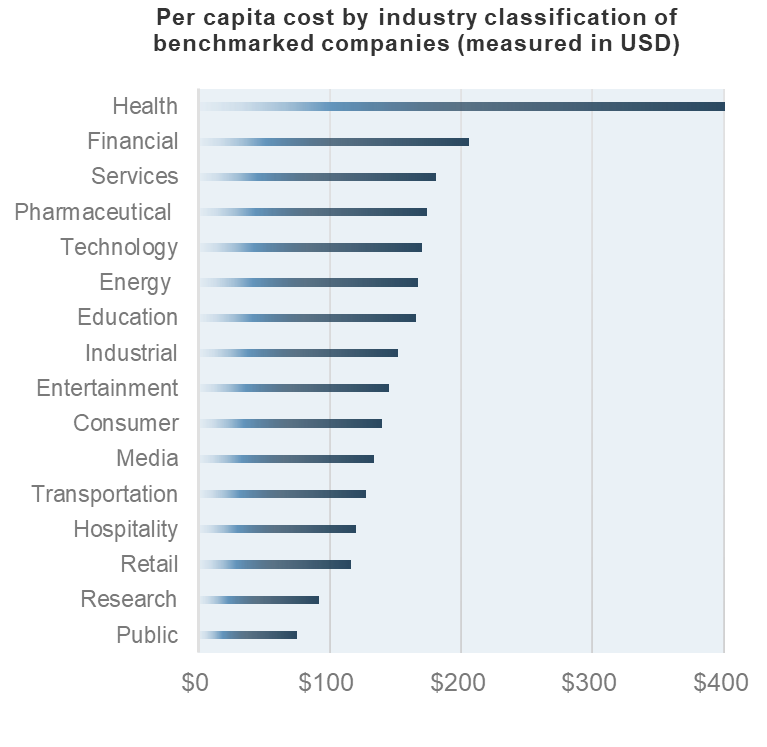

Right-size your investments in requirements management technology; sometimes the “suite spot” isn’t necessary

Recording and analyzing requirements calls for some kind of tool, but don’t overinvest in a dedicated suite if you can manage with a less expensive solution (such as Word, Excel, and/or Visio). Top-tier solutions may be necessary for an enterprise ERP deployment, but a low-cost solution is sufficient for a low-level productivity application.

- Many companies do things in the wrong order. Organizations need to right-size the approach that they take to recording and analyzing requirements. Taking the suite approach isn’t always better – often, inputting the requirements into Word or Excel will suffice. An RM suite won’t solve your problems by itself.

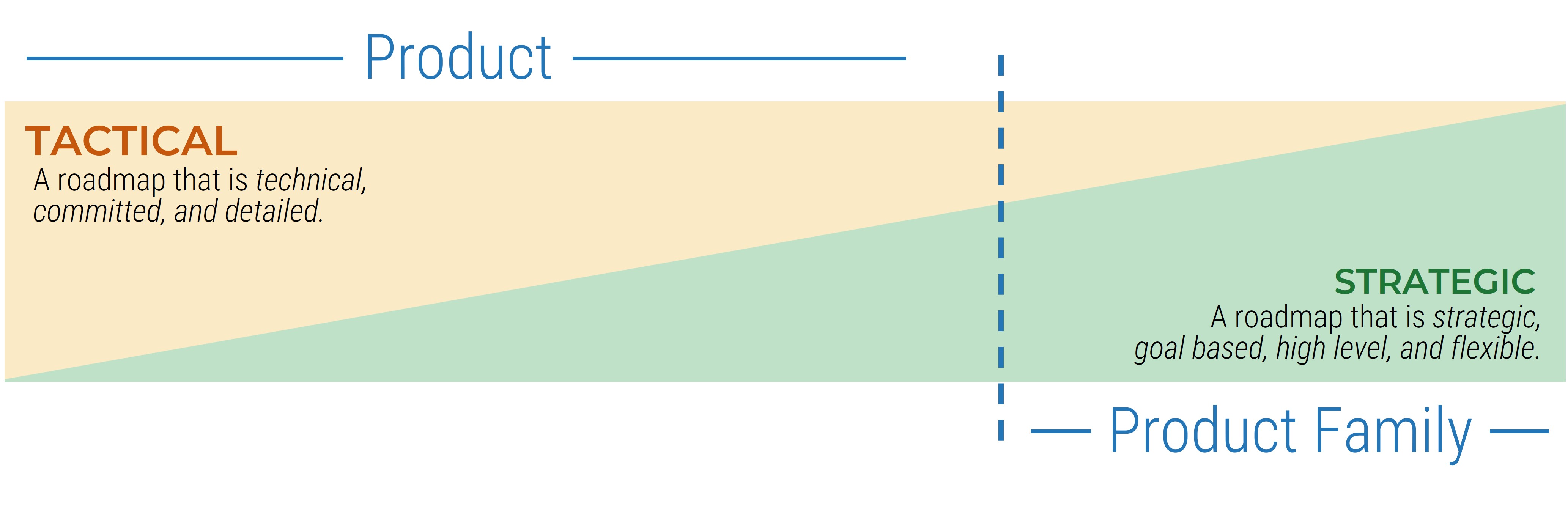

- If you’re dealing with strategic approach or calculated approach projects, their complexity likely warrants a dedicated RM suite that can trace system dependencies. If you’re dealing with primarily elementary or fundamental approach projects, use a more basic tool.

Your SOP guide should specify the technology platform that your analysts are expected to use for initial elicitation as well as analysis and validation. You don’t want them to use Word if you’ve invested in a full-out IBM RM solution.
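The right-sizing rule above can be written down as a small lookup so that the SOP guide and any intake tooling stay consistent. This is a rough sketch only: the function name and return strings are hypothetical, and the mapping simply restates the guidance that strategic and calculated approach projects warrant a dedicated RM suite while elementary and fundamental ones do not.

```python
# Approach levels named in the guidance above; the grouping restates the text.
COMPLEX_APPROACHES = {"strategic", "calculated"}
BASIC_APPROACHES = {"elementary", "fundamental"}


def recommended_tooling(project_approach: str) -> str:
    """Map a project's requirements gathering approach to a tool tier."""
    approach = project_approach.strip().lower()
    if approach in COMPLEX_APPROACHES:
        return "dedicated RM suite"
    if approach in BASIC_APPROACHES:
        return "basic tool (e.g., Word, Excel, Visio)"
    raise ValueError(f"Unknown project approach: {project_approach!r}")


if __name__ == "__main__":
    for level in ("Strategic", "Fundamental"):
        print(level, "->", recommended_tooling(level))
```

Raising an error for an unrecognized approach level, rather than defaulting to a basic tool, forces the project to be classified before a platform is chosen.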

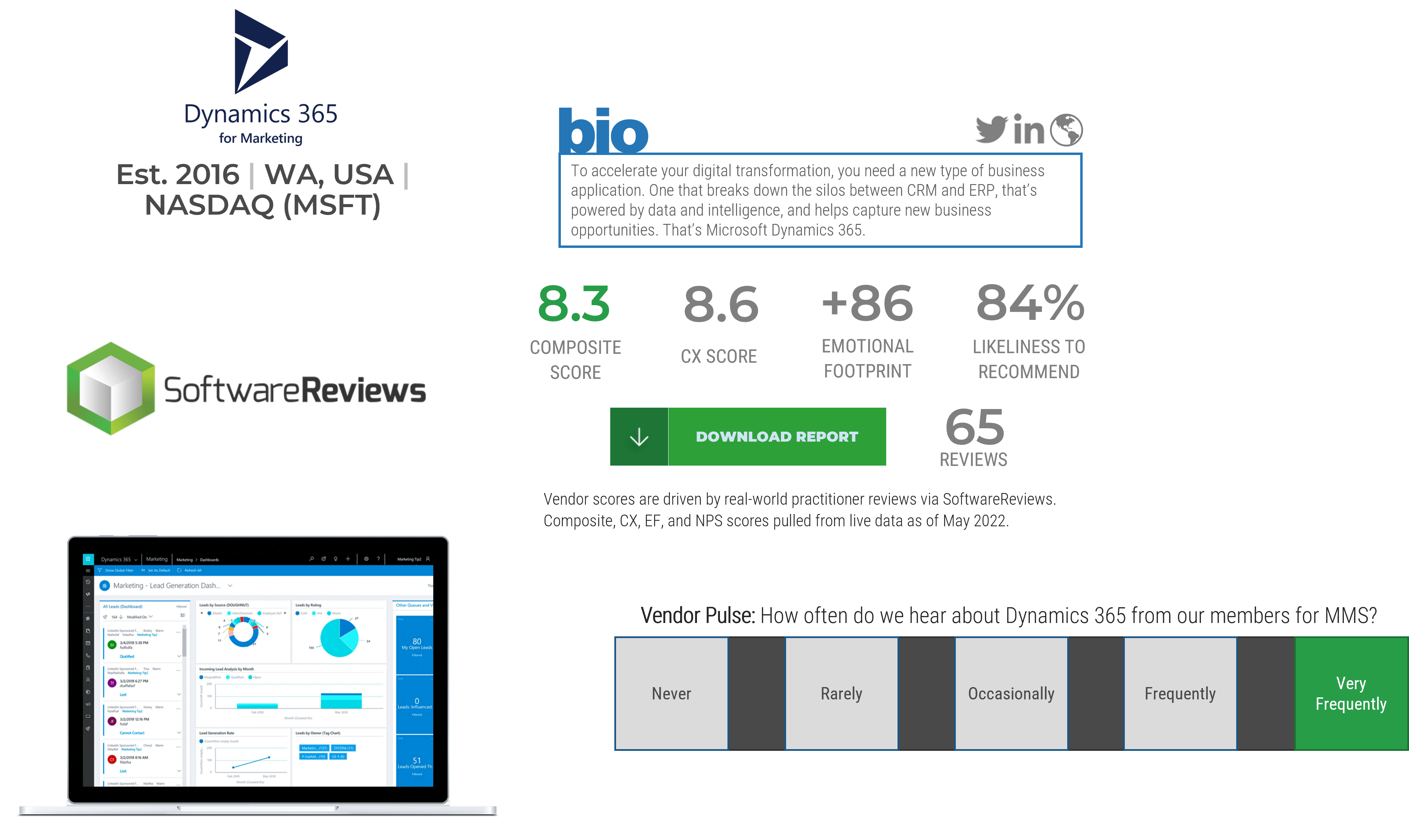

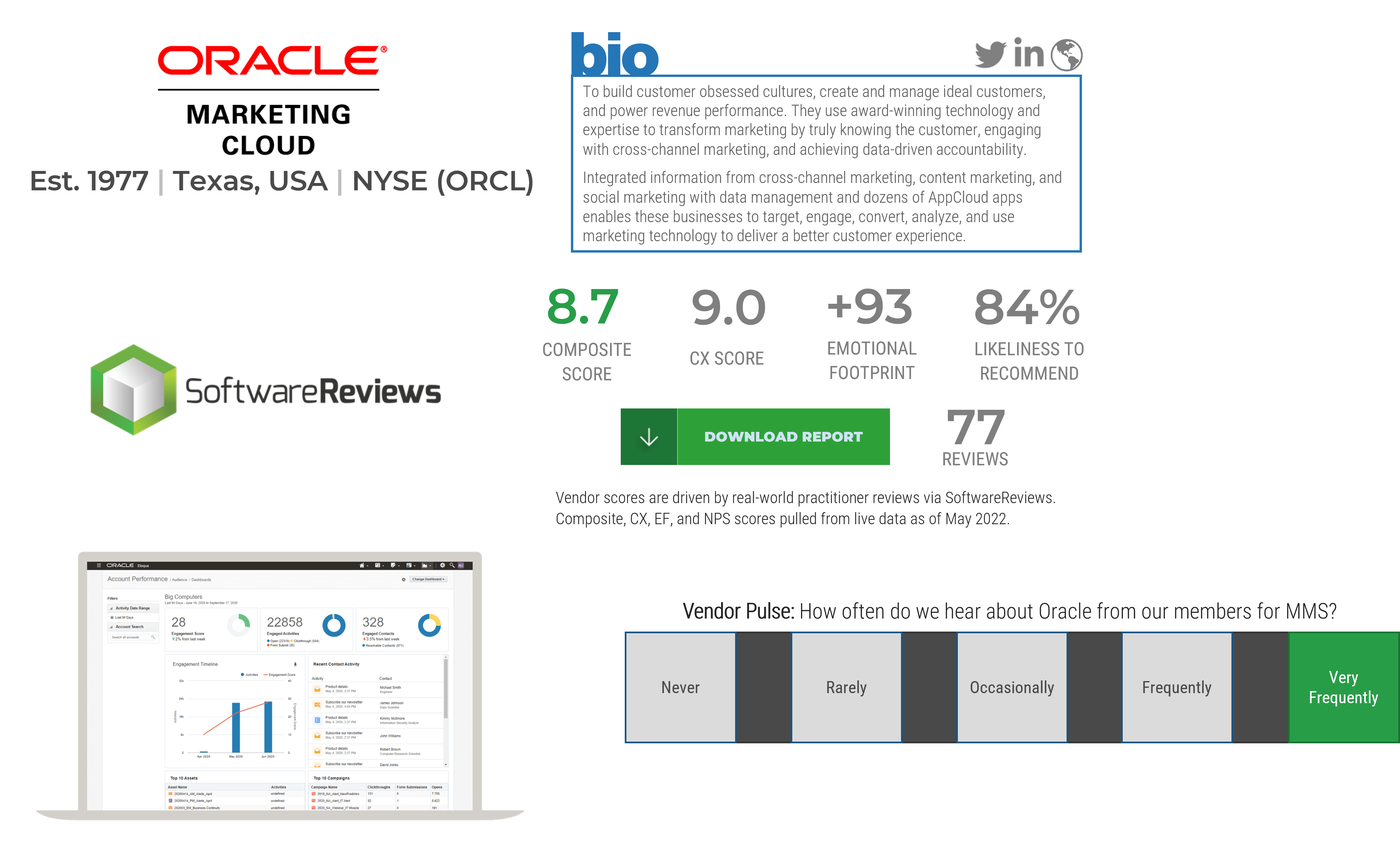

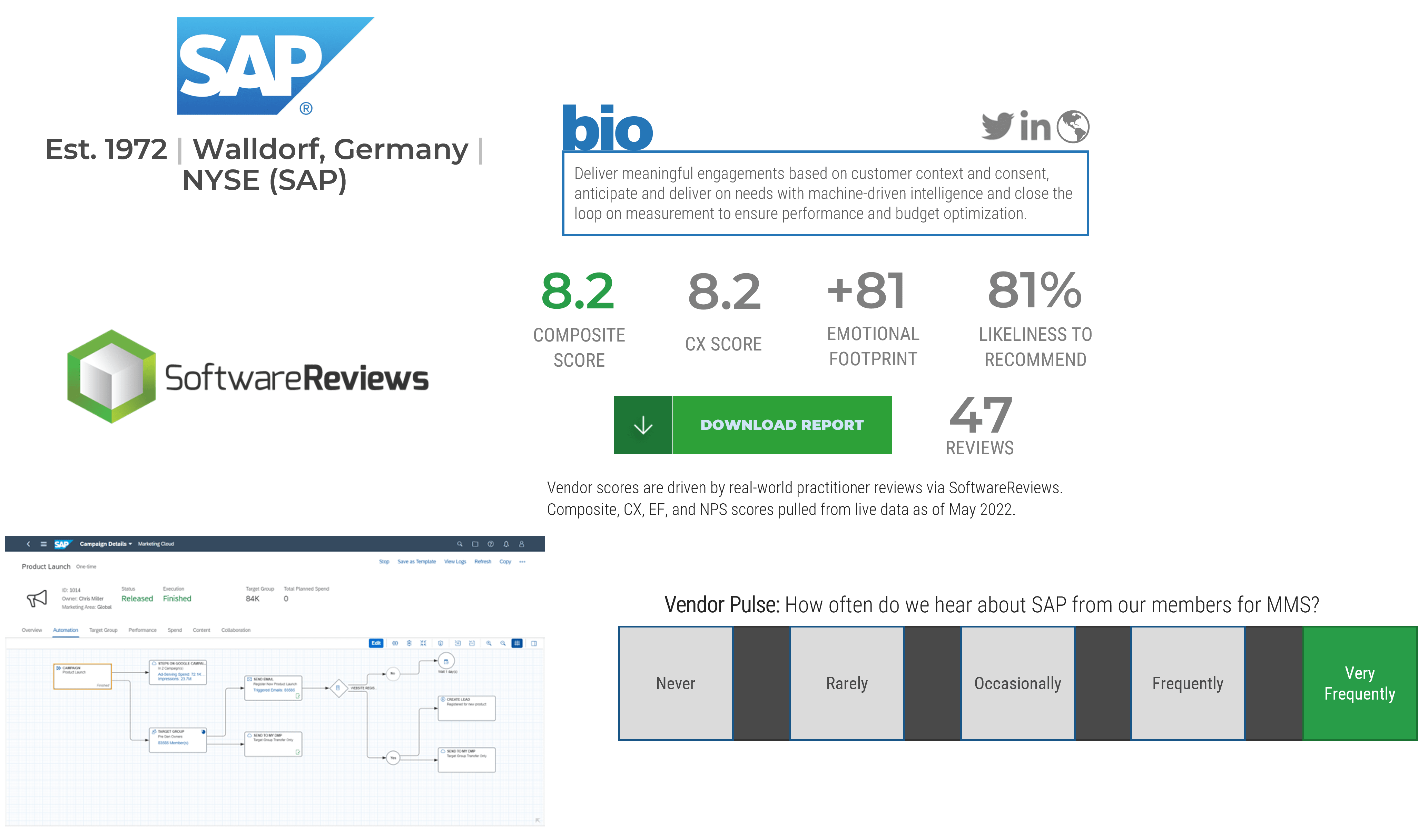

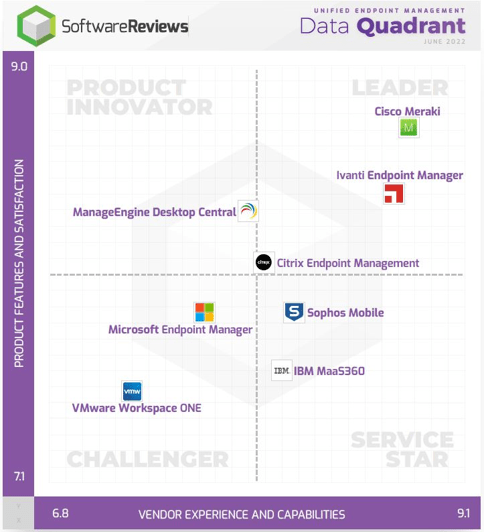

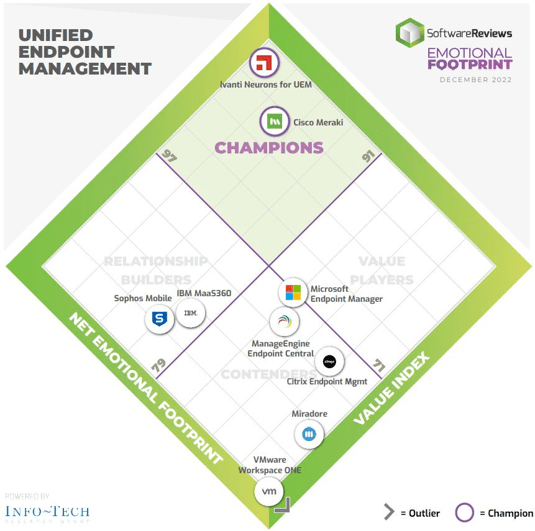

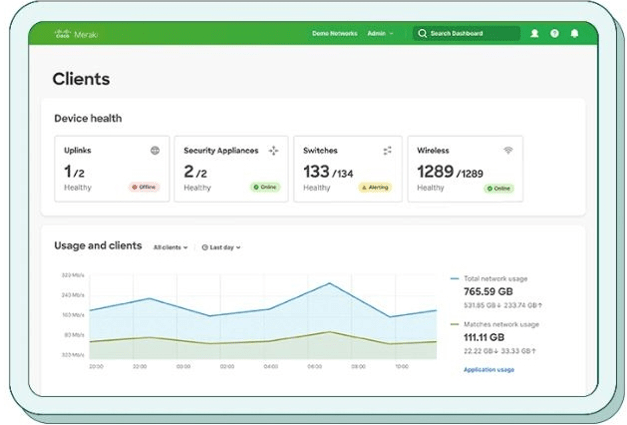

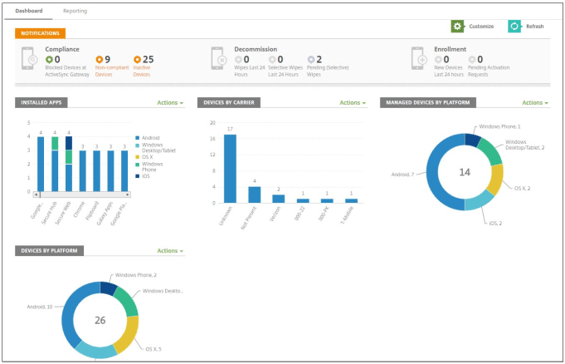

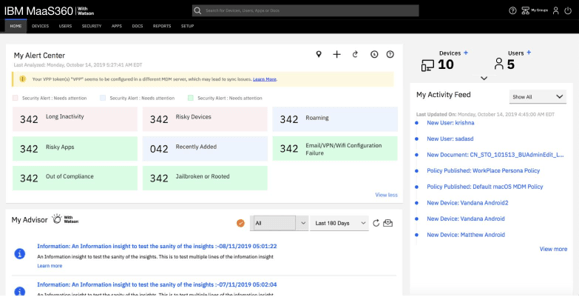

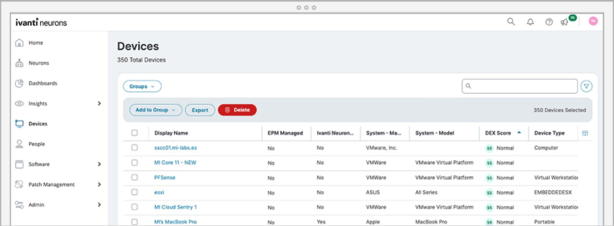

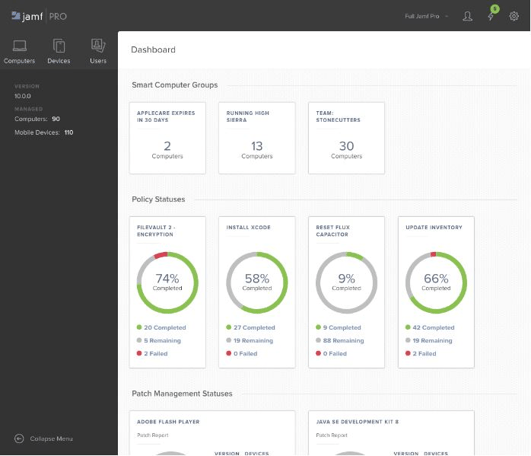

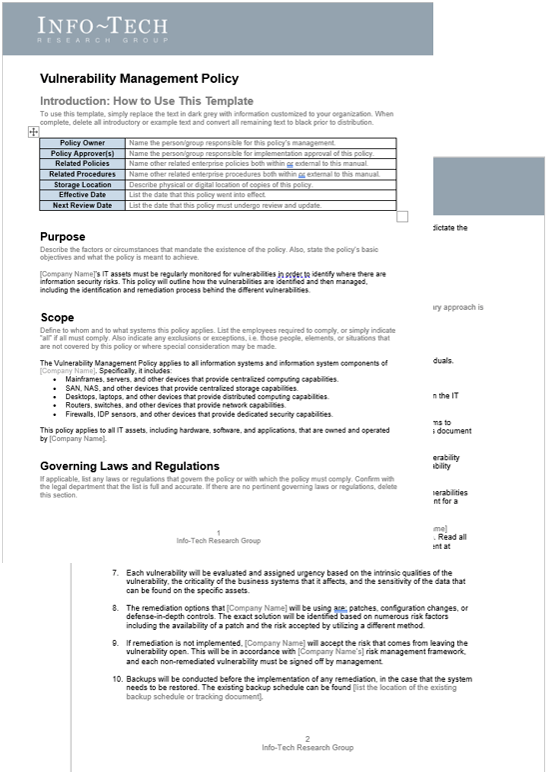

If you need to opt for a dedicated suite, these vendors should be strong contenders in your consideration set

Dedicated requirements management suites are a great (although pricey) way to have full control over recording, analysis, and hierarchical categorization of requirements. Consider some of the major vendors in the space if Word, Excel, and Visio aren’t suitable for you.

- Before you purchase a full-scale suite or module for requirements management, ensure that the following contenders have been evaluated for your requirements gathering technology strategy:

- Micro Focus Requirements Management

- IBM Rational RequisitePro

- IBM Rational DOORS

- Blueprint Requirements Management

- Jama Software

- Polarion Software (a Siemens Company)

A mid-sized consulting company overhauls its requirements gathering software to better understand stakeholder needs

CASE STUDY

Industry Consulting

Source Jama Software

Challenge

ArcherPoint is a leading Microsoft Partner responsible for providing business solutions to its clients. Its varied customer base now requires more sophisticated requirements gathering software.

Its process was centered around emailing Word documents, creating versions, and merging issues. ArcherPoint recognized the need to enhance effectiveness, efficiency, and accuracy of requirements gathering through a prescriptive set of elicitation procedures.

Solution

The IT department at ArcherPoint recognized that a strong requirements gathering process was essential to delivering value to stakeholders. It needed more scalable and flexible requirements gathering software to enhance requirements traceability. The company implemented SaaS solutions that included traceability and seamless integration features.

These features reduced the incidence of repetition, allowed for tracing of requirements relationships, and ultimately led to an exhaustive understanding of stakeholders’ needs.

Results

Projects are now vetted against an understanding of the business client’s needs, based on thorough requirements collection and analysis.

A deeper understanding of the business needs also allows ArcherPoint to better understand the roles and responsibilities of stakeholders. This allows for the implementation of structures and policies that make the requirements gathering process rigorous.

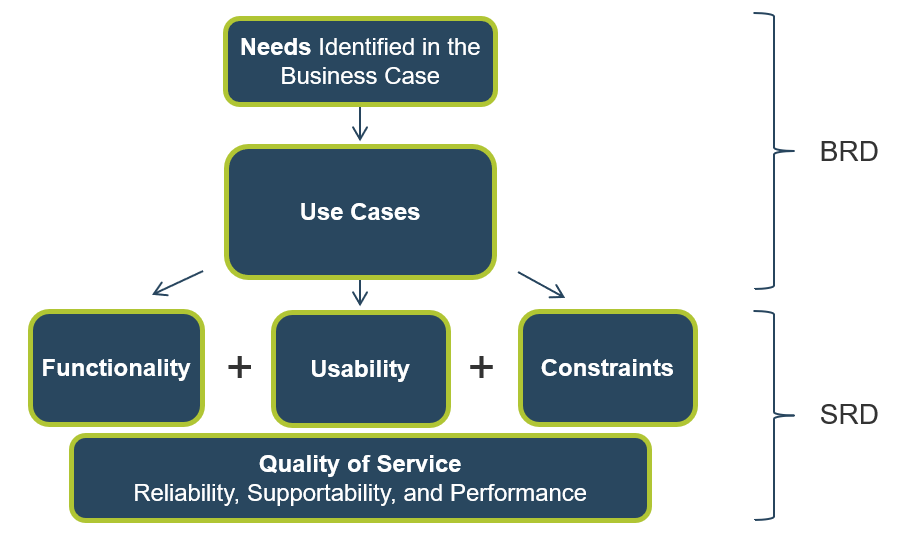

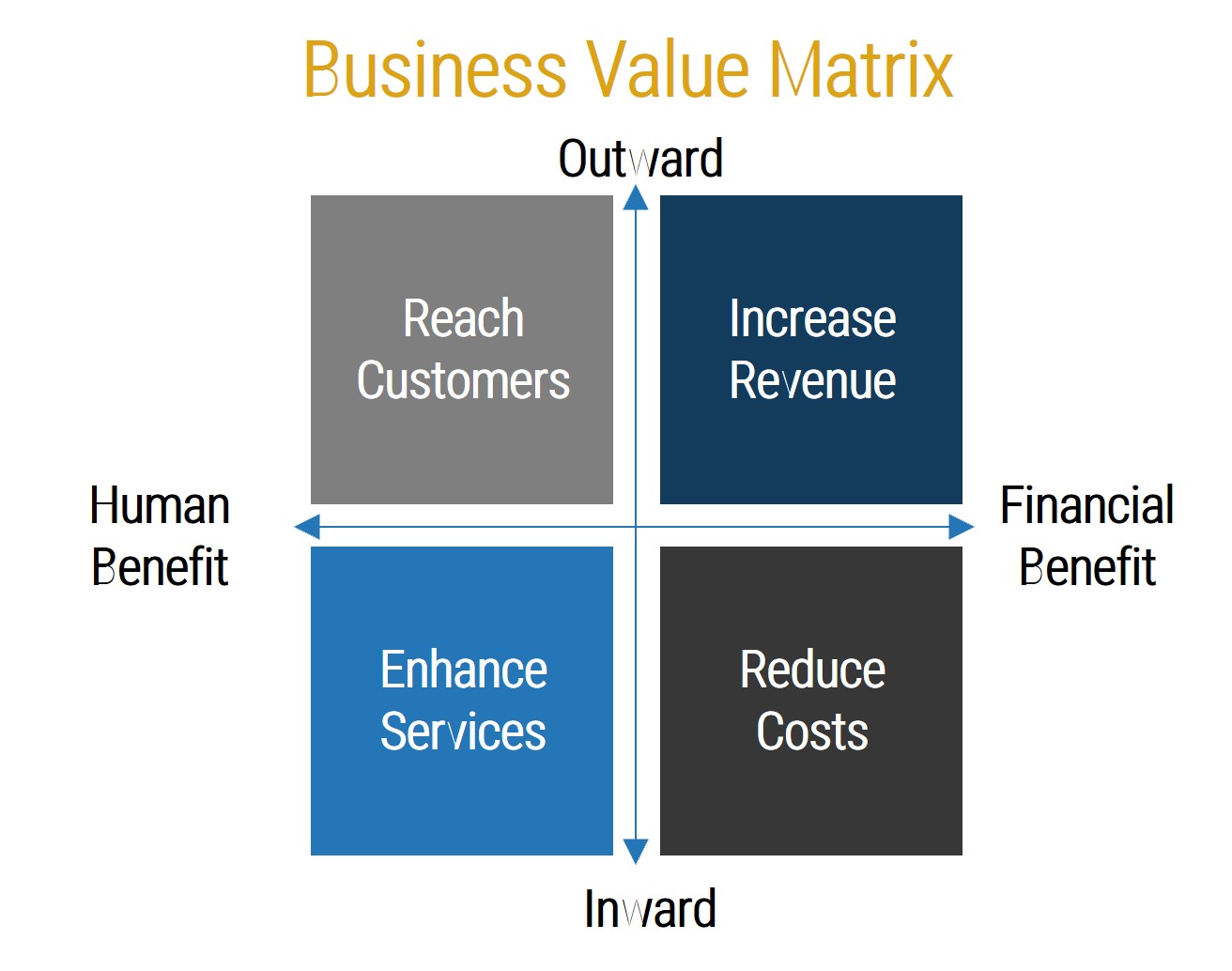

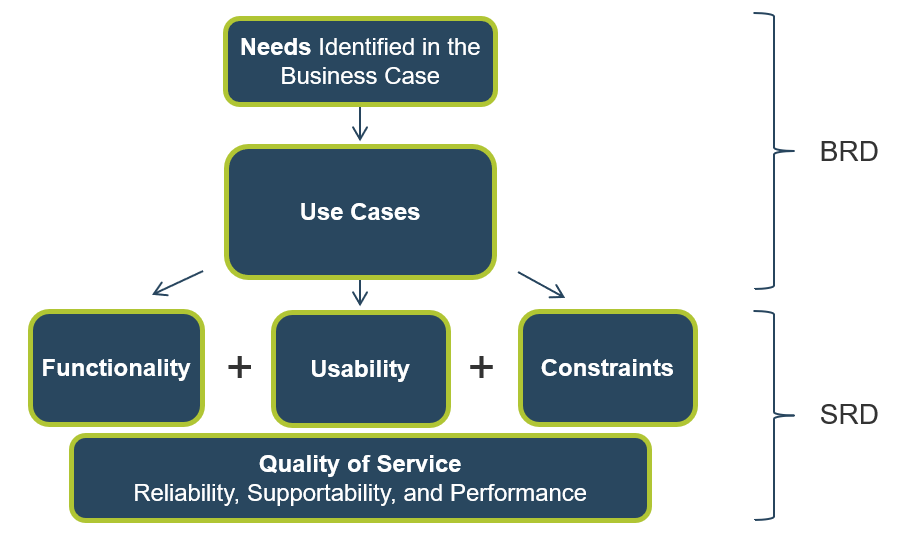

There are different types of requirements that need to be gathered throughout the elicitation phase

Business Requirements

- Higher-level statements of the goals, objectives, or needs of the enterprise.

- Describe the reasons why a project has been initiated, the objectives that the project will achieve, and the metrics that will be used to measure its success.

- Business requirements focus on the needs of the organization as a whole, not stakeholders within it.

- Business requirements provide the foundation on which all further requirements analysis is based:

- Ultimately, any detailed requirements must map to business requirements. If not, what business need does the detailed requirement fulfill?

Stakeholder Requirements

- Statements of the needs of a particular stakeholder or class of stakeholders, and how that stakeholder will interact with a solution.

- Stakeholder requirements serve as a bridge between business requirements and the various classes of solution requirements.

- When eliciting stakeholder requirements, other types of detailed requirements may be identified. Record these for future use, but keep the focus on capturing the stakeholders’ needs over detailing solution requirements.

Solution options or preferences are not requirements. Be sure to identify these quickly to avoid being forced into untimely discussions and sub-optimal solution decisions.

Requirement types – a quick overview (continued)

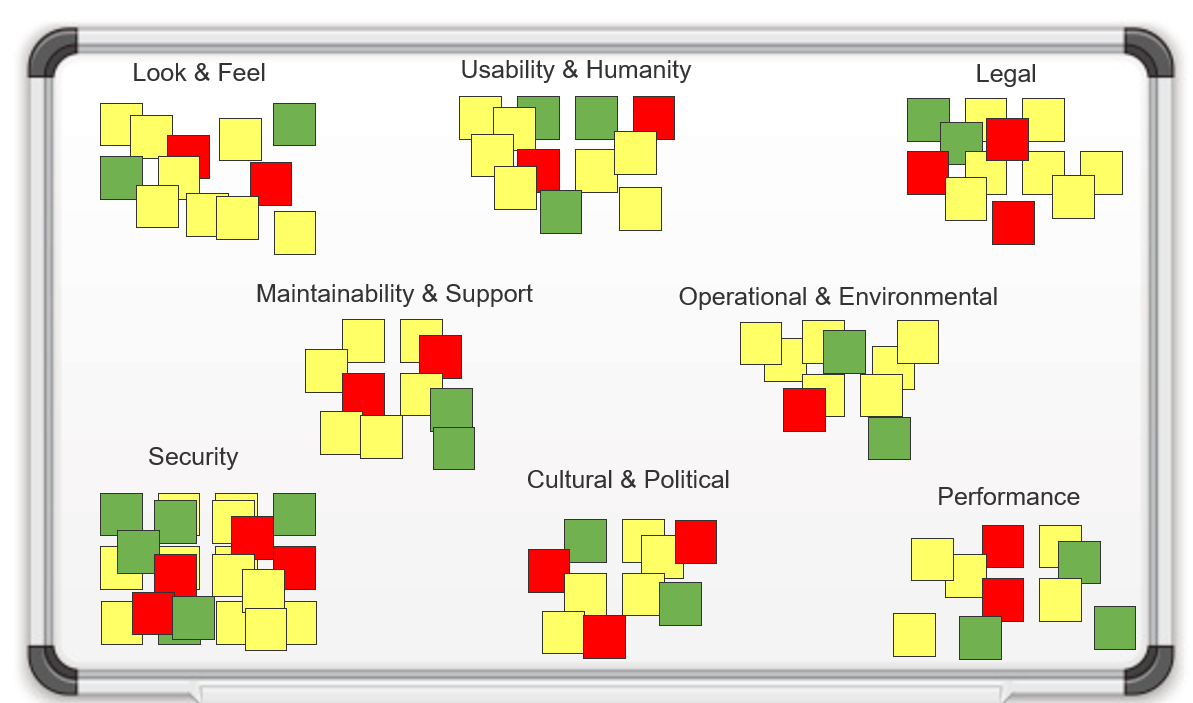

Solution Requirements: Describe the characteristics of a solution that meet business requirements and stakeholder requirements. They are frequently divided into sub-categories, particularly when the requirements describe a software solution:

Functional Requirements

- Describe the behavior and information that the solution will manage. They describe capabilities the system will be able to perform in terms of behaviors or operations, i.e. specific information technology application actions or responses.

- Functional requirements are not detailed solution specifications; rather, they are the basis from which specifications will be developed.

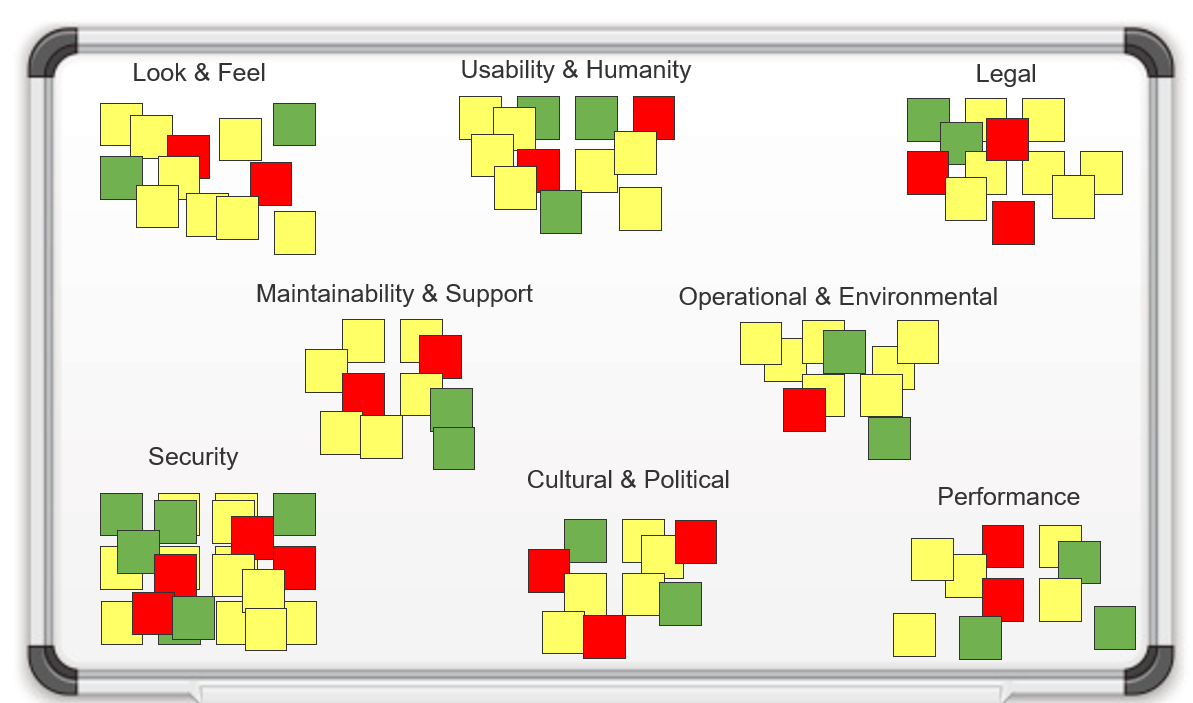

Non-Functional Requirements

- Capture conditions that do not directly relate to the behavior or functionality of the solution, but rather describe environmental conditions under which the solution must remain effective or qualities that the system must have. These can include requirements related to capacity, speed, security, availability, and the information architecture and presentation of the user interface.

- Non-functional requirements often represent constraints on the ultimate solution. They tend to be less negotiable than functional requirements.

- For IT solutions, technical requirements would fit in this category.

Info-Tech Insight

Remember that solution requirements are distinct from solution specifications; in time, specifications will be developed from the requirements. Don’t get ahead of the process.
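The rule that every detailed requirement must ultimately map to a business requirement lends itself to an automated check. The following is a minimal sketch under stated assumptions: the `Requirement` record, its `parent_id` link, and the ID scheme are hypothetical and are not drawn from any particular RM tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Requirement:
    req_id: str
    kind: str  # "business", "stakeholder", "functional", or "non-functional"
    text: str
    parent_id: Optional[str] = None  # the requirement this one traces up to


def untraceable(requirements):
    """Return IDs of requirements that do not trace up to a business requirement."""
    by_id = {r.req_id: r for r in requirements}
    orphans = []
    for r in requirements:
        current, seen = r, set()
        while current.kind != "business":
            broken = current.parent_id is None or current.parent_id not in by_id
            if broken or current.req_id in seen:  # missing link or a cycle
                orphans.append(r.req_id)
                break
            seen.add(current.req_id)
            current = by_id[current.parent_id]
    return orphans


if __name__ == "__main__":
    reqs = [
        Requirement("BR-1", "business", "Reduce invoice processing time"),
        Requirement("SR-1", "stakeholder", "AP clerks approve invoices in one screen", "BR-1"),
        Requirement("FR-1", "functional", "System displays invoice and PO side by side", "SR-1"),
        Requirement("FR-2", "functional", "System exports reports to PDF"),
    ]
    print(untraceable(reqs))
```

Any ID the check returns prompts the question posed earlier: what business need does this detailed requirement fulfill?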

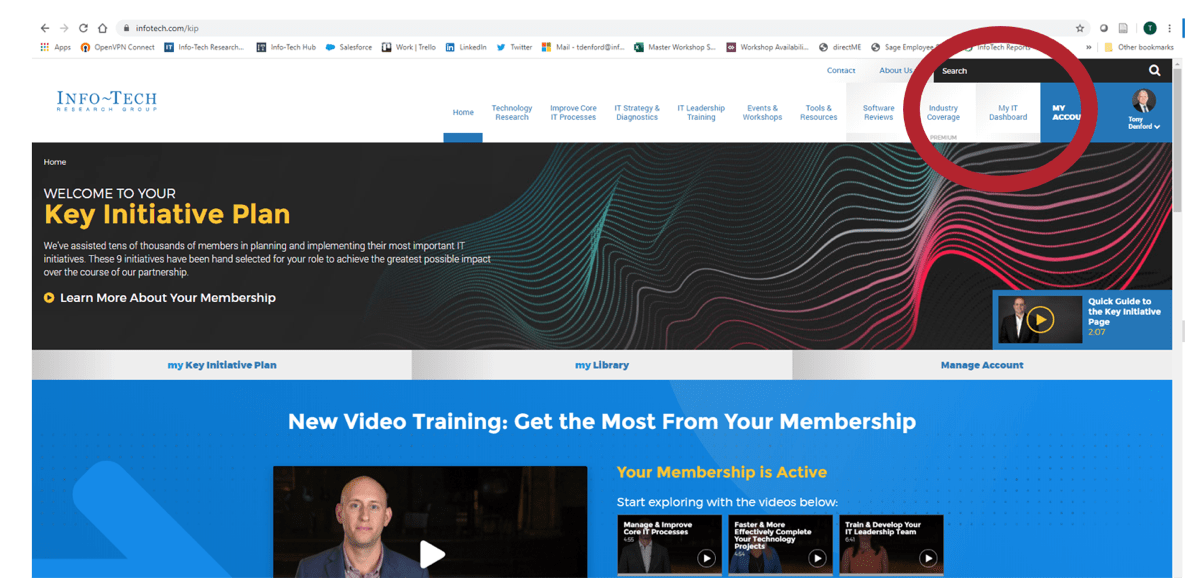

If you want additional support, have our analysts guide you through this phase as part of an Info-Tech workshop

Book a workshop with our Info-Tech analysts:

- To accelerate this project, engage your IT team in an Info-Tech workshop with an Info-Tech analyst team.

- Info-Tech analysts will join you and your team onsite at your location or welcome you to Info-Tech’s historic Toronto office to participate in an innovative onsite workshop.

- Contact your account manager (www.infotech.com/account), or email Workshops@InfoTech.com for more information.

The following are sample activities that will be conducted by Info-Tech analysts with your team:

1.2.1 Conduct current and target state analysis

An analyst will facilitate a discussion to assess the maturity of your requirements gathering process and identify any gaps in the current state.

1.2.2 Establish requirements gathering performance metrics

Speak to an analyst to discuss and determine key metrics for measuring the effectiveness of your requirements gathering processes.

1.2.4 Identify your requirements gathering business process model

An analyst will facilitate a discussion to determine the ideal target business process flow for your requirements gathering.

1.2.3; 1.2.5 Define control levels and match control points

An analyst will assist you with determining the appropriate requirements gathering approach for different project levels. The discussion will highlight key control points and define stakeholders who will be involved in each one.

1.2.6; 1.2.7 Conduct initial scoping and identify key stakeholders

An analyst will facilitate a discussion to highlight the scope of the requirements gathering optimization project as well as identify and analyze key stakeholders in the process.

Phase 2: Define the Elicitation Process

Phase 2 outline

Call 1-888-670-8889 or email GuidedImplementations@InfoTech.com for more information.

Complete these steps on your own, or call us to complete a guided implementation. A guided implementation is a series of 2-3 advisory calls that help you execute each phase of a project. They are included in most advisory memberships.

Guided Implementation 2: Define the Elicitation Process

Proposed Time to Completion: 2 weeks

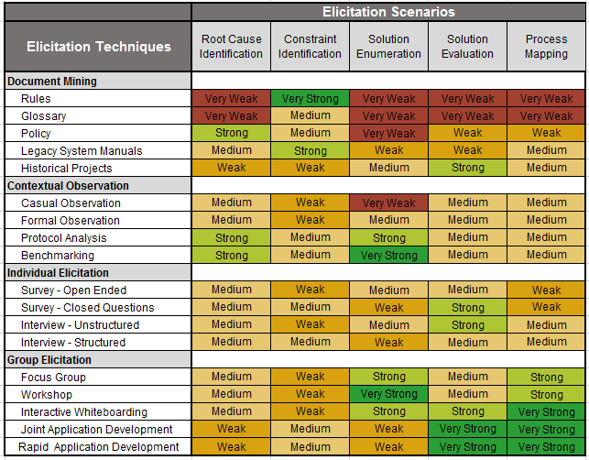

Step 2.1: Determine Elicitation Techniques

Start with an analyst kick-off call:

- Understand and assess elicitation techniques.

- Determine best fit to projects and business environment.

Then complete these activities…

- Understand different elicitation techniques.

- Record the approved elicitation techniques.

Step 2.2: Structure Elicitation Output

Review findings with analyst:

- Review options for structuring the output of requirements elicitation.

- Build the requirements gathering operating model.

Then complete these activities…

- Build use case model.

- Use table-top testing to build use case models.

- Build the operating model.

With these tools & templates:

- Business Requirements Document Template

- Scrum Documentation Template

Phase 2 Results & Insights:

- Best practices for conducting and structuring elicitation.

Step 2.1: Determine Elicitation Techniques

Phase 1

1.1 Understand the Benefits of Requirements Optimization

1.2 Determine Your Target State for Requirements Gathering

Phase 2

2.1 Determine Elicitation Techniques

2.2 Structure Elicitation Output

Phase 3

3.1 Create Analysis Framework

3.2 Validate Business Requirements

Phase 4

4.1 Create Control Processes for Requirements Changes

4.2 Build Requirements Governance and Communication Plan

This step will walk you through the following activities:

- Understand requirements elicitation techniques.

This step involves the following participants:

- BAs

- Business stakeholders

Outcomes of this step

- Select and record best-fit elicitation techniques.

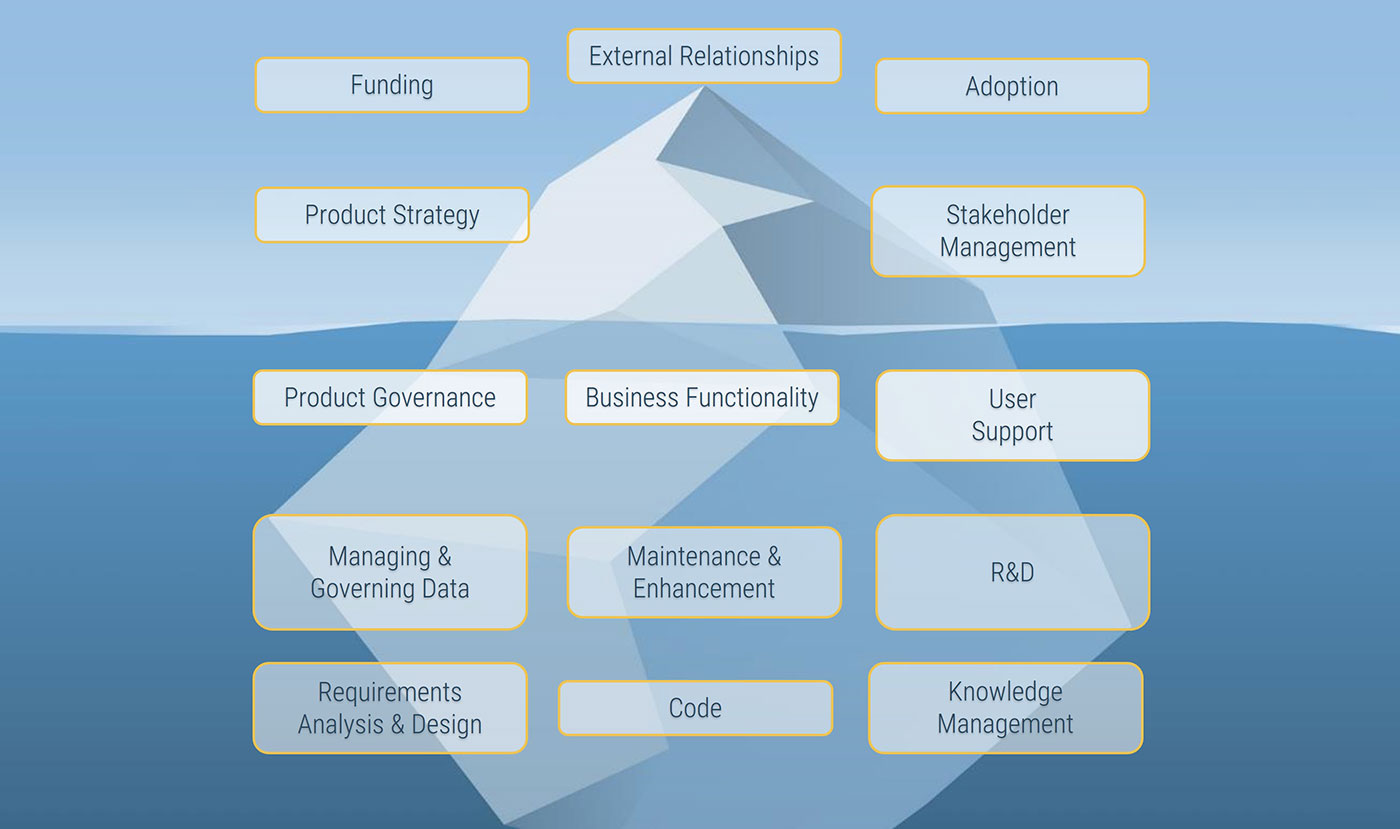

Eliciting requirements is all about effectively creating the initial shortlist of needs the business has for an application

The elicitation phase is where the BAs actually meet with project stakeholders and uncover the requirements for the application. Major tasks within this phase include stakeholder identification, selecting elicitation techniques, and conducting the elicitation sessions. This phase involves the most information gathering and therefore requires a significant amount of time to be done properly.

Good requirements elicitation leverages a strong elicitation framework and executes the right elicitation techniques

A mediocre requirements practitioner takes an order-taker approach to elicitation: they elicit requirements by showing up to a meeting with the stakeholder and asking, “What do you want?” This approach frequently results in gaps in requirements, as most stakeholders cannot produce an accurate inventory of their needs off the cuff.

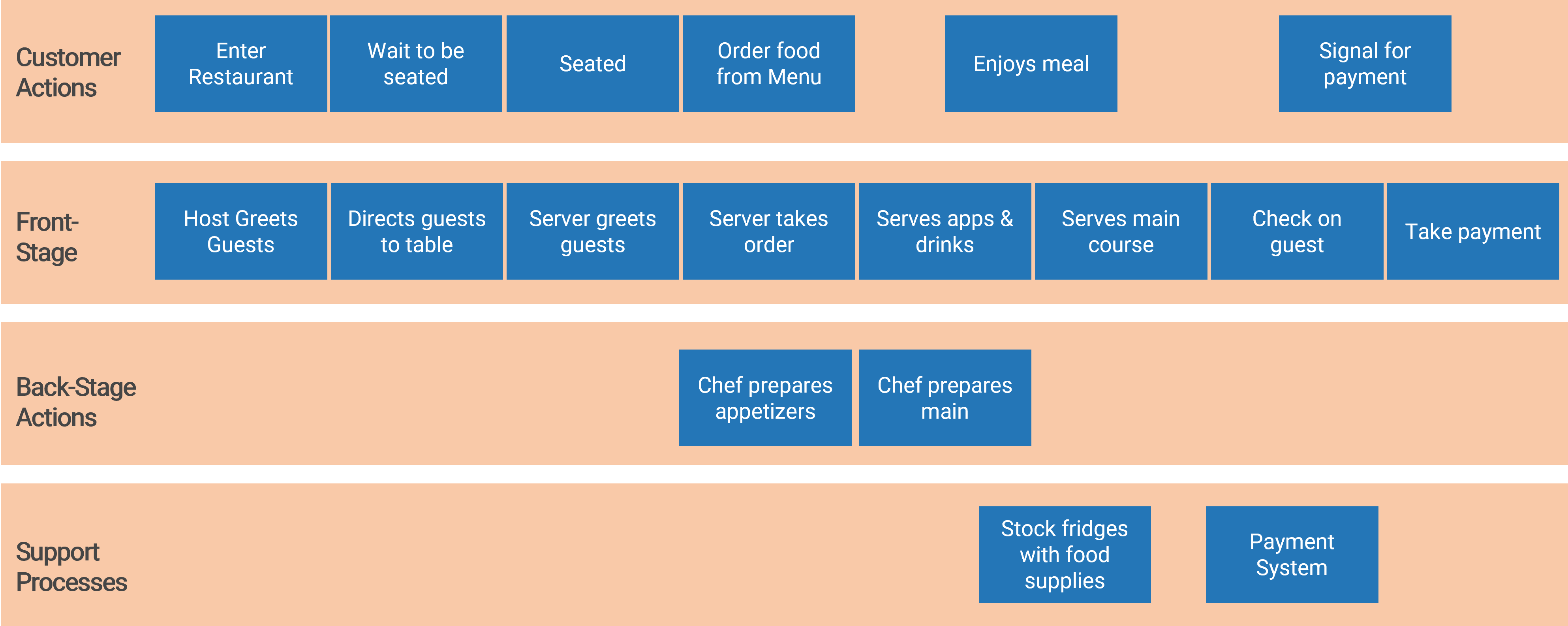

A strong requirements practitioner first decides on an elicitation framework – a mechanism to anchor the discussion about the business requirements. Info-Tech recommends using business process modelling (BPM) as the most effective framework. The BA can now work through several key questions:

- What processes will this application need to support?

- What does the current process look like?

- How could we improve the process?

- In a target state process map, what are the key functional requirements necessary to support this?

The second key element of elicitation is using the right blend of elicitation techniques: the tactical approaches used to actually collect the requirements. Interviews are the most popular means, but focus groups, JAD sessions, and observational techniques can often yield better results – faster. This section will touch on BPM/BPI as an elicitation framework, then do a deep dive on different elicitation techniques.

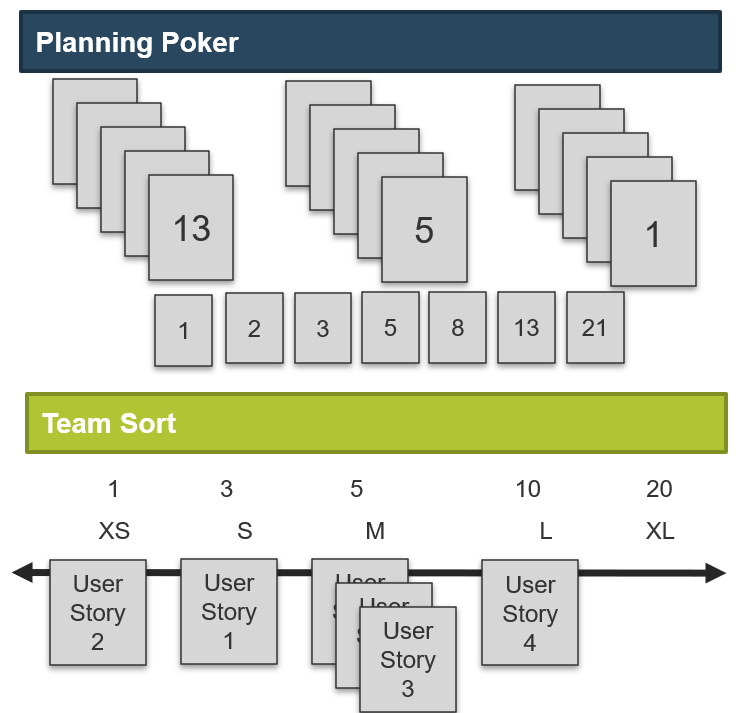

The elicitation phase of most enterprise application projects follows a similar four-step approach

Prepare

Stakeholders must be identified, and elicitation frameworks and techniques selected. Each technique requires different preparation. For example, brainstorming requires ground rules; focus groups require invitations, specific focus areas, and meeting rooms (perhaps even cameras). Look at each of these techniques and discuss how you would prepare.